OneAIFW (aifw) is an open-source tool developed by Funstory.ai that addresses data privacy issues in Large Language Model (LLM) applications. Today, users often need to send text containing personally identifiable information (PII) or trade secrets to cloud models (e.g., ChatGPT or Claude) for processing, which poses a significant risk of privacy leakage. OneAIFW approaches the problem from a first principle: data must be secured before it leaves the user-controlled environment. It acts as an intermediate "firewall" layer that intercepts requests to the LLM locally (or on a controlled server).

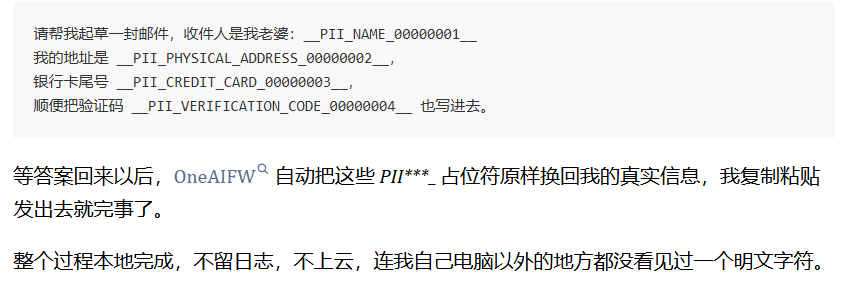

The core working mechanism of the tool is intuitive: "Mask" and "Restore". Before a request is sent to the external LLM, OneAIFW automatically identifies sensitive information in the text (such as names, phone numbers, and email addresses) and replaces it with anonymous placeholders; when the LLM returns its result, OneAIFW restores these placeholders to the original data. The process is transparent to the LLM service provider, who sees only the desensitized data, so leakage of sensitive data is cut off at the source. The core engine is written in Zig and Rust and supports WebAssembly (WASM), which means it can run efficiently on the server side as well as offline in the user's browser, providing on-device, zero-trust privacy protection.
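The mask-and-restore round trip can be illustrated with a minimal Python sketch. It is not OneAIFW's implementation: the `PATTERNS` table, the two regular expressions, and the `mask`/`restore` functions below are simplified assumptions that only show how numbered placeholders and an in-memory mapping fit together.

```python
import re

# Hypothetical patterns for illustration only; a real detector is far richer.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE_NUMBER": re.compile(r"\b\d{11}\b"),
}


def mask(text):
    """Replace each match with a numbered placeholder and record the mapping."""
    mapping = {}
    for label, pattern in PATTERNS.items():
        counter = 0

        def substitute(match):
            nonlocal counter
            counter += 1
            placeholder = f"<{label}_{counter}>"
            mapping[placeholder] = match.group(0)
            return placeholder

        text = pattern.sub(substitute, text)
    return text, mapping


def restore(text, mapping):
    """Put the original values back wherever a known placeholder appears."""
    for placeholder, original in mapping.items():
        text = text.replace(placeholder, original)
    return text


if __name__ == "__main__":
    masked, mapping = mask("Mail alice@example.com or call 13800138000")
    print(masked)
    print(restore("Sent a note to <EMAIL_1>", mapping))
```

The mapping never leaves the local process, which is the property the firewall relies on: only the masked string is sent out.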

Function List

- Two-way Privacy Pipeline: Provides two core interfaces, mask and restore. Sensitive entities are replaced with generic labels (e.g. <PERSON>) before sending, and the labels are restored to their original content when the response is received.

- Highly accurate PII recognition: Built-in hybrid recognition engine that combines high-performance regular expressions (based on Rust regex) and Named Entity Recognition (NER) models to accurately capture sensitive information such as names of people, places, and contact information.

- Multi-language and cross-platform support:

- Core: The core library is written in Zig and Rust, and is extremely lightweight and high-performance.

- Python Bindings: Provides aifw-py, which is compatible with the HuggingFace transformers ecosystem and is suitable for back-end service integration.

- JavaScript/WASM Binding: Provides aifw-js which, combined with transformers.js, supports running the complete desensitization process directly in the browser, without the need for a back-end server.

- Flexible deployment: Support for running as a standalone HTTP service (based on FastAPI/Presidio), integrating into existing code as a library, or even working as a browser plugin.

- Customized Configuration: Supports customization of blocking rules, ignore lists, and the underlying detection model used via a YAML configuration file.

- Zero-trace processing: All processing is done in memory and no user data is stored persistently, ensuring "burn-after-reading".
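The hybrid recognition mentioned in the feature list can be pictured as two detectors whose results are merged. The sketch below is only an illustration under stated assumptions: `stub_ner_detector` is a fixed-list lookup standing in for a real NER model, and the "prefer the longer span on overlap" rule in `merge_spans` is one plausible policy, not necessarily the one OneAIFW uses.

```python
import re
from typing import NamedTuple


class Span(NamedTuple):
    start: int
    end: int
    label: str


def regex_detector(text):
    """Pattern-based detection for well-structured entities such as emails."""
    email = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
    return [Span(m.start(), m.end(), "EMAIL") for m in email.finditer(text)]


def stub_ner_detector(text):
    """Stand-in for a real NER model: looks up a fixed list of names."""
    known_names = ["Alice Smith"]  # hypothetical; a model would infer these
    spans = []
    for name in known_names:
        for m in re.finditer(re.escape(name), text):
            spans.append(Span(m.start(), m.end(), "PERSON"))
    return spans


def merge_spans(*span_lists):
    """Combine detector outputs, keeping the longer span when two overlap."""
    candidates = sorted(
        (s for spans in span_lists for s in spans),
        key=lambda s: (-(s.end - s.start), s.start),
    )
    kept = []
    for span in candidates:
        if all(span.end <= k.start or span.start >= k.end for k in kept):
            kept.append(span)
    return sorted(kept)


if __name__ == "__main__":
    text = "Alice Smith wrote from alice@example.com"
    for span in merge_spans(regex_detector(text), stub_ner_detector(text)):
        print(span.label, text[span.start:span.end])
```

Regular expressions handle entities with a rigid shape, while the model-based detector covers free-form entities such as names; merging the two keeps each detector simple.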

Usage Guide

OneAIFW is designed to be "lightweight" and "portable". To help you use the tool in production or local development, this section walks through the complete process, from environment preparation, compilation, and installation to actual code invocation.

I. Environment preparation and installation

Since the core of OneAIFW is written in the Zig language and integrates Rust components, you will need to prepare the appropriate compilation toolchain.

1. Installation of the basic tool chain

Before you begin, make sure you have the following tools installed on your system:

- Zig Compiler: version 0.15.2 is required. You can download it from the Zig website and add it to the system PATH environment variable. Verify with the command: zig version

- Rust Toolchain: the stable version is recommended. If you need to compile the WASM version, you also need to add a target.

- Install Rust:

curl --proto '=https' --tlsv1.2 -sSf https://sh.rustup.rs | sh

- Add WASM support:

rustup target add wasm32-unknown-unknown

- Node.js & pnpm (required for JS development only): Node.js 18+ and pnpm 9+.

- Python (required for Python development only): Python 3.10+.
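Before building, it can help to confirm that the tools listed above are actually on PATH. The following is a small convenience sketch, not part of OneAIFW; the `REQUIRED` and `OPTIONAL` groupings and the helper names are assumptions made for illustration, and the script does not check version numbers.

```python
import shutil

# Tools from the list above. The optional ones are only needed for
# JS or Python development.
REQUIRED = ["zig", "cargo"]
OPTIONAL = ["node", "pnpm", "python3"]


def find_tools(names):
    """Map each tool name to its resolved path, or None if it is not on PATH."""
    return {name: shutil.which(name) for name in names}


def missing_tools(names):
    """Return the tool names that could not be found on PATH."""
    return [name for name, path in find_tools(names).items() if path is None]


if __name__ == "__main__":
    for name, path in find_tools(REQUIRED + OPTIONAL).items():
        print(f"{name}: {path or 'not found'}")
    if missing_tools(REQUIRED):
        print("Install the missing required tools before running zig build.")
```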

2. Obtaining source code and compiling core libraries

Start by cloning the repository from GitHub:

git clone https://github.com/funstory-ai/aifw.git

cd aifw

Use Zig to build the core libraries (both native and WASM artifacts):

# Run from the project root

zig build

# Optionally run the unit tests to confirm the environment works

zig build -Doptimize=Debug test

This step generates the underlying aifw static and dynamic libraries, which the higher-level language bindings call.

II. Use in the Python environment (back-end integration)

If you are a Python developer looking to integrate a privacy firewall in the backend of your AI application, follow these steps.

1. Installation of dependencies

Go to the Python bindings directory and install the dependencies:

cd libs/aifw-py

# Creating a virtual environment is recommended

python -m venv .venv

source .venv/bin/activate

pip install -e .

2. Examples of core code calls

The following code shows how to load a firewall and "desensitize" and "restore" a text containing sensitive information.

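from aifw import AIFirewall

# Initialize the firewall and load the default configuration

fw = AIFirewall()

# Simulated user input containing sensitive text

user_prompt = "Please help me contact Zhang San. His phone number is 13800138000, and we need to discuss the secrets of ProjectX."

# Step 1: Mask

# The firewall automatically identifies and replaces PII, and also returns a session ID used for later restoration

masked_text, session_id = fw.mask(user_prompt)

print(f"Text sent to the LLM: {masked_text}")

# Example output: "Please help me contact <PERSON_1>. His phone number is <PHONE_NUMBER_1>, and we need to discuss the secrets of <ORG_1>."

# Note: at this point the real data has never left local memory

# ... simulate sending masked_text to the LLM and receiving a reply ...

# Suppose the LLM replies with content that contains the placeholders:

llm_response = "OK, I have noted <PERSON_1>'s phone number <PHONE_NUMBER_1>, and matters concerning <ORG_1> will be kept confidential."

# Step 2: Restore

# Use the earlier session_id to restore the placeholders to the real information

final_response = fw.restore(llm_response, session_id)

print(f"Text shown to the user: {final_response}")

# Output: "OK, I have noted Zhang San's phone number 13800138000, and matters concerning ProjectX will be kept confidential."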
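In a back-end service, the mask, call, and restore steps are usually wrapped in one helper so that no code path can send unmasked text by accident. The sketch below assumes only the two methods used in the example above (`mask` returning a text and a session ID, and `restore`); `protected_completion`, the `Firewall` protocol, and `FakeFirewall` are hypothetical names introduced here so the sketch runs without aifw installed.

```python
from typing import Callable, Protocol, Tuple


class Firewall(Protocol):
    """The two methods used in the example above."""

    def mask(self, text: str) -> Tuple[str, str]: ...

    def restore(self, text: str, session_id: str) -> str: ...


def protected_completion(fw: Firewall, call_llm: Callable[[str], str], prompt: str) -> str:
    """Mask the prompt, call the LLM with masked text only, then restore the reply."""
    masked_text, session_id = fw.mask(prompt)
    reply = call_llm(masked_text)
    return fw.restore(reply, session_id)


class FakeFirewall:
    """Toy stand-in for illustration; it only knows one hard-coded name."""

    def __init__(self):
        self._sessions = {}

    def mask(self, text):
        session_id = str(len(self._sessions) + 1)
        self._sessions[session_id] = {"<PERSON_1>": "Zhang San"}
        return text.replace("Zhang San", "<PERSON_1>"), session_id

    def restore(self, text, session_id):
        for placeholder, original in self._sessions[session_id].items():
            text = text.replace(placeholder, original)
        return text


if __name__ == "__main__":
    def echo_llm(masked_prompt):
        # Stands in for a network call to a cloud model.
        return f"Noted: {masked_prompt}"

    print(protected_completion(FakeFirewall(), echo_llm, "Call Zhang San"))
```

Passing the LLM client in as a plain callable keeps the wrapper independent of any particular provider SDK.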

III. Use in JavaScript/browser environments (front-end integration)

A key strength of OneAIFW is that it can run directly in the browser, desensitizing data without sending it to any server.

1. Compiling the JS SDK

# Install dependencies

pnpm -w install

# Build the JS library (automatically handles the WASM and model files)

pnpm -w --filter @oneaifw/aifw-js build

2. Front-end call example

In your web project (e.g. React or Vue), import aifw-js:

import { AIFirewall } from '@oneaifw/aifw-js';

async function protectData() {

  // Initialize the firewall (automatically loads the WASM module and a small browser-side NER model)

  const firewall = await AIFirewall.create();

  const text = "My email is alice@example.com";

  // 1. Mask

  const result = await firewall.mask(text);

  console.log(result.masked); // Output: "My email is <EMAIL_1>"

  // Simulate the AI processing step...

  const aiOutput = `An email has been sent to <EMAIL_1>`;

  // 2. Restore

  const final = await firewall.restore(aiOutput, result.session);

  console.log(final); // Output: "An email has been sent to alice@example.com"

}

IV. Run the Web Demo

An intuitive web demo application is provided so you can run it directly:

cd apps/webapp

pnpm dev

Open the local address printed in the console (usually http://localhost:5173) in a browser. In this interface, you can enter any text containing sensitive information in the left input box and click Process to see:

- PII analysis results: Which entities are identified.

- Masked Prompt: What the actual text sent out looks like.

- Restored Response: The final restored look.

V. Advanced configuration

You can adjust the behavior by modifying aifw.yaml or setting environment variables:

- AIFW_MODELS_DIR: Specifies the NER model storage path.

- AIFW_API_KEY_FILE: Configures the API key if you use the built-in LLM forwarding service.

- Custom Regex: In the configuration section of the source code, you can add regular expressions to recognize specific confidential formats (e.g., a company's internal format for project designators).
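Before adding a custom pattern to the configuration, it is worth prototyping the regular expression on sample text. The snippet below is a sketch for that purpose only: the designator format `PRJ-2024-0042` is an invented example, and how a pattern is registered with OneAIFW is not shown because the configuration schema is not described here.

```python
import re

# Hypothetical internal project designator, e.g. "PRJ-2024-0042".
PROJECT_CODE = re.compile(r"\bPRJ-\d{4}-\d{4}\b")


def find_project_codes(text):
    """Return every substring that matches the prototype pattern."""
    return PROJECT_CODE.findall(text)


if __name__ == "__main__":
    sample = "Budget for PRJ-2024-0042 and PRJ-1999-0001, not PRJ-12."
    print(find_project_codes(sample))
```

The word boundaries keep the pattern from matching inside longer tokens, which reduces false positives when the same prefix appears in unrelated identifiers.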

By following these steps, you can integrate OneAIFW into any modern AI application stack, enabling advanced privacy protection with "data available but not visible".

Application Scenarios

- In-house Knowledge Base Q&A

When using a knowledge base assistant built on a public cloud LLM, employees' questions often involve customer lists or financial data. With OneAIFW deployed, sensitive entities in those questions are automatically replaced before being sent to models such as GPT-4, so core corporate data does not leave the intranet and the model's answer logic is unaffected.

- GDPR/CCPA Compliance Development

AI applications for the US and European markets must comply with strict data privacy regulations. Developers can use OneAIFW to automatically filter PII (personally identifiable information) from user inputs, eliminating the need to hand-write complex cleansing rules for each input and helping them meet legal compliance requirements more quickly.

- Browser-side Privacy Plugin

Develop browser extensions that, when the user is on the web version of ChatGPT or Claude, automatically intercept the content of the input box, desensitize it locally, and fill it back into the page. This way, users can protect their personal privacy even when using the official web version of the service.

- Medical and Legal Documentation Assistance

Medical records and legal contracts contain a large number of extremely sensitive names and ID numbers. With OneAIFW, AI can assist in polishing or summarizing such documents while the structure and context of the document stay intact, without exposing patient or customer privacy to the model service provider.

QA

- Does OneAIFW slow down the AI response?

OneAIFW is highly optimized: the core layer is written in Zig/Rust and processes text extremely quickly (on the order of microseconds). The main latency usually comes from NER model inference, but with a lightweight model (such as neurobert-mini) this delay is typically on the order of milliseconds, which is almost negligible compared with the network request and generation time of the LLM.

- What types of sensitive information recognition does it support?

Common PII types are supported by default, including names of people, places, and organizations (identified by NER models), as well as email addresses, phone numbers, ID numbers, and credit card numbers (identified by regex). Users can also add custom regular expressions to extend the recognized types.

- What if the LLM response does not contain placeholders?

If the content generated by the LLM is missing placeholders (e.g. <PERSON_1>), the restore step cannot recover the corresponding original information. However, OneAIFW's prompt design guides the LLM to keep the placeholders intact, and in most Q&A and summarization scenarios the LLM preserves these tokens well.

- Will the data be stored on OneAIFW's servers?

No. OneAIFW is purely a tool library or local service; Funstory.ai does not operate a centralized interception server. All data processing is done in the memory of your deployed server or in the memory of the user's browser, and the data is destroyed when the process ends.
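For the missing-placeholder case discussed above, an application can check which placeholders were sent but never came back before it shows the restored text. The sketch below is an assumption-laden helper, not a OneAIFW API: it relies only on the `<LABEL_N>` placeholder shape used in the examples, and the `missing_placeholders` name is made up for illustration.

```python
import re

# Matches placeholders shaped like <PERSON_1> or <PHONE_NUMBER_2>.
PLACEHOLDER = re.compile(r"<[A-Z_]+_\d+>")


def missing_placeholders(masked_prompt, llm_response):
    """Placeholders present in the prompt but absent from the response, in prompt order."""
    returned = set(PLACEHOLDER.findall(llm_response))
    sent = PLACEHOLDER.findall(masked_prompt)
    return list(dict.fromkeys(p for p in sent if p not in returned))


if __name__ == "__main__":
    prompt = "Contact <PERSON_1> at <PHONE_NUMBER_1>"
    response = "I will contact <PERSON_1>."
    print(missing_placeholders(prompt, response))
```

A missing placeholder is not always an error, since a summary may legitimately omit a detail, so a result like this is better treated as a signal to log or review than as a hard failure.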