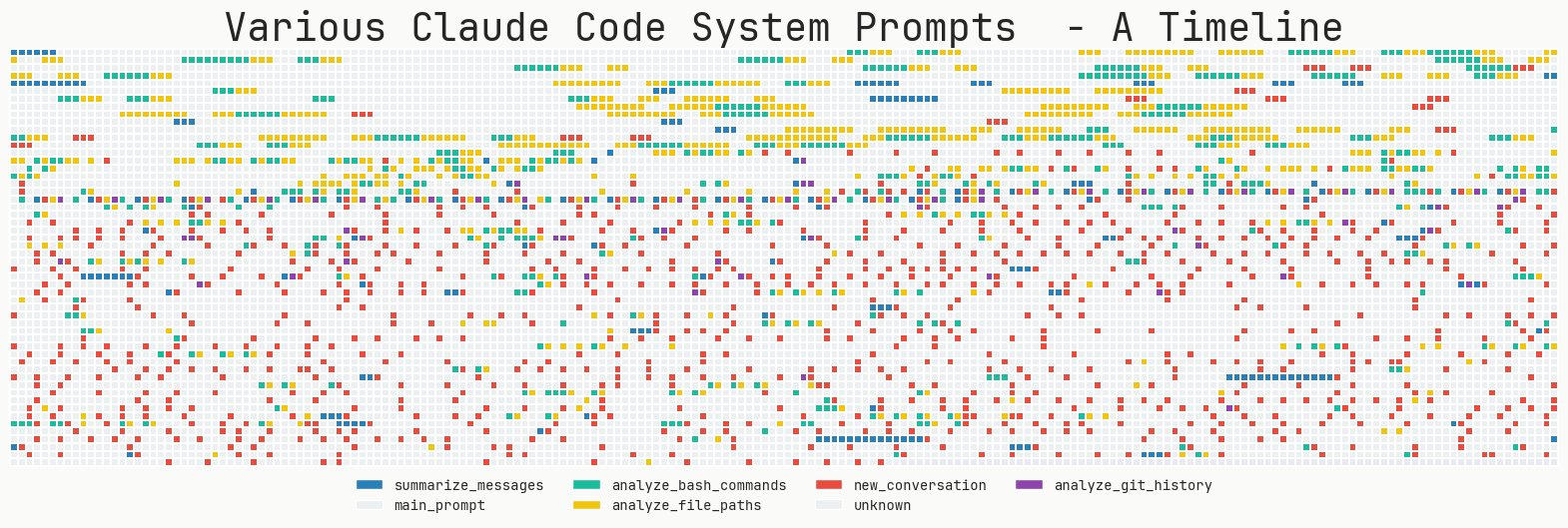

Claude Code is one of the most enjoyable AI agent workflows to date. It not only makes directed code editing and improvised tool development less annoying; using it is described as a pleasure in itself. It has enough autonomy to accomplish interesting tasks, while not giving developers the sudden...

When building knowledge base applications based on Retrieval-Augmented Generation (RAG), document preprocessing and chunking are key steps that determine the final retrieval results. The open-source RAG engine RAGFlow provides a variety of chunking strategies, but its official documentation lacks clear explanations of the methodology and concrete examples, which leaves many developers confused...

When building Retrieval-Augmented Generation (RAG) systems, developers often encounter the following perplexing scenarios: Headers of cross-page tables are left on the previous page, so the data loses its association with them. Models confidently return completely incorrect content when faced with unclear scans. The summation symbol “Σ” in a mathematical formula is incorrectly recognized as the letter “E”. Watermarks in documents...

Let's start with a simple task: scheduling a meeting. When a user says, “Hey, let's see if we can do a quick sync tomorrow?”, an AI that relies only on prompt engineering might reply, “Yes, tomorrow is fine. What time would you like to schedule it, please?” This response, while correct, is mechanical and...

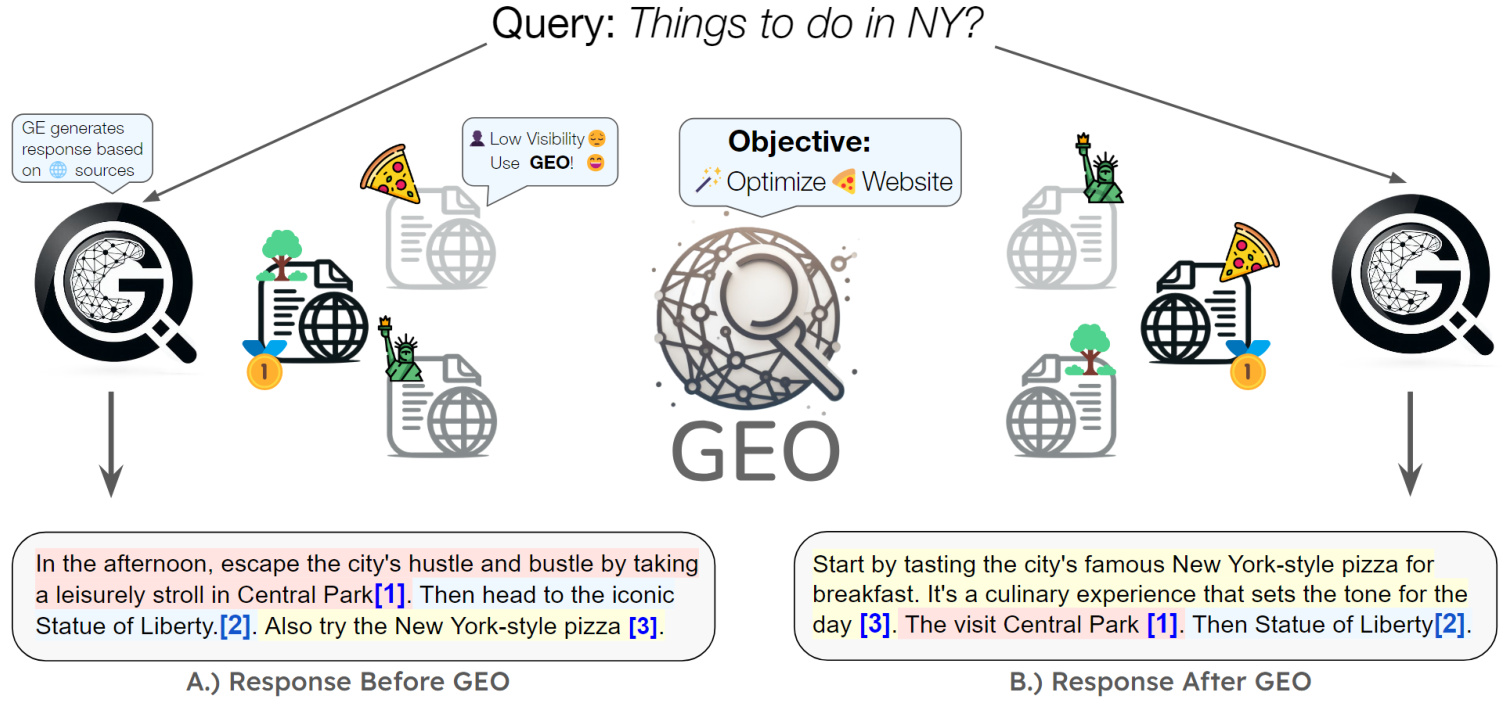

Abstract: The emergence of large language models (LLMs) has opened up a new paradigm of search engines that use generative models to gather and summarize information to answer user queries. We unify this emerging technology under the framework of generative engines (GEs), which generate accurate and personalized responses, rapidly replacing traditional search engines such as Google and ...

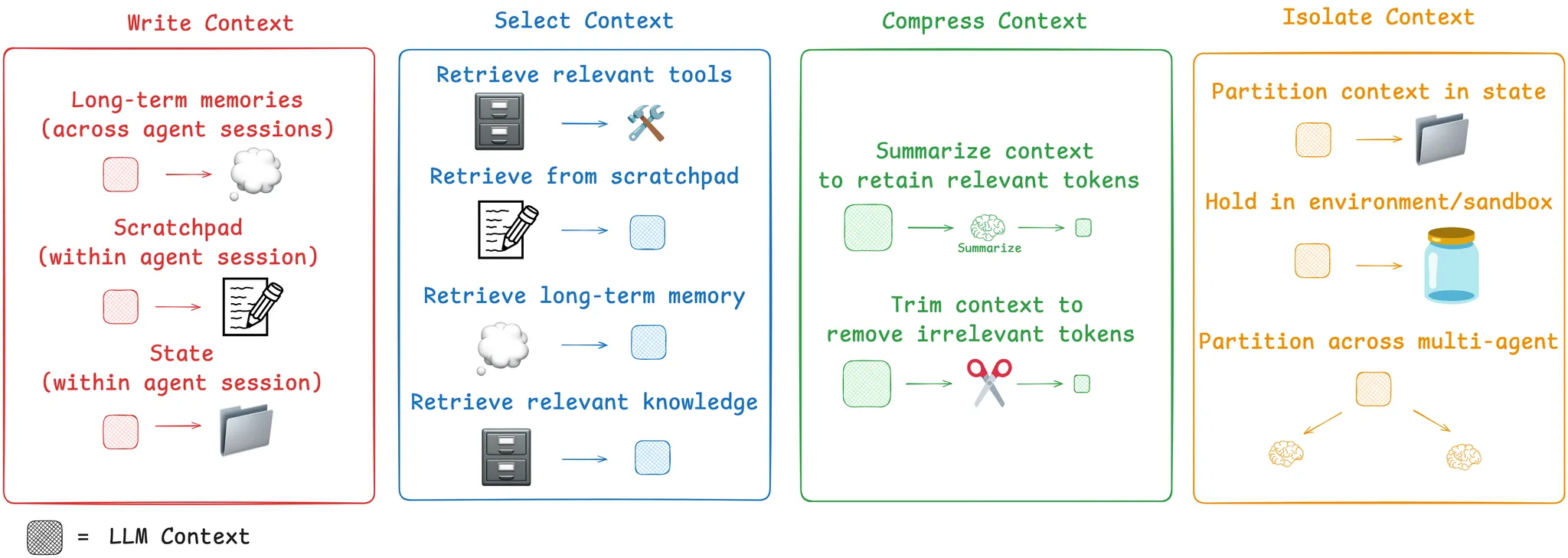

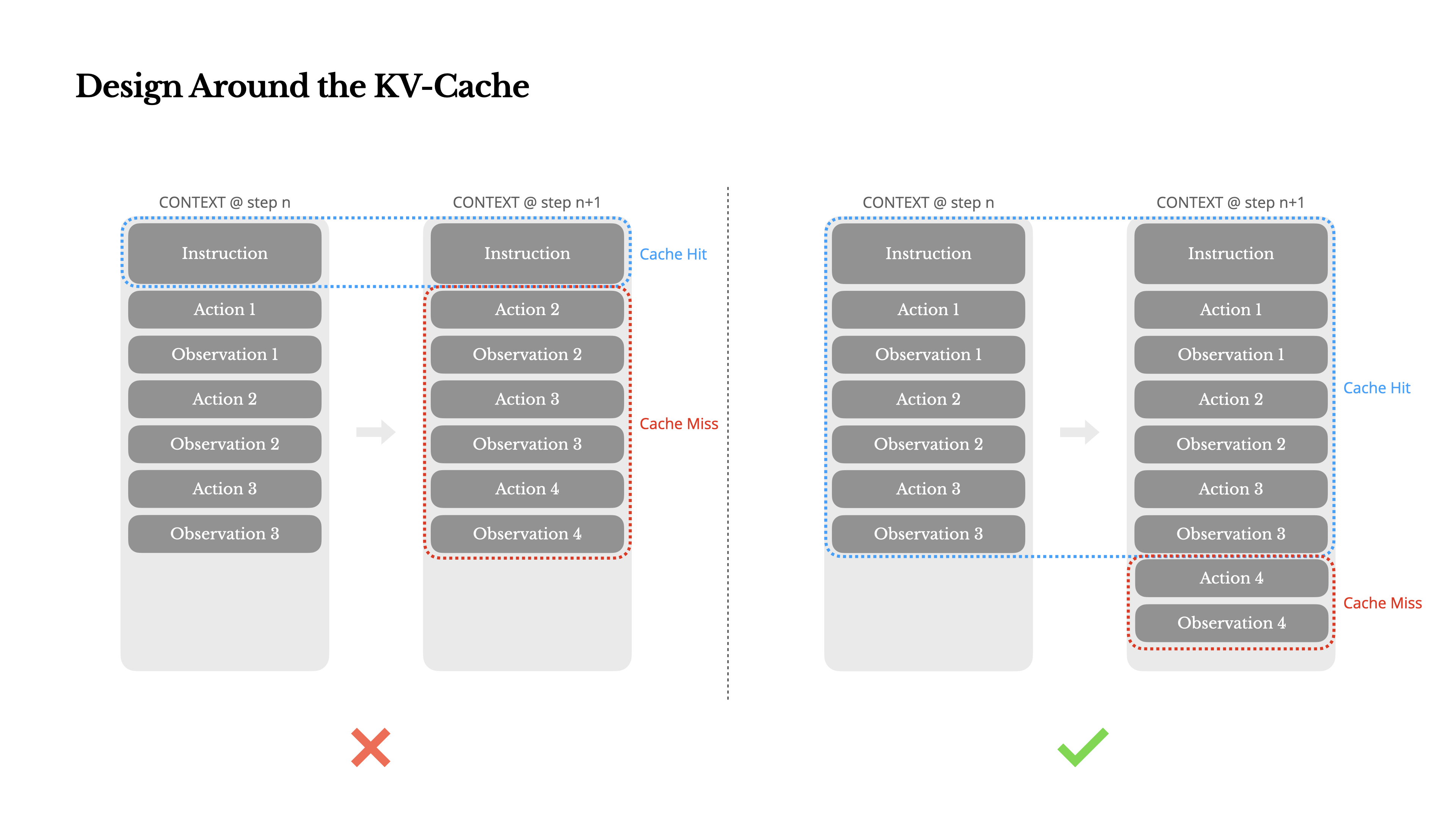

In the early days of the Manus project, the team faced a critical decision: should they train an end-to-end agent model based on open-source models, or should they build agents that take advantage of the powerful “in-context learning” capabilities of cutting-edge models? Ten years ago, developers didn't even have that choice in the field of natural language processing. In the era of BERT, any model...

When building AI systems such as RAG pipelines or AI agents, retrieval quality determines the upper limit of the system. Developers typically rely on two main retrieval techniques: keyword search and semantic search. Keyword search (e.g. BM25) is fast and good at exact matching. However, once the wording of a user's question changes, the recall rate drops. ...

Talking with a friend who always forgets the conversation and has to start from the beginning every time is inefficient and exhausting. However, this is precisely the norm for most current AI systems. They're powerful, but they're generally missing a key ingredient: memory. To build AI agents that can truly learn, evolve, and collaborate, memory is not...

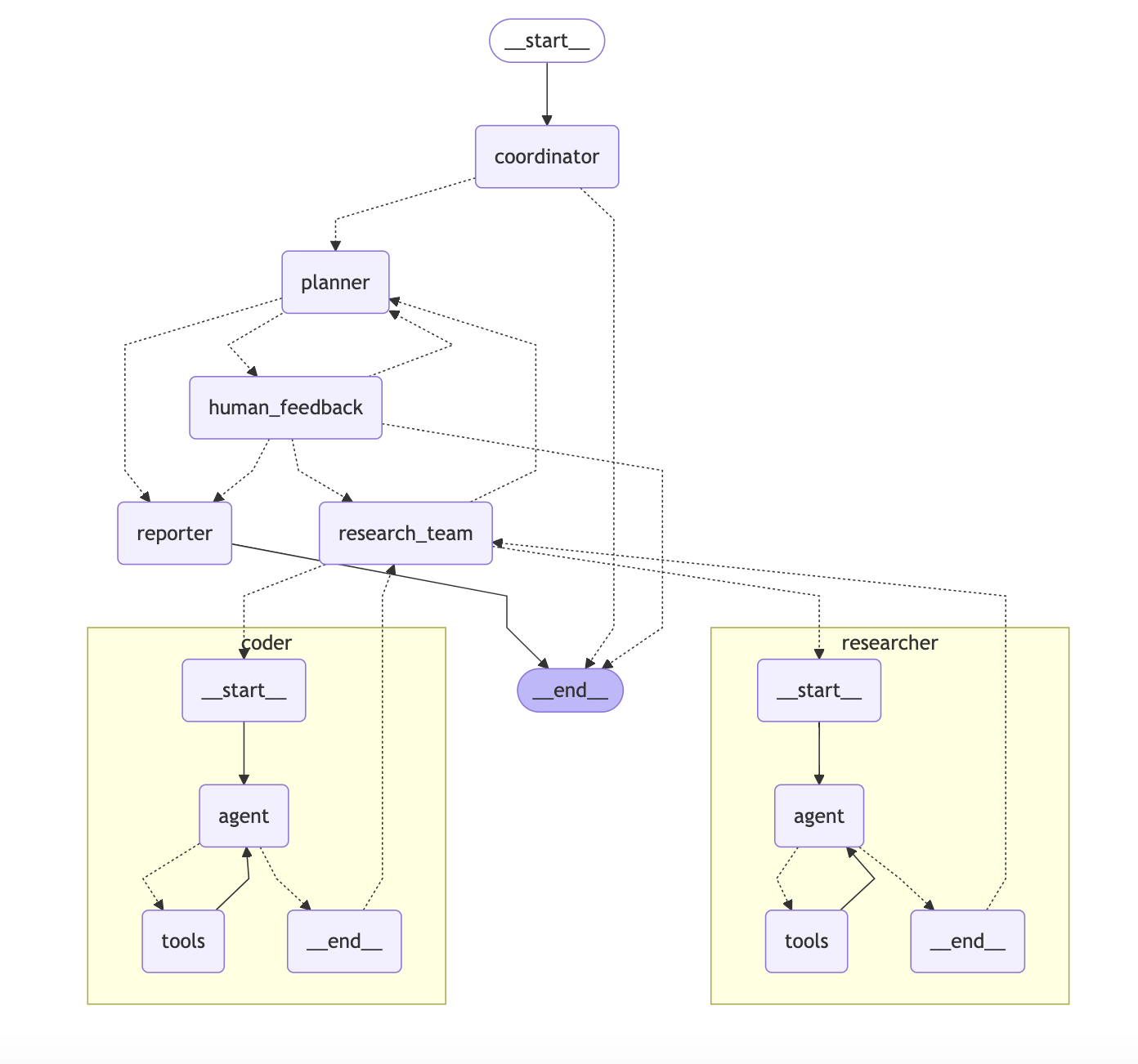

From API calls for Large Language Models (LLMs) to autonomous, goal-driven Agentic Workflows, there is a fundamental shift in the paradigm of AI applications. The open source community has played a key role in this wave, giving rise to a plethora of AI tools focused on specific research tasks. These tools ...

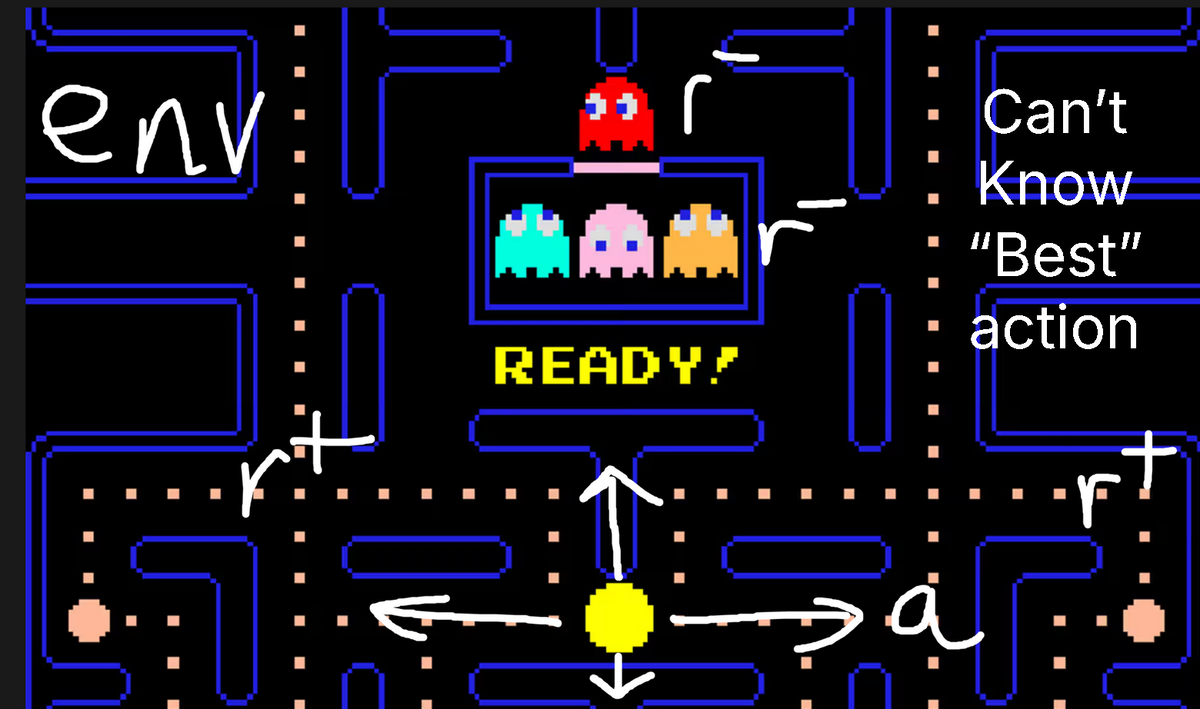

Learn all about Reinforcement Learning (RL) and how to train your own DeepSeek-R1 reasoning model using Unsloth and GRPO. A complete guide from beginner to advanced. 🦥 What you'll learn What is RL? RLVR? PPO? GRPO? RLHF? RFT?...

With the rapid development and wide application of large language model technology, its potential security risks have increasingly become a focus of industry attention. To address these challenges, many of the world's top technology companies, standards organizations, and research institutions have built and released their own security frameworks. In this paper, we will analyze nine representative security frameworks for large models, aiming to provide a comprehensive overview for the related areas...

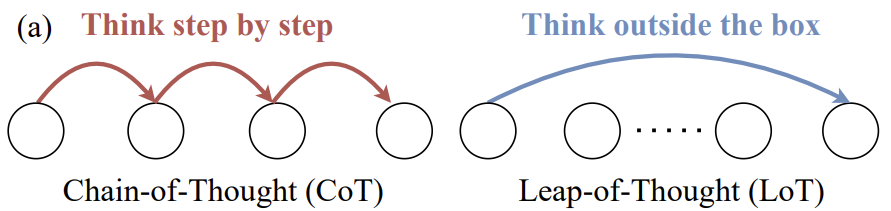

In the field of Large Language Model (LLM) research, a model's Leap-of-Thought ability, i.e., creativity, is no less important than the logical reasoning ability represented by Chain-of-Thought. However, there is still a relative lack of in-depth discussion and valid assessment methods for LLM creativity, which in ...

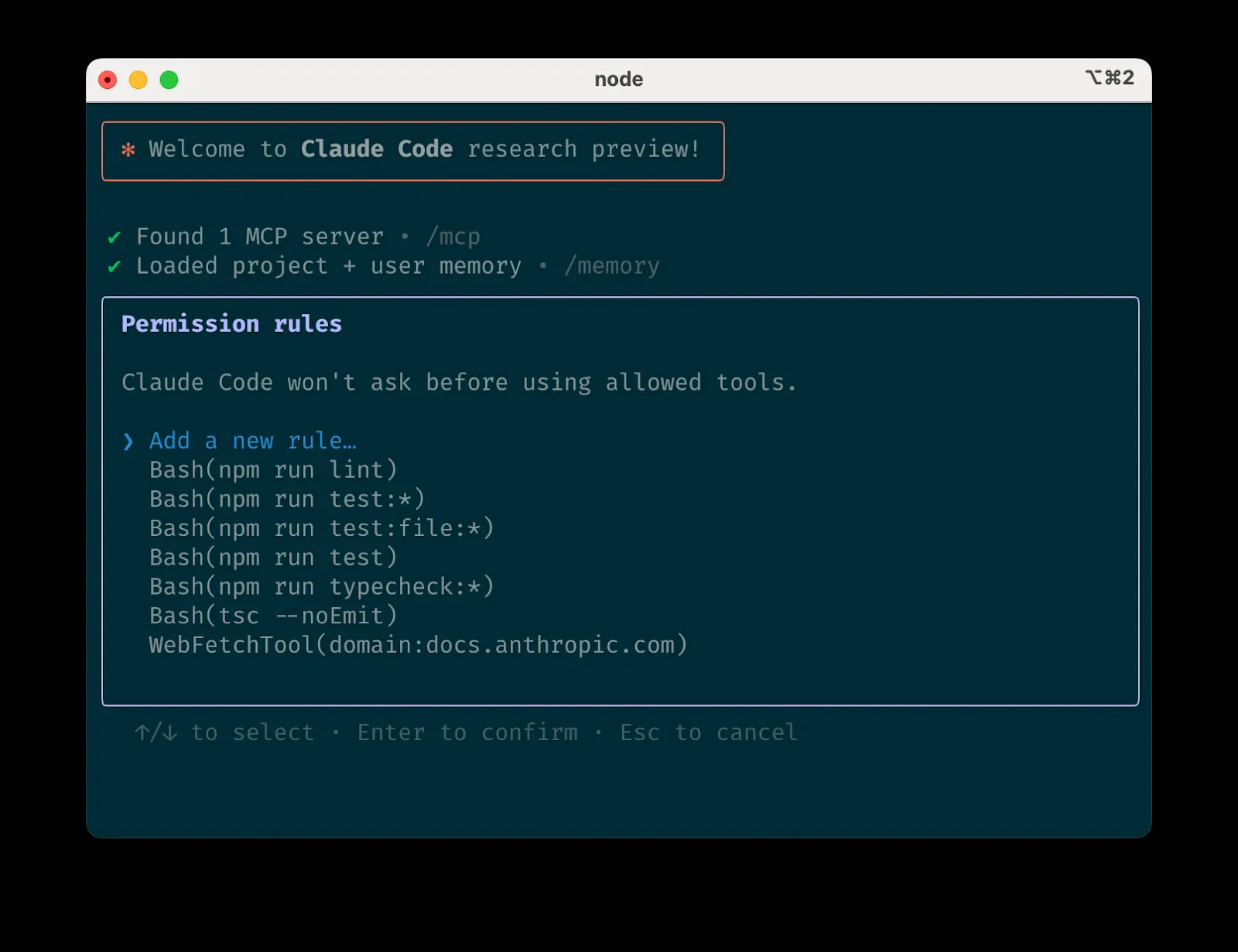

Mastering Claude Code: Hands-on Agentic Coding Tips from the Front Lines. Claude Code is a command-line tool for agentic coding. By agentic coding, we mean giving AI a certain degree of autonomy to understand tasks, plan steps, and perform actions (such as...

The GPT-4.1 family of models offers significant improvements in coding, instruction following, and long-context processing over GPT-4o. Specifically, it performs better on code generation and repair tasks, understands and executes complex instructions more accurately, and can efficiently handle longer input text. This prompt engineering guide brings together OpenAI ...

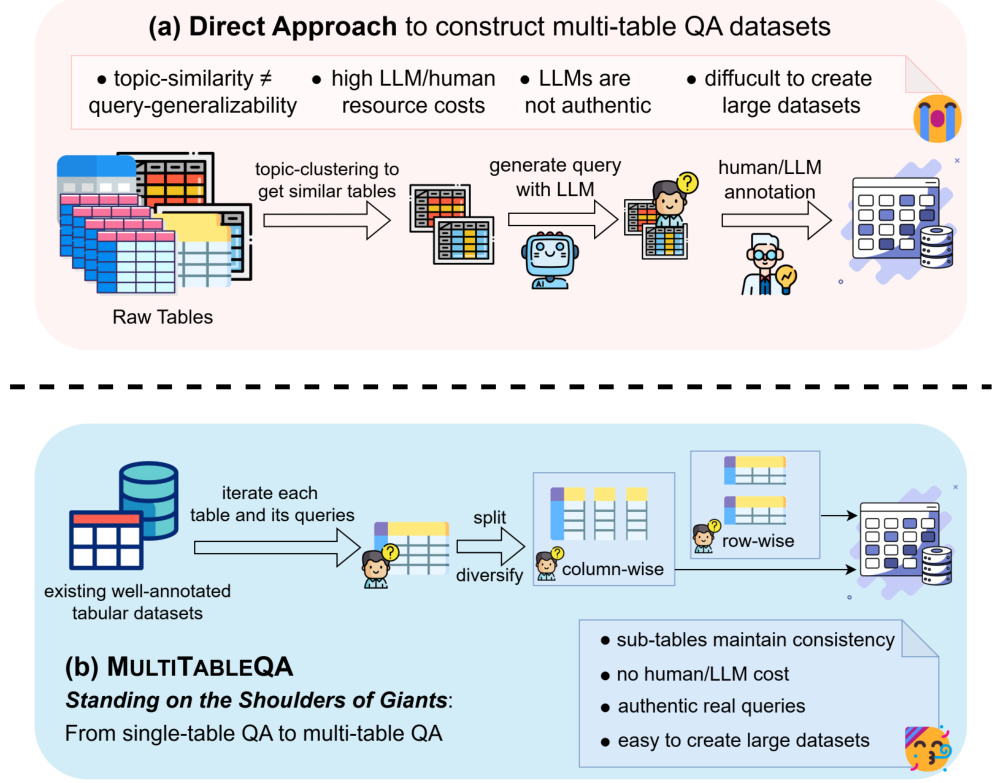

1. INTRODUCTION: In today's information explosion, a large amount of knowledge is stored in the form of tables in web pages, Wikipedia, and relational databases. However, traditional Q&A systems often struggle to handle complex queries across multiple tables, which has become a major challenge in the field of artificial intelligence. To cope with this challenge, researchers have proposed GTR (Graph-Table ...

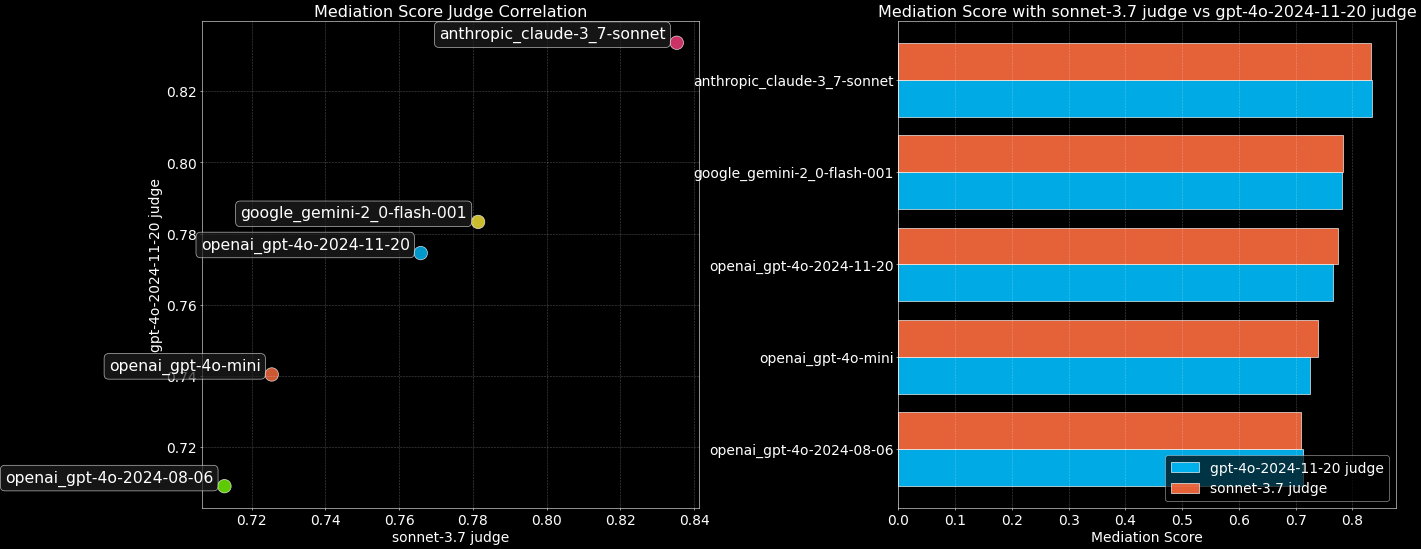

As the capabilities of large language models (LLMs) evolve at a rapid pace, traditional benchmarks such as MMLU are showing their limits in distinguishing top models. Knowledge quizzes and standardized tests alone can no longer comprehensively measure the nuanced abilities that are critical in real-world interactions, such as emotional intelligence, creativity, judgment, and communication skills. It is in this ...

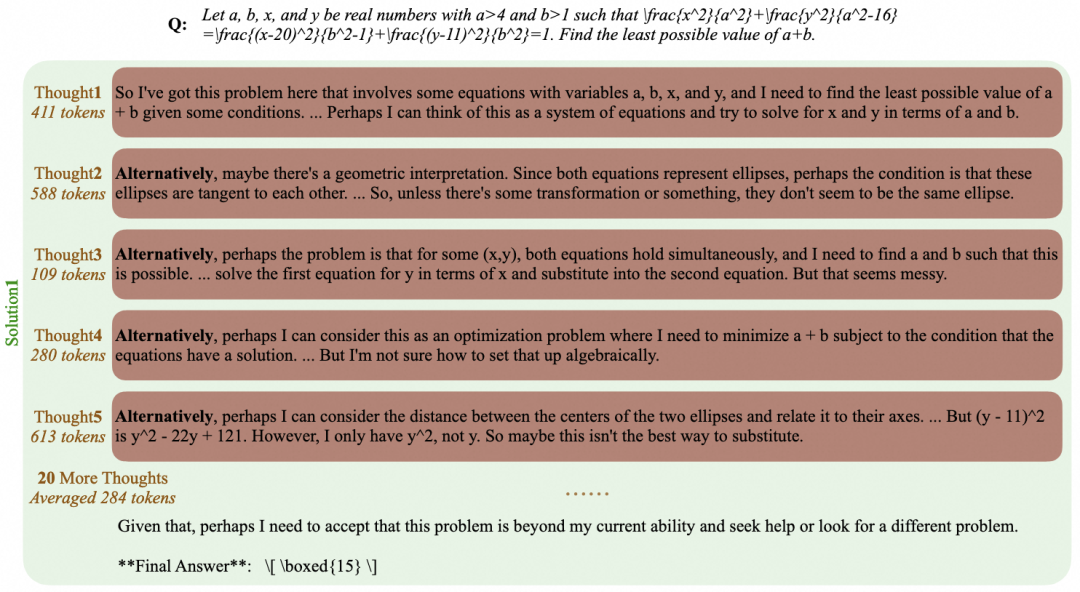

Large language models (LLMs) are advancing rapidly, and their reasoning ability has become a key indicator of their intelligence level. In particular, models with long reasoning capabilities, such as OpenAI's o1, DeepSeek-R1, QwQ-32B, and Kimi K1.5, which simulate the human deep thinking process by solving compound...

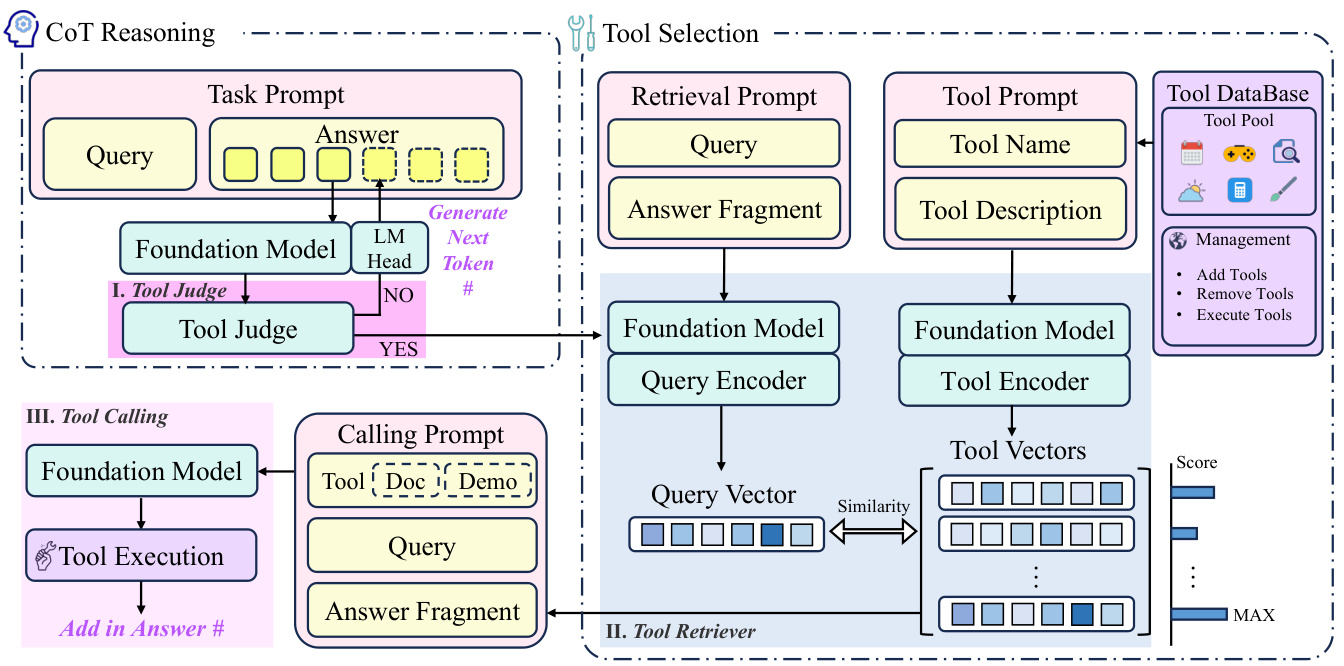

INTRODUCTION: In recent years, Large Language Models (LLMs) have made impressive progress in the field of Artificial Intelligence, and their powerful language comprehension and generation capabilities have led to a wide range of applications in several domains. However, LLMs still face many challenges when dealing with complex tasks that require the invocation of external tools. For example, when a user asks “What is the weather at my destination tomorrow...

The Python ecosystem has never had a shortage of package management and environment management tools, from the classic pip and virtualenv to pip-tools and conda to the modern Poetry and PDM. Each tool has its area of specialization, but together they often make a developer's toolchain fragmented and complex. Now, ...
