Original: [State-of-the-art Code Generation with AlphaCodium - From Prompt Engineering to Flow Engineering]

By Tal Ridnik

AlphaCodium: Leading the Way to a New Realm of Code Generation, from Hint Engineering to Process Engineering

skim through

The challenges of code generation are different from ordinary natural language processing -- they involve strictly following the syntactic rules of the target programming language, recognizing normal and boundary cases, attending to numerous details in the problem specification, and coping with other problems and requirements specific to the code. As a result, many of the optimization techniques commonly used in the field of natural language generation may not be applicable for code generation tasks.

In this study, we propose a novel code generation method called AlphaCodium -- A test-based, phased, code-focused, iterative treatment process. This approach significantly improves the ability of the Large Language Model (LLM) to deal with code problems.

We tested AlphaCodium on a challenging code generation dataset, CodeContests, which contains competition programming topics from platforms such as Codeforces. Our approach consistently achieves significant performance gains in these tests.

For example, on the validation dataset, after using the AlphaCodium process, the accuracy (pass@5) of GPT-4 improved from 19% with a single well-designed direct cue to 44%.Not only does AlphaCodium outperform previous research, such as AlphaCode, but it also requires significantly less computational resource AlphaCodium.

We believe that many of the principles and best practices developed in this work are generally applicable to a wide variety of tasks in code generation. Our latest open-source project [AlphaCodiumOur AlphaCodium solution for CodeContests is shared in ], with full dataset evaluation and benchmarking scripts for further research and exploration by the community.

CodeContests dataset parsing

[CodeContests] is a challenging programming dataset from Google Deepmind. It draws from data such as [CodeforcesA competition programming platform such as ] features a selection of about 10,000 programming topics designed to train and evaluate large language models (large language models such as GPT or DeepSeek) Ability to solve complex programming problems.

This study did not focus on developing an entirely new model, but rather on creating a programming process that is applicable to a variety of large language models that are already capable of handling coding tasks. Therefore, we focused on the validation and test sets of CodeContests, which consisted of 107 and 165 programming problems. Figure 1 shows an example of a typical problem from the dataset:

Figure 1. A standard problem in CodeContests.

Each problem includes a problem description and some publicly available test data that can be used directly as model input. The challenge is to write a program that can give the correct answer for any legitimate input. In addition, there is a test set, which is not publicly available, that is used to evaluate the correctness of the submitted program.

Why are CodeContests an ideal dataset for testing the programming power of large language models? First, unlike other programming contest datasets, CodeContests contains a large amount of private test data (about 200 test cases per problem) to ensure the accuracy of the evaluation. Second, large language models are typically not good at noticing details in problem descriptions that are often critical to finding the right solution.The problem descriptions in CodeContests are typically both complex and detailed, full of nuances that affect the solution (a typical example is illustrated in Figure 1). This design simulates the complexity of real-world problems, forcing the model to consider multiple factors, which is in contrast to some of the simpler and more straightforward datasets (e.g. [HumanEval]) contrasts sharply. A typical HumanEval programming problem is illustrated in Appendix 1.

Figure 2 illustrates how the model analyzes the problem in Figure 1 in depth. Through in-depth analysis, the problem becomes clearer and more organized, which emphasizes the importance of a deeper understanding of the problem during programming.

Figure 2. Self-reflection generated by the AI for the problem described in Figure 1.

Proposed methodology

When dealing with the complex challenges of code generation, we have found that neither single-prompt optimizations nor continuous-thinking prompts have significantly improved the problem-solving efficiency of Large Language Models (LLMs) on CodeContest. This is because the model often struggles to fully understand the problem, and thus repeatedly generates code that is incorrect or unable to cope with new test cases. Approaches applicable to general natural language processing may not be ideal for code generation tasks. Such tasks hide great potential, such as repeatedly executing the generated code and verifying it against known examples. In contrast to cue optimization techniques in regular natural language processing, we find that solving CodeContest's problem using code generation and testing specifically forworkflowsmore effective. This process is centered arounditeration (math.)The process unfolds, i.e., we continually run and tweak the generated code so that it passes the input-output tests. The two key aspects of this code-specific process are:

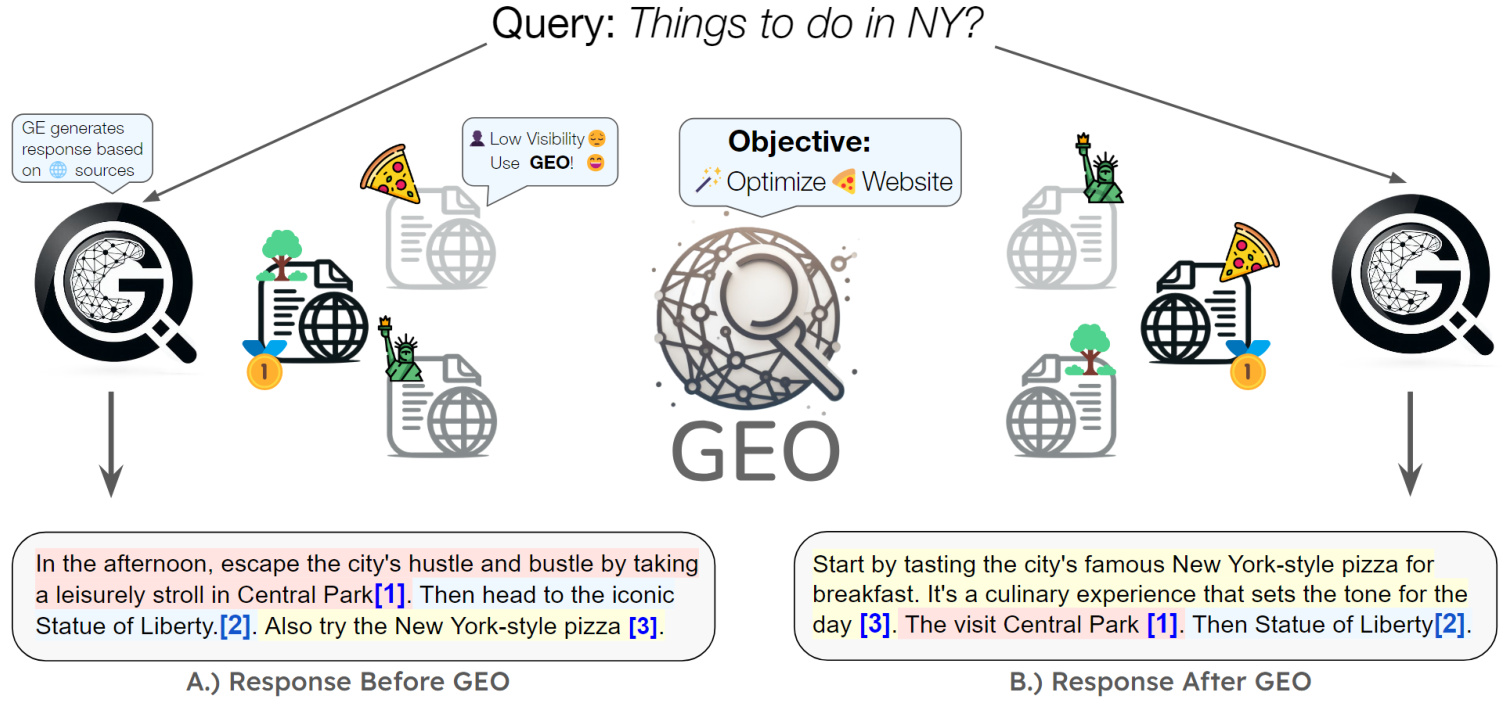

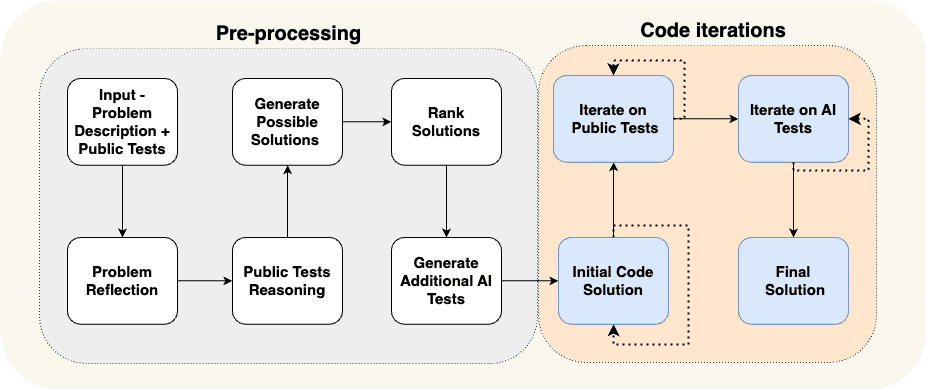

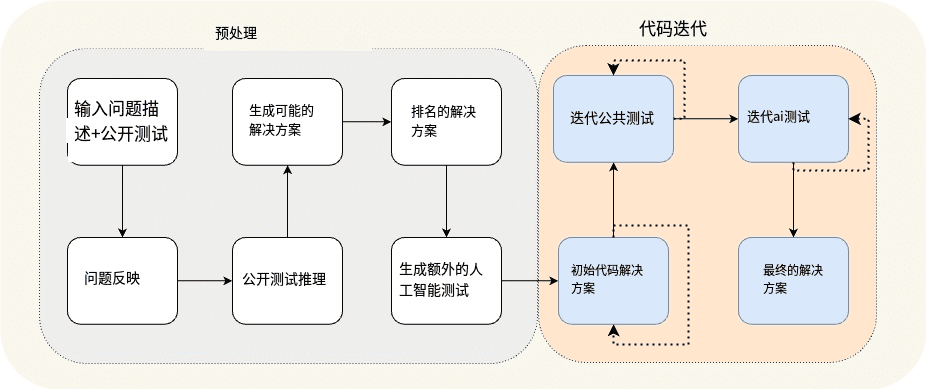

(a) generating additional data in the preprocessing phase, such as self-reflection and reasoning for open test cases, to support the iterative process, and (b) augmenting open test cases with additional test cases generated by AI. In Fig. 3, we show the process we designed to solve the race programming problem:

Figure 3. The proposed AlphaCodium process.

Figure 3 Build preprocessing and code iteration flow

The process in Figure 3 is divided into two main phases:

- preprocessing stage, we reason about the problem using natural language, which is a linear process.

- Code Iteration phase, including multiple iterative sessions in which we generate, run, and fix code for various tests.

In Table 1, we review these different stages in detail:

| Stage name | mission statement |

| Problem Reflection | Summarize the problem in the form of concise bullet points that address the problem's objectives, inputs, outputs, rules, constraints, and other important details. |

| Logical analysis of open test cases | Describe how the inputs to each test case lead to a particular output. |

| Conceptualizing possible solutions | Suggest 2-3 possible solutions and describe them in layman's terms. |

| Solution Evaluation | Evaluate the various possible solutions and select the best one, taking into account its correctness, simplicity and robustness. (It is not necessary to limit oneself to the most efficient solution). |

| Supplemental AI testing | Supplement the problem with 6-8 different types of input-output tests that attempt to cover situations and aspects not covered by the original open test cases. |

| Initial code program | The goal of this phase is to form an initial code solution to the problem. It is important that this code should be as close to the correct answer as possible, so that it is more likely to succeed in the correction process that follows. The operation process is as follows: - Select a possible scenario, write the appropriate code for it, and try it out in selected public test cases and AI tests. - Repeat this process until the test is passed or the maximum number of attempts is reached. - The first code that passes the test, or the code whose output is closest to the correct answer, will be used as the base code for subsequent steps. |

| Iterative optimization of open test cases | Take the base code as a starting point, run it one by one in open test cases and optimize it. If the code has a problem in one of the tests, try to fix it based on the error message. |

| Iterative Optimization for AI Testing | Continue iterative optimization in AI-generated tests. Utilize "test anchors" (a technique for fixing specific elements in tests to more accurately debug and improve code). |

Table 1. Characterization of the AlphaCodium phases.

In exploring the proposed process, we gained some deep intuitions and insights.

firstlycumulative knowledgestages of the process: we will start with simple tasks and gradually challenge ourselves with more complex problems. For example, in the first step of the process, _Self-Reflection_, we learn knowledge that can be used in subsequent, more difficult steps, such asGenerating possible solutions. The preprocessing phase of the process produces results that fuel the most challenging and critical part of the process: code iteration, where we actually try to write code that solves the problem correctly.

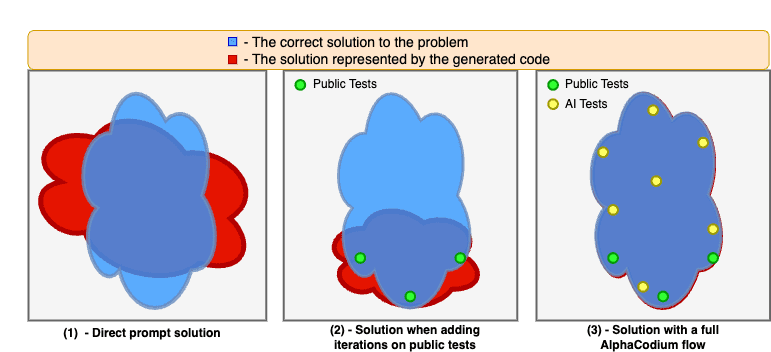

Next.Generating additional AI tests is easier than generating a full set of solution code -- This process relies heavily on understanding the problem and basic brute-force solving or logical reasoning without having to fully solve the problem to generate useful input-output test pairs. This is different from writing a complete and correct solution code, which requires us to come up with a complete algorithmic scheme that can correctly respond to any input-output test pair. As a result, we can create more AI tests and use them to optimize the code creation phase, as shown in Figure 4. We also further enhance the effectiveness of these additional tests by requiring the model to focus on what is not covered by the original public test cases, such as handling large inputs, edge cases, etc.

Finally.Multiple steps can be combined into a single large language model (LLM) call -- The process shown in Figure 3 is a conceptual demonstration, emphasizing the main steps of the process. In practice, by structuring the output (see next section), we can combine multiple phases into a single biglanguage model call to conserve resources or to improve the performance of the model when dealing with specific tasks at the same time.

Figure 4. demonstrates the improvements made when applying the AlphaCodium process.

Models often struggle when they solve code problems based only on direct hints. Iterating on publicly available test cases stabilizes and improves the solution, but leaves "blind spots" due to the lack of comprehensiveness of publicly available test cases. When we use the full AlphaCodium process, including the preprocessing phase and iterating on public and AI-generated tests, we can further improve the quality of the solution and significantly increase the success rate of problem solving.

Design concepts for code

In this section, we present some design concepts, techniques, and best practices that we have found useful when solving code generation problems. The AlphaCodium process we present in Figure 3 makes extensive use of these design concepts:

YAML structured output: A key part of our proposed process is the use of structured output - requiring the model to generate a YAML-formatted output equivalent to a specific Pydantic class. An example:

…

Your goal is to come up with possible solutions.

Ensure that the objectives, rules and constraints of the problem are fully considered in each program.

The output shall be a YAML object corresponding to the $PossibleSolutions type, according to the following Pydantic definition:

class Solution(BaseModel):

name: str = Field(description="Name of the solution")

content: str = Field(description=Description of the solution")

why_it_works: str = Field(description="Why this solution works. Needs to be specifically detailed for the rules and goals of the problem.")

complexity: str = Field(description="The complexity of the solution")

class PossibleSolutions(BaseModel):

possible_solutions: List[Solution] = Field(max_items=3, description="A list of possible solutions to the problem. Ensure that each solution fully considers the rules and goals of the problem and has a reasonable run time on a modern computer - no more than three seconds for problem constraints with a large number of inputs.")

Table 2. Examples of structured output prompts (Generate Possible Solutions phase).

Structured output reduces the complexity of "cue engineering" and the need for hacking, and instead presents complex tasks in a straightforward, code-like manner. It also makes it possible to obtain complex answers with multiple stages that reflect logical and organized thought processes.

Although the new version of GPT supports [JSON style] output, but we believe that, especially in code generation tasks, YAML output is more appropriate, as detailed in the Appendix.

Bullet points analysis - When a Large Language Model (LLM) is asked to analyze a problem, better results are usually obtained by asking for the output in a Bullet points format. Bullet points promote a deeper understanding of the problem and force the model to divide the output into logical semantic regions, thus improving the quality of the results. For example, to self-reflect on a problem in terms of bullet points (see Figure 2), each bullet point represents a semantic understanding of a different part of the problem-general description, goals and rules, input structure, and output structure.

Big Language Models are better at generating modular code - When we let the Large Language Model (LLM) go to work writing a long block of individual functions, we often run into problems: there are often errors or logic holes in the code. Worse, such large and monolithic chunks of code can interfere with subsequent iterations to fix them. Even when error information is provided, it is difficult for the model to accurately locate and fix the problem. However, if we explicitly instruct the model to "_Split the generated code into multiple small sub-functional modules and give them meaningful names_", the results will be much better, with less errors in the generated code and a higher success rate in the iterative fixing phase.

The importance of flexible decision-making and dual validation - Large language models often struggle with code tasks that require thoughtful, rational inferences and the ability to make serious, non-routine decisions. For example, when generating additional tests for a problem, the tests generated by the model often contain errors. To address this problem, we introduce the process of double validation. In this process, after generating the initial output, the model is asked to generate the same output again and correct it if necessary. For example, after receiving as input its own generated AI tests, the model needs to re-generate these tests and correct the errors in them (if any) in time. We found that this double validation step not only motivates the model to think and reason critically, but is also more effective than asking direct yes/no questions such as "Is this test correct?" such as yes/no questions.

Delay decision making, avoid direct questions, give space for exploration - When we ask complex questions directly to the model, we often get wrong or unrealistic answers. Therefore, we took an approach similar to the one Karpathy describes in the tweet below, accumulating data incrementally and gradually transitioning from simple to complex tasks:

- Start with the simplest tasks, namely self-reflection on the problem and reasoning about open test cases.

- Then move to generating additional AI tests and possible solutions to the problem.

- Only after obtaining the model's answers to the above tasks do we move on to the actual iterative process of generating code and running fixes.

Karpathy: This fits perfectly with the "need for a large language model (LLM)". Token The concept of "to think". In some cases, the chain of thought may simply serve to provide an additional store of information, rather than playing other, more important roles.

As another example, instead of choosing a single algorithmic solution, we evaluate and rank multiple possible solutions, prioritizing the top-ranked one for initial code writing. Since the model can be wrong, we prefer to avoid making irreversible decisions, and instead leave room for exploration, and code iterations that try different possible solutions.

Testing Anchor Technology - Despite being validated twice, some AI-generated tests can still be wrong. This poses a problem: when a test fails, how do we determine whether it is the code or the test itself that is at fault? When querying the model directly for "what is wrong", we often get unrealistic answers, sometimes leading to incorrectly modified code. To address this challenge, we introduced an approach called "test anchors":

- Start by iterating over publicly available tests that are known to be correct. Once this step is completed, designate all passed tests as benchmark tests (anchor tests).

- Then start checking the AI-generated tests one by one.

- Those that pass the test are added to the anchor test list.

- If the test fails, it defaults to an error in the code, which in turn attempts to correct the code. Importantly, the corrected code must also pass all existing anchor tests.

In this way, anchor tests act as a protection against incorrectly fixing our code as we fix it. Additionally, another improvement for anchor point tests is to prioritize the tests generated by the AI in order of difficulty. This made anchor tests more readily available early in the iterative process, which provided additional safeguards when dealing with more complex AI tests, especially when dealing with AI tests that were more likely to have incorrect output. This strategy effectively enhances the stability and reliability of the testing process, especially when dealing with complex and demanding AI-generated tests.

in the end

Comparison of Direct Tips with AlphaCodium

In Figure 5, we compare the results of AlphaCodium with those of a single well-designed direct hinting method. The evaluation criterion is pass@k (success rate in solving the problem), i.e., the proportion of solutions generated by using k for each problem.

Fig. 5. Comparison of the AlphaCodium method with the direct cueing method on different models.

It can be seen that the AlphaCodium approach significantly and consistently improves the performance of Large Language Models (LLMs) in solving programming problems with CodeContests. This conclusion applies to both open-source models (e.g., DeepSeek) and closed-source models (e.g., GPT), both on validation and test sets.

Comparison with other studies:

In Table 3, we show the results of AlphaCodium compared to other methods in the literature.

| mould | data set | methodologies | score |

| GPT-3.5 | validation set | AlphaCodium (pass@5) | 25% |

| GPT-3.5 | validation set | CodeChain (pass@5) | 17% |

| GPT-3.5 | test set | AlphaCodium (pass@5) | 17% |

| GPT-3.5 | test set | CodeChain (pass@5) | 14% |

| GPT-4 | validation set | AlphaCodium (pass@5) | 44% |

| DeepMind Fine-tuning | validation set | AlphaCode (pass@10@1K) | 17% |

| DeepMind Fine-tuning | AlphaCode (pass@10@100K) | 24% | |

| GPT-4 | test set | AlphaCodium (pass@5) | 29% |

| DeepMind Fine-tuning | test set | AlphaCode (pass@10@1K) | 16% |

| DeepMind Fine-tuning | test set | AlphaCode (pass@10@100K) | 28% |

| Gemini-pro | AlphaCode2: Comparison results for AlphaCode2 are not reported in existing CodeContests versions. According to Technical report on AlphaCode2, the researchers compared the results of AlphaCode with those of AlphaCode2 on an unpublished dataset and found a significant reduction in the number of large language model (LLM) calls (@100In the case of AlphaCode2, the performance of AlphaCode2 is comparable to that of AlphaCode, with both 29%, pass@10。 |

Table 3: Comparison of AlphaCodium with other research works in the literature

Fig. 6: Efficiency comparison.

The figure shows that the AlphaCodium method demonstrates excellent performance under a variety of models and evaluation criteria, especially when solving programming challenges using large language models. These comparative results not only demonstrate AlphaCodium's technical innovation, but also highlight its effectiveness and applicability in real-world applications.

Overall, AlphaCodium demonstrates its remarkable potential in the field of intelligent programming, especially in enhancing the ability of large language models to handle complex programming problems. These findings provide important insights for future research and development, and provide valuable references for further development and optimization of large language models.

Fig. 6: Efficiency comparison. This is how AlphaCodium compares to other solutions in terms of accuracy vs. number of Large Language Model (LLM) calls. Compared to AlphaCode, AlphaCodium requires thousands of times fewer LLM calls to achieve similar accuracy.

When we compare AlphaCodium with the same GPT-3.5 model and the "5-try pass rate" criterion of the [...CodeChainWhen compared to [ ], it is clear that AlphaCodium performs better. When compared to [AlphaCodeWhen comparing the methods of [AlphaCode], it is important to note that AlphaCode employs a different code generation strategy: it optimizes a specific model to solve the coding problem, generates a large number of coding scenarios, and then classifies them, ultimately selecting a number of scenarios to submit from the main categories. For example, "10 attempts to pass out of 100,000 solutions" means that it generates 100,000 solutions, classifies them, and then selects 10 to submit.AlphaCode uses a specially optimized model that uses a higher number of LLM calls, similar to an exhaustive strategy. Nonetheless, AlphaCodium performed better in terms of top results.

It is also worth mentioning that neither AlphaCode nor CodeChain provides a replicable solution, including a complete end-to-end evaluation script. There are many details to consider when evaluating the results, such as handling multiple solution topics, fault tolerance mechanisms, timeout issues, etc. Our comparison is based on the data reported in their papers, but for reliability and reproducibility of future comparisons, we provide a complete set of reproducible code and evaluation scripts.

Comparison of computational strength: AlphaCode vs AlphaCode2

In AlphaCodium's process, solving each problem requires about 15-20 calls to the Large Language Model (LLM), which means that in the course of making five attempts, about 100 calls to the LLM are required.

And AlphaCode doesn't explicitly report how many calls to the big language model are needed per problem. If we assume that it is called once per attempt (which is still unknown and may actually be more), then for each of the 10 attempts, filtered from the 100,000 solutions, it would need to call the large language model 1 million times, which is four orders of magnitude more than AlphaCodium. However, from the results we have seen, AlphaCodium performs much better, as Figure 3 clearly demonstrates.

A recently published study called AlphaCode2 ([...Technical Report]) in which the researchers evaluated a model called Gemini-Pro fine-tuned for programming problems. The study also explored benchmarking of CodeContests, but using an unpublished updated version. According to the AlphaCode2 report, with only about 100 samples, AlphaCode2 achieves the level of performance that AlphaCode achieves with millions of samples, which makes it more than 10,000 times more sample-efficient than AlphaCode. As a result, both AlphaCode2 and AlphaCodium are much more efficient than AlphaCode in terms of the number of large language model calls.

However, AlphaCode2 employs an elaborate system specifically designed for CodeContests competitions.trimmingThe AlphaCodium uses a modern base model, whereas AlphaCodium uses an unmodified general-purpose model. Even so, AlphaCodium improves the performance of the model without additional data and expensive training phases.

appendice

1) An example of a manual evaluation of a code problem:

/*

In a set of numbers, checks if there are two numbers with a distance between them that is less than a specific numerical threshold. >>>

has_close_elements({1.0, 2.0, 3.0}, 0.5) false >>>

has_close_elements({1.0, 2.8, 3.0, 4.0, 5.0, 2.0}, 0.3) true

*/

#include<stdio.h>

#include<vector>

#include<math.h>

using namespace std;

bool has_close_elements(vector<float> numbers, float threshold){

Table 4.The problem is relatively intuitive and simple, without much detail or subtlety for the model to reason about.

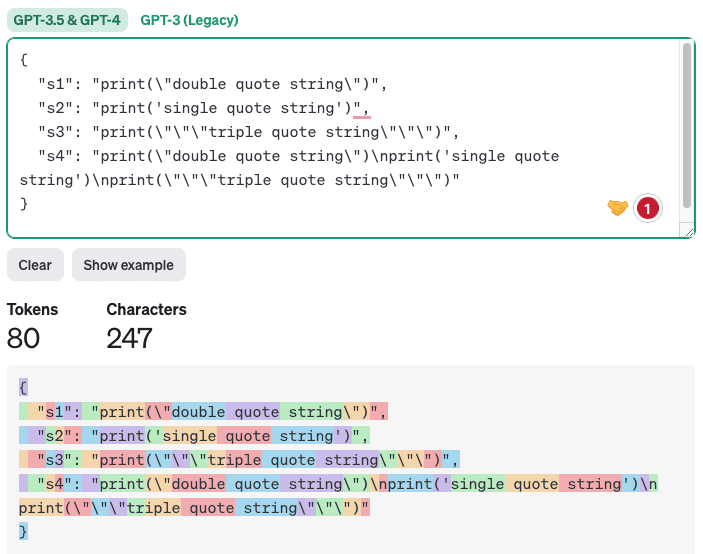

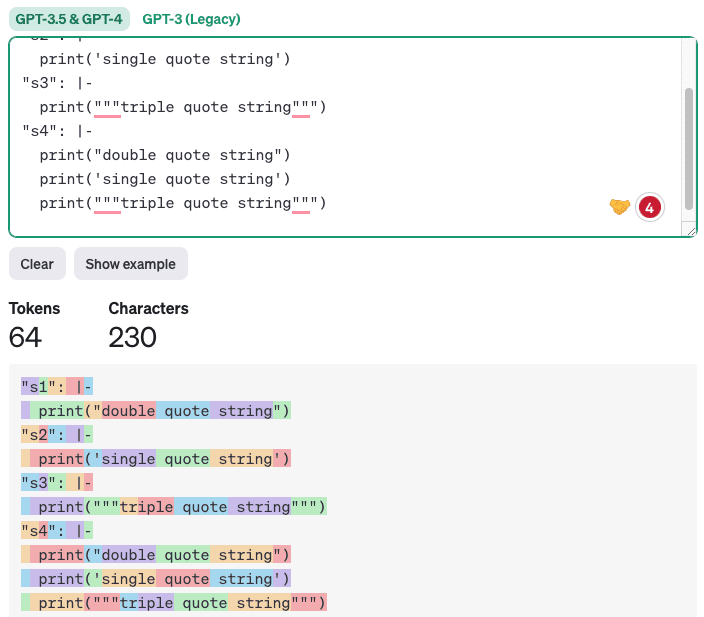

2) Why YAML output is better suited for code generation tasks than JSON output

Although the new version of GPT has [native support], but we believe that for code generation, YAML output is more appropriate. This is because generated code often contains single quotes, double quotes, and special characters. In JSON format, it is difficult for LLM to place these characters correctly because JSON output needs to be surrounded by double quotes. YAML output, on the other hand, [Adoption of block scalars] style, simply follow the indentation rules and any properly indented text or code is legal. Additionally, YAML output has fewer tokens, which means lower cost and faster inference times, as well as improved quality because the model has fewer non-critical tokens to focus on. Here's an example comparing JSON and YAML output (using [https://platform.openai.com/tokenizer] generated):

import json

import yaml

s1 = ‘print(“double quote string”)’

s2 = “print(‘single quote string’)”

s3 = ‘print(“””triple quote string”””)’

s4 = f”{s1}\n{s2}\n{s3}”

# Create a dictionary with keys as variable names and values as strings

data = {‘s1’: s1, ‘s2’: s2, ‘s3’: s3, ‘s4′: s4}

# Convert dictionary to JSON format string

json_data = json.dumps(data, indent=2)

print(json_data)

# Converting dictionaries to YAML format strings in block scalar style

yaml_data = yaml.dump(data, indent=2, default_style=’|’)

print(yaml_data)

Output:

Table 5.

JSON output:

Figure 7. Example of Token counting using JSON output.

A sample YAML output is shown below:

Figure 8. Example of Token counting using YAML output.

Obviously, generating code that only maintains proper indentation is not only more concise and clear, but also effective in reducing errors.