一个现象很常见:即使 RAG 系统用了最强的 LLM,Prompt 也经过了反复调校,问答效果依然不理想,答案要么上下文不全,要么存在事实错误。

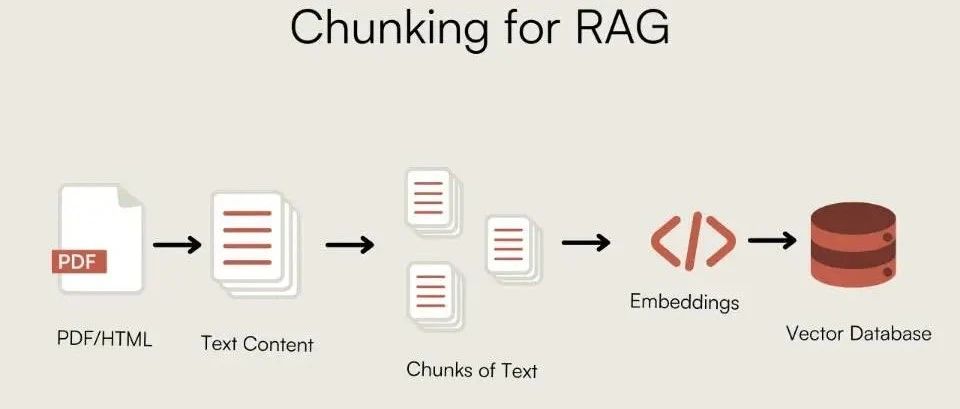

工程师们检查了检索算法,优化了 Embedding 模型,但常常忽略了数据进入向量库之前的关键步骤:文档分块。

不恰当的分块,等于给模型喂了一堆信息残缺的“坏数据”。模型的推理能力再强,也无法从碎片化的知识中拼凑出完整答案。分块的质量,直接决定了 RAG 系统性能的下限。

这篇文章不谈空泛的理论,而是聚焦于各类分块策略的实战代码和工程经验,为 RAG 系统打造坚实的地基。

为何要分块?

分块的必要性源于两个核心限制:

- 模型上下文窗口:大语言模型(LLM)无法一次性处理无限长度的文本。分块是将长文档切分为模型可以处理的片段。

- 检索效率与噪声:在检索时,如果一个文本块包含过多无关信息(噪声),就会稀释核心信号,导致检索器难以精确匹配用户意图。

理想的分块是在上下文完整性与信息密度之间找到平衡。chunk_size 和 chunk_overlap 是调控这一平衡的基础参数。chunk_overlap 通过在相邻块之间保留部分重复文本,来确保跨越块边界的语义连续性。

基础分块策略

固定长度分块

这是最直接的方法,按预设的字符数切割。它不考虑文本的任何逻辑结构,实现简单,但容易破坏语义完整性。

- 核心思想:按固定字符数

chunk_size切分文本。 - 适用场景:结构性弱的纯文本,或对语义要求不高的预处理阶段。

from langchain_text_splitters import CharacterTextSplitter

sample_text = (

"LangChain was created by Harrison Chase in 2022. It provides a framework for developing applications "

"powered by language models. The library is known for its modularity and ease of use. "

"One of its key components is the TextSplitter class, which helps in document chunking."

)

text_splitter = CharacterTextSplitter(

separator=" ", # Split on spaces

chunk_size=100, # Size of each chunk

chunk_overlap=20, # Overlap between chunks

length_function=len,

)

docs = text_splitter.create_documents([sample_text])

for i, doc in enumerate(docs):

print(f"--- Chunk {i+1} ---")

print(doc.page_content)

递归字符分块

LangChain 推荐的通用策略。它按预设的字符列表(例如 ["\n\n", "\n", " ", ""])进行递归分割,尝试优先保留段落、句子等逻辑单元。

- 核心思想:按层次化的分隔符列表进行递归切分。

- 适用场景:绝大多数文本类型的首选通用策略。

from langchain_text_splitters import RecursiveCharacterTextSplitter

# Using the same sample_text from the previous example

text_splitter = RecursiveCharacterTextSplitter(

chunk_size=100,

chunk_overlap=20,

# Default separators are ["\n\n", "\n", " ", ""]

)

docs = text_splitter.create_documents([sample_text])

for i, doc in enumerate(docs):

print(f"--- Chunk {i+1} ---")

print(doc.page_content)

参数调优:对于固定长度和递归分块,chunk_size 和 chunk_overlap 的设置至关重要。

chunk_size:决定每个块的大小。块太小,上下文信息不足;块太大,引入过多噪声,增加API调用成本。这个值通常根据Embedding模型的输入token限制来选择,常见的256,512,1024等值,正是为了适配BERT等模型的512token上下文窗口。chunk_overlap:决定相邻块之间的重叠字符数。设置合理的重叠(例如chunk_size的 10%-20%)可以有效防止在块边界处切断完整的语义单元,是保证语义连续性的关键。

基于句子的分块

以句子为最小单元进行组合,确保了最基本的语义完整性。

- 核心思想:将文本分割成句子,再将句子聚合成块。

- 适用场景:对句子完整性要求高的场景,如法律文书、新闻报道。

import nltk

try:

nltk.data.find('tokenizers/punkt')

except nltk.downloader.DownloadError:

nltk.download('punkt')

from nltk.tokenize import sent_tokenize

def chunk_by_sentences(text, max_chars=500, overlap_sentences=1):

sentences = sent_tokenize(text)

chunks = []

current_chunk = ""

for i, sentence in enumerate(sentences):

if len(current_chunk) + len(sentence) <= max_chars:

current_chunk += " " + sentence

else:

chunks.append(current_chunk.strip())

# Create overlap

start_index = max(0, i - overlap_sentences)

current_chunk = " ".join(sentences[start_index:i+1])

if current_chunk:

chunks.append(current_chunk.strip())

return chunks

long_text = "This is the first sentence. This is the second sentence, which is a bit longer. Now we have a third one. The fourth sentence follows. Finally, the fifth sentence concludes this paragraph."

chunks = chunk_by_sentences(long_text, max_chars=100)

for i, chunk in enumerate(chunks):

print(f"--- Chunk {i+1} ---")

print(chunk)

注意:处理中文时,nltk.tokenize.sent_tokenize 默认的英文模型会失效。必须采用适合中文的分割方法,例如基于中文标点符号(。!?)的正则表达式,或使用加载了中文模型的 spaCy 或 HanLP 等库。

结构感知分块

利用文档固有的结构信息(如标题、列表)作为分块边界,这种方法逻辑性强,能更好地保留上下文。

Markdown 文本分块

- 核心思想:根据

Markdown的标题层级来定义块的边界。 - 适用场景:格式规范的

Markdown文档,如GitHubREADME, 技术文档。

from langchain_text_splitters import MarkdownHeaderTextSplitter

markdown_document = """

# Chapter 1: The Beginning

## Section 1.1: The Old World

This is the story of a time long past.

## Section 1.2: A New Hope

A new hero emerges.

# Chapter 2: The Journey

## Section 2.1: The Call to Adventure

The hero receives a mysterious call.

"""

headers_to_split_on = [

("#", "Header 1"),

("##", "Header 2"),

]

markdown_splitter = MarkdownHeaderTextSplitter(headers_to_split_on=headers_to_split_on)

md_header_splits = markdown_splitter.split_text(markdown_document)

for split in md_header_splits:

print(f"Metadata: {split.metadata}")

print(split.page_content)

print("-" * 20)

对话式分块

- 核心思想:根据对话的发言人或轮次进行分块。

- 适用场景:客服对话、访谈记录、会议纪要。

dialogue = [

"Alice: Hi, I'm having trouble with my order.",

"Bot: I can help with that. What's your order number?",

"Alice: It's 12345.",

"Alice: I haven't received any shipping updates.",

"Bot: Let me check... It seems your order was shipped yesterday.",

"Alice: Oh, great! Thank you.",

]

def chunk_dialogue(dialogue_lines, max_turns_per_chunk=3):

chunks = []

for i in range(0, len(dialogue_lines), max_turns_per_chunk):

chunk = "\n".join(dialogue_lines[i:i + max_turns_per_chunk])

chunks.append(chunk)

return chunks

chunks = chunk_dialogue(dialogue)

for i, chunk in enumerate(chunks):

print(f"--- Chunk {i+1} ---")

print(chunk)

语义与主题分块

这类方法超越文本的物理结构,根据内容的语义进行切分。

语义分块

- 核心思想:计算相邻句子或段落的向量相似度,在语义发生突变(相似度低)的位置进行切分。

- 适用场景:知识库、研究论文等需要高精度语义内聚的文档。

import os

from langchain_experimental.text_splitter import SemanticChunker

from langchain_huggingface import HuggingFaceEmbeddings

os.environ["TOKENIZERS_PARALLELISM"] = "false"

embeddings = HuggingFaceEmbeddings(model_name="sentence-transformers/all-MiniLM-L6-v2")

# LangChain's SemanticChunker offers different threshold types:

# "percentile": Threshold based on the percentile of similarity score differences. Good for adaptability.

# "standard_deviation": Threshold based on standard deviation of similarity scores.

# "interquartile": Uses the interquartile range, robust to outliers.

# "gradient": Looks for sharp changes in similarity, useful for detecting abrupt topic shifts.

text_splitter = SemanticChunker(

embeddings,

breakpoint_threshold_type="percentile",

breakpoint_threshold_amount=95 # A higher percentile means it only breaks on very significant semantic shifts.

)

long_text = (

"The Wright brothers, Orville and Wilbur, were two American aviation pioneers "

"generally credited with inventing, building, and flying the world's first successful motor-operated airplane. "

"They made the first controlled, sustained flight of a powered, heavier-than-air aircraft on December 17, 1903. "

"In the following years, they continued to develop their aircraft. "

"Switching topics completely, let's talk about cooking. "

"A good pizza starts with a perfect dough, which needs yeast, flour, water, and salt. "

"The sauce is typically tomato-based, seasoned with herbs like oregano and basil. "

"Toppings can vary from simple mozzarella to a wide range of meats and vegetables. "

"Finally, let's consider the solar system. "

"It is a gravitationally bound system of the Sun and the objects that orbit it. "

"The largest objects are the eight planets, in order from the Sun: Mercury, Venus, Earth, Mars, Jupiter, Saturn, Uranus, and Neptune."

)

docs = text_splitter.create_documents([long_text])

for i, doc in enumerate(docs):

print(f"--- Chunk {i+1} ---")

print(doc.page_content)

print()

参数调优:SemanticChunker 的效果高度依赖 breakpoint_threshold_amount。这个阈值控制着“语义变化敏感度”。低阈值会产生大量小而内聚的块,高阈值则只在话题发生显著转变时才切分。需要根据文档内容反复实验。

基于主题的分块

- 核心思想:利用主题模型(如

LDA)或聚类算法,在文档的宏观主题发生转换时进行切分。 - 适用场景:长篇、多主题的报告或书籍。

import numpy as np

import re

from sklearn.feature_extraction.text import CountVectorizer

from sklearn.decomposition import LatentDirichletAllocation

import nltk

from nltk.corpus import stopwords

try:

stopwords.words('english')

except LookupError:

nltk.download('stopwords')

def lda_topic_chunking(text: str, n_topics: int = 3) -> list[str]:

# 1. Preprocessing: Treat each paragraph as a "document"

paragraphs = [p.strip() for p in text.split('\n\n') if p.strip()]

if len(paragraphs) <= 1:

return [text]

cleaned_paragraphs = [re.sub(r'[^a-zA-Z\s]', '', p).lower() for p in paragraphs]

# 2. Bag of Words + Stopword Removal

vectorizer = CountVectorizer(min_df=1, stop_words=stopwords.words('english'))

X = vectorizer.fit_transform(cleaned_paragraphs)

if X.shape == 0:

return paragraphs

# 3. LDA Topic Modeling

lda = LatentDirichletAllocation(n_components=n_topics, random_state=42)

lda.fit(X)

# 4. Determine dominant topic for each paragraph

topic_dist = lda.transform(X)

dominant_topics = np.argmax(topic_dist, axis=1)

# 5. Chunking based on topic changes

chunks = []

current_chunk_paragraphs = []

current_topic = dominant_topics

for i, paragraph in enumerate(paragraphs):

if dominant_topics[i] == current_topic:

current_chunk_paragraphs.append(paragraph)

else:

chunks.append("\n\n".join(current_chunk_paragraphs))

current_chunk_paragraphs = [paragraph]

current_topic = dominant_topics[i]

chunks.append("\n\n".join(current_chunk_paragraphs))

return chunks

注意:基于主题的分块对文本长度、主题区分度和预处理步骤非常敏感,且需要预设主题数。此方法更适合作为一种探索性工具,在主题边界清晰的长文档上使用。

高级分块策略

小-大分块 (Small-to-Big)

- 核心思想:使用小块(如句子)进行高精度检索,然后将包含该小块的原始大块(如段落)作为上下文送入

LLM。它结合了小块的高检索精度和大块的丰富上下文。 - 适用场景:需要高检索精度和丰富生成上下文的复杂问答场景。

在 LangChain 中,ParentDocumentRetriever 实现了这一思想。它在后台管理两个并行的处理流程:

- 将文档分割成大的“父块”。

- 进一步将每个父块分割成小的“子块”。

- 只对子块建立向量索引。

- 检索时,首先找到相关的子块,然后通过一个独立的

docstore提取它们对应的父块返回给LLM。

# from langchain.embeddings import OpenAIEmbeddings

# from langchain_text_splitters import RecursiveCharacterTextSplitter

# from langchain.retrievers import ParentDocumentRetriever

# from langchain_community.document_loaders import TextLoader

# from langchain_chroma import Chroma

# from langchain.storage import InMemoryStore

# Assume 'docs' are loaded documents

# parent_splitter = RecursiveCharacterTextSplitter(chunk_size=2000)

# child_splitter = RecursiveCharacterTextSplitter(chunk_size=400)

# vectorstore = Chroma(embedding_function=OpenAIEmbeddings(), collection_name="split_parents")

# store = InMemoryStore() # This store holds the parent documents

# retriever = ParentDocumentRetriever(

# vectorstore=vectorstore,

# docstore=store,

# child_splitter=child_splitter,

# parent_splitter=parent_splitter,

# )

# retriever.add_documents(docs)

# sub_docs = vectorstore.similarity_search("query") # Retrieves small chunks

# retrieved_docs = retriever.get_relevant_documents("query") # Retrieves large parent chunks

# print(retrieved_docs.page_content)

代理式分块 (Agentic Chunking)

- 核心思想:利用一个

LLMAgent来模拟人类的阅读理解过程,动态决定分块边界。例如,提示LLM将一段文本分解为多个“自包含的知识块”。 - 适用场景:实验性项目,或处理高度复杂、非结构化的文本。成本极高,稳定性待验证。

import textwrap

# from langchain_openai import ChatOpenAI

from langchain.prompts import PromptTemplate

from langchain_core.output_parsers import PydanticOutputParser

from pydantic import BaseModel, Field

from typing import List

class KnowledgeChunk(BaseModel):

chunk_title: str = Field(description="A concise title for this knowledge chunk.")

chunk_text: str = Field(description="A self-contained text extracted and synthesized from the original paragraph.")

representative_question: str = Field(description="A typical question that can be answered by this chunk.")

class ChunkList(BaseModel):

chunks: List[KnowledgeChunk]

parser = PydanticOutputParser(pydantic_object=ChunkList)

prompt_template = """

[ROLE]: You are a top-tier document analyst. Your task is to decompose complex text into a set of core, self-contained "Knowledge Chunks".

[TASK]: Read the provided text, identify the distinct core concepts, and create a knowledge chunk for each.

[RULES]:

1. Self-Contained: Each chunk must be understandable on its own.

2. Single Concept: Each chunk should focus on only one core idea.

3. Extract and Restructure: Pull all relevant sentences for a concept and combine them into a coherent paragraph.

4. Follow Format: Strictly adhere to the JSON format instructions below.

{format_instructions}

[TEXT TO PROCESS]:

{paragraph_text}

"""

prompt = PromptTemplate(

template=prompt_template,

input_variables=["paragraph_text"],

partial_variables={"format_instructions": parser.get_format_instructions()},

)

# The following part is a simulation, as it requires a running LLM model.

# model = ChatOpenAI(model="gpt-4", temperature=0.0)

# chain = prompt | model | parser

# result = chain.invoke({"paragraph_text": document_text})

混合分块:平衡效率与质量

在实践中,单一策略难以应对所有情况。混合分块是一种非常实用的技巧。

- 核心思想:先用一种宏观策略(如结构化分块)进行粗粒度切分,再对过大的块使用更精细的策略(如递归分块)进行二次切分。

- 适用场景:处理结构复杂且内容密度不均的文档。

from langchain_text_splitters import MarkdownHeaderTextSplitter, RecursiveCharacterTextSplitter

from langchain_core.documents import Document

markdown_document = """

# Chapter 1: Company Profile

Our company was founded in 2017...

## 1.1 Development History

The company has experienced rapid growth...

# Chapter 2: Core Technology

This chapter describes our core technologies in detail. Our framework is based on advanced distributed computing concepts... (A very long paragraph with multiple sentences describing different technical aspects like CNNs, Transformers, data pipelines, etc.)

## 2.1 Technical Principles

Our principles combine statistics, machine learning...

# Chapter 3: Future Outlook

Looking ahead, we will continue to invest in AI...

"""

def hybrid_chunking(

markdown_document: str,

coarse_chunk_threshold: int = 400,

fine_chunk_size: int = 100,

fine_chunk_overlap: int = 20

) -> list[Document]:

# 1. Coarse-grained splitting by structure

headers_to_split_on = [("#", "Header 1"), ("##", "Header 2")]

markdown_splitter = MarkdownHeaderTextSplitter(headers_to_split_on=headers_to_split_on)

coarse_chunks = markdown_splitter.split_text(markdown_document)

# 2. Fine-grained recursive splitter for oversized chunks

fine_splitter = RecursiveCharacterTextSplitter(

chunk_size=fine_chunk_size,

chunk_overlap=fine_chunk_overlap

)

final_chunks = []

for chunk in coarse_chunks:

if len(chunk.page_content) > coarse_chunk_threshold:

# If a chunk is too large, split it further

finer_chunks = fine_splitter.split_documents([chunk])

final_chunks.extend(finer_chunks)

else:

final_chunks.append(chunk)

return final_chunks

final_chunks = hybrid_chunking(markdown_document)

for i, chunk in enumerate(final_chunks):

print(f"--- Final Chunk {i+1} (Length: {len(chunk.page_content)}) ---")

print(f"Metadata: {chunk.metadata}")

print(chunk.page_content)

print("-" * 80)

如何选择最佳分块策略?

面对众多策略,合理的选择路径比逐一尝试更重要。建议遵循以下分层决策框架。

第一步:从基准策略开始

- 默认选项:

RecursiveCharacterTextSplitter。无论处理何种文本,这都是最稳妥的起点。用它建立一个性能基线。

第二步:检查结构化特征

- 优先选项:结构感知分块。如果文档具有明确的结构(

Markdown标题、HTML标签),切换到MarkdownHeaderTextSplitter等方法。这是成本最低、收益最明显的优化。

第三步:当精度成为瓶颈时

- 进阶选项:语义分块或小-大分块。如果基础和结构化策略的检索效果仍不理想,说明需要更高维度的语义信息。

SemanticChunker:适用于需要块内语义高度一致的场景。ParentDocumentRetriever(小-大分块):适用于既要保证检索精准度,又需要为LLM提供完整上下文的复杂问答场景。

第四步:应对极端复杂的文档

- 高级实践:混合分块。对于结构复杂、内容密度不均的文档,混合分块是平衡成本与效果的最佳实践。

下表总结了所有讨论过的分块策略。

| 分块策略 | 核心逻辑 | 优点 | 缺点与成本 |

|---|---|---|---|

| 固定长度分块 | 按固定字符数或 token 数切分 |

实现简单,速度快 | 容易破坏语义,效果最差 |

| 递归分块 | 按预定分隔符(段落、句子)递归切分 | 通用性强,较好地保留结构 | 对无规律文档效果一般 |

| 基于句子的分块 | 以句子为最小单元,再组合成块 | 保证句子完整性 | 单句上下文可能不足,需处理长句 |

| 结构化分块 | 利用文档固有结构(如标题)切分 | 逻辑性强,上下文清晰 | 强依赖文档格式,不通用 |

| 语义分块 | 根据局部语义相似度变化切分 | 块内概念高度内聚,检索精度高 | 计算成本高(Embedding 计算),依赖模型质量 |

| 基于主题的分块 | 利用主题模型按全局主题边界切分 | 块内信息高度相关 | 实现复杂,对数据和参数敏感,效果不稳定 |

| 混合分块 | 宏观粗分 + 微观细分 | 平衡效率与质量,实用性强 | 实现逻辑更复杂 |

| 小-大分块 | 检索用小块,生成用大块 | 结合高精度检索和丰富上下文 | 管道复杂,需要管理两套索引,存储成本翻倍 |

| 代理式分块 | AI 代理动态分析和切分文档 |

理论上效果最优 | 实验性,成本极高(API 调用),延迟大 |