AI applications are undergoing a fundamental shift, from plain API calls to Large Language Models (LLMs) toward autonomous, goal-driven agentic workflows. The open source community has played a key role in this wave, producing a wide range of AI tools focused on specific research tasks. These tools are no longer single models but complex systems that integrate planning, collaboration, information retrieval, and tool invocation. This article examines ten representative open source research assistants and analyzes their technical approaches, design philosophies, and strategic positioning in the AI ecosystem.

Multi-Agent Systems (MAS): Structured Collaboration and Dynamic Adaptation

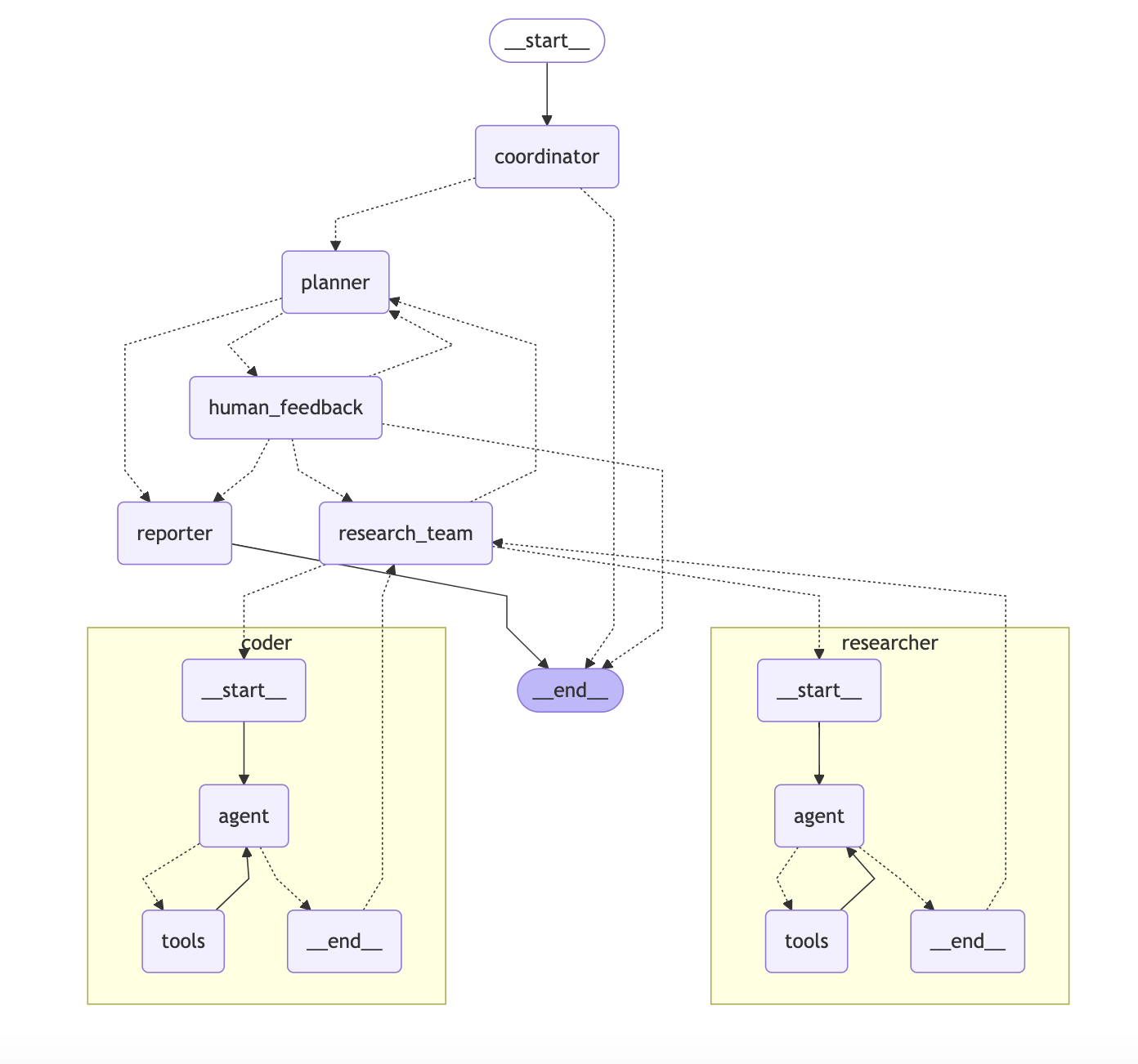

Multi-Agent Systems (MAS) tackle complex problems that a single agent struggles with by letting multiple independent agents work together. DeerFlow and OWL illustrate the two dominant implementation paths in this field: hierarchical and decentralized.

DeerFlow represents a hierarchical, structured model of collaboration. It splits the system into a Coordinator, a Planner, and a team of expert agents, much like a well-organized corporate structure. The workflow is deterministic: the Planner decomposes the task, the Coordinator assigns the subtasks, and the expert agents execute them. The advantage of this model is that it is efficient and predictable for clearly bounded tasks that decompose cleanly. For example, in a security audit of a large codebase, DeerFlow can reliably assign subtasks such as code analysis and vulnerability detection to agents with different roles and then aggregate their reports.

Project Address: https://github.com/bytedance/deer-flow
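The planner, coordinator, and expert flow described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not DeerFlow's actual code or API: the names `Subtask`, `plan`, `EXPERTS`, and `coordinate` are hypothetical, the plan is hard-coded, and plain functions stand in for LLM-backed agents.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Subtask:
    role: str         # which expert should handle this piece
    description: str  # what the expert is asked to do


def plan(task: str) -> List[Subtask]:
    """Planner: split a task into role-tagged subtasks (hard-coded here)."""
    return [
        Subtask("code_analysis", f"Summarize the structure of: {task}"),
        Subtask("vulnerability_detection", f"List risky patterns in: {task}"),
    ]


# Expert team: one plain function per role stands in for an LLM-backed agent.
EXPERTS: Dict[str, Callable[[str], str]] = {
    "code_analysis": lambda d: f"[code_analysis] done: {d}",
    "vulnerability_detection": lambda d: f"[vulnerability_detection] done: {d}",
}


def coordinate(task: str) -> str:
    """Coordinator: route each subtask to its expert and aggregate a report."""
    results = [EXPERTS[s.role](s.description) for s in plan(task)]
    return "\n".join(results)


report = coordinate("payments-service repository")
print(report)
```

Because the routing table and the plan are fixed up front, the same input always produces the same sequence of assignments, which is the predictability the hierarchical model trades flexibility for.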

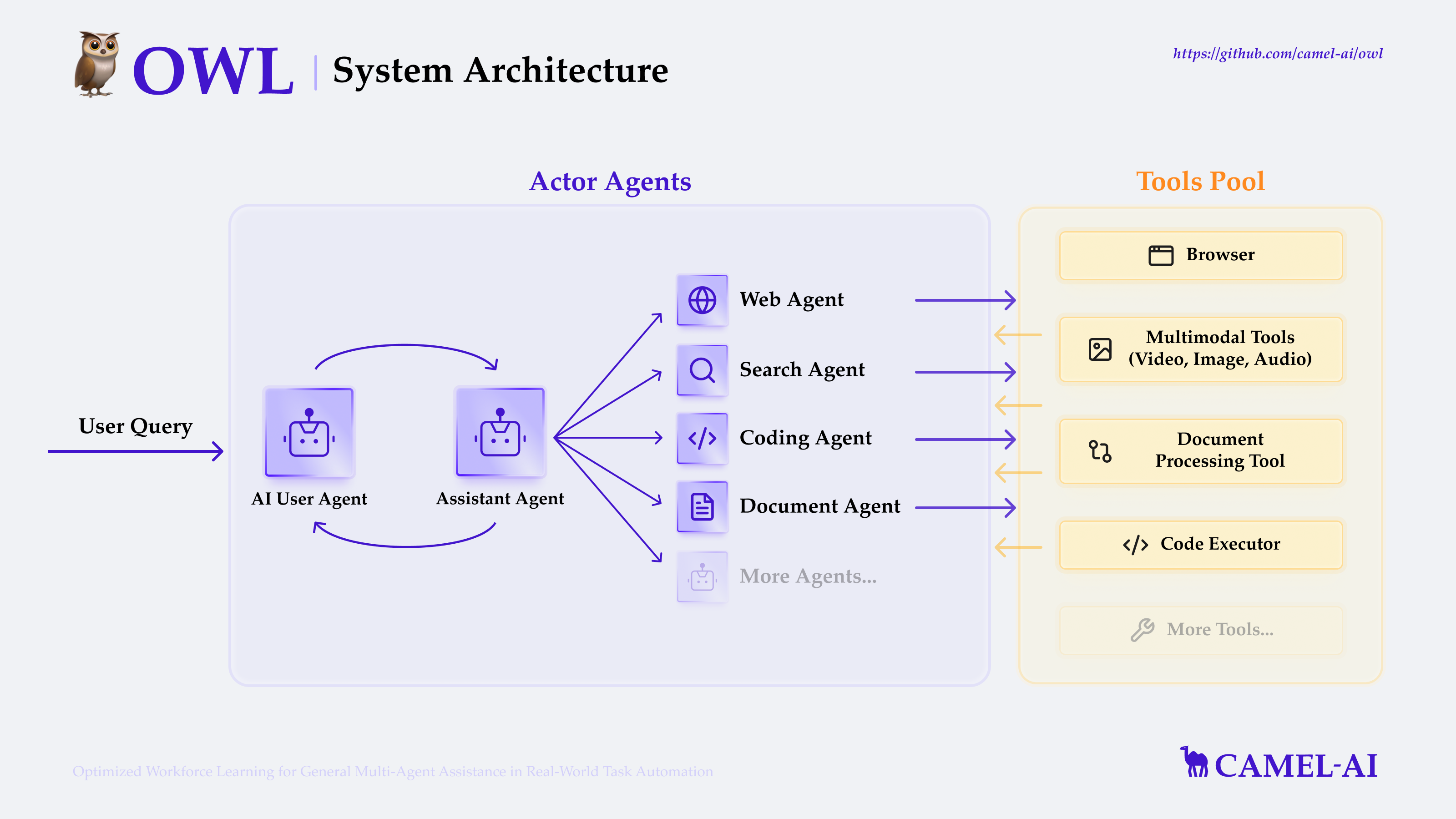

OWL, on the other hand, embodies the philosophy of decentralized, dynamically adaptive collaboration. It is built on the CAMEL-AI framework, whose core is "role-playing" and "communication and negotiation" among agents. Unlike the predefined division of labor in DeerFlow, the agents in OWL can dynamically negotiate and take on different roles according to the real-time progress of the task. This emergent collaboration model is well suited to open-ended, unstructured problems (e.g., interdisciplinary scientific exploration), where there is no clear path to a solution, because it lets the system discover an effective way to collaborate through the interactions between agents. OWL's flexibility comes at the expense of some predictability, which is a central trade-off in the design of multi-agent systems.

Project Address: https://github.com/camel-ai/owl
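To make the contrast with a fixed division of labor concrete, here is a deliberately simplified sketch of dynamic role assignment. It is not the CAMEL-AI or OWL API: the agents, the `SKILLS` scores, and the `negotiate` function are all hypothetical, and a numeric bid stands in for the natural-language negotiation that real agents would carry out.

```python
from typing import Dict, List

# Hypothetical skill scores: how well each agent fits each role.
SKILLS: Dict[str, Dict[str, float]] = {
    "agent_a": {"searcher": 0.9, "critic": 0.4, "writer": 0.5},
    "agent_b": {"searcher": 0.3, "critic": 0.8, "writer": 0.6},
}


def negotiate(needed_roles: List[str]) -> Dict[str, str]:
    """Assign each needed role to the agent that bids highest for it.

    Nobody holds a fixed role: the assignment is recomputed whenever the
    set of needed roles changes as the task progresses.
    """
    assignment = {}
    for role in needed_roles:
        bids = {agent: skills[role] for agent, skills in SKILLS.items()}
        assignment[role] = max(bids, key=bids.get)
    return assignment


# Early in the task the system needs searching and critique;
# later it needs critique and writing, so roles are renegotiated.
early = negotiate(["searcher", "critic"])
late = negotiate(["critic", "writer"])
print(early)
print(late)
```

The point of the sketch is only that role assignment is an output of the interaction rather than an input to it; which agent ends up writing is not known until the task reaches that stage.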

Advanced Retrieval Augmented Generation (Advanced RAG): From Information Retrieval to Knowledge Exploration

Retrieval-Augmented Generation (RAG) has become a standard technique for mitigating model hallucinations and bringing in up-to-date information. WebThinker and Search-R1 move beyond the simple "retrieve-generate" pattern toward more advanced "agentic exploration".

WebThinker's core breakthrough is giving the LLM the ability to browse autonomously. It goes beyond traditional RAG, which only extracts text fragments from search engine result pages (SERPs) or vector databases, by simulating how a human user clicks on links and digs deeper into web page content. Its closed-loop "think-search-write" process essentially generates a detailed reasoning trace, which is optimized through reinforcement learning. This lets it build a more comprehensive picture in tasks such as public opinion analysis or industry tracking, which require both depth and breadth of information.

Project Address: https://github.com/RUC-NLPIR/WebThinker
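The "think-search-write" loop and the reasoning trace it produces can be illustrated with a toy example. This is a sketch under strong assumptions, not WebThinker's implementation: the in-memory `WEB` dictionary, its URLs, and the `explore` function are made up, there is no LLM or real browser involved, and the "think" step always decides to follow every link.

```python
from typing import Dict, List, Tuple

# Tiny in-memory "web": URL -> (page text, outgoing links). Entirely made up.
WEB: Dict[str, Tuple[str, List[str]]] = {
    "serp://ev-batteries": ("Search results for EV batteries", ["site://overview"]),
    "site://overview": ("Overview: solid-state cells are emerging", ["site://details"]),
    "site://details": ("Details: pilot production is planned", []),
}


def explore(start_url: str, max_pages: int = 5) -> Tuple[str, List[str]]:
    """Follow links from a search page, drafting notes and logging each step."""
    trace: List[str] = []  # the reasoning trace: one entry per action
    notes: List[str] = []  # the draft, extended after each page is read
    frontier = [start_url]
    visited = set()
    while frontier and len(visited) < max_pages:
        url = frontier.pop(0)
        if url in visited:
            continue
        visited.add(url)
        text, links = WEB[url]
        trace.append(f"search: open {url}")
        notes.append(text)
        trace.append(f"write: noted {len(text)} characters")
        # "Think": decide whether to dig deeper; this toy always follows links.
        trace.append(f"think: {len(links)} link(s) to follow")
        frontier.extend(links)
    return "\n".join(notes), trace


draft, trace = explore("serp://ev-batteries")
print(draft)
```

A trace of this kind is what a reinforcement learning setup could score and optimize; here it is only collected, not learned from.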

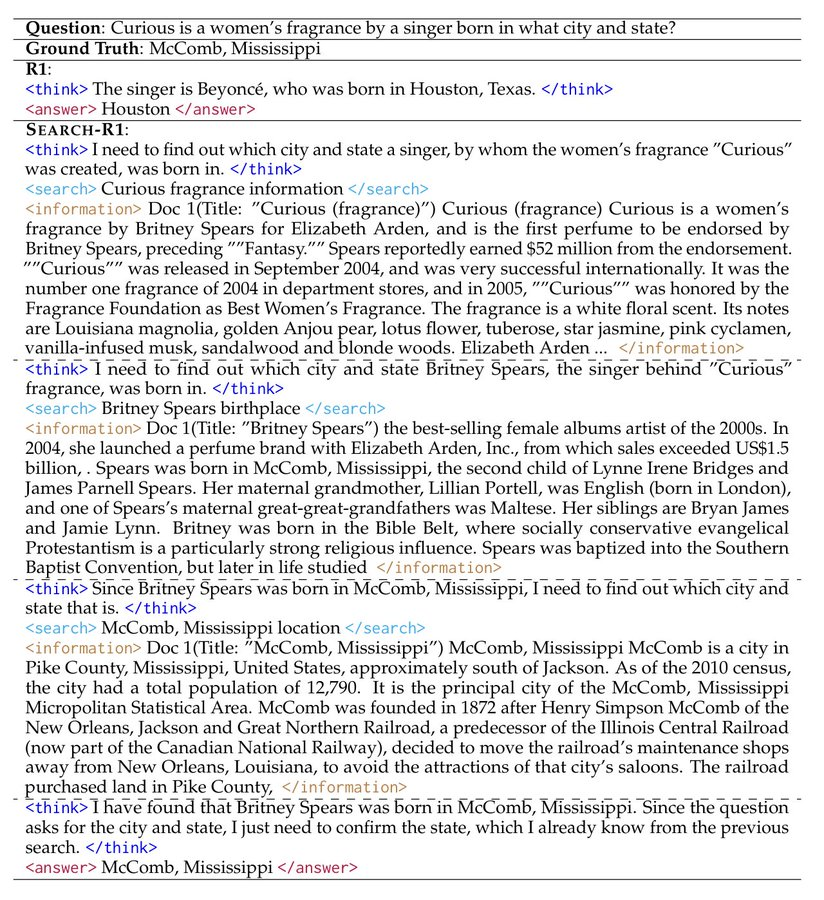

Search-R1 focuses on "meta-cognition for search". It is not a fixed search agent but a modular framework for building and evaluating search agents. Users can freely combine different large language models, such as LLaMA3 and Qwen2.5, with reinforcement learning algorithms, such as PPO and GRPO, much like choosing components when configuring a server. This meta-level abstraction makes it a powerful research tool: it lets developers explore the fundamental question of how AI learns to search rather than settling for the search results themselves.

Project Address: https://github.com/PeterGriffinJin/Search-R1
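The "mix and match" idea can be pictured as a small validated configuration object. The sketch below is purely illustrative and does not reflect Search-R1's real configuration files or command-line flags: `SearchAgentConfig`, its fields, and the `max_search_turns` knob are assumptions; only the model and algorithm names come from the text above.

```python
from dataclasses import dataclass

# Options named in the article; the real framework may support others.
SUPPORTED_MODELS = {"LLaMA3", "Qwen2.5"}
SUPPORTED_ALGORITHMS = {"PPO", "GRPO"}


@dataclass(frozen=True)
class SearchAgentConfig:
    """Hypothetical experiment description: one model plus one RL algorithm."""
    base_model: str
    rl_algorithm: str
    max_search_turns: int = 3  # assumed knob, for illustration only

    def __post_init__(self) -> None:
        if self.base_model not in SUPPORTED_MODELS:
            raise ValueError(f"unsupported model: {self.base_model}")
        if self.rl_algorithm not in SUPPORTED_ALGORITHMS:
            raise ValueError(f"unsupported algorithm: {self.rl_algorithm}")


# Enumerate every model/algorithm pairing as a separate experiment.
grid = [
    SearchAgentConfig(model, algo)
    for model in sorted(SUPPORTED_MODELS)
    for algo in sorted(SUPPORTED_ALGORITHMS)
]
for cfg in grid:
    print(cfg)
```

Treating the model and the training algorithm as independent axes is what turns a single agent into a framework for comparing agents.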

Edge Intelligence and Data Sovereignty: The Rise of Localized AI

As demands for data privacy and responsiveness grow, pushing AI computation from the cloud to edge devices has become a clear trend. Alita and AgenticSeek are representative solutions in this direction.

Alita's core idea is to simplify tool integration and reuse through standardized interfaces. The Model Context Protocol (MCP) that it builds on can be understood as an "Application Binary Interface (ABI) for AI tools". By defining a unified interaction format for different tools, Alita can add new tools and capabilities quickly, without a complex custom adapter for each one. This "plug-and-play" quality keeps its single-module reasoning architecture lightweight while leaving strong potential for self-evolution, which greatly lowers the barrier for individuals and small teams to build complex AI applications.

Project Address: https://github.com/CharlesQ9/Alita
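The value of a unified interaction format is easiest to see in code. The following is a minimal sketch, assuming every tool accepts a dictionary of arguments; it is not the MCP wire format, not an MCP SDK, and not Alita's code, and `ToolRegistry` with its methods is a hypothetical name chosen for illustration.

```python
from typing import Any, Callable, Dict, List

# Assumed uniform shape: every tool takes a dict of arguments, returns a value.
ToolFn = Callable[[Dict[str, Any]], Any]


class ToolRegistry:
    """Hypothetical registry; not the MCP SDK and not Alita's code."""

    def __init__(self) -> None:
        self._tools: Dict[str, ToolFn] = {}

    def register(self, name: str, fn: ToolFn) -> None:
        """Adding a tool needs no adapter: it only has to match ToolFn."""
        self._tools[name] = fn

    def list_tools(self) -> List[str]:
        return sorted(self._tools)

    def call(self, name: str, arguments: Dict[str, Any]) -> Dict[str, Any]:
        """Invoke a tool by name and wrap the outcome in one response shape."""
        if name not in self._tools:
            return {"ok": False, "error": f"unknown tool: {name}"}
        return {"ok": True, "result": self._tools[name](arguments)}


registry = ToolRegistry()
registry.register("word_count", lambda args: len(args["text"].split()))
registry.register("to_upper", lambda args: args["text"].upper())

print(registry.list_tools())
print(registry.call("word_count", {"text": "plug and play tools"}))
```

Because the caller only ever sees `list_tools` and `call`, a newly registered tool is usable immediately, which is the "plug-and-play" property described above.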

AgenticSeek takes data sovereignty to the extreme. It is an AI agent that runs entirely on the local device and does not rely on any cloud API calls, so data never leaves the machine. Its internal task-to-agent matching mechanism can be viewed as a lightweight, on-device analogue of a "Mixture of Experts (MoE)" model: it routes each request to the most appropriate local agent based on the type of task (browsing, coding, planning). This gives it a strong advantage when handling highly sensitive data, such as financial and medical records, or when working in offline environments.

Project Address: https://github.com/Fosowl/agenticSeek
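Task-to-agent matching can be sketched as a small router. This is an assumption-heavy illustration rather than AgenticSeek's actual mechanism: the keyword lists in `ROUTES`, the `route` function, and the choice of `planning` as the fallback are all hypothetical, and a real system may well use a learned classifier instead of keywords.

```python
from typing import Dict, Tuple

# Hypothetical keyword lists for the three task types named in the article.
ROUTES: Dict[str, Tuple[str, ...]] = {
    "browsing": ("search", "website", "look up"),
    "coding": ("code", "script", "bug"),
    "planning": ("plan", "schedule", "organize"),
}


def route(task: str, default: str = "planning") -> str:
    """Pick the agent whose keywords match the task most often."""
    lowered = task.lower()
    scores = {
        agent: sum(keyword in lowered for keyword in keywords)
        for agent, keywords in ROUTES.items()
    }
    best = max(scores, key=scores.get)
    # Fall back to a default agent when nothing matches at all.
    return best if scores[best] > 0 else default


for request in (
    "Fix the bug in this script",
    "Search the web for GPU prices",
    "Hello there",
):
    print(request, "->", route(request))
```

Everything here runs locally with no network call, which mirrors the constraint that the routing decision itself must not depend on a cloud service.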

Unlocking Model Capabilities: Supervised Fine-Tuning versus Reinforcement Learning

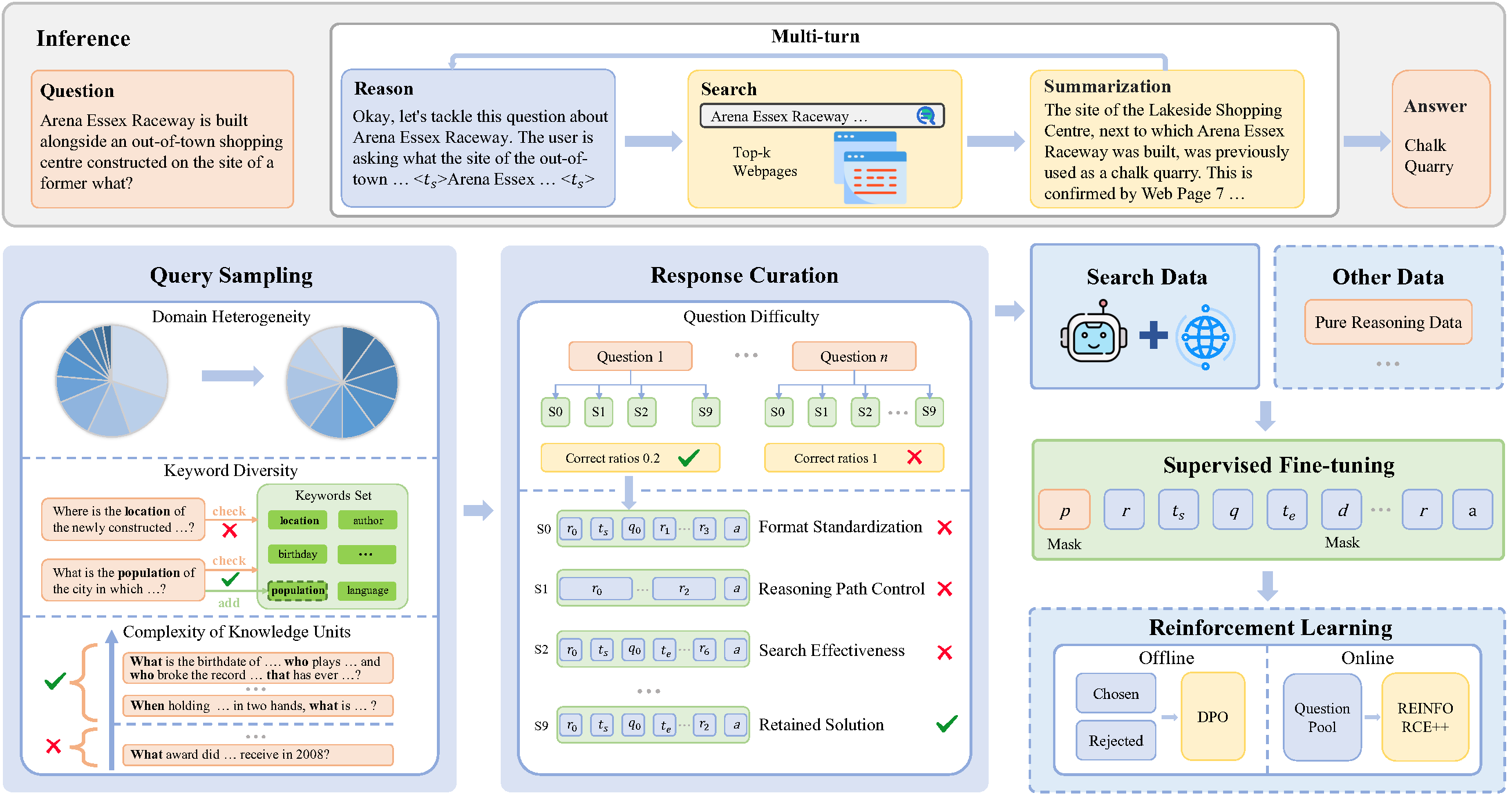

How to teach models new skills efficiently is a central question in AI. SimpleDeepSearcher and ReCall represent innovative applications of the two mainstream approaches, supervised fine-tuning and reinforcement learning, respectively.

SimpleDeepSearcher shows that high-quality Supervised Fine-Tuning (SFT) can also produce complex research capabilities that were previously thought to be achievable only with Reinforcement Learning (RL). The key to its success lies in how the training data is constructed. Instead of using simple (input, final output) pairs, it simulates real web page interactions to generate "reasoning trajectory" data that contains the intermediate steps and decisions. This kind of imitation learning of the "thinking process" is cheaper and more stable than exploring from scratch with reinforcement learning, and it offers a valuable shortcut for training high-performance specialized models with limited resources.

Project Address: https://github.com/RUCAIBox/SimpleDeepSearcher
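The difference between an (input, final output) pair and a reasoning trajectory is clearest when both are written out. The record layout, the step types, and the tag-style serialization below are assumptions made for illustration; they are not SimpleDeepSearcher's actual data schema.

```python
from typing import Any, Dict

# A plain SFT pair: the model only ever sees the question and the answer.
pair = {"input": "What is the capital of France?", "output": "Paris"}

# A trajectory sample (hypothetical layout): the intermediate steps are kept.
trajectory: Dict[str, Any] = {
    "question": "What is the capital of France?",
    "steps": [
        {"type": "think", "content": "I should look this up."},
        {"type": "search", "content": "capital of France"},
        {"type": "observe", "content": "Paris is the capital of France."},
    ],
    "answer": "Paris",
}


def to_training_text(sample: Dict[str, Any]) -> str:
    """Serialize a trajectory so the steps become part of the SFT target."""
    lines = [f"<question>{sample['question']}</question>"]
    for step in sample["steps"]:
        lines.append(f"<{step['type']}>{step['content']}</{step['type']}>")
    lines.append(f"<answer>{sample['answer']}</answer>")
    return "\n".join(lines)


training_text = to_training_text(trajectory)
print(training_text)
```

Fine-tuning on text like this asks the model to imitate the search and reasoning steps, not just the final answer, which is the property the paragraph above credits for the method's effectiveness.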

ReCall follows a pure reinforcement learning path and targets the general ability of LLMs to "learn to use tools". Its most compelling feature is that it does not require manually labeled tool-call data. The model interacts directly with the environment (e.g., API endpoints), receives feedback from the returned results (success, failure, error messages), and uses that feedback as a learning signal to keep improving its tool invocation strategy. This is loosely analogous to Reinforcement Learning from AI Feedback (RLAIF) in that no human labels are involved, and it enables models to learn on their own to invoke OpenAI-style tools in complex task flows. This capability is an important step on the road to artificial general intelligence (AGI).

Project Address: https://github.com/Agent-RL/ReCall
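How an environment can turn tool-call outcomes into a learning signal without human labels can be sketched as follows. This is an illustration of the idea as described above, not ReCall's reward design: the `TOOLS` table, the `execute` and `reward` functions, and the numeric reward values are all hypothetical.

```python
from typing import Any, Callable, Dict

# A toy environment with a single tool; real setups would expose API endpoints.
TOOLS: Dict[str, Callable[..., Any]] = {
    "add": lambda a, b: a + b,
}

# Assumed reward values, chosen only for illustration.
REWARDS = {"success": 1.0, "error": -0.5, "failure": -1.0}


def execute(call: Dict[str, Any]) -> Dict[str, Any]:
    """Run a model-proposed tool call and report what happened."""
    tool = TOOLS.get(call.get("name"))
    if tool is None:
        return {"status": "failure", "message": "unknown tool"}
    try:
        result = tool(**call.get("arguments", {}))
    except TypeError as exc:  # wrong or missing arguments
        return {"status": "error", "message": str(exc)}
    return {"status": "success", "result": result}


def reward(call: Dict[str, Any]) -> float:
    """Map the environment's feedback to a scalar learning signal."""
    return REWARDS[execute(call)["status"]]


good_call = {"name": "add", "arguments": {"a": 1, "b": 2}}
bad_args = {"name": "add", "arguments": {"a": 1}}
bad_tool = {"name": "multiply", "arguments": {"a": 1, "b": 2}}
print(reward(good_call), reward(bad_args), reward(bad_tool))
```

An RL algorithm would use these scalars to update the policy that proposes the calls; the sketch stops at computing the signal.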

End-to-End Platforms and Research Rigor

As the underlying capabilities mature, the next goals are to integrate multiple functions into an end-to-end platform and to ensure that the output is reliable.

Suna positions itself as an "AI-native workflow IDE". Instead of innovating on an underlying AI technology, it aims to improve the developer experience (DX). By integrating web browsing, file processing, command line execution, and even website deployment, Suna aims to eliminate the cost of switching between tools in a technical workflow. It is more like a Zapier or Make for developers, building AI capabilities into the entire process of project management and execution.

Project Address: https://github.com/kortix-ai/suna

DeepResearcher, on the other hand, directly addresses AI's biggest challenge in serious research: reliability. It uses end-to-end reinforcement learning to train models to follow a rigorous research strategy. Its core "self-reflection" mechanism embodies a kind of "epistemic humility". When the model still cannot reach a high-confidence conclusion after checking multiple sources, it chooses to "admit that it doesn't know" instead of confidently hallucinating, as many models do. This ability to recognize and admit uncertainty is a necessary quality for AI to move from "information generator" to trustworthy "research partner".

Project Address: https://github.com/GAIR-NLP/DeepResearcher
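The "admit that it doesn't know" behavior can be approximated with a simple abstention rule. This sketch is not DeepResearcher's mechanism, which is learned through reinforcement learning: here the share of sources that agree stands in for the model's confidence, and the `answer_or_abstain` function and the `min_agreement` threshold are hypothetical.

```python
from collections import Counter
from typing import List


def answer_or_abstain(source_answers: List[str], min_agreement: float = 0.6) -> str:
    """Return the majority answer only if enough sources agree on it."""
    if not source_answers:
        return "I don't know"
    top_answer, count = Counter(source_answers).most_common(1)[0]
    # Agreement ratio is a crude stand-in for a learned confidence estimate.
    confidence = count / len(source_answers)
    return top_answer if confidence >= min_agreement else "I don't know"


consistent = ["1998", "1998", "1999"]   # two of three sources agree
conflicting = ["1998", "1999", "2001"]  # no two sources agree
print(answer_or_abstain(consistent))
print(answer_or_abstain(conflicting))
```

Raising `min_agreement` makes the system abstain more often, which trades coverage for reliability.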