Qwen-Image-Edit is an AI image editing model developed by Alibaba's Tongyi Qianwen (Qwen) team. Built on the 20-billion-parameter Qwen-Image model, its core function is to let users modify images with simple text commands in Chinese or English. The model combines visual semantic understanding with visual appearance control, so it can follow high-level commands (e.g., "change the background to Antarctica") and also perform fine local modifications (e.g., "remove hair strands") while leaving the rest of the image as unchanged as possible. One of its standout features is powerful text editing: it can directly modify Chinese and English text in an image while retaining the original font style. The model is open-sourced on Hugging Face and other platforms under the Apache 2.0 license, which permits commercial use.

Try it online:

https://modelscope.cn/models/Qwen/Qwen-Image-Edit

Features

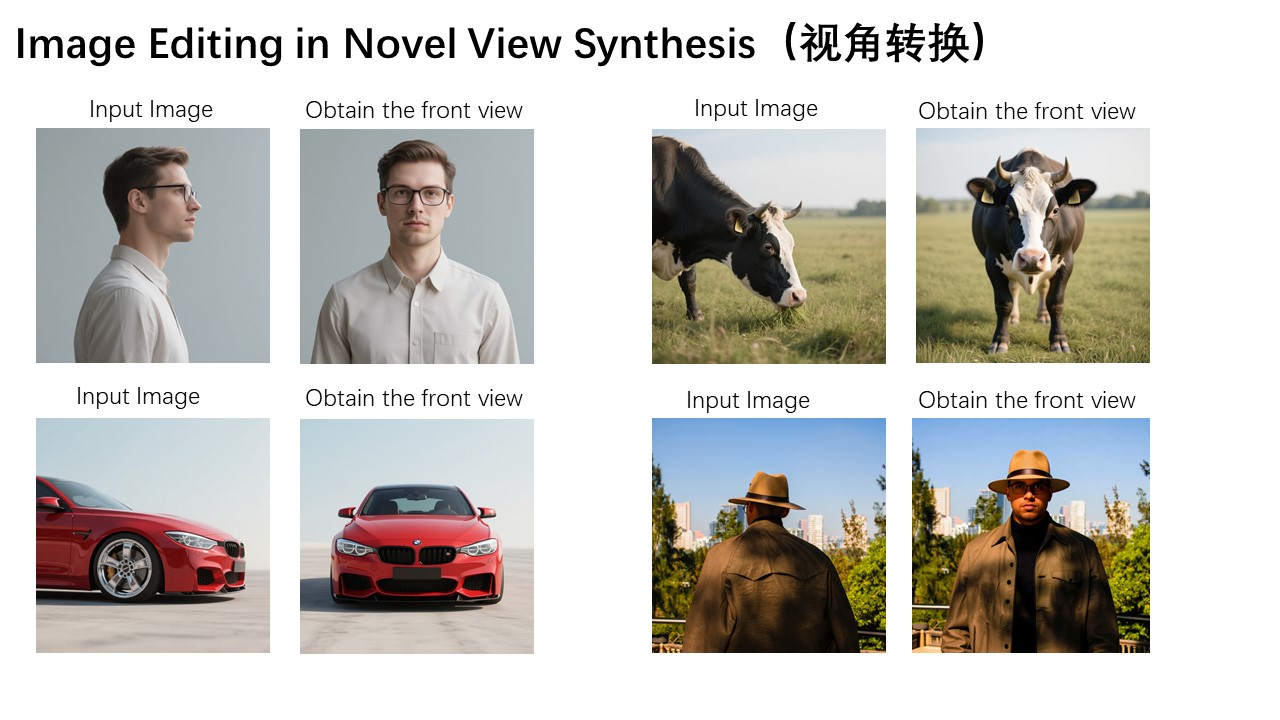

- Semantic editing: Supports high-level modifications that require an overall understanding of the image, such as converting a portrait into an artistic style (e.g., Studio Ghibli anime style), rotating an object in the picture to a new viewpoint (even by 180 degrees), or placing an IP character (e.g., a mascot) in different scenes and styles while preserving its characteristics.

- Appearance editing: Makes precise changes to localized areas of an image while leaving the rest unchanged, for example adding new objects to a scene (such as a signboard), removing unwanted elements (such as clutter or tiny strands of hair), or replacing a person's clothing or the background.

- Precise text editing: Adds, deletes, or modifies Chinese and English text directly in the image while trying to preserve the original font, size, and style.

- Chained editing: Supports refining an image step by step over multiple rounds of progressive commands until the desired result is reached. For example, in a generated calligraphy work, you can draw boxes around incorrect or unsatisfactory characters and correct them one at a time.
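Chained editing amounts to feeding each round's output back in as the next round's input. The sketch below assumes a hypothetical `edit_fn(image, prompt)` callable that performs a single edit (for instance, a thin wrapper around the diffusers pipeline described in the Python section); `chain_edit` is an illustrative helper, not part of any Qwen or diffusers API.

```python
from typing import Callable, List, Sequence, TypeVar

Img = TypeVar("Img")  # any image type, e.g. PIL.Image.Image


def chain_edit(
    edit_fn: Callable[[Img, str], Img],
    image: Img,
    prompts: Sequence[str],
) -> List[Img]:
    """Apply prompts one after another, feeding each result into the next round.

    Returns the result of every round, so a bad step can be inspected and
    redone starting from the previous image.
    """
    history: List[Img] = []
    current = image
    for prompt in prompts:
        current = edit_fn(current, prompt)  # one editing round
        history.append(current)
    return history


# Hypothetical usage with the diffusers pipeline (not executed here):
# steps = chain_edit(
#     lambda img, p: pipeline(image=img, prompt=p, num_inference_steps=50).images[0],
#     Image.open("./input.png").convert("RGB"),
#     ["Change the background to Antarctica", "Remove the strands of hair on the forehead"],
# )
```

Keeping the full history rather than only the final image makes it easy to go back one round and retry with a reworded command.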

How to Use

The Qwen-Image-Edit model can be used directly on platforms such as Hugging Face and Alibaba Cloud Model Studio (Bailian), and it also supports local deployment through code or tools such as ComfyUI.

1. Using it online in a Hugging Face Space

This is the simplest way to try the model and requires no programming knowledge.

- Open the model page: Go to the Qwen-Image-Edit page on Hugging Face (https://huggingface.co/Qwen/Qwen-Image-Edit).

- Find the inference interface: Look for "Use this model" or a similar entry on the page.

- Upload the source image: The interface has an image upload area; click "Drag image file here or click to browse from your device" to upload the image you want to edit.

- Enter the editing command: In the text box (usually labeled "Prompt" or similar), describe the change you want in simple, direct Chinese or English. For example, type "Change the rabbit's color to purple, with a flash light background." (turning the rabbit purple and giving it a lightning background).

- Generate the image: Click the "Compute" or "Generate" button and wait for the model to finish. The edited image then appears in the output area, and you can right-click it to save it.

2. Using Python code (the diffusers library)

If you have some programming experience, you can call the model through Hugging Face's diffusers library, which offers greater flexibility.

- Set up the environment: First make sure the required Python libraries are installed.

```bash
pip install torch transformers diffusers accelerate
```

To get support for the newest models, it is recommended to install diffusers directly from GitHub:

```bash
pip install git+https://github.com/huggingface/diffusers
```

- Write the calling code: The following is a basic usage example.

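```python
import os

import torch
from PIL import Image
from diffusers import QwenImageEditPipeline

# Load the model from the Hugging Face Hub (weights are downloaded automatically on first use)
pipeline = QwenImageEditPipeline.from_pretrained("Qwen/Qwen-Image-Edit")
# Use bfloat16 to roughly halve memory use compared with float32
pipeline.to(torch.bfloat16)
# Move the model to the GPU to speed up inference (requires a CUDA-capable GPU)
pipeline.to("cuda")

# Open the local source image
image = Image.open("./input.png").convert("RGB")
# Set the editing instruction (Chinese or English both work)
prompt = "Replace what the bear is holding with a drawing board and a paintbrush"

# Configure the generation parameters
inputs = {
    "image": image,
    "prompt": prompt,
    "generator": torch.manual_seed(0),  # fixed random seed so results are reproducible
    "true_cfg_scale": 4.0,  # classifier-free guidance scale (used together with negative_prompt)
    "negative_prompt": " ",  # leave blank or describe content you do not want to appear
    "num_inference_steps": 50,  # more steps may improve detail but take longer
}

# Run inference
with torch.inference_mode():
    output = pipeline(**inputs)

# Retrieve and save the generated image
output_image = output.images[0]
output_image.save("output_image_edit.png")
print("Image saved to:", os.path.abspath("output_image_edit.png"))
```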
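The example imports `QwenImageEditPipeline`, which older diffusers releases may not provide (hence the recommendation to install from GitHub). A minimal standard-library check is sketched below; the helper name `module_exposes` is hypothetical and not part of diffusers.

```python
import importlib


def module_exposes(module_name: str, attr: str) -> bool:
    """Return True if `module_name` imports cleanly and defines `attr`."""
    try:
        module = importlib.import_module(module_name)
    except Exception:  # ImportError if missing; other errors if the install is broken
        return False
    try:
        # Lazily loaded packages may raise while resolving the attribute
        return hasattr(module, attr)
    except Exception:
        return False
```

For example, if `module_exposes("diffusers", "QwenImageEditPipeline")` returns `False`, reinstalling diffusers from GitHub as described above is the first thing to try.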

3. Using the Alibaba Cloud Model Studio (Bailian) platform

Alibaba Cloud Model Studio provides API access, which suits developers who want to integrate the model into their applications.

- Activate the service: First, activate the model service in Alibaba Cloud Model Studio and obtain an API key.

- Configure the environment: Set the API key as an environment variable and, if needed, install the DashScope SDK (available for Python and Java).

- Call the API: Send an HTTP POST request to the designated API endpoint. The request body must contain the model name (qwen-image-edit), the input image (usually as a URL), and the text command.

For example, a request made with curl looks like this:

```bash
curl --location 'https://dashscope-intl.aliyuncs.com/api/v1/services/aigc/multimodal-generation/generation' \
--header 'Content-Type: application/json' \
--header "Authorization: Bearer $DASHSCOPE_API_KEY" \
--data '{
    "model": "qwen-image-edit",
    "input": {
        "messages": [
            {
                "role": "user",
                "content": [
                    { "image": "https://dashscope.oss-cn-beijing.aliyuncs.com/images/dog_and_girl.jpeg" },
                    { "text": "Change the person in the image to a standing pose, bending over to hold the front paws of the dog" }
                ]
            }
        ]
    },
    "parameters": {}
}'
```

If the call succeeds, the response contains the URL of the generated image. Note that this URL is valid for only 24 hours, so save the image promptly.
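The same request can also be sent from Python without the DashScope SDK. The sketch below uses only the standard library and mirrors the endpoint and request body of the curl example. The helper names are hypothetical, and the response layout assumed in `extract_image_urls` (`output.choices[*].message.content[*].image`) is an assumption to verify against the Model Studio documentation.

```python
import json
import os
import urllib.request
from typing import Any, Dict, List

# Endpoint copied from the curl example (international region)
ENDPOINT = (
    "https://dashscope-intl.aliyuncs.com/api/v1/services/aigc/"
    "multimodal-generation/generation"
)


def build_payload(image_url: str, instruction: str) -> Dict[str, Any]:
    """Build the same request body as the curl example."""
    return {
        "model": "qwen-image-edit",
        "input": {
            "messages": [
                {
                    "role": "user",
                    "content": [{"image": image_url}, {"text": instruction}],
                }
            ]
        },
        "parameters": {},
    }


def extract_image_urls(response: Dict[str, Any]) -> List[str]:
    """Collect result image URLs.

    ASSUMPTION: results appear under output.choices[*].message.content[*].image.
    """
    urls: List[str] = []
    for choice in response.get("output", {}).get("choices", []):
        for item in choice.get("message", {}).get("content", []):
            if "image" in item:
                urls.append(item["image"])
    return urls


def edit_image(image_url: str, instruction: str) -> List[str]:
    """POST the request (API key read from DASHSCOPE_API_KEY) and return result URLs."""
    request = urllib.request.Request(
        ENDPOINT,
        data=json.dumps(build_payload(image_url, instruction)).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {os.environ['DASHSCOPE_API_KEY']}",
        },
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=300) as resp:
        return extract_image_urls(json.load(resp))
```

Because the returned links expire, it makes sense to download right away, e.g. `urllib.request.urlretrieve(urls[0], "edited.png")` after `urls = edit_image(...)`; the `.png` file name is only an assumption about the returned format.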

Application Scenarios

- E-commerce

Merchants can quickly modify product images without re-shooting, for example changing product backgrounds to fit different promotional themes, fixing flaws in images, or changing the color of clothing in model shots.

- Social Media Content Creation

Users can easily add creative elements to their photos, change backgrounds, remove passersby, or convert photos into specific styles (e.g., anime or oil painting) to create more engaging content.

- Advertising & Design

Designers can use the model to quickly produce first drafts of designs and posters, for example modifying or adding promotional slogans and adjusting the color and position of elements directly in the image, which greatly improves efficiency.

- Personal Entertainment and IP Creation

Users can reimagine pictures of their pets or favorite characters, designing different expressions, outfits, and scenes for them to easily create personalized IP images.

Q&A

- Is the Qwen-Image-Edit model free?

The model is open-sourced under the Apache 2.0 license, so you can download and use it for free, including for commercial purposes. If you call it through a cloud platform such as Alibaba Cloud Model Studio, you may be charged according to that platform's pricing.

- What kinds of editing commands can the model handle?

It handles two main types. "Appearance editing" commands are very specific, such as "turn this flower blue" or "remove the streetlight in the upper-right corner of the picture". "Semantic editing" commands focus on creativity and style, such as "turn this photo into Van Gogh's style" or "make this character look happier". In addition, one of its most prominent abilities is accurately editing text within images.

- Do I need programming knowledge to use this model?

No. Ordinary users can work through a graphical interface, either in a Space provided by the Hugging Face community or with the "Image Edit" feature on the Tongyi Qianwen website, simply by uploading an image and entering text. Developers can integrate the model into their own programs with the official Python code or the API.

- How does it differ from other AI image editing tools?

Its main advantage is strong Chinese and English text rendering and editing, which allows direct, precise modification of text in an image, something many other models struggle with. In addition, its dual-path design (the input image is fed both to Qwen2.5-VL for semantic understanding and to a VAE encoder for appearance) lets it balance preserving the original image's details (appearance) with making creative changes (semantics).