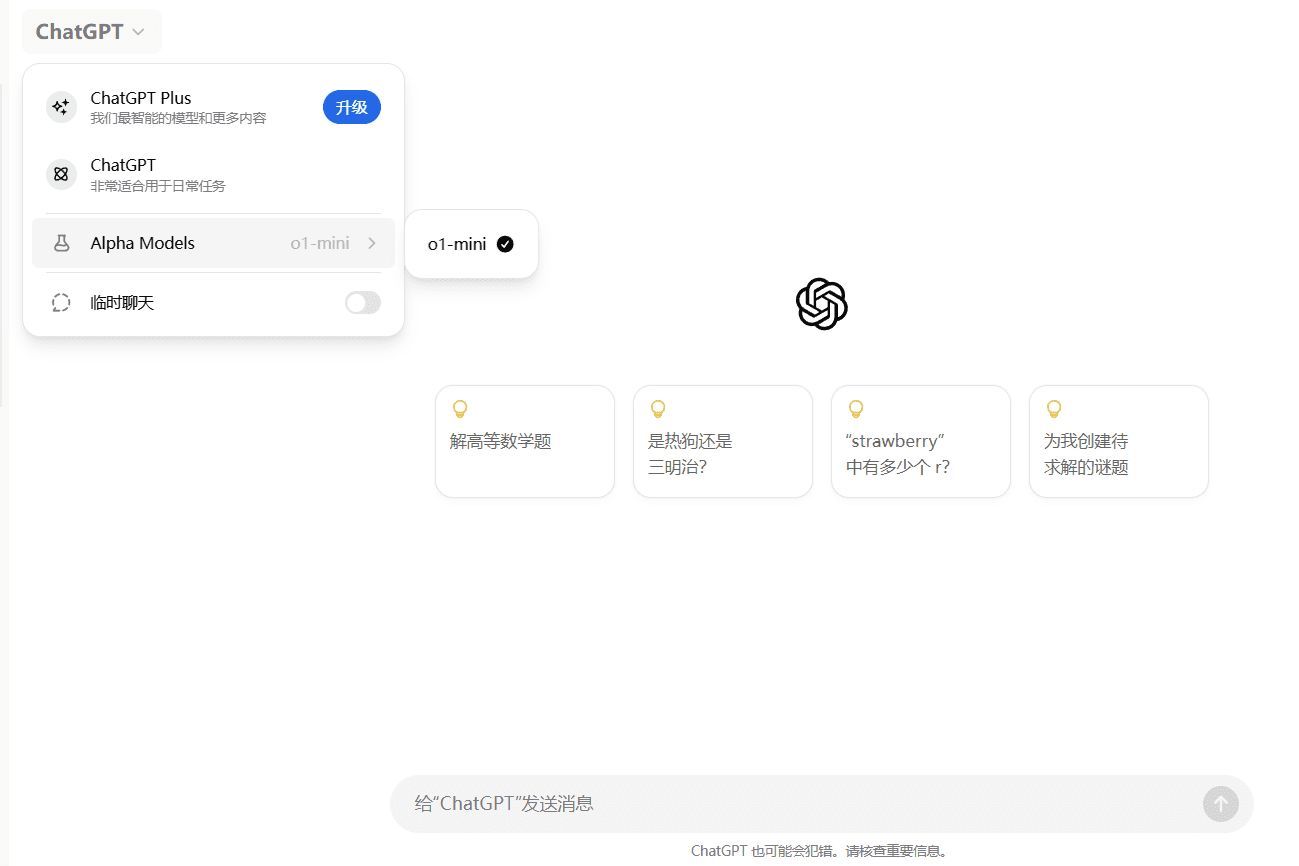

The o1 family consists of advanced reasoning models. The smaller o1-mini may be stronger than o1-preview at logical reasoning, although its world knowledge is more limited.

At present, o1-mini is available as a preview to only some free accounts. To check whether your account has access to o1-mini, try the following decoding question:

oyfjdnisdr rtqwainr acxz mynzbhhx -> Think step by step

Use the example above to decode:

oyekaijzdf aaptcg suaokybhai ouow aqht mynznvaatzacdfoulxxz
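The example pair is consistent with a simple rule: every two ciphertext letters encode one plaintext letter whose alphabet position (a=1 to z=26) is the average of the pair's positions. For example, "o" (15) and "y" (25) average to 20, which is "t". The article does not state this rule; it is inferred from the example. A minimal Python sketch under that assumption, using a hypothetical helper `decode_pair_average`, can be used to check a model's answer:

```python
import string

ALPHABET = string.ascii_lowercase  # "abcdefghijklmnopqrstuvwxyz"


def decode_pair_average(ciphertext: str) -> str:
    """Decode text where each plaintext letter is written as two letters.

    Assumed rule (inferred from the example, not stated in the source):
    the plaintext letter's position (a=1 to z=26) is the average of the
    positions of the two ciphertext letters.
    """
    decoded_words = []
    for word in ciphertext.lower().split():
        if len(word) % 2:
            raise ValueError(f"odd-length word cannot be split into pairs: {word!r}")
        letters = []
        for i in range(0, len(word), 2):
            first = ALPHABET.index(word[i]) + 1
            second = ALPHABET.index(word[i + 1]) + 1
            total = first + second
            if total % 2:
                raise ValueError(f"pair {word[i:i + 2]!r} has no whole-number average")
            letters.append(ALPHABET[total // 2 - 1])
        decoded_words.append("".join(letters))
    return " ".join(decoded_words)


# Sanity check against the worked example from the article.
print(decode_pair_average("oyfjdnisdr rtqwainr acxz mynzbhhx"))  # prints: think step by step
```

Running the same function on the second string gives the plaintext a model should arrive at, so the reply can be compared against it.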

The validation question above comes from "OpenAI o1 Reasoning Ability Learning for Large Language Models". For more information on the o1-mini model, see "Introduction to the OpenAI o1-mini Large Model".

If you don't have a free ChatGPT account, or your account does not have o1-mini access, you can try it through ChatGPT Mirror Station (domestic access to GPT-4 series models).

Key points about the OpenAI o1 models

Model names and reasoning paradigm

- OpenAI o1 represents a new level of AI capability, so the version counter was reset to 1

- "Preview" indicates that this is an early version of the full model.

- "Mini" indicates that this is a smaller version of the o1 model, optimized for speed

- The "o" stands for OpenAI

- o1 is not a "system" but a model trained to generate a long chain of thought before providing its final answer

- The icon of o1 symbolically represents an alien with extraordinary abilities

o1 model size and performance

- o1-mini is smaller and faster than o1-preview, so it will be available to free users in the future

- o1-preview is an early checkpoint of the o1 model itself, neither a larger nor a smaller variant

- o1-mini performs better in STEM tasks but is limited in world knowledge

- On some tasks, especially code-related ones, o1-mini performs better than o1-preview

- Input tokens for o1 are counted the same way as for GPT-4o, using the same tokenizer

- Compared to o1-preview, o1-mini can explore more chains of thought

Input Context and Model Capabilities

- o1 models will soon support larger input contexts

- o1 models can handle longer, more open-ended tasks, with less need than GPT-4o to chunk inputs

- o1 can generate long chains of reasoning before providing an answer, unlike previous models

- It is not currently possible to pause the model partway through its chain of thought to add more context, but this capability is being explored for future models

Tools, Features and Upcoming Features

- o1-preview does not currently use tools; support for function calling, code interpreter, and browsing is planned

- Tool support, structured outputs, and system prompts will be added in future updates

- Users may eventually be able to control thinking time and token limits

- Streaming support is planned, and ways to show reasoning progress in the API are being considered

- o1 has multimodal capabilities built in, with the goal of state-of-the-art performance on benchmarks such as MMMU

CoT (chain-of-thought) reasoning

- o1 generates a hidden chain of thought during inference

- There are no plans to expose CoT tokens to API users or in ChatGPT

- CoT tokens are summarized, but the summary is not guaranteed to be fully faithful to the actual reasoning process

- The instructions in the prompt can influence the way the model thinks about the problem

- Reinforcement learning (RL) was used to strengthen o1's CoT ability; GPT-4o cannot match that CoT performance through prompting alone

- Although the reasoning phase may appear slower because it summarizes the reasoning process, generating the answer itself is usually faster

API and usage restrictions

- o1-mini has a weekly limit of 50 prompts for ChatGPT Plus users

- All prompts count the same in ChatGPT

- More API access tiers and higher limits to be rolled out over time

- Prompt caching in the API is a popular request, but there is no timeline yet

Pricing, fine-tuning and scaling

- o1 model pricing is expected to follow the trend of prices falling every 1-2 years

- Batch API pricing will be supported once rate limits increase

- Fine-tuning is planned, but the timetable has not yet been finalized

- Scaling o1 is bottlenecked by research and engineering talent

- The new scaling paradigm for inference compute may lead to significant improvements in future generations of models

- Inverse scaling is not significant at this time, although on personal writing prompts o1-preview performs only slightly better than GPT-4o (or even slightly worse)

Model Development and Research Insights

- o1's reasoning skills come from reinforcement learning training

- The model demonstrates creative thinking and excels at lateral-thinking tasks such as poetry

- o1's philosophical and general reasoning skills are impressive, for example in deciphering ciphers

- Researchers used o1 to build a GitHub bot that pings the right CODEOWNERS for code reviews

- In internal testing, o1 posed difficult questions to itself to assess its own abilities

- Broader world knowledge is being added and will improve in future releases

- More recent training data is planned for o1-mini (its current knowledge cutoff is October 2023)

Prompting Tips and Best Practices

- o1 benefits from prompts that describe edge cases or the desired reasoning style

- o1 models respond better to reasoning cues in prompts than earlier models

- Providing relevant context in Retrieval-Augmented Generation (RAG) improves performance; irrelevant chunks may degrade reasoning
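The RAG tip above amounts to filtering retrieved context before it reaches the model. A minimal Python sketch of that filtering step, assuming a crude word-overlap (Jaccard) score in place of a real embedding-based retriever; the helpers `select_relevant_chunks` and `build_prompt`, and the `min_score` and `top_k` thresholds, are hypothetical and not part of any OpenAI API:

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercased word set; a crude stand-in for an embedding model."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def relevance(query: str, chunk: str) -> float:
    """Jaccard overlap between the query and chunk word sets, from 0.0 to 1.0."""
    q, c = tokenize(query), tokenize(chunk)
    if not q or not c:
        return 0.0
    return len(q & c) / len(q | c)


def select_relevant_chunks(query: str, chunks: list[str],
                           min_score: float = 0.1, top_k: int = 3) -> list[str]:
    """Drop chunks scoring below min_score, then keep the top_k best, best first."""
    scored = [(relevance(query, chunk), chunk) for chunk in chunks]
    kept = [pair for pair in scored if pair[0] >= min_score]
    kept.sort(key=lambda pair: pair[0], reverse=True)  # stable: ties keep input order
    return [chunk for _, chunk in kept[:top_k]]


def build_prompt(query: str, chunks: list[str]) -> str:
    """Number the selected chunks and place them before the question."""
    context = "\n\n".join(f"[{i}] {chunk}" for i, chunk in enumerate(chunks, start=1))
    return f"Context:\n{context}\n\nQuestion: {query}"


# Toy example: the cafeteria chunk is irrelevant and falls below min_score.
chunks = [
    "o1-mini has a knowledge cutoff of October 2023.",
    "The office cafeteria closes at 3 pm.",
    "o1-mini is optimized for STEM and coding tasks.",
]
question = "What is the o1-mini knowledge cutoff?"
print(build_prompt(question, select_relevant_chunks(question, chunks)))
```

A fixed score threshold plus a top-k cap is only one simple design; with a real retriever, the threshold would need tuning for whatever similarity score it produces.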

General feedback and future improvements

- o1-preview is less restrictive due to being in an early testing phase, but will increase the number of

- Latency and inference times are being actively improved

Notable model capabilities

- o1 can think about philosophical questions such as "What is life?"

- Researchers find o1 excels at handling complex tasks and extensive reasoning from limited instructions

- o1's creative reasoning, such as assessing its own abilities by posing questions to itself, demonstrates a high level of problem-solving skill