The following focuses on the basic idea of prompt engineering and how it can improve the performance of large language models (LLMs)...

The LLM interface: One of the key reasons large language models are so popular is that their text-to-text interface makes them extremely simple to use. In the past, solving a task with deep learning usually required fine-tuning on at least some data to teach the model how to solve that task, and most models were specialized for a single task. Now, thanks to the excellent in-context learning abilities of LLMs, we can solve a variety of problems with a single textual prompt. The originally complex problem-solving process has been abstracted away by language!

"Prompt engineering is a relatively new discipline focused on the efficient use of language models to develop and optimize prompts for a variety of applications and research topics." - Cited in [1]

What is prompt engineering? The simplicity of LLMs makes them accessible to more people. You don't need to be a data scientist or a machine learning engineer to use an LLM; you only need to understand English (or any language of your choice) to solve relatively complex problems with one! However, when an LLM is asked to solve a problem, the result often depends heavily on the textual prompt we give the model. As a result, prompt engineering (i.e., the empirical practice of testing different prompts to optimize an LLM's performance) has become very popular and influential, and it has produced many techniques and best practices.

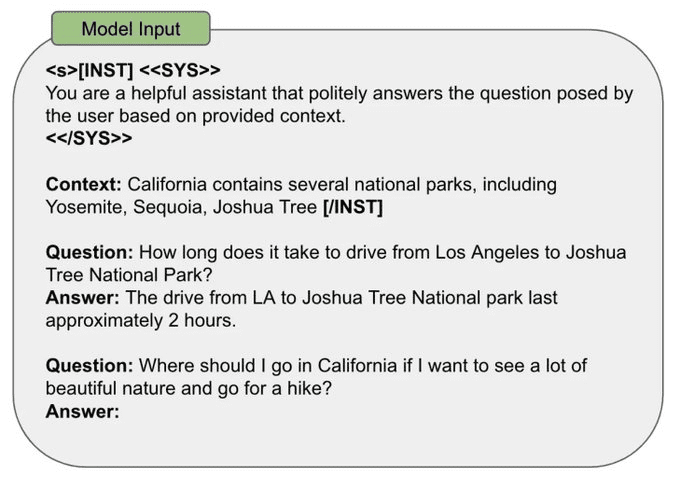

How to design a prompt: There are many ways to design LLM prompts. However, most prompting strategies share a few common components:

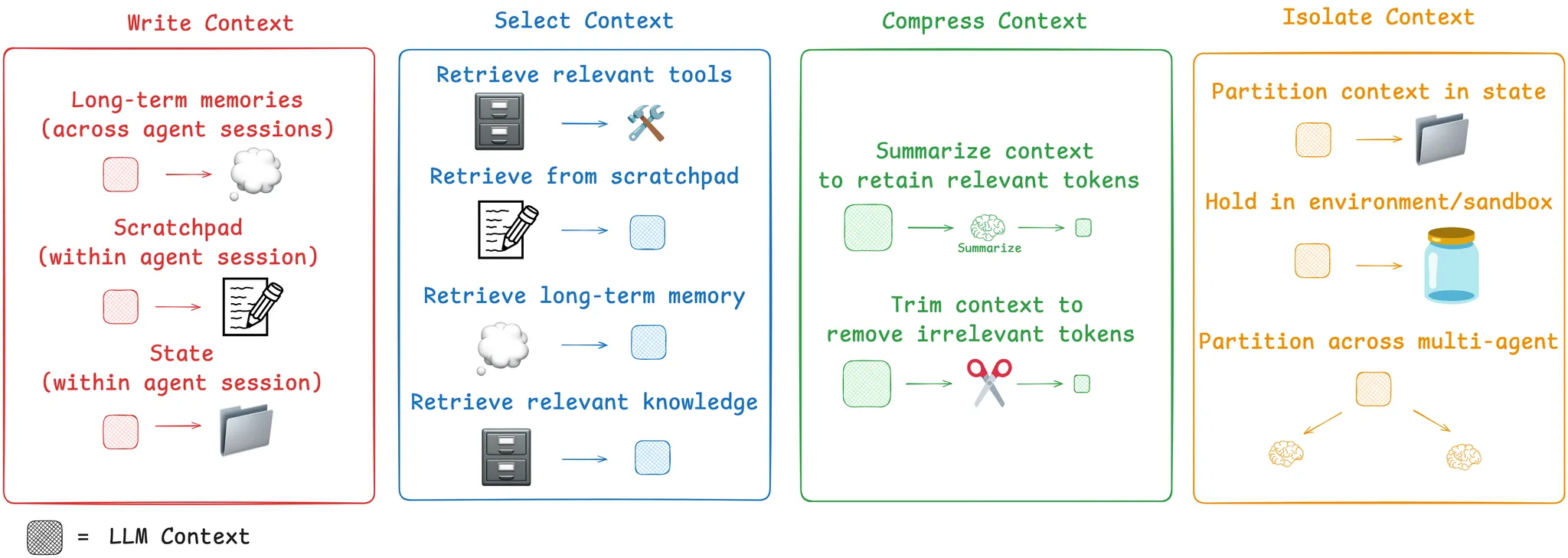

- Input Data: the actual data that the LLM needs to process (e.g., sentences to be translated or classified, documents to be summarized, etc.).

- Exemplars: examples of correct input-output pairs included in the prompt.

- Instruction: a textual description of the output expected from the model.

- Indicators (labels): tags or formatting elements that create structure in the prompt.

- Context: any additional information given to the LLM in the prompt.

The following illustration gives an example of how all of the prompt components mentioned above can be combined in a single sentence-classification prompt.

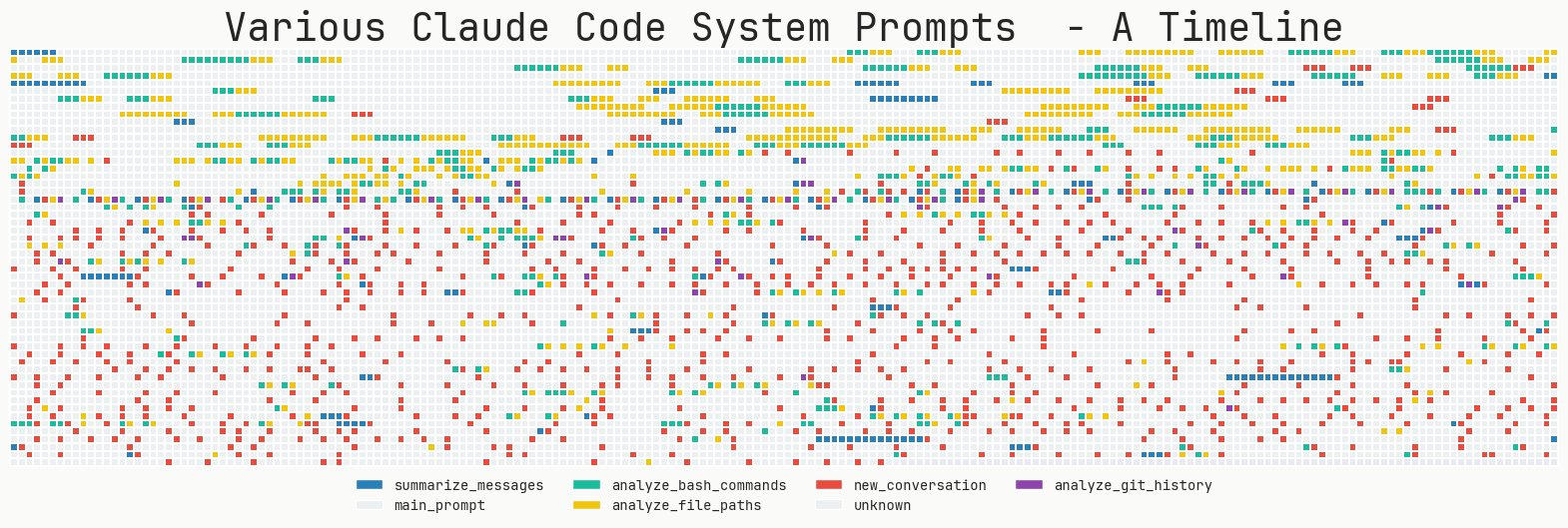

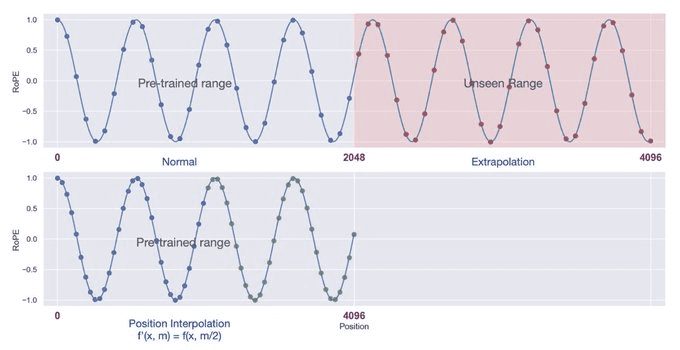
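As a rough sketch of how the five components can be assembled in code, the snippet below builds a sentence-classification prompt. The `PromptParts` class, the `build_prompt` function, and the `Instruction:` / `Context:` / `Sentence:` / `Label:` indicators are all assumptions made for illustration; they are not taken from the figures above or from any particular library.

```python
from dataclasses import dataclass, field


@dataclass
class PromptParts:
    """Hypothetical container for the prompt components listed above."""
    instruction: str
    input_data: str
    context: str = ""
    exemplars: list[tuple[str, str]] = field(default_factory=list)


def build_prompt(parts: PromptParts) -> str:
    """Join the components into one prompt, using plain-text indicators."""
    sections = [f"Instruction: {parts.instruction}"]
    if parts.context:
        sections.append(f"Context: {parts.context}")
    # Each exemplar is a correct (sentence, label) pair.
    for sentence, label in parts.exemplars:
        sections.append(f"Sentence: {sentence}\nLabel: {label}")
    # The input data comes last and ends with an open "Label:" for the model to complete.
    sections.append(f"Sentence: {parts.input_data}\nLabel:")
    return "\n\n".join(sections)


parts = PromptParts(
    instruction="Classify the sentiment of the sentence as positive or negative.",
    input_data="The plot dragged on forever.",
    context="The sentences are taken from movie reviews.",
    exemplars=[("I loved every minute of it.", "positive")],
)
print(build_prompt(parts))
```

Keeping the components separate until the last step makes it easy to add or drop one of them (for example, the exemplars) and measure the effect.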

Context window: During pre-training, the LLM sees input sequences of a specific length. This sequence length, chosen during pre-training, is the model's "context length": the maximum sequence length the model can handle. Given a piece of text significantly longer than this predetermined context length, the model may behave unpredictably and produce incorrect output. In practice, however, there are methods, such as Self-Extend [2] or position interpolation [3], that can be used to extend the model's context window.

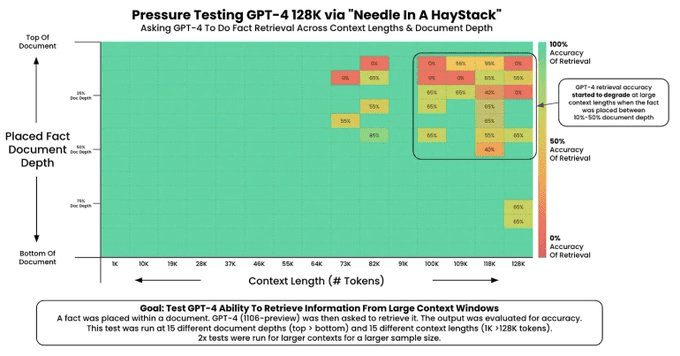
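To give a feel for one of these methods, here is a deliberately simplified sketch of the core idea behind position interpolation [3]: instead of feeding the model position indices it never saw during pre-training, the indices of a longer sequence are linearly rescaled to fit inside the trained range. The function name and the toy lengths are assumptions for illustration; the actual method applies this rescaling inside the model's rotary position embeddings, which is not shown here.

```python
def interpolated_positions(seq_len: int, trained_len: int) -> list[float]:
    """Rescale position indices 0..seq_len-1 so they stay inside the trained range.

    Sequences that already fit within trained_len are left unchanged (scale = 1).
    """
    if seq_len <= 0 or trained_len <= 0:
        raise ValueError("lengths must be positive")
    scale = min(1.0, trained_len / seq_len)
    return [i * scale for i in range(seq_len)]


# Toy example: a model trained on 4 positions receives a sequence of 8 tokens.
positions = interpolated_positions(seq_len=8, trained_len=4)
print(positions)  # [0.0, 0.5, 1.0, 1.5, 2.0, 2.5, 3.0, 3.5]
```

Every rescaled index stays below the trained length, so the model only sees positions inside the range covered in pre-training, at the cost of neighboring tokens sitting closer together.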

Recent research on LLMs has emphasized the creation of long context windows, which allow the model to process more information per prompt (e.g., more exemplars or more context). However, not all LLMs process the information in their context perfectly! The ability of an LLM to use information in a long context window is usually evaluated with the "needle in a haystack" test [4], which works as follows:

1. Embed a random fact in the context.

2. Ask the model to retrieve this fact.

3. Repeat the test across a variety of context lengths and positions of the fact within the context.

This test produces a figure like the one below (source [4]), in which problems with the context window are easy to spot.
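The three steps of the test can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the implementation from [4]: the function names, the filler sentence, and the "secret code" fact are invented here, and `toy_model` is a stand-in for a real LLM call that only "sees" the last 400 characters of its prompt.

```python
from typing import Callable


def build_haystack(filler: str, needle: str, n_sentences: int, depth: float) -> str:
    """Step 1: embed the needle at a relative depth (0.0 = start, 1.0 = end)."""
    sentences = [filler] * n_sentences
    sentences.insert(int(depth * n_sentences), needle)
    return " ".join(sentences)


def run_needle_test(
    model: Callable[[str], str],
    needle: str,
    question: str,
    expected: str,
    lengths: list[int],
    depths: list[float],
) -> dict[tuple[int, float], bool]:
    """Steps 2 and 3: query the model for every (context length, depth) pair."""
    results = {}
    for n in lengths:
        for depth in depths:
            context = build_haystack("The sky was grey that day.", needle, n, depth)
            answer = model(f"{context}\n\nQuestion: {question}\nAnswer:")
            results[(n, depth)] = expected.lower() in answer.lower()
    return results


def toy_model(prompt: str, window: int = 400) -> str:
    """Stand-in for an LLM: it only 'sees' the last `window` characters."""
    return "7421" if "7421" in prompt[-window:] else "I don't know."


results = run_needle_test(
    toy_model,
    needle="The secret code is 7421.",
    question="What is the secret code?",
    expected="7421",
    lengths=[5, 50],
    depths=[0.0, 0.5, 1.0],
)
for (n, depth), found in sorted(results.items()):
    print(f"sentences={n:3d} depth={depth:.1f} found={found}")
```

With a real model, the resulting grid of pass/fail values is what gets plotted as a heatmap over context length and needle position.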

My prompt engineering strategy: The details of prompt engineering vary depending on the model used. However, some general principles can serve as a guide throughout the process:

- Take an empirical approach: the first step in prompt engineering is to set up a reliable way of evaluating your prompt (e.g., through test cases, human evaluation, or LLM-as-a-judge) so that the effect of each modification to the prompt can be measured easily.

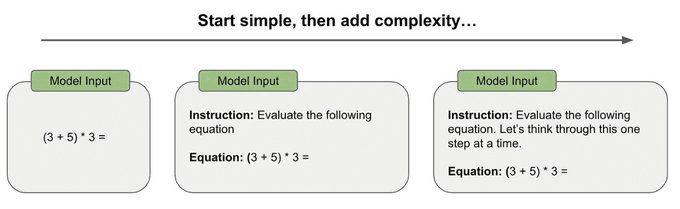

- Move from simple to complex: the first prompt you try should be as simple as possible, rather than a complex chain-of-thought prompt or another specialized prompting technique. Then increase complexity gradually while measuring the change in performance to determine whether the additional complexity is needed.

- Be as specific and direct as possible: make every effort to eliminate ambiguity in the prompt, and be as concise, direct, and specific as possible when describing the expected output.

- Include exemplars: if the expected output is difficult to describe, try including exemplars in the prompt. They provide concrete instances of the expected output and can remove ambiguity.

- Avoid complexity wherever possible: complex prompting strategies are sometimes necessary (e.g., to solve multi-step reasoning problems), but we should think twice before adopting them. Take an empirical view and use your established evaluation strategy to judge whether the extra complexity is really needed.

To summarize all of the above, my personal prompt engineering strategy is to i) commit to a good evaluation framework, ii) start with a simple prompt, and iii) slowly add complexity as needed to achieve the desired level of performance.
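The strategy can be illustrated with a small evaluation loop: score each prompt on a fixed set of test cases, start from the simplest template, and keep a more complex one only if it measurably helps. Everything in this sketch is an assumption made for illustration, including the function names, the exact-match metric, the two templates, and `toy_model`, which stands in for a real LLM and answers cleanly only when asked for one word.

```python
from typing import Callable

TestCase = tuple[str, str]  # (input text, expected label)


def accuracy(model: Callable[[str], str], template: str, cases: list[TestCase]) -> float:
    """Fraction of test cases where the model output exactly matches the label."""
    correct = 0
    for text, expected in cases:
        prediction = model(template.format(text=text)).strip().lower()
        correct += prediction == expected.lower()
    return correct / len(cases)


def pick_prompt(
    model: Callable[[str], str],
    templates: list[str],
    cases: list[TestCase],
    min_gain: float = 0.0,
) -> tuple[str, float]:
    """Try templates from simplest to most complex; keep a more complex one
    only if it beats the best score so far by more than min_gain."""
    best, best_score = templates[0], accuracy(model, templates[0], cases)
    for template in templates[1:]:
        score = accuracy(model, template, cases)
        if score > best_score + min_gain:
            best, best_score = template, score
    return best, best_score


def toy_model(prompt: str) -> str:
    """Stand-in for an LLM: verbose unless the prompt asks for one word."""
    label = "positive" if "love" in prompt.lower() else "negative"
    if "one word" in prompt.lower():
        return label
    return f"I think the sentiment is {label}."


SIMPLE = "Classify the sentiment: {text}"
SPECIFIC = (
    "Classify the sentiment of the sentence as positive or negative. "
    "Answer with one word.\nSentence: {text}\nLabel:"
)
CASES = [("I love this film.", "positive"), ("This was a waste of time.", "negative")]

best, score = pick_prompt(toy_model, [SIMPLE, SPECIFIC], CASES)
print(f"best score: {score:.2f}")
print(best)
```

Exact match is the simplest possible metric; in practice it could be swapped for human evaluation or an LLM-as-a-judge score without changing the selection loop.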

References:

[1] https://promptingguide.ai

[2] https://arxiv.org/abs/2401.01325

[3] https://arxiv.org/abs/2306.15595

[4] https://github.com/gkamradt/LLMTest_NeedleInAHaystack