Imagine talking with a friend who forgets everything you said and has to start from scratch every time: it would be inefficient and exhausting. Yet this is exactly how most of today's AI systems behave. They are powerful, but they generally lack one key element: memory.

Memory is not an optional add-on but a core foundation for building AI agents that can truly learn, evolve, and collaborate.

Core Concepts of AI Memory

The current "statelessness" dilemma of AI

Tools such as ChatGPT or coding assistants, despite their power, still force users to repeat instructions or preferences over and over. The context windows of large language models and prompt engineering create a "memory illusion" that leads people to believe AI can already remember.

In fact, the vast majority of AI agents are **essentially stateless**: they cannot learn from past interactions or adapt over time. Evolving from stateless tools into truly autonomous, **stateful** agents requires real memory mechanisms, not just larger context windows or better retrieval.

What exactly is memory in an AI agent?

In the context of AI agents, memory is the ability to retain and recall relevant information across time, tasks, and sessions. It lets an agent remember past interactions and use that information to guide future behavior.

True memory is much more than storing chat logs. It means building a continuously evolving internal state that informs every decision the agent makes, even when two interactions are weeks apart.

Agent memory rests on three pillars:

- State: Awareness of the information in the current interaction.

- Persistence: Long-term knowledge retention across sessions.

- Selectivity: Deciding which information is worth remembering long term.

Together, these three pillars give an agent **continuity**.

Context Windows, RAG, and Memory: The Differences

A mainstream agent architecture typically includes a large language model (LLM), a planner (such as the ReAct framework), a toolset (APIs), and a retriever. The problem is that none of these components can, by itself, remember what happened yesterday.

Context Window ≠ Memory

A common misconception is that an ever-larger context window can fully replace memory. This approach, however, has clear bottlenecks:

- High cost: More tokens mean higher compute cost and latency.

- No persistence: Nothing is stored across sessions, and all context is lost once the session ends.

- Lack of prioritization: Everything in the context carries equal weight, so critical information cannot be distinguished from transient information.

The context window keeps an agent coherent within a session, while memory makes it intelligent across sessions.

RAG ≠ Memory

Retrieval-augmented generation (RAG) and memory systems both supply information to the LLM, but they solve very different problems. RAG retrieves factual information from external knowledge bases (e.g., documents, databases) at inference time and injects it into the prompt to make answers more accurate. RAG itself, however, is stateless: it is unaware of who the user is and cannot relate the current query to past conversations.

Memory, on the other hand, brings continuity. It records user preferences, historical decisions, successes and failures, and utilizes this information in future interactions.

In short, RAG helps an agent answer more accurately, while memory helps it act more intelligently. A mature agent needs both working together: RAG supplies external knowledge, and memory shapes its internal behavior.
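To make the contrast concrete, here is a minimal sketch in plain Python, not tied to any framework. `KNOWLEDGE_BASE`, `rag_retrieve`, `UserMemory`, and `build_prompt` are hypothetical names. Keyword matching stands in for real vector retrieval. The retrieval function depends only on the query. The memory object is keyed by user and survives from one call (session) to the next.

```python
from collections import defaultdict
from typing import Dict, List

# Hypothetical shared knowledge base: the same documents for every user
KNOWLEDGE_BASE = {
    "refund": "Refund requests are handled by the billing team.",
    "shipping": "Orders ship from the central warehouse.",
}


def rag_retrieve(query: str) -> List[str]:
    """Stateless retrieval: the result depends only on the query text,
    not on who is asking or what was said before."""
    words = query.lower().replace("?", " ").split()
    return [doc for key, doc in KNOWLEDGE_BASE.items() if key in words]


class UserMemory:
    """Stateful memory: facts are stored per user and outlive a single session."""

    def __init__(self) -> None:
        self._facts: Dict[str, List[str]] = defaultdict(list)

    def remember(self, user_id: str, fact: str) -> None:
        self._facts[user_id].append(fact)

    def recall(self, user_id: str) -> List[str]:
        return list(self._facts.get(user_id, []))


def build_prompt(user_id: str, query: str, memory: UserMemory) -> str:
    """Combine external knowledge (RAG) with internal, user-specific memory."""
    return (
        f"Known about this user: {memory.recall(user_id)}\n"
        f"Relevant documents: {rag_retrieve(query)}\n"
        f"Question: {query}"
    )


memory = UserMemory()
memory.remember("alice", "Prefers email over phone")  # learned in an earlier session
print(build_prompt("alice", "What is your refund policy?", memory))
```

Asking the same question as a different user returns the same documents but no personal facts. That is the stateless/stateful split from the table below.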

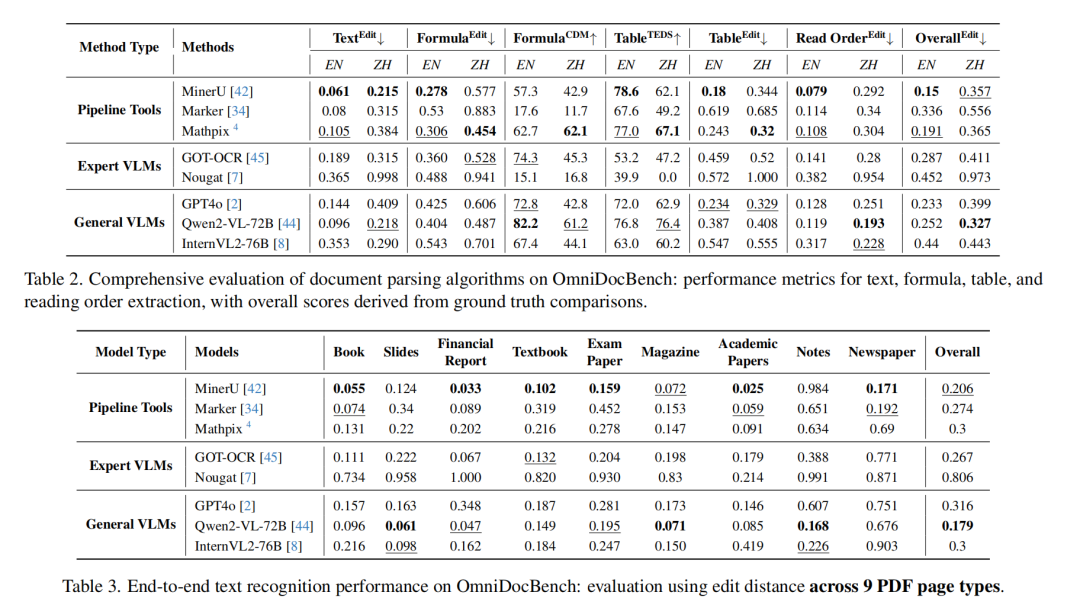

In order to distinguish between the three more clearly, refer to the table below:

| Characteristic | Context Window | Retrieval-Augmented Generation (RAG) | Memory |
|---|---|---|---|
| Function | Provides immediate context within a short-term session | Retrieves factual information from external knowledge bases | Stores and recalls past interactions, preferences, and state |
| State | Stateless | Stateless | Stateful |
| Persistence | Single session | The external knowledge base is persistent, but each retrieval is transient | Cross-session, long-term persistence |
| Use cases | Maintaining a coherent dialog | Answering document-specific questions | Personalization, continuous learning, task automation |

Types of AI Memory

Memory in AI agents falls into two basic forms, short-term memory and long-term memory, which serve different cognitive functions.

- Short-Term Memory: Preserves the immediate context within a single interaction, much like human working memory.

- Long-Term Memory: Retains knowledge across sessions, tasks, and time, enabling learning and adaptation.

Short-term memory

Short-term memory, also known as working memory, is the most basic form of memory for AI systems and is used to preserve immediate context, including:

- Dialog History: Recent messages and their order.

- Intermediate state: Temporary variables used while a task is executing.

- Focus of attention: The current core of the conversation.
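The three parts of short-term memory listed above map naturally onto a small per-session data structure. This is a sketch under the assumption that messages are plain role/content dicts. `WorkingMemory` and its fields are hypothetical names, not part of any library.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Optional


@dataclass
class WorkingMemory:
    """Hypothetical per-session short-term memory (discarded when the session ends)."""

    history: List[Dict[str, str]] = field(default_factory=list)  # dialog history, oldest first
    scratch: Dict[str, Any] = field(default_factory=dict)        # intermediate task state
    focus: Optional[str] = None                                  # current core topic

    def add_message(self, role: str, content: str) -> None:
        self.history.append({"role": role, "content": content})

    def recent(self, n: int) -> List[Dict[str, str]]:
        """Return the n most recent messages in their original order."""
        return self.history[-n:] if n > 0 else []


wm = WorkingMemory()
wm.add_message("user", "Book a flight to Paris")
wm.focus = "flight booking"
wm.scratch["destination"] = "Paris"
wm.add_message("assistant", "Which date would you like?")
print(wm.recent(1))  # [{'role': 'assistant', 'content': 'Which date would you like?'}]
```

Nothing here outlives the object. Once the session ends, everything is gone, which is exactly the gap long-term memory fills.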

Long-term memory

More complex AI applications need to implement long-term memory for knowledge retention and personalization across sessions. Long-term memory consists of three main types:

1. Procedural Memory

This is the agent's "muscle memory": knowing **how** to do something. It defines the actions the agent can perform and is encoded directly in its logic. Examples: "Use a more detailed explanatory style when answering technical questions" or "Always prioritize emails from a specific supervisor."

2. Episodic Memory

This is the agent's "interaction album," recording the history of interactions and specific events involving a particular user. It is key to personalization, continuity, and long-term learning. For example, an agent can remember that "the last time this customer asked about a billing issue, a slow response led to dissatisfaction" and adjust its response strategy accordingly.

3. Semantic Memory

This is the agent's "encyclopedia of facts," storing objective facts about the world and the user. This knowledge is usually retrieved through vector search or RAG, independently of any specific interaction. Examples: "Alice is the technical lead for project A" or "John's work time zone is PST."
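The three long-term memory types can be sketched as three separate stores that are assembled into a prompt at run time. This is a plain-Python illustration only. `LongTermMemory` and `build_system_prompt` are hypothetical names, and a real system would typically back the semantic part with vector search.

```python
from collections import defaultdict
from typing import Dict, List


class LongTermMemory:
    """Hypothetical store that keeps the three long-term memory types separate."""

    def __init__(self) -> None:
        self.procedural: List[str] = []                           # how to behave (rules)
        self.episodic: Dict[str, List[str]] = defaultdict(list)  # past events, per user
        self.semantic: Dict[str, str] = {}                        # facts about world and users

    def build_system_prompt(self, user_id: str) -> str:
        """Assemble all three memory types into a prompt for one user."""
        rules = "\n".join(f"- {r}" for r in self.procedural)
        episodes = "\n".join(f"- {e}" for e in self.episodic.get(user_id, []))
        facts = "\n".join(f"- {k}: {v}" for k, v in sorted(self.semantic.items()))
        return f"Rules:\n{rules}\nPast interactions:\n{episodes}\nFacts:\n{facts}"


ltm = LongTermMemory()
ltm.procedural.append("Use a detailed explanatory style for technical questions")
ltm.episodic["customer_42"].append("Billing question: a slow response caused dissatisfaction")
ltm.semantic["Alice"] = "technical lead for project A"
ltm.semantic["John's time zone"] = "PST"
print(ltm.build_system_prompt("customer_42"))
```

Procedural rules and semantic facts apply to everyone here. Episodic entries are looked up per user, which is what makes them the basis for personalization.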

AI Memory Implementation Mechanisms

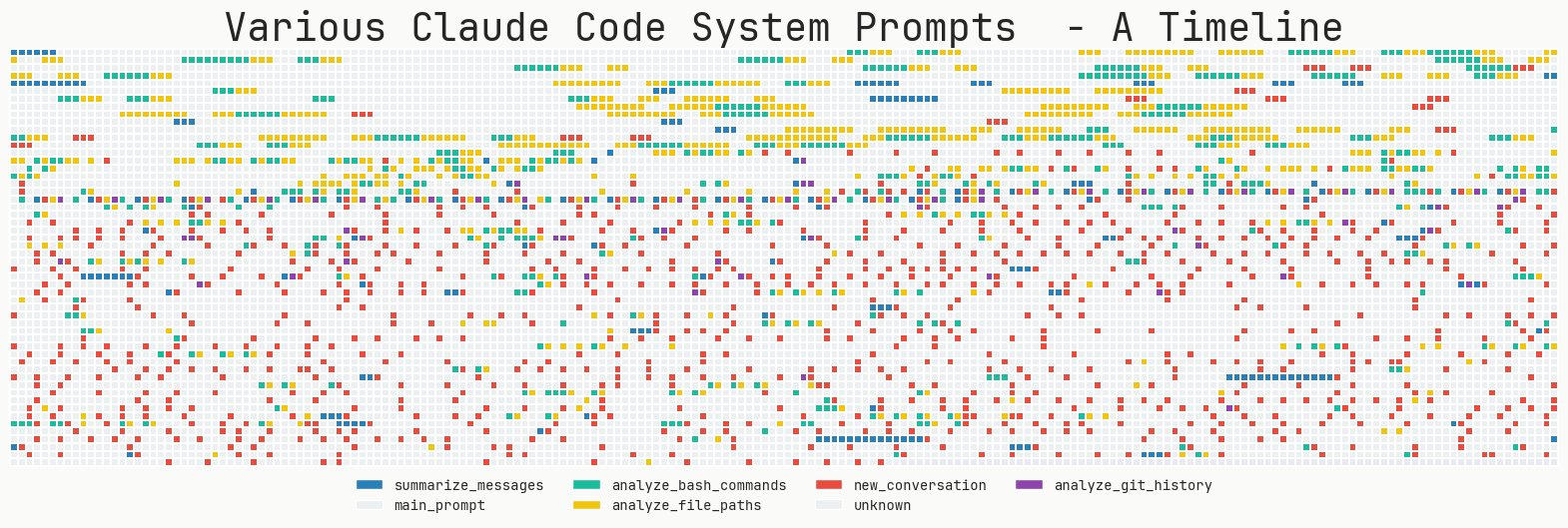

When memories are written

When and how does an agent create new memories? There are two mainstream approaches:

- Real-time (synchronous) writing: Memories are created and updated during the interaction with the user. New memories take effect immediately, but this can add latency and complexity to the main application logic. For example, ChatGPT's memory feature uses a similar mechanism: a save_memories tool decides in real time whether to save information from the conversation.

- Asynchronous background processing: Memory creation and organization run as separate background tasks. This decouples memory management from the main application logic, reduces latency, and allows more elaborate offline processing such as summarization and de-duplication.
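The two write strategies can be contrasted in a few lines of standard-library Python. This is a sketch assuming a plain list as the memory store. `MemoryWriter` is a hypothetical class. Whitespace normalization stands in for real summarization, and exact-match de-duplication stands in for real cleanup.

```python
import queue
import threading
from typing import List, Optional


class MemoryWriter:
    """Sketch of synchronous vs. asynchronous memory writes."""

    def __init__(self) -> None:
        self.store: List[str] = []
        self._lock = threading.Lock()
        self._jobs: "queue.Queue[Optional[str]]" = queue.Queue()
        self._worker = threading.Thread(target=self._run, daemon=True)
        self._worker.start()

    def write_sync(self, text: str) -> None:
        """Real-time write: visible immediately, but the caller waits for it."""
        with self._lock:
            self.store.append(text)

    def write_async(self, text: str) -> None:
        """Background write: enqueue and return at once."""
        self._jobs.put(text)

    def _run(self) -> None:
        while True:
            text = self._jobs.get()
            if text is None:  # shutdown signal
                self._jobs.task_done()
                break
            cleaned = " ".join(text.split())  # stand-in for summarization/cleanup
            with self._lock:
                if cleaned not in self.store:  # simple de-duplication
                    self.store.append(cleaned)
            self._jobs.task_done()

    def flush(self) -> None:
        """Block until every queued background write has been processed."""
        self._jobs.join()

    def close(self) -> None:
        self._jobs.put(None)
        self._worker.join()


writer = MemoryWriter()
writer.write_sync("User prefers dark mode")
writer.write_async("User   lives in Berlin")
writer.write_async("User lives in Berlin")  # duplicate, dropped by the worker
writer.flush()
writer.close()
print(writer.store)  # ['User prefers dark mode', 'User lives in Berlin']
```

The asynchronous path returns immediately and leaves the extra processing to the worker thread. The price is that a memory may not yet be visible right after `write_async` returns.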

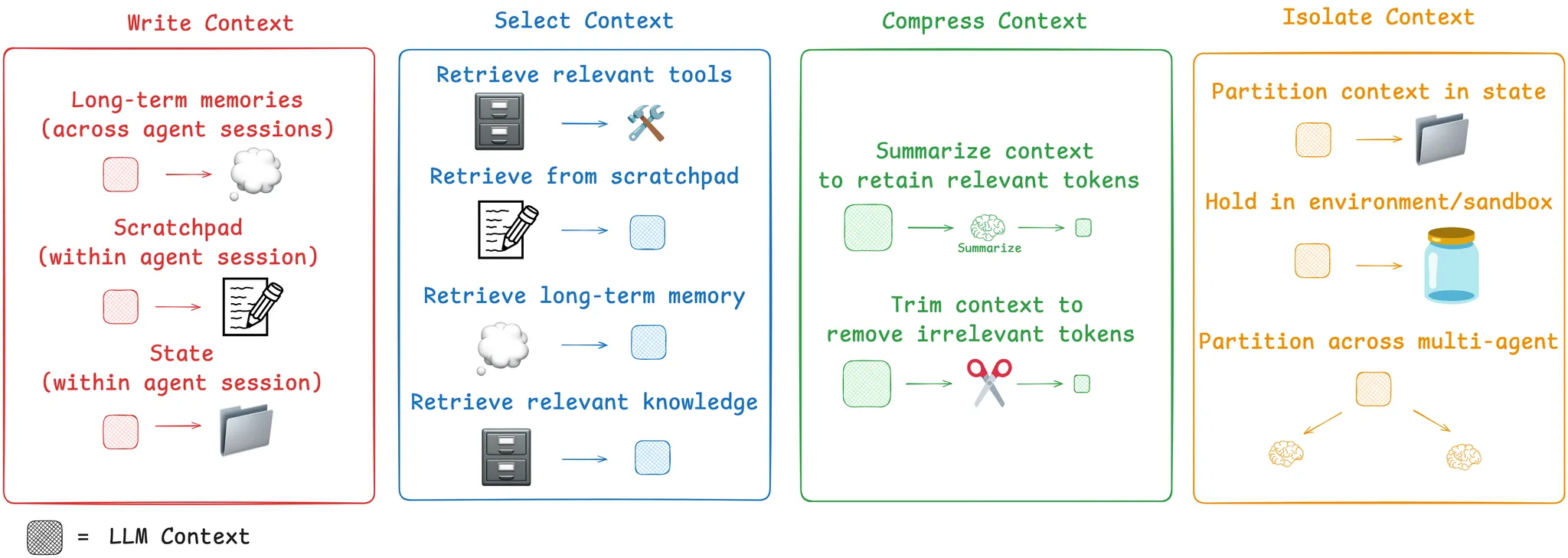

Management Strategies for Memory

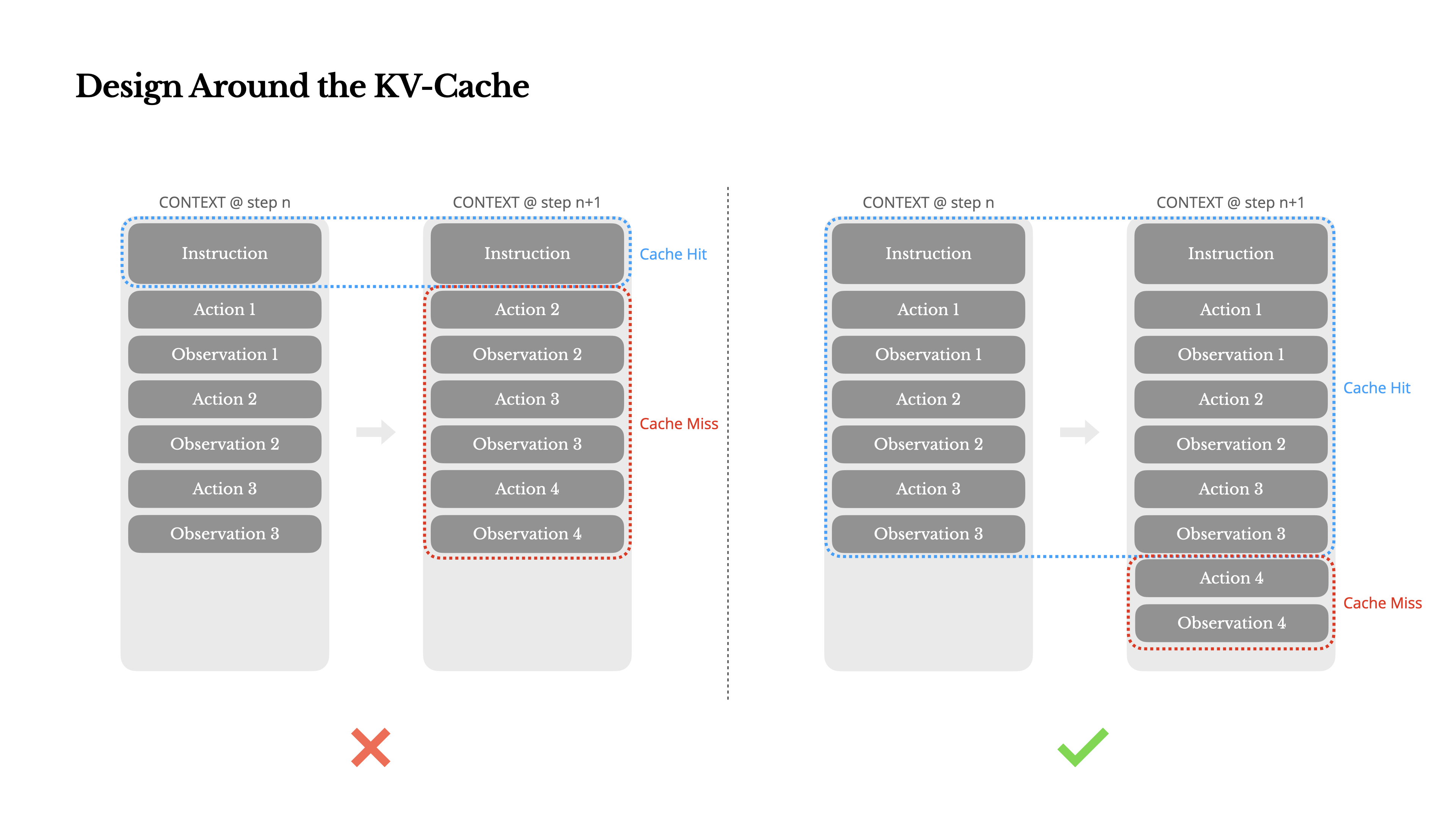

When a conversation grows too long, short-term memory can exceed the LLM's context window. Common management strategies include:

- Trimming: Remove the first or last N messages from the conversation history.

- Summarization: Summarize earlier parts of the conversation and replace the original messages with the summary.

- Selective forgetting: Permanently delete parts of memory based on preset rules (e.g., filtering out unimportant system messages) or on the model's own judgment.
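Trimming and summarization can be sketched as two small functions over role/content message dicts. `trim_history`, `summarize_history`, and `naive_summary` are hypothetical helpers. They are not LangChain's own `trim_messages` utility, which has a different signature. `naive_summary` is a placeholder for what would normally be an LLM call. Both helpers keep system messages intact and only shrink the rest.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]  # {"role": ..., "content": ...}


def trim_history(messages: List[Message], keep_last: int) -> List[Message]:
    """Trimming: keep system messages plus the `keep_last` most recent other messages."""
    system = [m for m in messages if m["role"] == "system"]
    others = [m for m in messages if m["role"] != "system"]
    return system + (others[-keep_last:] if keep_last > 0 else [])


def summarize_history(messages: List[Message], keep_last: int,
                      summarize: Callable[[List[Message]], str]) -> List[Message]:
    """Summarization: fold all but the `keep_last` most recent messages into one summary."""
    system = [m for m in messages if m["role"] == "system"]
    others = [m for m in messages if m["role"] != "system"]
    if len(others) <= keep_last:
        return list(messages)  # nothing to compress yet
    split = len(others) - keep_last
    old, recent = others[:split], others[split:]
    summary = {"role": "system", "content": "Summary of earlier conversation: " + summarize(old)}
    return system + [summary] + recent


def naive_summary(msgs: List[Message]) -> str:
    # Hypothetical stand-in for an LLM summarization call: keep only the user's turns
    return "; ".join(m["content"] for m in msgs if m["role"] == "user")


history = [
    {"role": "system", "content": "You are helpful."},
    {"role": "user", "content": "I'm planning a trip to Japan"},
    {"role": "assistant", "content": "Great! When?"},
    {"role": "user", "content": "In April"},
    {"role": "assistant", "content": "Cherry blossom season!"},
]
print(trim_history(history, keep_last=2))
print(summarize_history(history, keep_last=2, summarize=naive_summary))
```

Trimming is cheap but silently drops the fact that the trip is to Japan. Summarization keeps that fact at the cost of an extra (here simulated) model call.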

Challenges in Implementing AI Memory

Although memory is crucial for agents, implementing it raises many practical challenges:

- Memory overload and forgetting: Memories that accumulate without limit make a system bloated and inefficient. Designing effective forgetting mechanisms that let agents discard outdated or irrelevant information is a challenge in itself.

- Information pollution: False or malicious inputs may be stored in long-term memory, "contaminating" the agent's decision-making and biasing its future behavior.

- Privacy and security: Long-term memory holds large amounts of sensitive user information, such as preferences, history, and personal data. Ensuring encryption, access control, and regulatory compliance for this data is key to deploying the application.

- Cost and performance: Maintaining large memory stores and performing complex retrieval and write operations add compute and storage costs and may slow the system's responses.
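One common way to approach the forgetting problem is to score each memory by importance and let that score decay with age. Memories below a threshold are then dropped, and the store is capped at a maximum size. The sketch below uses exponential decay with a half-life. That policy, the 0-to-1 importance scale, and all names (`MemoryItem`, `retention_score`, `forget`) are assumptions for illustration, not a standard algorithm from any library.

```python
import math
import time
from dataclasses import dataclass, field
from typing import List


@dataclass
class MemoryItem:
    text: str
    importance: float  # 0..1; assumed to be assigned by a rule or an LLM at write time
    created_at: float = field(default_factory=time.time)  # Unix timestamp in seconds


def retention_score(item: MemoryItem, now: float, half_life_s: float) -> float:
    """Importance decayed exponentially with age: it halves every `half_life_s` seconds."""
    age = max(0.0, now - item.created_at)
    return item.importance * math.pow(0.5, age / half_life_s)


def forget(items: List[MemoryItem], now: float, half_life_s: float,
           threshold: float, max_items: int) -> List[MemoryItem]:
    """Drop items scoring below `threshold`, then keep at most `max_items` of the strongest."""
    scored = [(retention_score(m, now, half_life_s), m) for m in items]
    kept = [pair for pair in scored if pair[0] >= threshold]
    kept.sort(key=lambda pair: pair[0], reverse=True)
    return [m for _, m in kept[:max_items]]


DAY = 86_400.0
now = 100 * DAY
items = [
    MemoryItem("User's name is Bob", importance=0.9, created_at=now - 30 * DAY),
    MemoryItem("User said 'ok'", importance=0.1, created_at=now - 1 * DAY),
    MemoryItem("User prefers Python", importance=0.6, created_at=now - 2 * DAY),
]
kept = forget(items, now=now, half_life_s=30 * DAY, threshold=0.2, max_items=10)
print([m.text for m in kept])  # ['User prefers Python', "User's name is Bob"]
```

The trivial "ok" is forgotten almost at once. The important name survives a month with half its score. Tuning the half-life and threshold is where the real design work lies.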

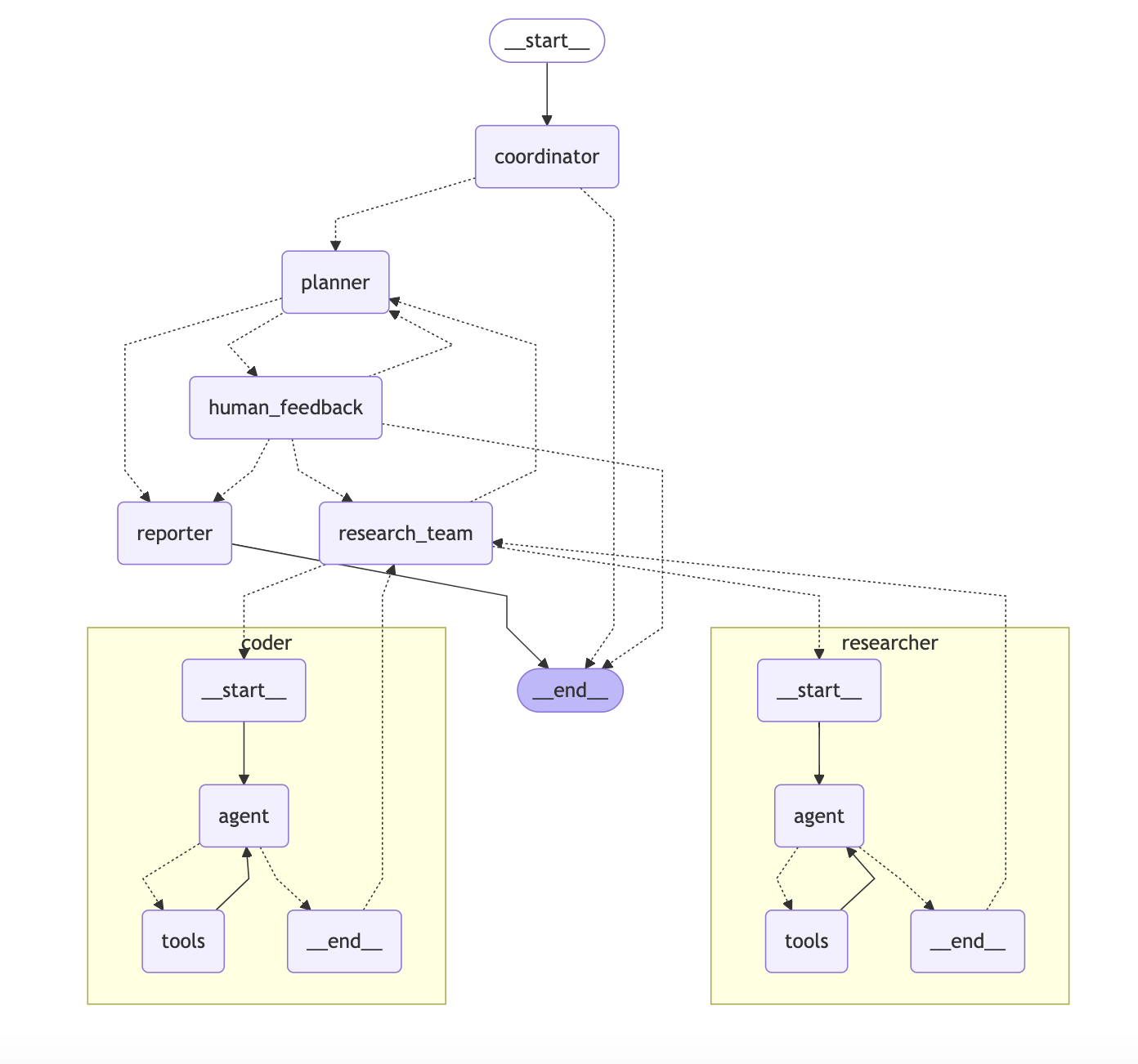

Using LangGraph to Build Agents with Memory

LangGraph is a framework for building stateful, multi-agent applications. Its native support for cycles and state management makes it well suited to implementing complex memory systems. The following code examples show how to add short-term and long-term memory to an agent with LangGraph.

Adding short-term memory

Short-term memory (thread-level persistence) lets an agent keep track of a single multi-turn conversation. It is implemented by configuring a checkpointer when compiling a LangGraph graph. The checkpointer then saves and loads the graph state automatically.

The following example uses the in-memory checkpointer InMemorySaver to save session state.

```python
# from langchain_anthropic import ChatAnthropic  # e.g., when using an Anthropic model
import re

from langchain_core.messages import AIMessage
from langgraph.checkpoint.memory import InMemorySaver
from langgraph.graph import START, MessagesState, StateGraph

# For simplicity, a rule-based fake model stands in for a real LLM.
# In practice, replace it with a real chat model, for example:
# model = ChatAnthropic(model="claude-3-5-sonnet-20240620")


class FakeModel:
    """Rule-based stand-in for a chat model; it only 'knows' what is in `messages`."""

    def invoke(self, messages):
        # Scan everything the model receives (history and any system prompt) for a name
        text = " ".join(str(m.content) for m in messages)
        match = re.search(r"(?:I'm|name is) (\w+)", text, re.IGNORECASE)
        name = match.group(1) if match else None
        last = str(messages[-1].content).lower()
        if "my name" in last and "?" in last:
            return AIMessage(content=f"Your name is {name}." if name else "I don't know your name yet.")
        if name:
            return AIMessage(content=f"Hi {name}! How can I help you today?")
        return AIMessage(content="Hello there!")


model = FakeModel()


def call_model(state: MessagesState):
    # The checkpointer restores the thread's earlier messages into state["messages"]
    response = model.invoke(state["messages"])
    return {"messages": [response]}


builder = StateGraph(MessagesState)
builder.add_node("call_model", call_model)
builder.add_edge(START, "call_model")

checkpointer = InMemorySaver()
graph = builder.compile(checkpointer=checkpointer)

# A unique thread_id identifies an independent conversation thread
config = {"configurable": {"thread_id": "user_1_thread_1"}}

# First interaction
for chunk in graph.stream(
    {"messages": [{"role": "user", "content": "Hi! I'm Bob."}]},
    config,
    stream_mode="values",
):
    chunk["messages"][-1].pretty_print()

# Second interaction, in the same thread
for chunk in graph.stream(
    {"messages": [{"role": "user", "content": "What's my name?"}]},
    config,
    stream_mode="values",
):
    chunk["messages"][-1].pretty_print()
```

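```
================================ Human Message =================================

Hi! I'm Bob.
================================== Ai Message ==================================

Hi Bob! How can I help you today?
================================ Human Message =================================

What's my name?
================================== Ai Message ==================================

Your name is Bob.
```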

In production, replace InMemorySaver with a database-backed checkpointer such as PostgresSaver or MongoDBSaver so that conversation state is not lost when the service restarts.

Managing checkpoints

LangGraph provides APIs for inspecting checkpoints (that is, the saved session state).

View the current state of the thread:

```python
snapshot = graph.get_state(config)  # latest checkpoint of this thread
print(snapshot.values["messages"])
```

View the full state history of the thread:

```python
history = list(graph.get_state_history(config))  # checkpoints, most recent first
```

Adding long-term memory

Long-term memory (cross-thread persistence) stores data that must be retained for a long time, such as user preferences. LangGraph supports this by letting you pass a store object when compiling the graph.

The following example uses InMemoryStore to store user information and share it across different conversation threads.

```python
import uuid

from langchain_core.messages import SystemMessage
from langchain_core.runnables import RunnableConfig
from langgraph.checkpoint.memory import InMemorySaver
from langgraph.graph import START, MessagesState, StateGraph
from langgraph.store.base import BaseStore
from langgraph.store.memory import InMemoryStore

# ... (reuses `model` / FakeModel from the previous example) ...


def call_model_with_long_term_memory(
    state: MessagesState,
    config: RunnableConfig,
    *,
    store: BaseStore,  # injected by LangGraph because the graph is compiled with a store
):
    user_id = config["configurable"]["user_id"]
    namespace = ("memories", user_id)

    # Read long-term memory shared by every thread of this user
    memories = store.search(namespace)
    info = "\n".join(item.value["data"] for item in memories)
    system_msg_content = f"You are a helpful assistant. User info: {info}"

    # Write long-term memory (simplified: a real agent would have the LLM extract the fact)
    last_message = state["messages"][-1]
    if "remember" in last_message.content.lower():
        memory_to_save = "User's name is Bob"
        # Store the memory under a unique key: put(namespace, key, value)
        store.put(namespace, str(uuid.uuid4()), {"data": memory_to_save})

    # Call the model with the retrieved memories in the system prompt
    response = model.invoke([SystemMessage(content=system_msg_content)] + state["messages"])
    return {"messages": [response]}


builder_ltm = StateGraph(MessagesState)
builder_ltm.add_node("call_model", call_model_with_long_term_memory)
builder_ltm.add_edge(START, "call_model")

checkpointer_ltm = InMemorySaver()
store = InMemoryStore()
graph_ltm = builder_ltm.compile(checkpointer=checkpointer_ltm, store=store)

# In thread 1, the user asks the agent to remember their name
config1 = {"configurable": {"thread_id": "thread_1", "user_id": "user_123"}}
for chunk in graph_ltm.stream(
    {"messages": [{"role": "user", "content": "Hi! Please remember my name is Bob"}]},
    config1,
    stream_mode="values",
):
    chunk["messages"][-1].pretty_print()

# In a new thread 2, ask for the name
config2 = {"configurable": {"thread_id": "thread_2", "user_id": "user_123"}}  # same user_id
for chunk in graph_ltm.stream(
    {"messages": [{"role": "user", "content": "What is my name?"}]},
    config2,
    stream_mode="values",
):
    chunk["messages"][-1].pretty_print()
```

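```
================================ Human Message =================================

Hi! Please remember my name is Bob
================================== Ai Message ==================================

Hi Bob! How can I help you today?
================================ Human Message =================================

What is my name?
================================== Ai Message ==================================

Your name is Bob.
```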

Enabling semantic search

Configuring an embedding model for the store enables memory retrieval based on semantic similarity. The agent can then recall relevant information even when the wording differs.

```python
from langchain_openai import OpenAIEmbeddings
from langgraph.store.memory import InMemoryStore

# Requires the langchain-openai package and an OPENAI_API_KEY environment variable
embeddings = OpenAIEmbeddings(model="text-embedding-3-small")

store = InMemoryStore(
    index={
        "embed": embeddings,
        "dims": 1536,  # embedding dimensions of text-embedding-3-small
    }
)

namespace = ("user_123", "memories")
store.put(namespace, "1", {"text": "I love pizza"})
store.put(namespace, "2", {"text": "I am a plumber"})

# At query time, pass `query` to run a semantic search
items = store.search(namespace, query="I'm hungry", limit=1)

# The top result should be "I love pizza", the memory most related to "I'm hungry"
print(items[0].value["text"])
```

This lets the agent dynamically retrieve the most relevant information from its memory based on the context of the conversation, rather than relying on simple keyword matching.
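Under the hood, semantic search boils down to ranking stored vectors by their similarity to the query vector. The sketch below uses cosine similarity, which is one common choice and an assumption here; a store's actual metric may differ. The embeddings are hand-made 3-dimensional toy vectors standing in for real model output such as text-embedding-3-small. `cosine` and `semantic_search` are hypothetical helpers.

```python
import math
from typing import List, Tuple


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def semantic_search(query_vec: List[float],
                    memories: List[Tuple[str, List[float]]],
                    limit: int = 1) -> List[str]:
    """Rank stored (text, vector) pairs by cosine similarity to the query vector."""
    ranked = sorted(memories, key=lambda m: cosine(query_vec, m[1]), reverse=True)
    return [text for text, _ in ranked[:limit]]


# Hand-made toy vectors (dimensions loosely "food", "work", "emotion"),
# standing in for real embeddings
memories = [
    ("I love pizza", [0.9, 0.0, 0.4]),
    ("I am a plumber", [0.0, 1.0, 0.1]),
]
query = [0.8, 0.1, 0.3]  # toy vector for "I'm hungry"
print(semantic_search(query, memories))  # ['I love pizza']
```

The query shares no words with "I love pizza", yet it ranks first because the vectors point in a similar direction. This is the property keyword matching lacks.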

Case Study: Building a Memory-Enhanced Email Agent

This section puts the theory into practice by building an email-processing agent that uses multiple types of memory.

7.1 Defining the agent state

First, define the state the agent needs to track, covering both short-term and long-term memory.

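```python
from typing import Annotated, Any, Dict, List, TypedDict

from langchain_core.messages import AnyMessage
from langgraph.graph.message import add_messages


class EmailAgentState(TypedDict, total=False):
    """State definition for the email agent."""

    messages: Annotated[List[AnyMessage], add_messages]  # short-term memory: conversation history
    user_preferences: Dict[str, Any]  # long-term semantic memory: user preferences
    email_threads: Dict[str, Any]  # long-term episodic memory: past email threads
    action: str  # decided next action
    reply: str  # generated reply
```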

7.2 Implementing a decision classifier (using episodic memory)

Create a classifier node that decides the next action based on the email content and past interactions (episodic memory).

```python
def classify_email(state: EmailAgentState):
    # In practice, call an LLM here and include episodic memory
    # (e.g., state.get("email_threads")) in its prompt.
    # For simplicity, return a hard-coded decision.
    email_content = state["messages"][-1].content
    if "urgent" in email_content.lower():
        return {"action": "escalate"}
    return {"action": "reply"}
```

7.3 Defining tools (using semantic memory)

Define the tools available to the agent, e.g., looking up contact information from semantic memory.

```python
from langgraph.prebuilt import ToolNode


def get_contacts_from_memory(user_id: str) -> dict:
    """Look up a user's contacts, grouped by role, from semantic memory."""
    # Simulates fetching contacts from a user database or semantic memory
    contacts_db = {
        "user_123": {
            "developers": ["alice@company.com"],
            "managers": ["charlie@company.com"],
        }
    }
    return contacts_db.get(user_id, {})


def send_email(to: str, subject: str, body: str) -> str:
    """Send an email to the given address."""
    # Simulates an email-sending API
    print(f"Email sent to {to} with subject '{subject}'")
    return "Email sent successfully."


# ToolNode wraps plain functions as tools; their docstrings become the tool descriptions
tools = [get_contacts_from_memory, send_email]
tool_node = ToolNode(tools)
```

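7.4 Building the graph and connecting nodes

Use LangGraph to connect all components into an executable workflow.

```python
from langgraph.graph import END, START, StateGraph


def generate_reply(state: EmailAgentState):
    # Simplified: a real node would call an LLM and use user_preferences for tone
    return {"reply": "Generated reply based on user tone."}


def escalate_task(state: EmailAgentState):
    print("Task Escalated!")
    return {"reply": "Task Escalated!"}


builder = StateGraph(EmailAgentState)

# 1. Define nodes
builder.add_node("classify", classify_email)
builder.add_node("generate_reply", generate_reply)
builder.add_node("escalate_task", escalate_task)

# 2. Define edges: route on the action chosen by the classifier
builder.add_edge(START, "classify")
builder.add_conditional_edges(
    "classify",
    lambda state: state["action"],
    {
        "reply": "generate_reply",
        "escalate": "escalate_task",
    },
)
builder.add_edge("generate_reply", END)
builder.add_edge("escalate_task", END)

# 3. Compile the graph
email_graph = builder.compile()

# Example run: a non-urgent email is routed to generate_reply
result = email_graph.invoke({"messages": [{"role": "user", "content": "Can we meet on Friday?"}]})
print(result["action"], "->", result["reply"])
```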

This simplified example shows how different types of memory, held in the state and exposed through tools, can drive an agent's workflow. For brevity, tool_node from 7.3 is defined but not yet wired into the graph. By extending this framework, you can build AI assistants that handle complex tasks, are highly personalized, and keep learning over time.

When designing your own memory-enhanced agents, the following questions are a useful guide:

- Learning content: What type of information should the agent learn? Objective facts, summaries of past events, or rules of behavior?

- Memory timing: When and by whom should memory formation be triggered? Is it in real-time interactions, or in background batch processing?

- Storage Solutions: Where should memories be stored? Is it a local database, vector storage, or a cloud service?

- Security and Privacy: How do you secure memorized data against leakage and misuse?

- Updates and Maintenance: How do you prevent outdated or faulty memories from contaminating the agent's decision-making?