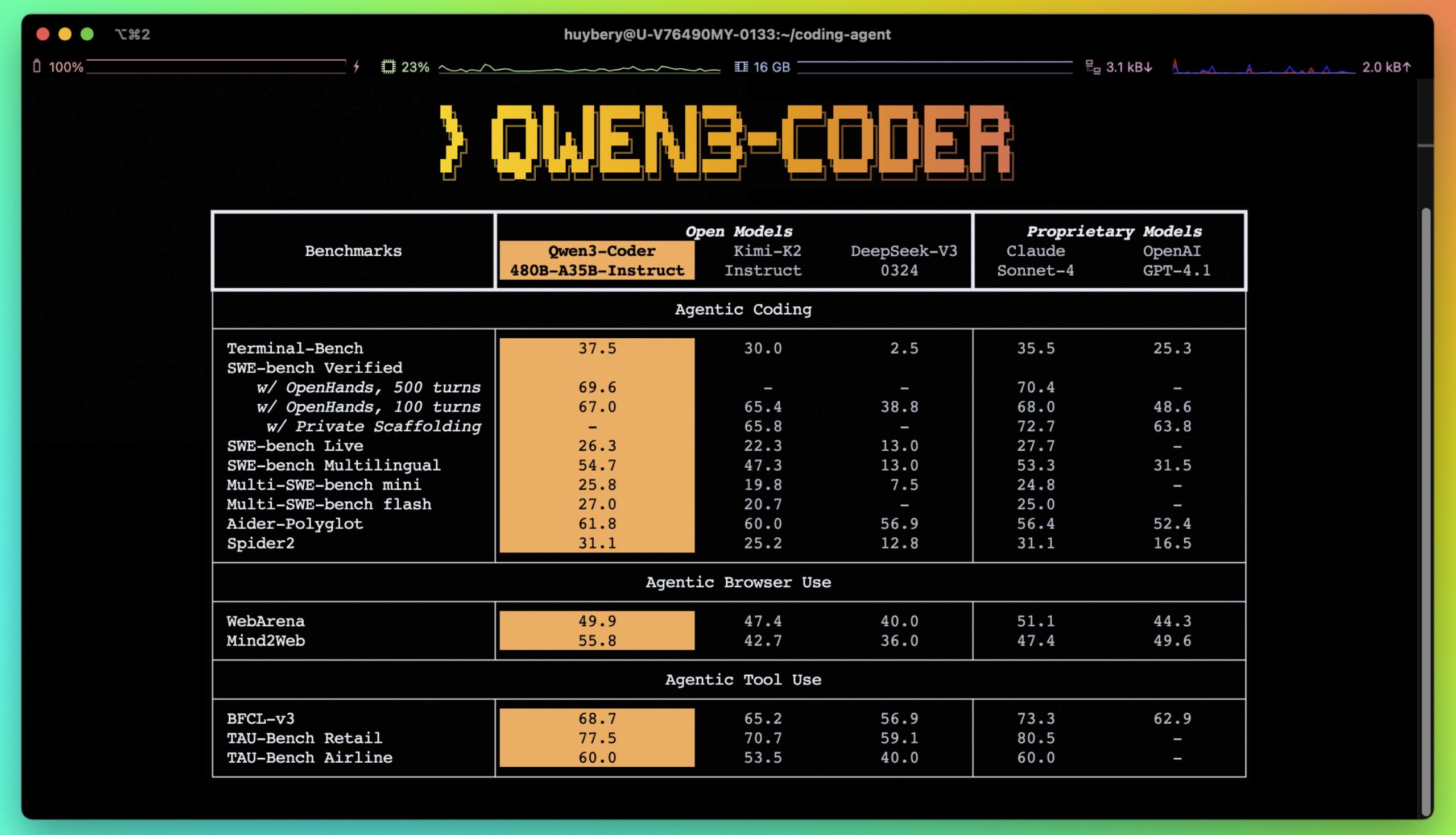

Qwen3-Coder is an open-source family of large language models developed by Alibaba Cloud's Qwen team, focused on code generation and agentic programming. Its flagship model, Qwen3-Coder-480B-A35B-Instruct, is a Mixture-of-Experts (MoE) model with 480 billion total parameters, of which 35 billion are activated per token. It natively supports a context length of 256K tokens, which can be extended to 1 million tokens with extrapolation methods. Qwen3-Coder performs well on code generation, code repair, and agent tasks (e.g., browser operation and tool use), with performance reported as comparable to Claude Sonnet 4. The accompanying open-source command-line tool, Qwen Code, gives developers enhanced support for complex coding tasks. The models support more than 100 languages and are particularly strong at multilingual code generation and systems programming. All models are released under the Apache 2.0 license and can be freely downloaded and customized by developers.


Function List

- Code generation: Generates high-quality code in 92 programming languages, including Python, C++, and Java.

- Code fixes: Automatically detects and fixes errors in code to improve programming efficiency.

- Code completion: Supports fill-in-the-middle completion of code snippets to streamline development.

- Agent task support: Integrates with external tools to perform browser operations and handle complex tasks.

- Long-context processing: Natively supports 256K-token contexts, extensible to 1M tokens, which suits large code bases.

- Multi-language support: Covers more than 100 languages and dialects, which suits multilingual development and translation.

- Command-line tool: Provides Qwen Code, with an optimized parser and tool support, to simplify code management and automation tasks.

- Open-source models: Provides multiple model sizes (0.6B to 480B) and supports developer customization and fine-tuning.

Usage Guide

Installation and Deployment

The Qwen3-Coder model is hosted on GitHub and can be installed and used by developers by following these steps:

- Clone the repository

Run the following command in a terminal to clone the Qwen3-Coder repository. This downloads the project files to your machine:

    git clone https://github.com/QwenLM/Qwen3-Coder.git

- Install dependencies

Go to the project directory and install the necessary Python dependencies:

    cd Qwen3-Coder
    pip install -e ./"[gui,rag,code_interpreter,mcp]"

If only minimal dependencies are needed, you can run:

    pip install -e ./

- Configure environment variables

Qwen3-Coder can be used through Alibaba Cloud's DashScope API or through a locally deployed model.

Using the DashScope API: set the following environment variables to use the cloud service. API keys are available from Alibaba Cloud's DashScope platform.

    export OPENAI_API_KEY="your_api_key_here"
    export OPENAI_BASE_URL="https://dashscope-intl.aliyuncs.com/compatible-mode/v1"
    export OPENAI_MODEL="qwen3-coder-plus"

Local deployment: deploy the model with llama.cpp or Ollama. The examples below use the smaller Qwen3-8B model.

Using Ollama: install Ollama (version 0.6.6 or higher), start the server, and run the model from a second terminal:

    ollama serve
    ollama run qwen3:8b

Inside the interactive session, set the context length and generation parameters:

    /set parameter num_ctx 40960
    /set parameter num_predict 32768

The API address is http://localhost:11434/v1/.

Using llama.cpp: run the following command to start the service:

    ./llama-server -hf Qwen/Qwen3-8B-GGUF:Q8_0 --jinja --reasoning-format deepseek -ngl 99 -fa -sm row --temp 0.6 --top-k 20 --top-p 0.95 --min-p 0 -c 40960 -n 32768 --no-context-shift --port 8080

This starts a local service with the API address http://localhost:8080/v1.
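
All three endpoints above speak the OpenAI-compatible chat completions protocol, so one request builder can target any of them. The following is a minimal sketch using only the Python standard library. The `ENDPOINTS` labels and the helper names `build_chat_request` and `send` are illustrative choices for this sketch, not part of any Qwen tooling.

```python
import json
import os
import urllib.request

# Base URLs taken from the configuration steps above; the dictionary keys are
# labels chosen for this sketch only.
ENDPOINTS = {
    "dashscope": "https://dashscope-intl.aliyuncs.com/compatible-mode/v1",
    "ollama": "http://localhost:11434/v1",
    "llama.cpp": "http://localhost:8080/v1",
}


def build_chat_request(prompt, base_url=None, model=None, api_key=None):
    """Build (but do not send) an OpenAI-style chat completion request."""
    base_url = base_url or os.environ.get("OPENAI_BASE_URL", ENDPOINTS["dashscope"])
    model = model or os.environ.get("OPENAI_MODEL", "qwen3-coder-plus")
    api_key = api_key or os.environ.get("OPENAI_API_KEY", "")
    payload = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    headers = {"Content-Type": "application/json"}
    if api_key:  # local servers typically do not require a key
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )


def send(request, timeout=60):
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

For example, `send(build_chat_request("Write a quicksort in Python", base_url=ENDPOINTS["ollama"], model="qwen3:8b"))` would query the local Ollama server, provided it is running.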


- Download the model

Model weights can be downloaded from Hugging Face or ModelScope:

- Hugging Face: https://huggingface.co/Qwen/Qwen3-Coder-480B-A35B-Instruct

- ModelScope: https://modelscope.cn/organization/qwen

Load the model with the transformers library:

    from transformers import AutoTokenizer, AutoModelForCausalLM

    device = "cuda"  # use the GPU
    tokenizer = AutoTokenizer.from_pretrained("Qwen/Qwen3-Coder-480B-A35B-Instruct")
    model = AutoModelForCausalLM.from_pretrained("Qwen/Qwen3-Coder-480B-A35B-Instruct", device_map="auto").eval()

Using Qwen3-Coder

Qwen3-Coder provides a variety of functions. The main ones are used as follows:

- Code generation

Qwen3-Coder generates high-quality code. For example, to generate a quicksort implementation:

    input_text = "#write a quick sort algorithm"
    model_inputs = tokenizer([input_text], return_tensors="pt").to(device)
    generated_ids = model.generate(model_inputs.input_ids, max_new_tokens=512, do_sample=False)[0]
    output = tokenizer.decode(generated_ids[len(model_inputs.input_ids[0]):], skip_special_tokens=True)
    print(output)

The model returns the complete quicksort code. Supported programming languages include C, C++, Python, and Java, which covers a variety of development scenarios.
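
Instruction-tuned models often wrap generated code in Markdown fences together with explanatory text. Assuming the reply follows that convention (an assumption, not something the model guarantees), a small helper can pull out just the code. The name `extract_code_blocks` is hypothetical and exists only for this sketch.

```python
import re

TICKS = "`" * 3  # the Markdown fence marker, built here to keep this example readable
FENCE = re.compile(TICKS + r"[ \t]*([\w+#.-]*)[ \t]*\n(.*?)" + TICKS, re.DOTALL)


def extract_code_blocks(reply):
    """Return (language, code) pairs for each fenced block in a model reply.

    If the reply has no fences, the whole reply is returned as one block with
    an empty language tag.
    """
    blocks = [(lang.lower(), code.rstrip("\n")) for lang, code in FENCE.findall(reply)]
    return blocks or [("", reply.strip())]
```

Calling `extract_code_blocks(output)` on the decoded text gives a list whose first entry is usually the code to save or run.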

- Code completion

Qwen3-Coder supports fill-in-the-middle (FIM) completion. For example, to fill in the missing part of a quicksort function:

    input_text = """<|fim_prefix|>def quicksort(arr):
        if len(arr) <= 1:
            return arr
        pivot = arr[len(arr) // 2]
        <|fim_suffix|>
        middle = [x for x in arr if x == pivot]
        right = [x for x in arr if x > pivot]
        return quicksort(left) + middle + quicksort(right)<|fim_middle|>"""

    messages = [
        {"role": "system", "content": "You are a code completion assistant."},
        {"role": "user", "content": input_text},
    ]
    text = tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)
    model_inputs = tokenizer([text], return_tensors="pt").to(device)
    generated_ids = model.generate(model_inputs.input_ids, max_new_tokens=512, do_sample=False)[0]
    output = tokenizer.decode(generated_ids[len(model_inputs.input_ids[0]):], skip_special_tokens=True)
    print(output)

The model automatically fills in the missing code section.
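
The FIM prompt is just the code before the gap, the code after it, and the three special tokens in a fixed order. As a minimal sketch, the helpers below assemble such a prompt from any prefix and suffix and splice a completion back in. The function names and the `EXAMPLE_MIDDLE` string (standing in for what a model might return) are illustrative assumptions.

```python
FIM_PREFIX = "<|fim_prefix|>"
FIM_SUFFIX = "<|fim_suffix|>"
FIM_MIDDLE = "<|fim_middle|>"


def build_fim_prompt(prefix, suffix):
    """Assemble a fill-in-the-middle prompt: prefix, then suffix, then the middle marker."""
    return f"{FIM_PREFIX}{prefix}{FIM_SUFFIX}{suffix}{FIM_MIDDLE}"


def splice(prefix, middle, suffix):
    """Insert the generated middle between the original prefix and suffix."""
    return prefix + middle + suffix


# The quicksort example from above, split at the gap.
EXAMPLE_PREFIX = (
    "def quicksort(arr):\n"
    "    if len(arr) <= 1:\n"
    "        return arr\n"
    "    pivot = arr[len(arr) // 2]\n"
    "    "
)
EXAMPLE_SUFFIX = (
    "\n"
    "    middle = [x for x in arr if x == pivot]\n"
    "    right = [x for x in arr if x > pivot]\n"
    "    return quicksort(left) + middle + quicksort(right)"
)
# Hypothetical completion, written by hand for this sketch.
EXAMPLE_MIDDLE = "left = [x for x in arr if x < pivot]"
```

`build_fim_prompt(EXAMPLE_PREFIX, EXAMPLE_SUFFIX)` reproduces the `input_text` used above, and `splice(EXAMPLE_PREFIX, EXAMPLE_MIDDLE, EXAMPLE_SUFFIX)` yields a complete function.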

- Code fixes

Qwen3-Coder detects and fixes code errors: the user supplies code that contains errors, and the model returns a corrected version. For example, on the Aider benchmark, Qwen3-Coder-480B-A35B-Instruct is reported to score on par with GPT-4o on complex bug fixes.

- Use the Qwen Code tool

Qwen Code is a command-line tool with an optimized parser and tool support for Qwen3-Coder. Run it from your project directory:

    cd your-project/
    qwen

Example prompts:

View the project architecture:

    qwen
    > Describe the main pieces of this system's architecture

Improve a function:

    qwen
    > Refactor this function to improve readability and performance

Generate documentation:

    qwen
    > Generate comprehensive JSDoc comments for this function

The tool supports automated tasks such as handling git commits and code refactoring.


- Agent tasks

Qwen3-Coder supports agent tasks, such as browser actions or tool calls. Configure an agent client that accepts OpenAI-compatible providers as follows:

- Set the API provider to OpenAI Compatible.

- Enter the DashScope API key.

- Set a custom URL: https://dashscope-intl.aliyuncs.com/compatible-mode/v1

- Select the model: qwen3-coder-plus

The model can then handle complex agent tasks such as automated script execution or browser interaction.
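
Under the OpenAI-compatible protocol, a tool call arrives as a function name plus a JSON string of arguments, and the client runs the function and sends the result back as a `tool` message. The sketch below shows that client-side step only. The `count_lines` tool, the `REGISTRY` mapping, and `run_tool_call` are hypothetical names invented for illustration.

```python
import json

# Tool description in the OpenAI function-calling format, passed as `tools`
# in a chat completion request. `count_lines` is a made-up example tool.
TOOLS = [{
    "type": "function",
    "function": {
        "name": "count_lines",
        "description": "Count the lines in a piece of text.",
        "parameters": {
            "type": "object",
            "properties": {"text": {"type": "string"}},
            "required": ["text"],
        },
    },
}]


def count_lines(text):
    return len(text.splitlines())


# Maps tool names the model may call to local Python functions.
REGISTRY = {"count_lines": count_lines}


def run_tool_call(tool_call):
    """Execute one tool call from an assistant message and build the reply message."""
    function = tool_call["function"]
    handler = REGISTRY.get(function["name"])
    if handler is None:
        result = {"error": f"unknown tool: {function['name']}"}
    else:
        arguments = json.loads(function["arguments"])  # arguments arrive as a JSON string
        result = {"result": handler(**arguments)}
    return {"role": "tool", "tool_call_id": tool_call["id"], "content": json.dumps(result)}
```

An agent loop appends each returned message to the conversation and calls the model again until it stops requesting tools.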


Notes

- Context length: 256K tokens are supported by default, which suits analysis of large code bases. The context can be extended to 1M tokens with YaRN.

- Hardware requirements: GPUs (e.g., CUDA devices) are recommended for faster inference. Smaller models (e.g., Qwen3-8B) suit low-resource environments.

- License: The model is open source under the Apache 2.0 license, which allows free use and modification, including commercial use, subject to the terms of the license.
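
To put the hardware note in perspective, the memory needed just to hold model weights can be estimated from the parameter count and the numeric precision. This is back-of-the-envelope arithmetic only: it ignores the KV cache, activations, and runtime overhead, and it uses the nominal parameter counts from the model names. Note that an MoE model must keep all experts in memory even though only a fraction is active per token.

```python
GIB = 2**30


def weight_memory_gib(num_params, bytes_per_param):
    """Memory needed for the weights alone, in GiB."""
    return num_params * bytes_per_param / GIB


TOTAL_PARAMS = 480e9       # Qwen3-Coder-480B-A35B: total parameters
ACTIVE_PARAMS = 35e9       # parameters activated per token
SMALL_MODEL_PARAMS = 8e9   # nominal size of Qwen3-8B

if __name__ == "__main__":
    for label, bytes_per_param in [("bf16", 2), ("int8", 1), ("4-bit", 0.5)]:
        big = weight_memory_gib(TOTAL_PARAMS, bytes_per_param)
        small = weight_memory_gib(SMALL_MODEL_PARAMS, bytes_per_param)
        print(f"{label:>5}: 480B needs about {big:,.0f} GiB, 8B about {small:,.1f} GiB")
    print(f"active fraction per token: {ACTIVE_PARAMS / TOTAL_PARAMS:.1%}")
```

At 16-bit precision the full 480B model needs several hundred GiB for weights alone, which is why the smaller models are the practical choice for a single consumer GPU.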

Application Scenarios

- Software development

Developers, from beginners to professionals, can use Qwen3-Coder to quickly generate code, fix bugs, or complete functions. Examples include generating backend code for a web application or optimizing code for an embedded system.

- Automated workflows

With the Qwen Code tool, developers can automate git commits, code refactoring, or documentation generation, which suits team collaboration and DevOps scenarios.

- Multi-language projects

With support for more than 100 languages, Qwen3-Coder is well suited to developing multilingual applications and to code translation, such as converting Python code to C++.

- Large code base management

Support for 256K-token contexts allows the model to handle large code bases, which suits analyzing and optimizing complex projects such as enterprise software or open-source repositories.
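
Whether a given repository actually fits in the 256K-token window can be checked roughly before sending it to the model. The sketch below walks a directory and estimates tokens from character counts. The ratio of 4 characters per token is a crude heuristic assumed here, since the real figure depends on the tokenizer and the language, and the extension list and `reserve` value are arbitrary choices for illustration.

```python
import os

CHARS_PER_TOKEN = 4          # rough heuristic (assumption); use the real tokenizer for accuracy
NATIVE_CONTEXT = 256 * 1024  # 256K tokens, the native context length stated above
SOURCE_EXTENSIONS = (".py", ".js", ".ts", ".java", ".c", ".cpp", ".go", ".rs")


def estimate_repo_tokens(root, extensions=SOURCE_EXTENSIONS):
    """Estimate the token count of all source files under `root`."""
    total_chars = 0
    for dirpath, dirnames, filenames in os.walk(root):
        # Skip hidden directories such as .git in place so os.walk does not descend into them.
        dirnames[:] = [d for d in dirnames if not d.startswith(".")]
        for name in filenames:
            if name.endswith(extensions):
                path = os.path.join(dirpath, name)
                with open(path, encoding="utf-8", errors="ignore") as handle:
                    total_chars += len(handle.read())
    return total_chars // CHARS_PER_TOKEN


def fits_in_context(tokens, context=NATIVE_CONTEXT, reserve=8192):
    """Check that the input fits while leaving `reserve` tokens for the reply."""
    return tokens + reserve <= context
```

If `fits_in_context(estimate_repo_tokens("your-project/"))` is false, the usual options are to select a subset of files or to rely on a tool that retrieves files on demand.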

FAQ

- What programming languages does Qwen3-Coder support?

It supports 92 programming languages, including Python, C, C++, Java, and JavaScript, covering mainstream development needs.

- How does it handle large code bases?

The model natively supports 256K-token contexts and can be extended to 1M tokens with YaRN. The Qwen Code tool can be used to query and edit large code bases directly.

- Is Qwen3-Coder free?

The model is open source under the Apache 2.0 license and is free to download and use, including for commercial purposes under the license terms.

- How can model performance be optimized?

Running on a GPU (e.g., a CUDA device) increases inference speed. Adjusting generation parameters such as max_new_tokens and top_p can improve generation quality.
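
To make the effect of `top_p` concrete, the following sketch implements nucleus (top-p) filtering over a toy next-token distribution in plain Python. It illustrates the idea only and is not the implementation used by transformers or any inference server. The function name and the example probabilities are made up.

```python
def top_p_filter(probs, top_p):
    """Keep the smallest set of most likely tokens whose cumulative probability
    reaches top_p, then renormalize so the kept probabilities sum to 1."""
    ranked = sorted(probs.items(), key=lambda item: item[1], reverse=True)
    kept, cumulative = [], 0.0
    for token, p in ranked:
        kept.append((token, p))
        cumulative += p
        if cumulative >= top_p:
            break
    total = sum(p for _, p in kept)
    return {token: p / total for token, p in kept}


# Toy distribution over four candidate tokens.
EXAMPLE_PROBS = {"return": 0.5, "print": 0.3, "pass": 0.15, "raise": 0.05}
```

With `top_p=0.75`, only the two most likely tokens survive, so sampling becomes more focused. A lower `top_p` narrows the choice further, while `top_p=1.0` keeps every token. In the transformers examples above, `do_sample=False` selects greedy decoding, so `top_p` only takes effect once sampling is enabled with `do_sample=True`.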