Paris-based Mistral AI, widely regarded as OpenAI's strongest European competitor, has once again followed its signature open-source strategy with a notable release in the AI space: the Voxtral family of audio models. Voxtral is more than a speech transcription tool. It extends Mistral's language-modeling capabilities to the audio domain, aiming to provide an out-of-the-box, cost-effective speech-processing solution for commercial applications.

Voxtral comes in two very different versions, a choice that clearly signals its market ambitions. The 24B-parameter version is a heavyweight model designed for production environments that process large volumes of data. The 3B-parameter Mini version targets resource-constrained local and edge-computing scenarios. Both are released under the Apache 2.0 license, so businesses and developers can freely download, modify, and deploy them without worrying about commercial-use restrictions.
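To make the size difference between the two versions concrete, here is a rough, weights-only memory estimate. It is a sketch under stated assumptions: it uses the nominal parameter counts from the model names (the released checkpoints, which include the audio encoder, may be somewhat larger) and 2 bytes per parameter for bf16/fp16 weights. KV cache, activations, and runtime overhead are ignored, so real memory use will be higher. The helper name weight_memory_gb is illustrative.

```python
# Weights-only memory estimate for the two Voxtral sizes.
# ASSUMPTIONS: nominal parameter counts (24B and 3B) and 2 bytes per parameter
# for bf16/fp16. KV cache, activations and runtime overhead are ignored.

BYTES_PER_PARAM = {"fp32": 4.0, "bf16": 2.0, "fp16": 2.0, "int8": 1.0}


def weight_memory_gb(num_params: float, dtype: str = "bf16") -> float:
    """Approximate memory for the weights alone, in GB (1 GB = 10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


for name, params in [("Voxtral 24B", 24e9), ("Voxtral Mini 3B", 3e9)]:
    print(f"{name}: ~{weight_memory_gb(params):.0f} GB of weights in bf16")
```

Under these assumptions the 24B version needs roughly 48 GB just for its weights, while the Mini version needs roughly 6 GB. That gap is what separates a data-center deployment from one that can plausibly fit on a single consumer GPU or an edge device.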

More Than Hearing: Built-in Understanding and Multilingual Benefits

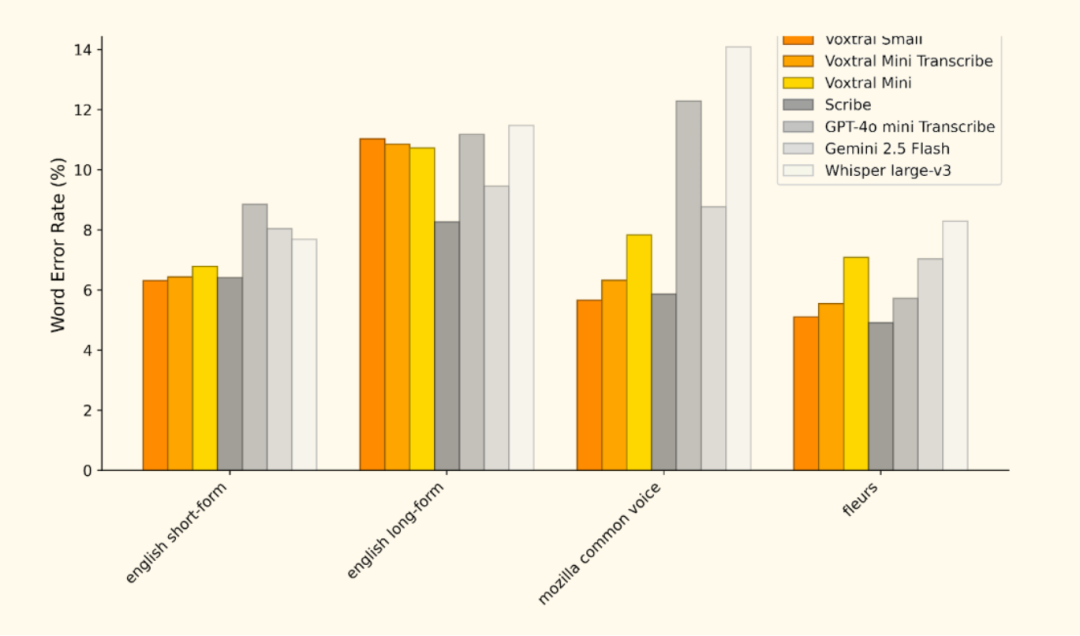

Unlike models such as OpenAI's Whisper, which focus on high-accuracy automatic speech recognition (ASR), Voxtral's core strength is its natively integrated natural language understanding (NLU). It is built on the Mistral Small 3.1 language model and inherits its strong text-processing capabilities. Users therefore no longer need to chain a separate speech-to-text system to a language model: they can ask questions, generate summaries, or extract structured information directly from audio files. Voxtral can handle up to 30 minutes of audio for transcription, or up to 40 minutes for comprehension tasks, thanks to its 32k-token context window. This is crucial for scenarios such as meeting recordings and long interviews.
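The relationship between audio length and the 32k-token window can be checked with simple arithmetic. This sketch assumes the audio encoder emits about 12.5 tokens per second of audio and reads "32k" as 32,768 tokens; neither figure is stated in this article, so treat both as assumptions to verify against the model card. The helper names are illustrative.

```python
# Rough token budget for audio in a 32k context window.
# ASSUMPTION: about 12.5 audio tokens per second of input audio. This rate is
# not stated in this article; check the model card before relying on it.

CONTEXT_TOKENS = 32_768   # "32k" read as 32,768 tokens (assumption)
TOKENS_PER_SECOND = 12.5  # assumed audio-token rate


def audio_tokens(minutes: float, tokens_per_second: float = TOKENS_PER_SECOND) -> int:
    """Approximate number of context tokens consumed by `minutes` of audio."""
    return round(minutes * 60 * tokens_per_second)


def text_budget(minutes: float, context: int = CONTEXT_TOKENS) -> int:
    """Tokens left over for the text prompt plus the model's answer."""
    return context - audio_tokens(minutes)


for minutes in (30, 40):
    print(f"{minutes} min of audio -> {audio_tokens(minutes)} tokens, "
          f"{text_budget(minutes)} left for prompt and output")
```

Under these assumptions, 40 minutes of audio uses about 30,000 tokens and leaves roughly 2.8k for a short question and answer, while 30 minutes leaves roughly 10k, which a full transcript of the recording would also have to fit into. That is one plausible reading of why the transcription limit is the lower of the two, but the article does not state the reason.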

Voxtral also excels at multilingual support, especially for European languages: official benchmarks show it supports English, French, German, Spanish, and Italian. This gives it a natural advantage when processing audio data for international business.

Application Scenarios: From the Cloud to the Edge

Voxtral's potential applications span a wide range of scenarios, from the cloud to the edge:

- Customer service: Automatically transcribe customer service calls and generate tickets or summaries directly, improving response times.

- Content creation: Quickly turn podcasts and interviews into transcripts and distill their core ideas directly.

- Meeting analysis: Record meetings and generate minutes in real time, extracting key decisions and to-dos.

- Edge intelligence: Deploy Voxtral Mini on IoT devices such as smart-home hubs and in-vehicle systems to enable local voice interaction without an internet connection.
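For the meeting-analysis scenario, one way to get key decisions and to-dos in machine-readable form is to ask the model for JSON and validate its reply before using it. This is a minimal sketch, not an official recipe: the prompt wording, the summary/decisions/action_items schema, and the names MINUTES_PROMPT, MeetingMinutes, and parse_minutes are all hypothetical. The prompt would be sent as the text part alongside the audio in a chat request, and the parser is demonstrated on a hand-written reply, not real model output.

```python
import json
from dataclasses import dataclass, field

# HYPOTHETICAL prompt: ask the model to answer with JSON only.
MINUTES_PROMPT = (
    "From this meeting recording, reply with JSON only, shaped like "
    '{"summary": "...", "decisions": ["..."], '
    '"action_items": [{"owner": "...", "task": "..."}]}'
)


@dataclass
class MeetingMinutes:
    summary: str
    decisions: list = field(default_factory=list)
    action_items: list = field(default_factory=list)


def parse_minutes(reply: str) -> MeetingMinutes:
    """Parse the text between the first '{' and the last '}' of a model reply."""
    start, end = reply.find("{"), reply.rfind("}")
    if start == -1 or end < start:
        raise ValueError("no JSON object found in the reply")
    data = json.loads(reply[start : end + 1])
    return MeetingMinutes(
        summary=str(data["summary"]),
        decisions=[str(d) for d in data.get("decisions", [])],
        action_items=[
            {"owner": str(a.get("owner", "")), "task": str(a["task"])}
            for a in data.get("action_items", [])
        ],
    )


# Hand-written example reply (not real model output)
reply = ('Here are the minutes: {"summary": "Q3 planning", '
         '"decisions": ["Ship v2 in May"], '
         '"action_items": [{"owner": "Ana", "task": "Draft release notes"}]}')
minutes = parse_minutes(reply)
print(minutes.decisions, minutes.action_items)
```

Slicing from the first "{" to the last "}" tolerates short preambles such as "Here are the minutes:", which chat models often add even when asked for JSON only; anything that still fails to parse raises an error instead of silently producing empty minutes.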

Quick Start Guide

Mistral AI offers two ways to use Voxtral: through its cloud API or via local deployment.

(i) Using the Mistral AI cloud API

For developers who want a quick integration, the official API is the simplest route: register on the Mistral AI platform, obtain an API key, and call the model through the mistralai Python client.

(ii) Local deployment with vLLM (recommended)

For scenarios that require data privacy or offline operation, local deployment is the better choice. Mistral officially recommends the vLLM framework, which provides high-performance inference support for Voxtral.

1. Set up the environment

First, make sure Python is installed, then install vLLM and its related dependencies with pip (the command below uses uv's pip interface):

```bash
uv pip install -U "vllm[audio]" --torch-backend=auto --extra-index-url https://wheels.vllm.ai/nightly
```

2. Start the local service

Use the following command to download the model from Hugging Face and launch a local, OpenAI-compatible server:

```bash
python -m vllm.entrypoints.openai.api_server \
  --model mistralai/Voxtral-Mini-3B-2507 \
  --tokenizer-mode mistral \
  --config-format mistral \
  --load-format mistral \
  --enable-chunked-prefill
```
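Downloading and loading the weights can take a while after the server command starts, so a client script may want to wait until the server is ready before sending requests. The sketch below polls the /health route exposed by vLLM's OpenAI-compatible server, using only the Python standard library. The helper name wait_for_server and the default timeout and polling interval are illustrative choices, not values from the article.

```python
import time
import urllib.request


def wait_for_server(base_url: str = "http://localhost:8000",
                    timeout: float = 600.0, interval: float = 2.0) -> bool:
    """Poll GET {base_url}/health until it returns 200 or `timeout` seconds pass."""
    # Ignore any HTTP(S)_PROXY settings: the server runs locally.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            with opener.open(f"{base_url}/health", timeout=interval) as resp:
                if resp.status == 200:
                    return True
        except OSError:
            # Connection refused, timeout or HTTP error: not ready yet.
            pass
        time.sleep(interval)
    return False
```

A client script would call wait_for_server() once at startup and abort if it returns False, rather than letting the first transcription request fail with a connection error.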

3. Call the local service

Once the service is running, you can interact with the locally served Voxtral model using the OpenAI client libraries or curl. The following Python examples perform speech transcription and speech understanding.

- Speech transcription

```python
from openai import OpenAI
from huggingface_hub import hf_hub_download

# Point the client at the local vLLM server
client = OpenAI(
    base_url="http://localhost:8000/v1",
    api_key="vllm",  # the local server does not need a real key
)

# Use whichever model the local server is serving
model = client.models.list().data[0].id

# Download a sample audio file
audio_file_path = hf_hub_download(
    repo_id="patrickvonplaten/audio_samples",
    filename="obama.mp3",
    repo_type="dataset",
)

# Send the transcription request
with open(audio_file_path, "rb") as audio_file:
    transcription = client.audio.transcriptions.create(
        model=model,
        file=audio_file,
        language="en",
    )

print(transcription.text)
```
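The article gives about 30 minutes as the upper bound for a single transcription. For longer recordings, one workaround (not something the article describes) is to cut the audio into slightly overlapping windows, transcribe each window separately, and join the text. The sketch below only computes the window boundaries in seconds; the 25-minute window (a margin below the 30-minute limit), the 5-second overlap, and the name chunk_bounds are illustrative assumptions, and actually cutting the audio file is left to a tool of your choice.

```python
def chunk_bounds(duration_s: float, window_s: float = 25 * 60,
                 overlap_s: float = 5.0) -> list:
    """Return (start, end) pairs in seconds that cover [0, duration_s]."""
    if window_s <= overlap_s:
        raise ValueError("window must be longer than the overlap")
    bounds, start = [], 0.0
    while True:
        end = min(start + window_s, duration_s)
        bounds.append((start, end))
        if end >= duration_s:
            return bounds
        # Step back by the overlap so words at a seam appear in both chunks.
        start = end - overlap_s


# A 70-minute recording split into windows of at most 25 minutes
print(chunk_bounds(70 * 60))
# [(0.0, 1500.0), (1495.0, 2995.0), (2990.0, 4200)]
```

The overlap trades a few duplicated words at each seam for not cutting a word in half; those duplicates need to be trimmed when the partial transcripts are joined.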

- Speech understanding (Q&A)

```python
import base64

from openai import OpenAI
from huggingface_hub import hf_hub_download

# Configure the client for the local vLLM server
client = OpenAI(
    base_url="http://localhost:8000/v1",
    api_key="vllm",
)
model = client.models.list().data[0].id  # model served by the local server


# Read an audio file and encode it as base64
def encode_audio_to_base64(filepath):
    with open(filepath, "rb") as audio_file:
        return base64.b64encode(audio_file.read()).decode("utf-8")


# Download the two sample clips and encode them
obama_file = hf_hub_download("patrickvonplaten/audio_samples", "obama.mp3", repo_type="dataset")
bcn_file = hf_hub_download("patrickvonplaten/audio_samples", "bcn_weather.mp3", repo_type="dataset")

obama_base64 = encode_audio_to_base64(obama_file)
bcn_base64 = encode_audio_to_base64(bcn_file)

# Build a multimodal message containing a text question and two audio clips
response = client.chat.completions.create(
    model=model,
    messages=[
        {
            "role": "user",
            "content": [
                {"type": "text", "text": "Here are two audio clips. The first is a speech by a famous person and the second is a weather forecast. Which of the two is more inspiring, and why?"},
                {"type": "input_audio", "input_audio": {"data": obama_base64, "format": "mp3"}},
                {"type": "input_audio", "input_audio": {"data": bcn_base64, "format": "mp3"}},
            ],
        }
    ],
    temperature=0.2,
)

print(response.choices[0].message.content)
```
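The understanding request repeats the read-encode-wrap step for every clip. A small helper can generalize it to any number of files. This is a sketch assuming the OpenAI-style input_audio content part (base64 data plus a format of "mp3" or "wav"); the helper name build_audio_message and the suffix-to-format table are hypothetical.

```python
import base64
from pathlib import Path

# Hypothetical mapping from file suffix to the input_audio "format" field
SUPPORTED_FORMATS = {".mp3": "mp3", ".wav": "wav"}


def build_audio_message(question: str, audio_paths: list) -> dict:
    """Build one user message: a text part followed by one audio part per file."""
    content = [{"type": "text", "text": question}]
    for path in map(Path, audio_paths):
        fmt = SUPPORTED_FORMATS.get(path.suffix.lower())
        if fmt is None:
            raise ValueError(f"unsupported audio type: {path.name}")
        data = base64.b64encode(path.read_bytes()).decode("ascii")
        content.append({"type": "input_audio",
                        "input_audio": {"data": data, "format": fmt}})
    return {"role": "user", "content": content}
```

With it, the request body reduces to messages=[build_audio_message(question, [obama_file, bcn_file])], and unsupported file types fail before anything is sent to the server.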

Project Resources

- Official Blog: https://mistral.ai/news/voxtral/

- Model Download: https://huggingface.co/mistralai/Voxtral-Mini-3B-2507