Large language model (LLM) technology is changing rapidly, and the open source community is producing a wealth of valuable learning resources. For developers who want to master LLMs systematically, these projects are a treasure trove of practical experience. This article takes an in-depth look at nine widely acclaimed open source projects on GitHub. Together they cover the whole path from theory to practice and provide concrete code implementations and engineering guidance, enough to serve as a solid ladder on your learning path.

Datawhale Series: Systematic Tutorials for Chinese Developers

Datawhale, an open source organization based in China, has played an important role in popularizing AI knowledge. Its series of LLM tutorials is widely welcomed by Chinese developers for its systematic content, good support for the Chinese-language environment, and clear learning paths.

1. Happy-LLM: Zero to One Principles and Practices

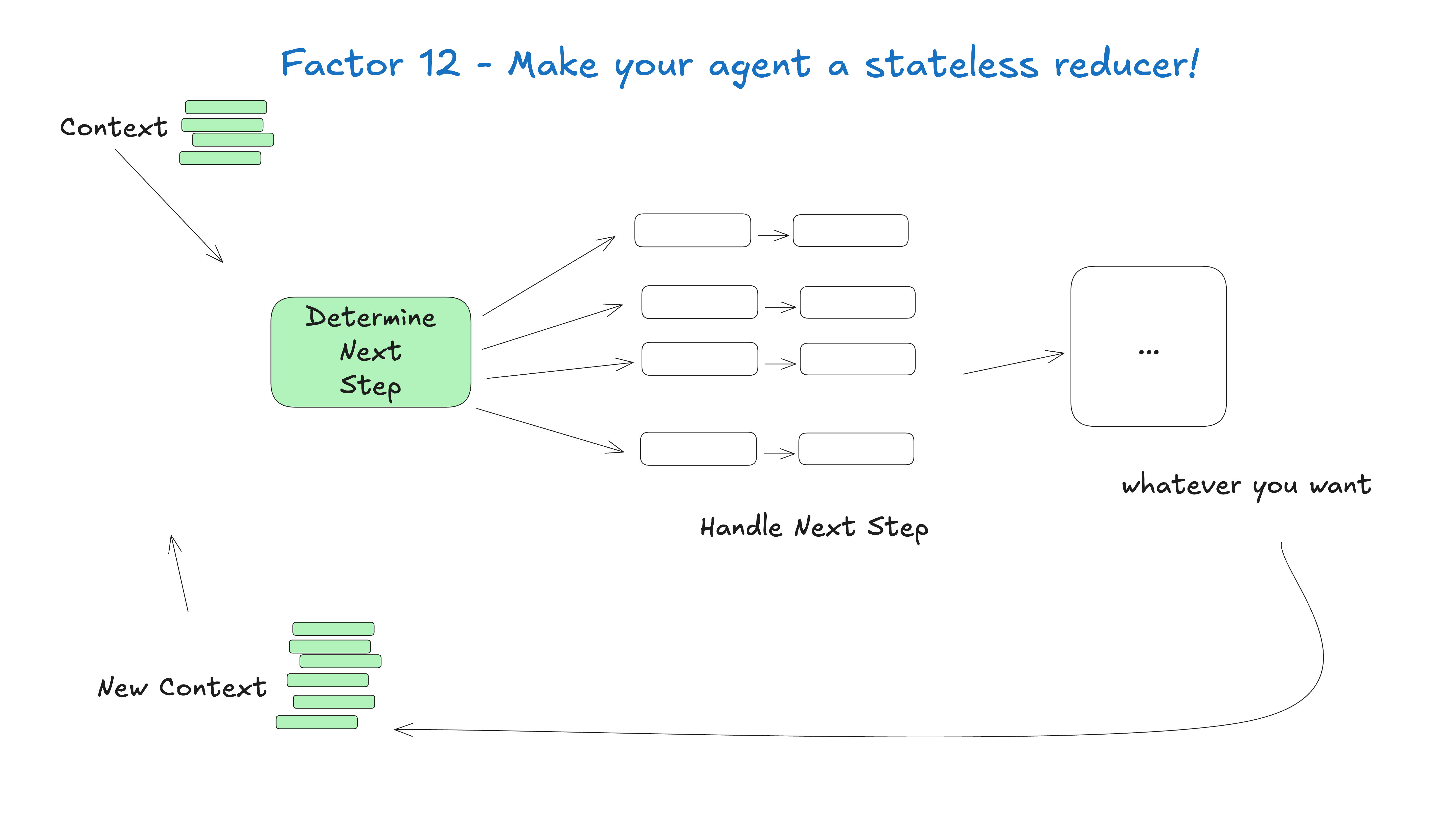

Happy-LLM (5k Stars) is a completely free, systematic tutorial on the principles and practice of large models, designed to help developers understand the core of LLMs in depth. The course starts from the basic concepts of NLP, analyzes the Transformer architecture and attention mechanisms in detail, and gives a clear overview of how pre-trained models work.

The centerpiece of the project is its hands-on approach. It walks you through implementing a complete LLaMA2 model from scratch in PyTorch, and covers the whole process of training a tokenizer, pre-training the model, and supervised fine-tuning. The course also includes hands-on material on cutting-edge techniques such as RAG (retrieval-augmented generation) and agents.
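
To make the attention mechanism at the heart of the Transformer more concrete, here is a minimal sketch of causal scaled dot-product attention for a single head. It is written in plain Python rather than PyTorch so it runs anywhere, and the function names (`softmax`, `causal_attention`) are illustrative choices, not code taken from the Happy-LLM repository.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def causal_attention(q, k, v):
    """Single-head attention. q, k, v are lists of T vectors (lists of floats).

    Position t may only attend to positions 0..t (the causal mask used by
    decoder-only models).
    """
    d = len(q[0])
    out = []
    for t, q_t in enumerate(q):
        # Scaled dot-product scores against every visible key.
        scores = [
            sum(a * b for a, b in zip(q_t, k[s])) / math.sqrt(d)
            for s in range(t + 1)
        ]
        weights = softmax(scores)
        # Output is the attention-weighted average of the visible values.
        out.append([
            sum(w * v[s][j] for s, w in enumerate(weights))
            for j in range(len(v[0]))
        ])
    return out
```

Because of the causal mask, the first position can only see itself, so its output is always its own value vector. A real implementation would batch this as matrix operations and add multiple heads.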

- open source address:

https://github.com/datawhalechina/happy-llm

2. LLM-Universe: Large Model Application Development for Beginners

LLM-Universe (8.8k Stars) is an introductory course designed for programming novices that focuses on large model application development. The tutorial is built around one representative project, an intelligent Q&A assistant based on a personal knowledge base, and the practice runs on Alibaba Cloud servers.

The course content is very practical. It covers how to call the APIs of mainstream Chinese and international large models (e.g. GPT, Wenxin Yiyan (ERNIE Bot), and Zhipu GLM), prompt engineering techniques, use of the LangChain framework, building a vector database, and finally how to use Streamlit to package the application into an interactive front-end interface.
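
The retrieval step behind a knowledge-base assistant can be sketched in a few lines. The example below is a toy under stated assumptions: `embed` is a bag-of-words stand-in for a real embedding model, a Python list stands in for the vector database, and `retrieve` and `build_prompt` are hypothetical helper names. It does not use LangChain or any model API.

```python
import math
from collections import Counter

def embed(text):
    """Toy bag-of-words 'embedding'; a real app would call an embedding model."""
    return Counter(text.lower().split())

def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(x * x for x in a.values()))
    norm_b = math.sqrt(sum(x * x for x in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(query, docs, k=1):
    """Return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]

def build_prompt(query, docs):
    """Stuff the best-matching document into the prompt sent to the model."""
    context = "\n".join(retrieve(query, docs, k=1))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"
```

The string returned by `build_prompt` is what would be passed to the chat model; in a real application the ranking would be delegated to a vector store instead of sorting a list.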

- open source address:

https://github.com/datawhalechina/llm-universe

3. Self-LLM: A Hands-On Guide to Running Open Source Large Models Locally

Self-LLM (20k Stars) bills itself as a "guide to consuming open source large models" tailored for Chinese users. Its core goal is to solve the problem of privately deploying and fine-tuning open source models in the Chinese environment. The project is valuable for developers who care about data privacy and model customization.

It provides exhaustive, step-by-step instructions, based on a Linux environment, for local deployment, full-parameter fine-tuning, and efficient LoRA fine-tuning of dozens of mainstream Chinese and international models, including LLaMA, ChatGLM, Qwen (Tongyi Qianwen), and InternLM (Shusheng Puyu). The tutorial also extends to deploying multimodal large models.
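
Why LoRA is so much cheaper than full-parameter fine-tuning is easy to see with a small sketch. LoRA freezes the original weight matrix W and learns a low-rank update, so the effective weight is W + (alpha / r) * B A, where A is r x d_in and B is d_out x r. The plain-Python helpers below (`matmul`, `lora_merge`, `trainable_params`) are illustrative names, not part of Self-LLM or any fine-tuning library.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]

def lora_merge(w, a, b, alpha):
    """Return W + (alpha / r) * B @ A, where A is r x d_in and B is d_out x r."""
    r = len(a)
    delta = matmul(b, a)
    scale = alpha / r
    return [
        [w[i][j] + scale * delta[i][j] for j in range(len(w[0]))]
        for i in range(len(w))
    ]

def trainable_params(d_out, d_in, r):
    """Compare parameter counts: full fine-tuning vs. a rank-r LoRA adapter."""
    full = d_out * d_in
    lora = r * (d_in + d_out)
    return full, lora
```

For a 4096 x 4096 projection with rank 8, `trainable_params(4096, 4096, 8)` gives 16,777,216 parameters for full fine-tuning against 65,536 for the adapter, a 256-fold reduction for that layer.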

- open source address:

https://github.com/datawhalechina/self-llm

4. LLM Cookbook: A Practical Chinese Version of Andrew Ng's Courses

LLM Cookbook (20.2k Stars) is a hands-on Chinese version of the series of large model courses by Prof. Andrew Ng. It distills and localizes the core ideas of the original courses, covering prompt engineering, RAG development, model fine-tuning, and other key topics.

A special feature of this project is that it provides bilingual Chinese and English Jupyter Notebook code to accompany the original courses and, to meet the needs of Chinese developers, specifically optimizes Chinese prompt design and API calling methods. The courses are divided into "required" and "elective" parts, so learners can progress step by step according to their own situation.

- open source address:

https://github.com/datawhalechina/llm-cookbook

Engineering and Deep Practice

After mastering the theory, the next step is putting models into real production. The following projects focus on key engineering challenges across the life cycle of a large model.

5. LLM-Action: Large Model Engineering and Field Practice

LLM-Action (19k Stars) is a technical sharing project focused on large model engineering and putting applications into production. Rather than staying at the surface like an introductory tutorial, it dives into specific technical details such as model training, inference, compression, and security.

Its content can be seen as a vast repository of technical knowledge covering:

- Training: parameter-efficient fine-tuning techniques such as LoRA, QLoRA, and P-Tuning, as well as distributed training.

- Inference optimization: in-depth explanations of mainstream industry inference frameworks such as TensorRT-LLM and vLLM.

- Model compression: a systematic introduction to techniques such as model quantization, pruning, and knowledge distillation.

- Adaptation to Chinese hardware: experience adapting to Chinese hardware platforms such as Huawei Ascend.
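
As a small illustration of the model quantization mentioned in the list above, the following sketch applies symmetric per-tensor int8 quantization to a list of weights. It is one of the simplest schemes, chosen here for clarity, and the function names are hypothetical; production frameworks typically use per-channel or per-group scales and more elaborate calibration.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    # One shared scale so that the largest magnitude maps to 127.
    scale = max_abs / 127 if max_abs else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Recover approximate float weights from the int8 values."""
    return [x * scale for x in q]
```

Each weight is stored in one byte instead of four (for float32), and the round-trip error per weight is at most half of `scale`.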

- open source address:

https://github.com/liguodongiot/llm-action

6. AI Engineering Hub: A Repository of Real-World AI Applications

AI Engineering Hub (13.2k Stars) is a rich collection of in-depth tutorials and hands-on case studies centered on real-world AI applications. Instead of dwelling on abstract theory, the project provides a large number of code examples that can be modified and run directly.

It is built around popular open source models such as DeepSeek, Llama, and Gemma, and provides a wide range of solutions including RAG, multi-agent collaboration, and multimodal applications. The project has also compiled its core tutorials into a PDF document of more than 500 pages, which works like a detailed operations manual and is well worth saving.

- open source address:

https://github.com/patchy631/ai-engineering-hub

Rebuilding from Scratch: A Deeper Understanding of Model Internals

For real insight into how LLMs work, nothing beats implementing a model from scratch.

7. Reproducing MiniMind: Train Your Own Mini GPT in Two Hours

MiniMind (22.6k Stars) is a phenomenal open source project that shows ordinary developers can train their own GPT from scratch. With this project, a single NVIDIA 3090 graphics card and about 2 hours are enough to train an ultra-lightweight GPT model only 26 MB in size.

This project is valuable because it re-implements all the core algorithms, such as the Transformer decoder, rotary position embedding (RoPE), and the SwiGLU activation function, in native PyTorch, without relying on the highly encapsulated interfaces of third-party libraries. It reproduces the complete pipeline from pre-training, supervised fine-tuning (SFT), and LoRA adaptation to DPO (direct preference optimization) alignment, making it an excellent textbook for understanding the low-level details of LLMs.
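
To give a feel for one of these building blocks, here is a minimal plain-Python sketch of rotary position embedding (RoPE). It is not MiniMind's code: the function name `rope`, the interleaved pairing of dimensions, and the default base of 10000 are assumptions made for illustration (some implementations pair dimension i with i + d/2 instead).

```python
import math

def rope(vec, pos, base=10000.0):
    """Rotate consecutive pairs (x0, x1), (x2, x3), ... of an even-length vector.

    The pair starting at even index i is rotated by the angle
    pos * base ** (-i / d), so later pairs rotate more slowly.
    """
    d = len(vec)
    out = []
    for i in range(0, d, 2):
        theta = pos * base ** (-i / d)
        c, s = math.cos(theta), math.sin(theta)
        x, y = vec[i], vec[i + 1]
        out += [x * c - y * s, x * s + y * c]
    return out

def dot(a, b):
    """Plain dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))
```

The useful property is that the dot product of a rotated query at position m and a rotated key at position n depends only on the offset m - n, which is how attention scores become aware of relative position. For example, `dot(rope(q, 5), rope(k, 3))` and `dot(rope(q, 12), rope(k, 10))` agree up to floating-point error.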

- open source address:

https://github.com/jingyaogong/minimind

International Perspective: Authoritative Courses from Top Institutions and Experts

Finally, open source courses from top international researchers and tech giants provide an authoritative and cutting-edge perspective on LLM learning.

8. LLM Course: The Path to Becoming an LLM Scientist

LLM Course (56.5k Stars), created by Maxime Labonne, is a comprehensive course designed for advanced learners. It clearly divides the learning path into three main parts: LLM Fundamentals, The LLM Scientist, and The LLM Engineer.

The project offers a large number of notebooks that can be run directly in Google Colab. The content covers advanced topics such as fine-tuning models with QLoRA and DPO, GGUF and GPTQ quantization, and merging models with mergekit. It also provides automated tools such as AutoQuant and LazyMergekit to help developers complete model optimization efficiently.
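
For readers new to DPO, the objective itself is compact enough to sketch. Given the summed log-probabilities that the trained policy and a frozen reference model assign to a preferred ("chosen") and a dispreferred ("rejected") answer, the per-pair loss is the negative log-sigmoid of a scaled margin. The function below is an illustrative plain-Python version with assumed argument names and an assumed default of beta = 0.1; it is not taken from the course notebooks or from any training library.

```python
import math

def dpo_loss(pi_chosen, pi_rejected, ref_chosen, ref_rejected, beta=0.1):
    """Per-pair DPO loss computed from sequence log-probabilities.

    margin measures how much more the policy favors the chosen answer over
    the rejected one, relative to the reference model.
    """
    margin = (pi_chosen - ref_chosen) - (pi_rejected - ref_rejected)
    z = beta * margin
    # -log(sigmoid(z)), written in a numerically stable form.
    if z >= 0:
        return math.log1p(math.exp(-z))
    return -z + math.log1p(math.exp(z))
```

When the policy equals the reference, the margin is zero and the loss is log 2; the loss falls as the policy shifts probability toward the chosen answer and rises if it shifts toward the rejected one.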

- open source address:

https://github.com/mlabonne/llm-course

9. Generative AI for Beginners: The Definitive Introductory Tutorial from Microsoft

Generative AI for Beginners (87.5k Stars) is Microsoft's official introductory course on generative AI. It is of extremely high quality and well suited to developers with no prior background. The course contains 21 well-designed lessons covering core topics that range from prompt engineering fundamentals and building text-to-image applications to integrating RAG and agents.

The course offers Python and TypeScript code samples, with special emphasis on the important topic of responsible AI. It also describes how to use low-code tools such as Gradio to build application prototypes quickly, which greatly lowers the learning threshold.

- open source address:

https://github.com/microsoft/generative-ai-for-beginners