dots.ocr is a multilingual document parsing tool built on a 1.7B-parameter vision-language model (VLM) that performs both layout detection and content recognition. It reports state-of-the-art performance on benchmarks such as OmniDocBench, especially for text, table, and reading-order parsing. dots.ocr supports multiple languages, including low-resource languages, and is suited to processing complex documents such as academic papers and financial reports. Compared with traditional multi-model pipelines, dots.ocr uses a single-model architecture in which switching between tasks only requires changing the input prompt, which keeps inference fast and efficient. Users can deploy it quickly using the open-source code on GitHub and the provided Docker image.

Function List

- Layout Detection: Recognizes elements in a document (e.g., text, tables, formulas, images, etc.) and provides precise bounding box (bbox) coordinates.

- Content recognition: Extracts text, tables (output in HTML format), formulas (output in LaTeX format), etc. from documents.

- Multi-language support: Parses documents in 100 languages, with particularly strong results on low-resource languages.

- Reading order optimization: Sorts document elements according to human reading habits to ensure logical output.

- Fast inference: The compact 1.7B-parameter model offers faster inference than many larger models.

- Flexible prompt switching: Task-specific parsing is selected through different prompts (e.g. prompt_layout_only_en, prompt_ocr).

- Output diversity: Generates structured layout data in JSON format, Markdown files, and visualization images with bounding boxes.

Usage Guide

Installation process

To use dots.ocr, you first need to install the necessary environment and model weights. Below are the detailed installation steps:

- Create a virtual environment:

conda create -n dots_ocr python=3.12
conda activate dots_ocr

- Clone the code repository:

git clone https://github.com/rednote-hilab/dots.ocr.git
cd dots.ocr

- Install PyTorch and dependencies:

Install the PyTorch build that matches your CUDA version. For example:

pip install torch==2.7.0 torchvision==0.22.0 torchaudio==2.7.0 --index-url https://download.pytorch.org/whl/cu128
pip install -e .

- Download the model weights:

Use the provided script to download the model weights. Note that the folder name of the model save path must not contain periods; DotsOCR is the recommended name:

python3 tools/download_model.py

- Use the Docker image (optional):

If you encounter installation problems, you can use the officially provided Docker image:

git clone https://github.com/rednote-hilab/dots.ocr.git
cd dots.ocr
pip install -e .
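The folder-name rule above can be checked before anything is launched. The following is a minimal sketch, assuming that only the final folder name matters; `is_valid_model_dir` is a hypothetical helper and is not part of dots.ocr.

```python
from pathlib import Path


def is_valid_model_dir(path: str) -> bool:
    """Return True if the final folder name contains no period.

    Hypothetical helper for illustration; dots.ocr does not ship this function.
    """
    # Path(...).name is the last path component, e.g. "DotsOCR".
    return "." not in Path(path).name


print(is_valid_model_dir("./weights/DotsOCR"))   # True
print(is_valid_model_dir("./weights/dots.ocr"))  # False
```

A check like this could run just before the weights are downloaded or before the vLLM service is started, so that a badly named folder is caught early.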

Deployment method

vLLM is the recommended way to deploy dots.ocr for optimal inference performance. The steps for a vLLM-based deployment are as follows:

- Register the model with vLLM:

python3 tools/download_model.py
export hf_model_path=./weights/DotsOCR
export PYTHONPATH=$(dirname "$hf_model_path"):$PYTHONPATH
sed -i '/^from vllm\.entrypoints\.cli\.main import main$/a\
from DotsOCR import modeling_dots_ocr_vllm' `which vllm`

- Start the vLLM service:

CUDA_VISIBLE_DEVICES=0 vllm serve ${hf_model_path} --tensor-parallel-size 1 --gpu-memory-utilization 0.95 --chat-template-content-format string --served-model-name model --trust-remote-code

- Run the vLLM API example:

python3 ./demo/demo_vllm.py --prompt_mode prompt_layout_all_en

Alternatively, use HuggingFace for inference:

python3 demo/demo_hf.py
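Because `vllm serve` exposes an OpenAI-compatible HTTP API, a request to the running service can be sketched roughly as below. This is an illustration under stated assumptions, not the repository's client: `build_chat_request` is a hypothetical helper, the prompt text is a placeholder (the real prompt strings and any special tokens are defined in the dots.ocr repository), and demo/demo_vllm.py remains the authoritative reference.

```python
import base64
import json


def build_chat_request(image_bytes: bytes, prompt: str, model: str = "model") -> dict:
    """Build an OpenAI-style chat payload holding one image and one text prompt.

    Hypothetical helper; the payload shape follows the OpenAI chat format.
    """
    image_b64 = base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": model,  # matches --served-model-name in the serve command
        "messages": [
            {
                "role": "user",
                "content": [
                    {
                        "type": "image_url",
                        "image_url": {"url": f"data:image/jpeg;base64,{image_b64}"},
                    },
                    {"type": "text", "text": prompt},
                ],
            }
        ],
    }


# Placeholder bytes and prompt text, for illustration only.
payload = build_chat_request(b"\xff\xd8\xff", "PLACEHOLDER PROMPT TEXT")
print(json.dumps(payload)[:80])
```

Assuming vLLM's default port, the payload would be sent as a JSON POST to http://localhost:8000/v1/chat/completions.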

Document parsing operations

After the vLLM service has started, you can parse an image or PDF file with the following commands:

- Parse a single image:

python3 dots_ocr/parser.py demo/demo_image1.jpg

- Parse a PDF file:

For multi-page PDFs, it is recommended to set a larger number of threads:

python3 dots_ocr/parser.py demo/demo_pdf1.pdf --num_threads 64

- Layout detection only:

python3 dots_ocr/parser.py demo/demo_image1.jpg --prompt prompt_layout_only_en

- Extract text only (excluding headers and footers):

python3 dots_ocr/parser.py demo/demo_image1.jpg --prompt prompt_ocr

- Bounding-box-based parsing:

python3 dots_ocr/parser.py demo/demo_image1.jpg --prompt prompt_grounding_ocr --bbox 163 241 1536 705
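When these commands need to be scripted, for instance to process a folder of files, the argument list can be assembled in Python. The sketch below uses only the flags shown above (--prompt, --bbox, --num_threads); `build_parser_command` is a hypothetical helper, and the four --bbox integers are passed through in the order given without interpreting them.

```python
import shlex
from typing import List, Optional, Sequence


def build_parser_command(
    input_path: str,
    prompt: Optional[str] = None,
    bbox: Optional[Sequence[int]] = None,
    num_threads: Optional[int] = None,
) -> List[str]:
    """Assemble an argument list for dots_ocr/parser.py (hypothetical helper)."""
    cmd = ["python3", "dots_ocr/parser.py", input_path]
    if prompt is not None:
        cmd += ["--prompt", prompt]
    if bbox is not None:
        if len(bbox) != 4:
            raise ValueError("bbox needs exactly four integers")
        cmd += ["--bbox", *map(str, bbox)]
    if num_threads is not None:
        cmd += ["--num_threads", str(num_threads)]
    return cmd


cmd = build_parser_command(
    "demo/demo_image1.jpg", prompt="prompt_grounding_ocr", bbox=(163, 241, 1536, 705)
)
print(shlex.join(cmd))
```

The resulting list can be passed to `subprocess.run(cmd, check=True)` from the repository root while the vLLM service is running.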

Output results

After parsing, dots.ocr generates the following files:

- JSON file (e.g. demo_image1.json): contains the bounding box, category, and text content of each layout element.

- Markdown file (e.g. demo_image1.md): merges all detected text content into Markdown format; an additional version, demo_image1_nohf.md, excludes headers and footers.

- Visualization image (e.g. demo_image1.jpg): draws the detected bounding boxes on the original image.
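The JSON output can be post-processed with standard tooling. The sketch below is illustrative only: the sample data is invented, the field names `bbox`, `category`, and `text` are assumptions based on the description above, and the box layout is assumed to be [x1, y1, x2, y2]. Check a real output file before relying on any of these.

```python
import json
from collections import Counter

# Hypothetical sample imitating the described output; real field names may differ.
sample = """
[
  {"bbox": [100, 50, 900, 120], "category": "Title", "text": "Annual Report"},
  {"bbox": [100, 150, 900, 400], "category": "Text", "text": "Revenue grew."},
  {"bbox": [100, 420, 900, 700], "category": "Table", "text": "<table></table>"}
]
"""


def bbox_area(bbox):
    """Area of a box, assuming [x1, y1, x2, y2] corner coordinates."""
    x1, y1, x2, y2 = bbox
    return max(0, x2 - x1) * max(0, y2 - y1)


elements = json.loads(sample)

# Count how many elements fall into each layout category.
counts = Counter(el["category"] for el in elements)

for el in elements:
    print(el["category"], bbox_area(el["bbox"]), el["text"][:30])
```

With a real file, `json.loads(sample)` would be replaced by `json.load` on an opened demo_image1.json.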

Running Demo

The interactive demo interface can be started with the following command:

python demo/demo_gradio.py

Or run the bounding-box-based OCR demo:

python demo/demo_gradio_annotion.py

Caveats

- Model save path: Ensure that the folder name of the model save path does not contain periods (e.g. use DotsOCR); otherwise module loading errors may occur.

- Image resolution: Images of no more than 11289600 pixels are recommended, with a DPI setting of 200 when parsing PDFs.

- Special character handling: Consecutive special characters (e.g. ... or _) may cause abnormal output; in that case it is recommended to use the prompt_layout_only_en or prompt_ocr prompt.
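The pixel limit in the resolution caveat can be enforced before an image is sent for parsing. The following is a minimal sketch that computes a target size preserving the aspect ratio; `fit_within_pixel_limit` is a hypothetical helper, and the actual resizing would be done with whatever image library you already use.

```python
import math

MAX_PIXELS = 11289600  # limit quoted in the caveat above


def fit_within_pixel_limit(width: int, height: int, max_pixels: int = MAX_PIXELS):
    """Return (new_width, new_height) scaled down so the area fits max_pixels.

    Hypothetical helper; sizes already within the limit are returned unchanged.
    """
    if width * height <= max_pixels:
        return width, height
    scale = math.sqrt(max_pixels / (width * height))
    # Floor both sides so rounding cannot push the area over the limit.
    return max(1, math.floor(width * scale)), max(1, math.floor(height * scale))


print(fit_within_pixel_limit(3000, 2000))  # already within the limit
print(fit_within_pixel_limit(6000, 4000))  # scaled down, same aspect ratio
```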

Application scenarios

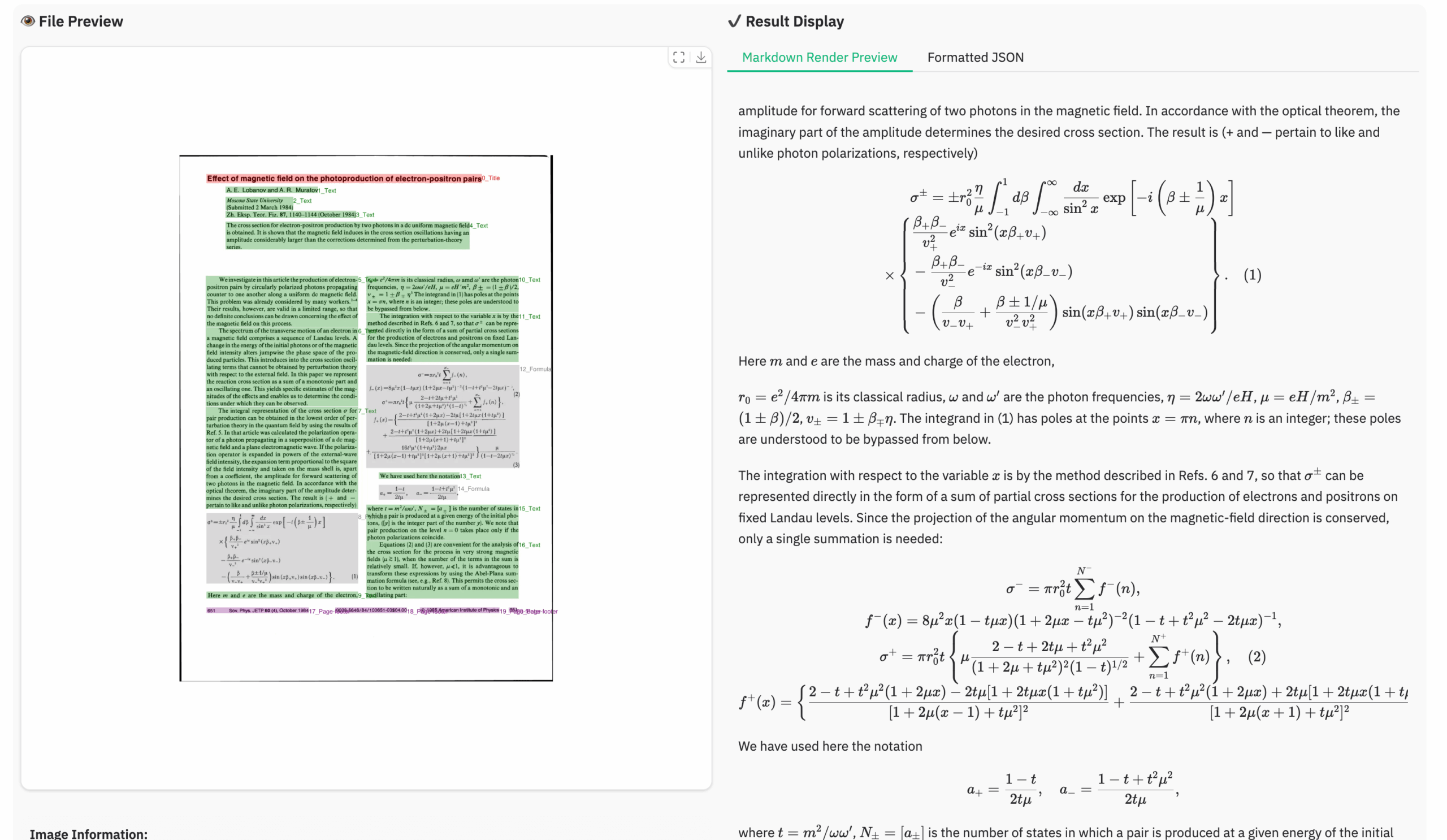

- Analysis of academic papers

dots.ocr efficiently parses text, formulas, and tables in academic papers to produce structured JSON data and Markdown documents that help researchers organize their literature.

- Processing of financial reports

For financial reports, dots.ocr accurately extracts table and text content and generates tables in HTML format for easy data analysis and archiving.

- Multilingual documents

Supports parsing in 100 languages, suitable for handling multilingual contracts, legal documents, etc., ensuring accurate extraction of content and layout.

- Organization of educational materials

Parses textbooks, test papers, and other educational materials to extract formulas (in LaTeX format) and text, making it easy for teachers and students to organize learning resources.
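Since tables are emitted as HTML, downstream analysis usually starts by turning that HTML back into rows. The sketch below does this with Python's standard-library `html.parser`; the table string is an invented stand-in for a parsed table element, and `TableRows` is a hypothetical helper that does not handle merged cells (rowspan/colspan).

```python
from html.parser import HTMLParser


class TableRows(HTMLParser):
    """Collect the cell text of an HTML table as a list of rows."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._row = None   # cells of the row currently being read
        self._cell = None  # text fragments of the cell currently being read

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None


# Hypothetical table string standing in for a parsed table element.
table_html = (
    "<table><tr><th>Item</th><th>2023</th></tr>"
    "<tr><td>Revenue</td><td>120</td></tr></table>"
)
parser = TableRows()
parser.feed(table_html)
print(parser.rows)
```

The resulting list of rows can then be written to CSV or loaded into a data-analysis library.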

QA

- What languages does dots.ocr support?

dots.ocr supports 100 languages, including English, Chinese, Tibetan, and Russian, and performs especially well on low-resource languages.

- How do I handle large PDF files?

Use the parser.py script and set the --num_threads parameter (e.g. 64) to speed up parsing of multi-page PDFs.

- How are the parsing results output?

Results include JSON files (structured data), Markdown files (text content), and visualization images (with bounding boxes).

- How do I resolve model loading errors?

Ensure that the model save path does not contain periods (e.g. by using DotsOCR) and check that the vLLM registration script has been executed correctly.