Let's start with a simple task: scheduling a meeting.

Suppose a user says, "Hey, let's see if we can do a quick sync tomorrow?"

An AI that relies only on prompt engineering might reply, "Yes, tomorrow is fine. What time would you like to schedule it?" This response, while correct, is mechanical and unhelpful because it lacks any understanding of the real-world situation.

Now envision another AI. When it receives the same request, it not only sees the phrase but also has immediate access to a rich information environment: your calendar shows that tomorrow is fully booked; your email history with the other person suggests an informal communication style; the contact list labels the other person as an important partner; and the AI has tool access to send meeting invitations and emails directly.

At that point, it would respond with something like, "Hey Jim! I checked, and my schedule is completely full tomorrow. There seems to be an opening on Thursday morning. Would that work for you? If so, I'll just send an invite."

The difference is not that the second AI model is "smarter" per se, but that it operates in a more complete "context". This paradigm shift, from optimizing a single instruction to building dynamic information environments, is the most important evolution in AI application development: the move from prompt engineering to context engineering.

What is context engineering?

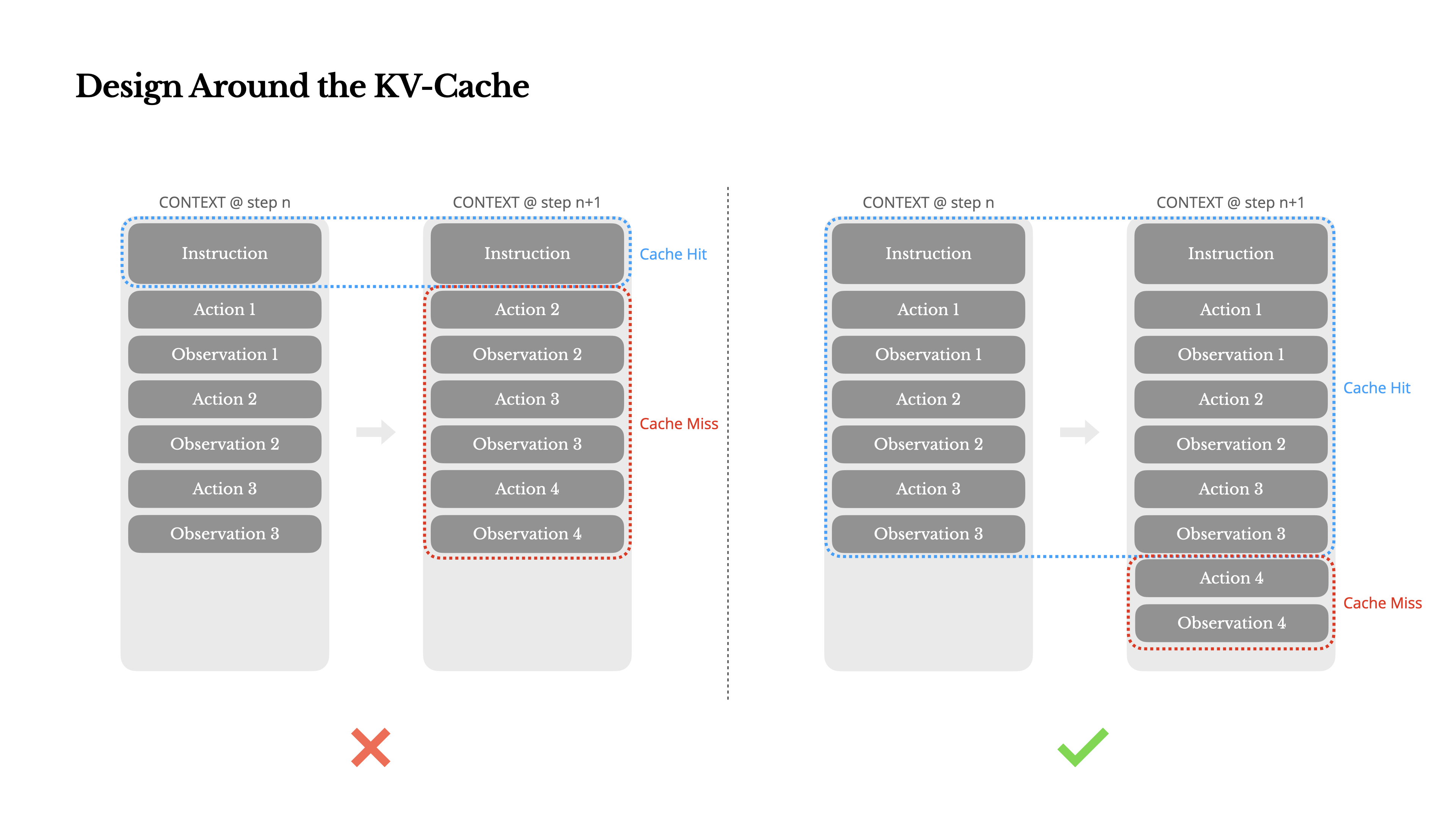

Andrej Karpathy offers an excellent analogy: the large language model (LLM) is like a new kind of operating system, and its context window is the RAM. The core task of context engineering is to manage this RAM efficiently, loading the most critical information before each operation.

A more engineering-oriented definition: context engineering is the discipline of designing and building dynamic systems that give large language models the right information and tools, at the right time and in the right format, to solve the problem at hand.

The "context" it manages is a multi-dimensional system with seven components:

- Instructions. System-level prompts that define the AI's identity and code of conduct.

- User Prompt. The specific question or task raised by the user.

- Short-term memory (State / History). The interaction history of the current conversation, which keeps the dialog coherent.

- Long-term memory. Persistent knowledge across multiple conversations, such as user preferences.

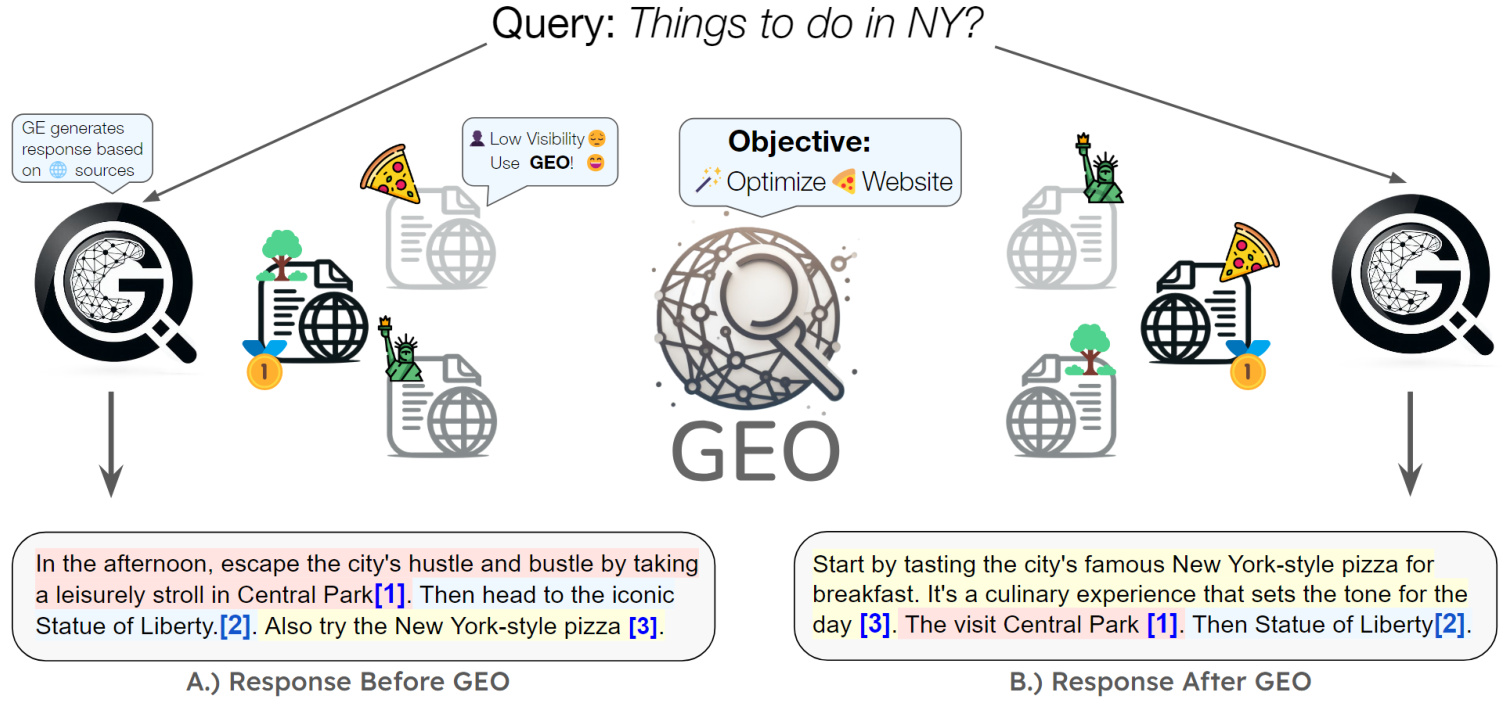

- Retrieved Information. Knowledge fetched from external sources in real time through techniques such as retrieval-augmented generation (RAG).

- Available Tools. The list of functions or APIs that the AI can call.

- Structured Output. A definition of the AI's output format, such as a request to return JSON.
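To make the seven components concrete, here is a minimal sketch of how they might be assembled into a single prompt. The `ContextBundle` class, its field names, and the section labels are all hypothetical and chosen only for illustration; a real system would populate each field dynamically from a calendar, a memory store, a retriever, and so on.

```python
from dataclasses import dataclass, field


@dataclass
class ContextBundle:
    """Hypothetical container for the seven context components."""
    instructions: str
    user_prompt: str
    history: list[str] = field(default_factory=list)            # short-term memory
    long_term_memory: list[str] = field(default_factory=list)
    retrieved: list[str] = field(default_factory=list)          # e.g. RAG results
    tools: list[str] = field(default_factory=list)              # tool signatures
    output_format: str = ""                                     # e.g. "JSON"

    def render(self) -> str:
        """Join the non-empty components into one labeled prompt string."""
        sections = [
            ("Instructions", self.instructions),
            ("Long-term memory", "\n".join(self.long_term_memory)),
            ("Retrieved information", "\n".join(self.retrieved)),
            ("Available tools", "\n".join(self.tools)),
            ("Conversation history", "\n".join(self.history)),
            ("Output format", self.output_format),
            ("User prompt", self.user_prompt),
        ]
        return "\n\n".join(f"## {name}\n{body}" for name, body in sections if body)


bundle = ContextBundle(
    instructions="You are a scheduling assistant.",
    user_prompt="Can we do a quick sync tomorrow?",
    long_term_memory=["User prefers informal replies."],
    retrieved=["Calendar: tomorrow is fully booked; Thursday morning is free."],
    tools=["send_invite(attendee, start_time)"],
    output_format="Plain-text reply",
)
prompt = bundle.render()
print(prompt)
```

Empty components (here, the conversation history) are simply skipped, so the window only carries what is actually available for this call.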

Why is context so critical? The four modes of failure

When an agent system performs poorly, the root cause is often poor context management. An overly long or low-quality context typically leads to one of the following four failure modes:

- Context Poisoning. Incorrect information, or content hallucinated by the model, enters the context and contaminates subsequent decisions.

- Context Distraction. Too much extraneous information swamps the core instructions, causing the model to drift away from the task goal.

- Context Confusion. Ambiguous or unnecessary details in the context degrade the accuracy of the final output.

- Context Clash. Different parts of the context contradict each other factually or logically.

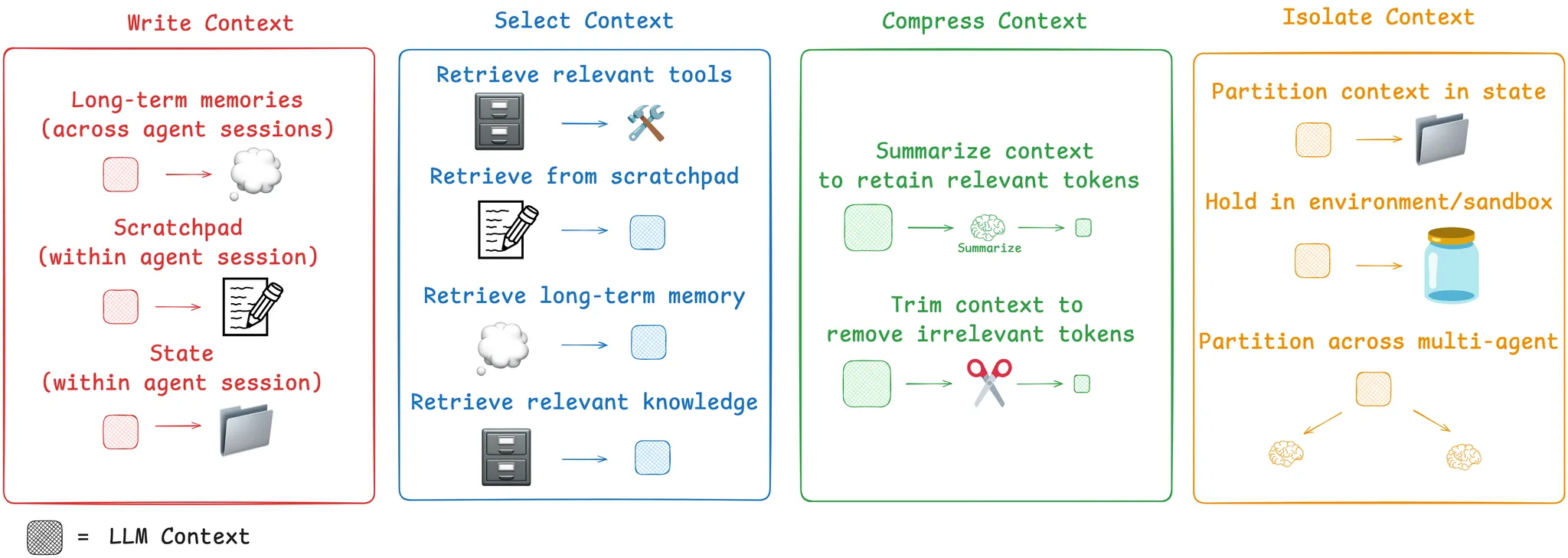

To address these challenges systematically, developers have summarized four core strategies for managing context.

Core Strategies for Context Engineering

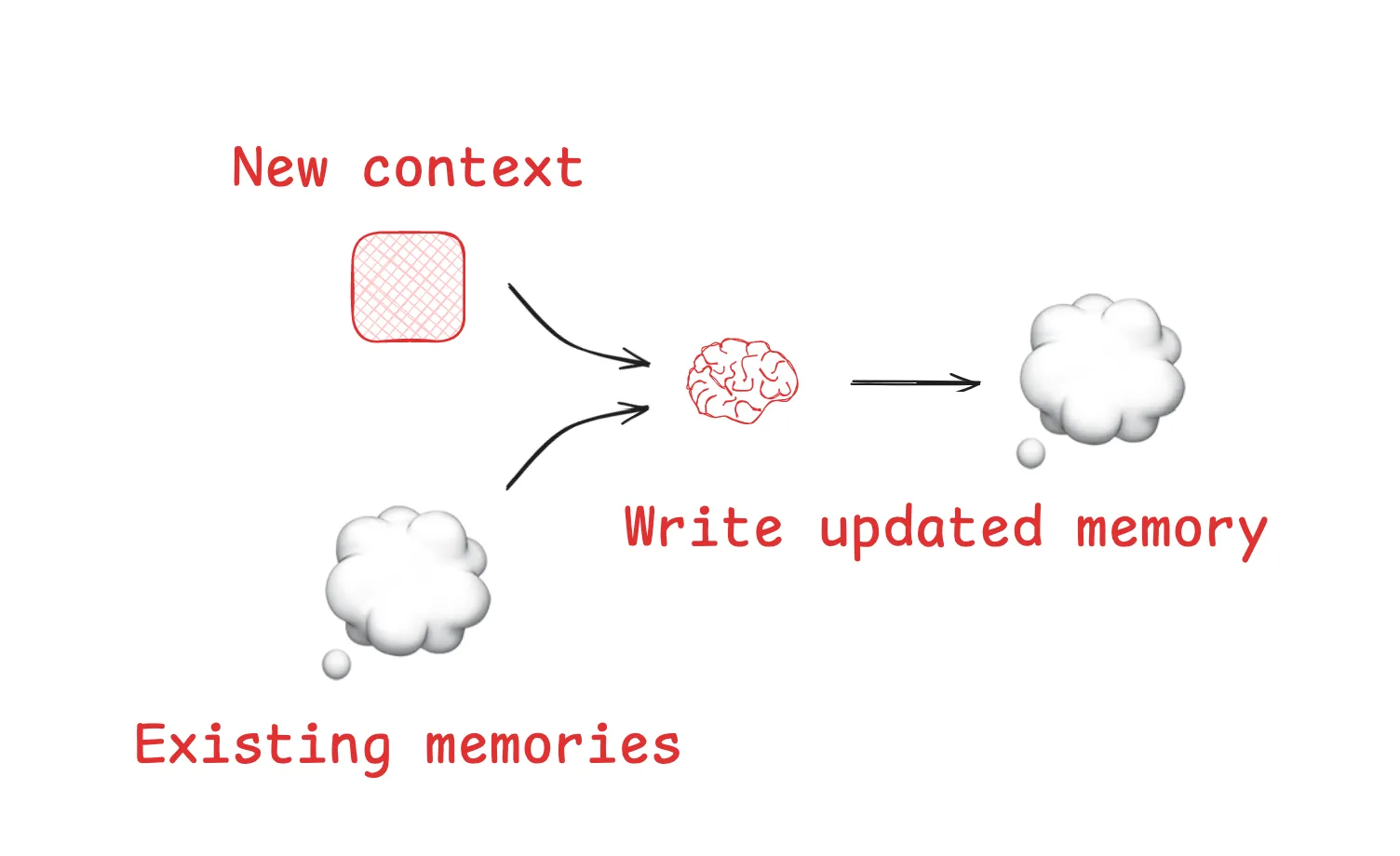

1. Write: save information to external storage

"Write" means giving the agent the ability to take notes and store key information outside the limited context window.

- Scratchpads. A type of short-term memory within a task. For example, Anthropic's research showed that its LeadResearcher agent "writes" a well-thought-out plan to external memory before executing complex tasks, which prevents the core plan from being lost in a long context.

- Memories. Allow the agent to remember information across sessions. Examples include ChatGPT's memory feature and the development tools Cursor and Windsurf, all of which contain mechanisms for automatically generating and preserving long-term memories based on user interactions.
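The "write" idea can be sketched with a small note store that lives outside the context window. The `Scratchpad` class and the JSON file layout are assumptions made for illustration; this is not how Anthropic, ChatGPT, Cursor, or Windsurf implement their memory features.

```python
import json
import tempfile
from pathlib import Path
from typing import Optional


class Scratchpad:
    """Hypothetical note store kept outside the context window (a JSON file)."""

    def __init__(self, path: Path):
        self.path = path

    def _load(self) -> dict:
        # Start from an empty note set if nothing has been written yet.
        return json.loads(self.path.read_text()) if self.path.exists() else {}

    def write(self, key: str, note: str) -> None:
        notes = self._load()
        notes[key] = note
        self.path.write_text(json.dumps(notes, indent=2))

    def read(self, key: str) -> Optional[str]:
        return self._load().get(key)


pad = Scratchpad(Path(tempfile.mkdtemp()) / "scratchpad.json")
pad.write("plan", "1) check calendar 2) propose Thursday 3) send invite")

# Even if the context window is later truncated or reset,
# the plan can be read back from external storage.
recovered_plan = pad.read("plan")
print(recovered_plan)
```

Pointing the same store at a persistent path instead of a temporary directory would turn this task-level scratchpad into a simple cross-session memory.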

2. Select: pull relevant context into the window on demand

"Select" is responsible for picking out the parts of a huge body of external information that matter most to the current task and placing them in the context window at the right time.

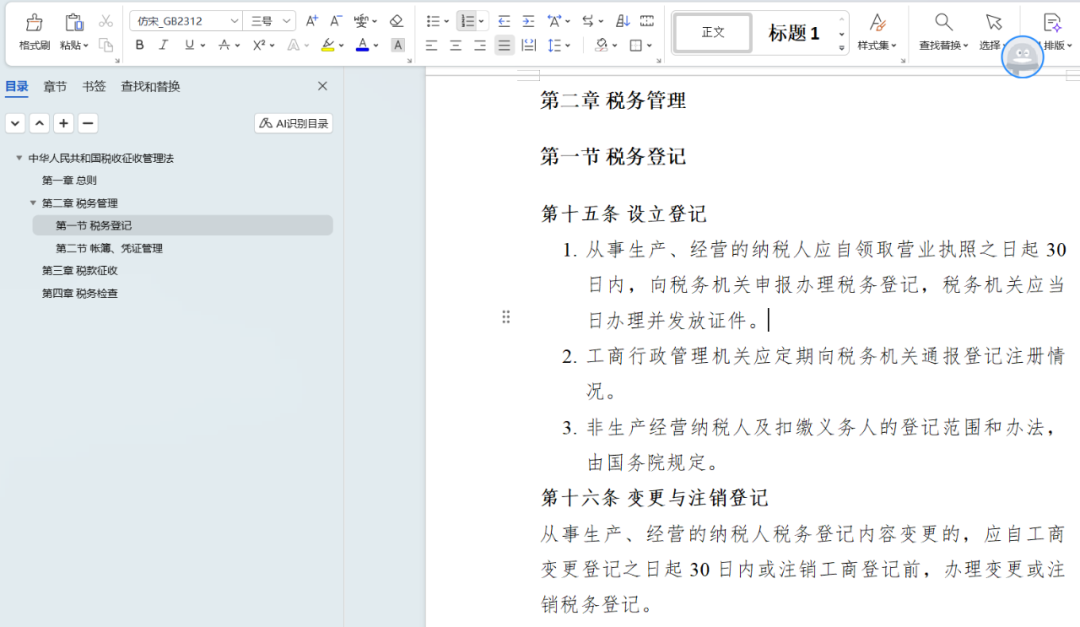

- Select from a specific file. Some mature agents obtain context by reading specific files. For example, Claude Code reads the CLAUDE.md file, while Cursor uses rules files to load coding specifications. This is an efficient way of selecting "procedural memory".

- Select from the toolset. When a very large number of tools is available, RAG techniques can dynamically retrieve and "select" the tools most relevant to the task at hand, improving tool-selection accuracy several times over.
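Tool selection can be sketched as a ranking problem: score each tool description against the task and expose only the top few. The example below uses plain word overlap as a stand-in for the embedding similarity a real RAG pipeline would use, and the tool names and descriptions are hypothetical.

```python
import re

# Hypothetical tool registry: name -> natural-language description.
TOOLS = {
    "send_invite": "Send a calendar meeting invite to an attendee",
    "search_email": "Search the email history with a contact",
    "get_weather": "Get the weather forecast for a city",
    "check_calendar": "Check calendar availability for a date",
}


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of alphabetic words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def select_tools(task: str, tools: dict[str, str], k: int = 2) -> list[str]:
    """Return the k tool names whose descriptions share the most words with the task."""
    task_words = tokenize(task)
    ranked = sorted(
        tools,
        key=lambda name: len(task_words & tokenize(tools[name])),
        reverse=True,
    )
    return ranked[:k]


selected = select_tools("check my calendar and send a meeting invite", TOOLS)
print(selected)
```

Only the selected tools' descriptions would then be placed in the context window, so the model chooses among two candidates rather than the full registry.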

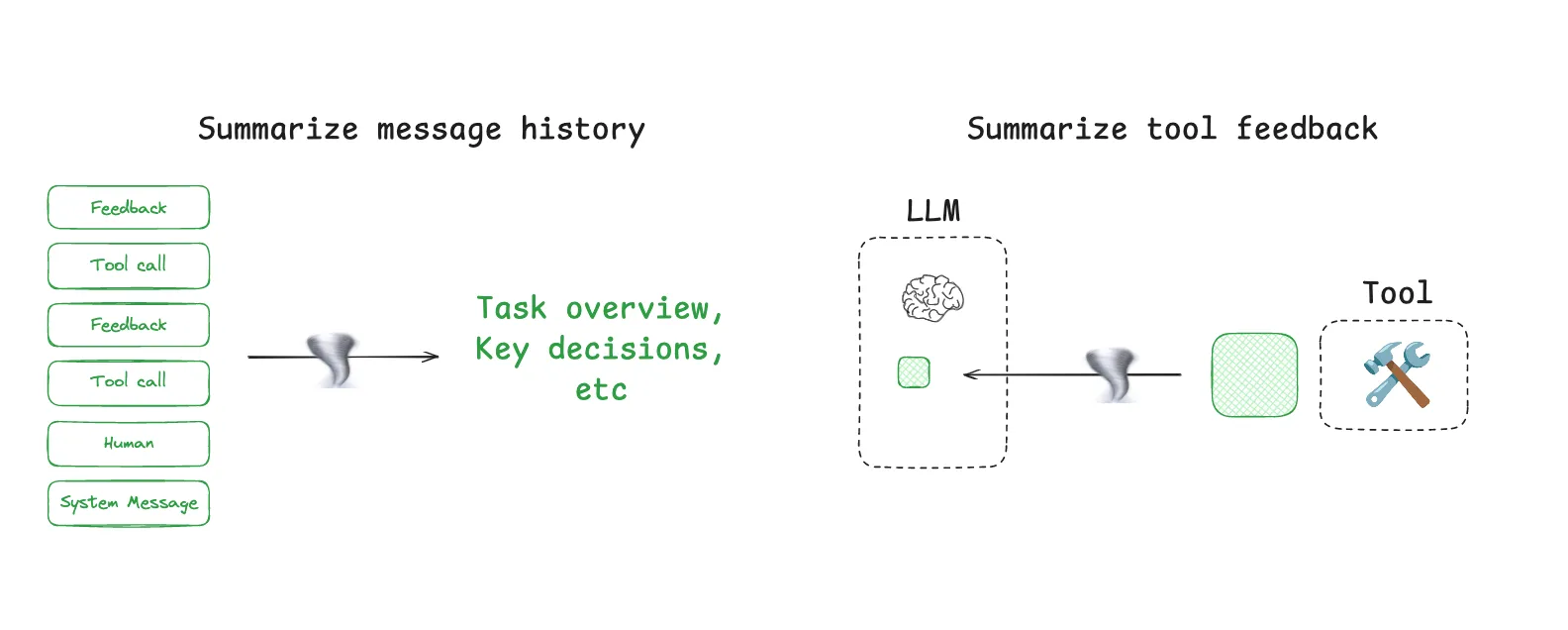

3. Compress: keep the essentials, reduce redundancy

"Compression" aims to reduce the number of tokens occupied by the context without losing critical information.

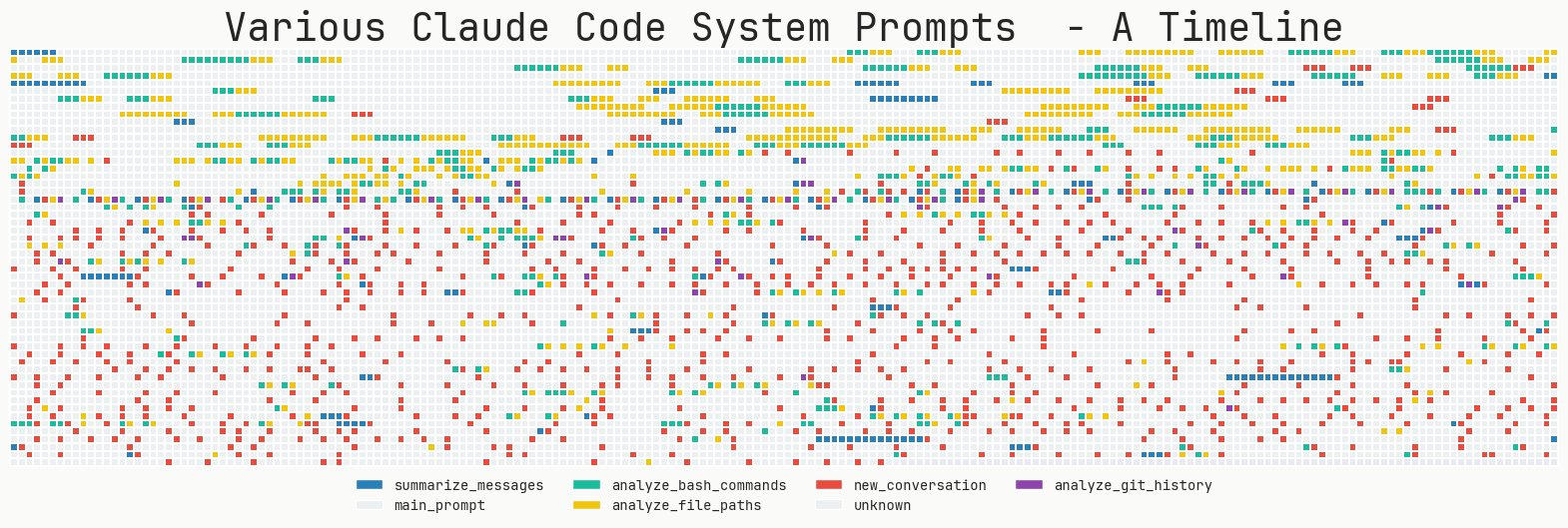

- Context Summarization. When the conversation history becomes too long, an LLM can be called to summarize it. A typical example is Claude Code's "auto-compact" feature, which automatically summarizes the complete conversation history when context usage exceeds 95%.

- Context Trimming. A form of rule-based filtering. The simplest approach is to remove the earliest rounds of dialog, while more advanced approaches use specially trained "context pruner" models to identify and remove unimportant information.
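Both compression techniques can be sketched in a few lines. This is an illustrative sketch only: `count_tokens` is a crude word counter standing in for a real tokenizer, `fake_summarize` is a placeholder for an LLM call, and `auto_compact` merely mirrors the 95% threshold described above rather than reproducing Claude Code's actual implementation.

```python
def count_tokens(text: str) -> int:
    """Crude stand-in for a real tokenizer: one token per whitespace-separated word."""
    return len(text.split())


def trim_history(messages: list[str], budget: int) -> list[str]:
    """Trimming: drop the oldest messages until the remainder fits the token budget."""
    kept: list[str] = []
    used = 0
    for msg in reversed(messages):      # walk from newest to oldest
        cost = count_tokens(msg)
        if used + cost > budget:
            break
        kept.append(msg)
        used += cost
    return list(reversed(kept))


def auto_compact(messages, budget, summarize, threshold=0.95):
    """Summarization: once usage exceeds threshold * budget, replace the history with a summary."""
    used = sum(count_tokens(m) for m in messages)
    if used <= threshold * budget:
        return messages
    return [summarize(messages)]


def fake_summarize(messages):
    # Placeholder: a real system would call an LLM here.
    return f"[summary of {len(messages)} earlier messages]"


history = [
    "user: can we sync tomorrow",
    "assistant: tomorrow is full",
    "user: what about thursday morning",
    "assistant: thursday works",
]
trimmed = trim_history(history, budget=8)
compacted = auto_compact(history, budget=17, summarize=fake_summarize)
print(trimmed)
print(compacted)
```

Trimming is cheap but loses the early turns outright, while summarization costs an extra model call but keeps a condensed trace of them.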

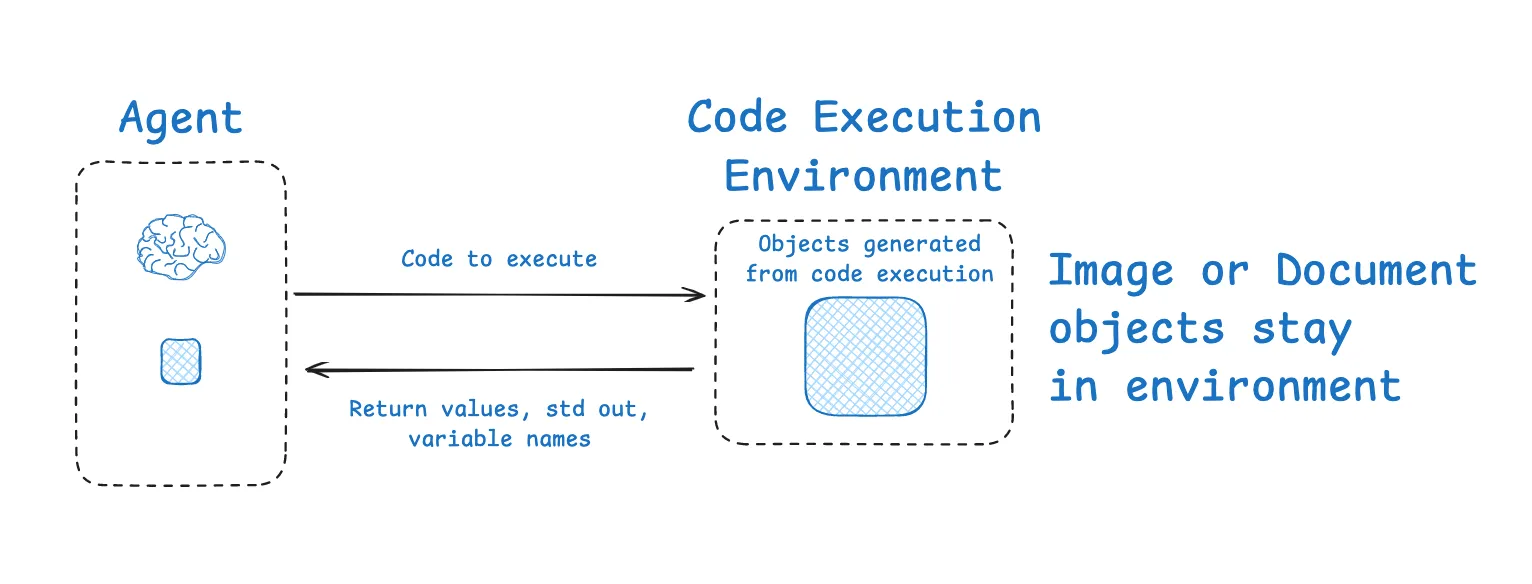

4. Isolate: split contexts to cope with complex tasks

The core idea of "isolation" is "divide and conquer", breaking down a large task so that different contexts are processed in isolated environments.

- Multi-agent. Decompose a complex task across multiple expert agents, each with an independent context window. Anthropic found that teams of multiple sub-agents working in parallel far outperform a single agent with a huge context on research tasks.

- Sandboxed Environments. For code-execution tasks, the execution process can be isolated in a sandbox. HuggingFace's CodeAgent is an example: the LLM generates the code, and after the code runs in the sandbox, only the most critical execution results are returned to the LLM's context, not the full execution log.
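The sandbox pattern can be sketched by running generated code in a separate process and handing back only its final output line. This is a simplified illustration: a child Python process is not a real security sandbox, `run_isolated` is a hypothetical helper, and the sketch is not how HuggingFace's CodeAgent is implemented.

```python
import subprocess
import sys


def run_isolated(code: str, timeout: float = 10.0) -> str:
    """Run code in a separate Python process and return only its last output line."""
    result = subprocess.run(
        [sys.executable, "-c", code],
        capture_output=True,
        text=True,
        timeout=timeout,
    )
    if result.returncode != 0:
        # Surface only the final traceback line, not the whole error log.
        lines = result.stderr.strip().splitlines()
        return "ERROR: " + (lines[-1] if lines else "unknown failure")
    lines = result.stdout.strip().splitlines()
    return lines[-1] if lines else ""


# Stand-in for LLM-generated code that produces a very noisy log.
generated_code = """
total = 0
for step in range(1000):
    total += step
    print(f"debug: step {step}")
print(f"RESULT: {total}")
"""

summary = run_isolated(generated_code)
print(summary)
```

The thousand debug lines stay in the child process; only the single result line would be appended to the LLM's context.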

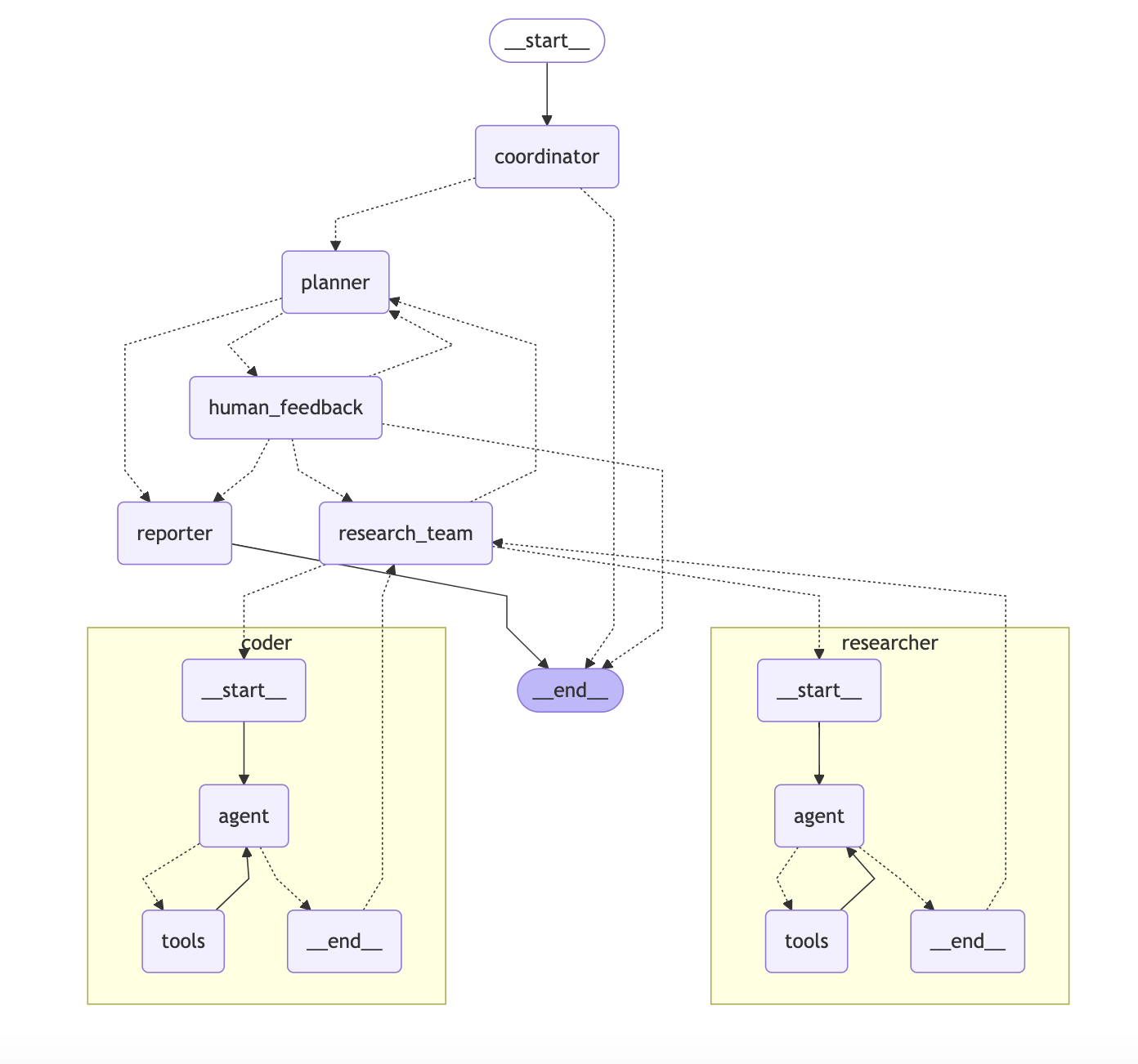

Tools for context engineering: LangGraph and LangSmith

Implementing the strategies described above requires a sufficiently flexible and controllable framework. LangGraph and LangSmith, both part of the LangChain ecosystem, provide strong support for this.

LangGraph is a low-level framework for building controllable agents. It models an agent's workflow as a state graph, a design that closely matches the needs of context engineering:

- State Object. Serves as a natural "scratchpad" (for writes) and as an "isolation" area for context. You can define different fields in the state, exposing some to the LLM while keeping others internal and "selecting" them only when needed.

- Persistence and Memory. Built-in checkpointing and memory modules make it easy to "write" and "select" both short-term and long-term memories.

- Customizable nodes. Each node in the graph is a programmable step. You can implement "compression" logic inside a node, for example by summarizing the results immediately after invoking a tool.

- Multi-agent architecture. LangGraph provides libraries such as supervisor and swarm, which natively support building complex multi-agent systems and achieving context "isolation".
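The state-graph idea can be illustrated without any framework. The sketch below is plain Python and deliberately does not use LangGraph's API; `AgentState`, the node functions, and `run_graph` are hypothetical names. It only mimics the general pattern of nodes that read a shared state and return partial updates to it.

```python
from typing import Callable, TypedDict


class AgentState(TypedDict):
    messages: list[str]     # exposed to the LLM
    scratchpad: list[str]   # internal notes, never sent to the LLM
    tool_output: str


def call_tool(state: AgentState) -> dict:
    # Hypothetical tool that returns a verbose payload.
    return {"tool_output": "row " * 500 + "free slot: Thursday 10:00"}


def compress_tool_output(state: AgentState) -> dict:
    # "Compress": keep only the key finding; "write" a note to the scratchpad.
    raw = state["tool_output"]
    finding = raw[raw.rfind("free slot"):]
    note = f"raw tool output was {len(raw)} chars"
    return {"tool_output": finding, "scratchpad": state["scratchpad"] + [note]}


def build_llm_input(state: AgentState) -> dict:
    # "Select": expose only the messages plus the compressed finding.
    return {"messages": state["messages"] + [f"Tool result: {state['tool_output']}"]}


def run_graph(state: AgentState, nodes: list[Callable[[AgentState], dict]]) -> AgentState:
    """Run the nodes in order, merging each node's partial update into the state."""
    for node in nodes:
        state = {**state, **node(state)}
    return state


final = run_graph(
    {"messages": ["Can we sync tomorrow?"], "scratchpad": [], "tool_output": ""},
    [call_tool, compress_tool_output, build_llm_input],
)
print(final["messages"])
print(final["scratchpad"])
```

The scratchpad field stays internal throughout, which is the same separation between LLM-visible and internal state that the State Object bullet describes.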

LangSmith is an observability platform. It lets you trace each step of an agent's decision-making process as clearly as if you were debugging an ordinary program, and see exactly what information the context window contains for each LLM call. This provides indispensable insight for diagnosing and optimizing context engineering, and its evaluation (Eval) features let you validate whether an optimization strategy actually works.

The move from the "alchemy" of prompt engineering to the "systems science" of context engineering is not only a technical evolution but also the necessary path to building reliable and powerful AI applications. Mastering this discipline, along with tools like LangGraph and LangSmith, is becoming a core competency for AI engineers.