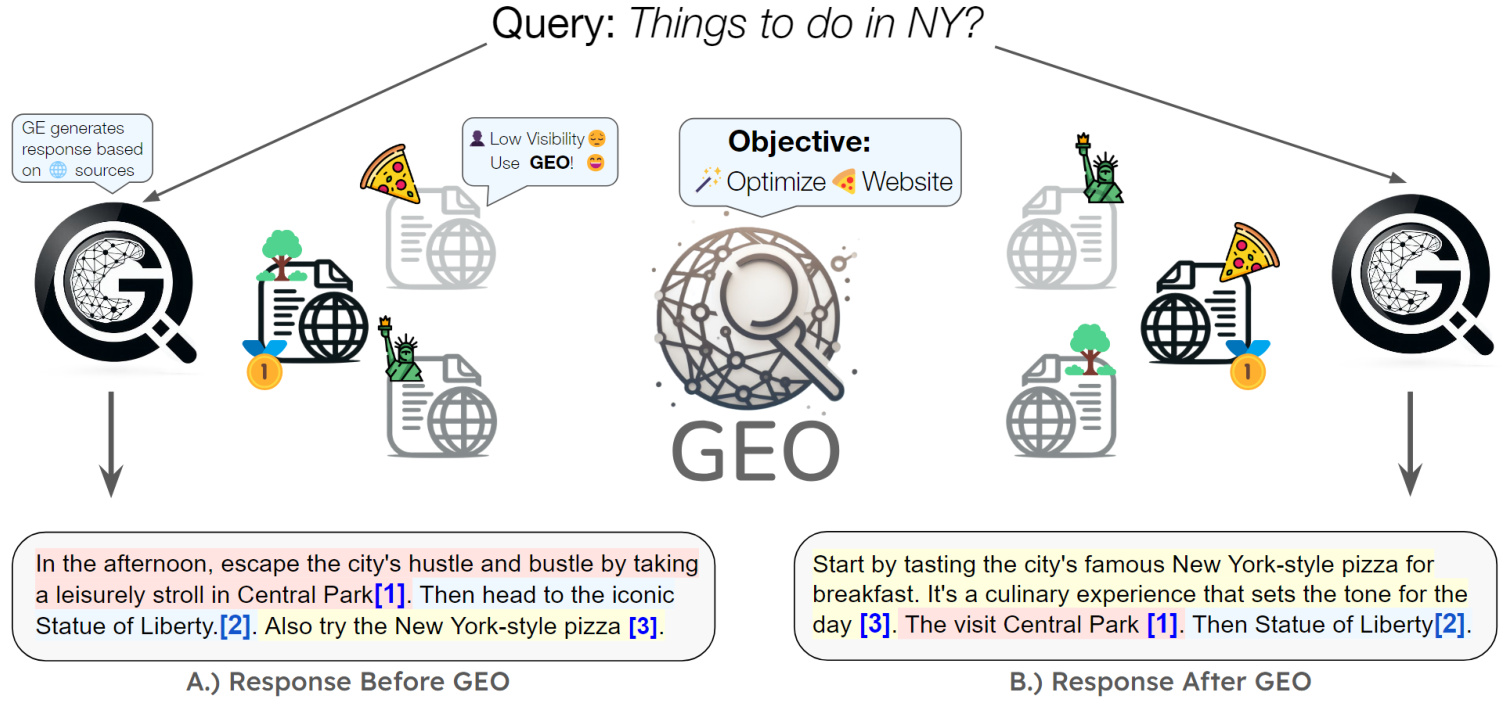

Learn all about Reinforcement Learning (RL) and how to train your own DeepSeek-R1 reasoning model with Unsloth and GRPO. A complete guide from beginner to advanced.

🦥 What you will learn

- What are RL, RLVR, PPO, GRPO, RLHF, and RFT? Is **"Luck is all you need"** for RL?

- What is an environment? An agent? An action? A reward function? A reward?

This article covers everything you need to know about GRPO, Reinforcement Learning (RL), and Reward Functions (from introductory to advanced), as well as some tips and tricks and the basics of GRPO with Unsloth. If you're looking for a step-by-step tutorial on using GRPO, see our guide here.

❓ What is Reinforcement Learning (RL)?

The goal of RL is to:

- Increase the chances of seeing "good" results.

- Reduce the chances of seeing "bad" results.

**That's it!** There are intricacies in what "good" and "bad" mean, how we "increase" or "decrease" their likelihood, and even what "results" means.

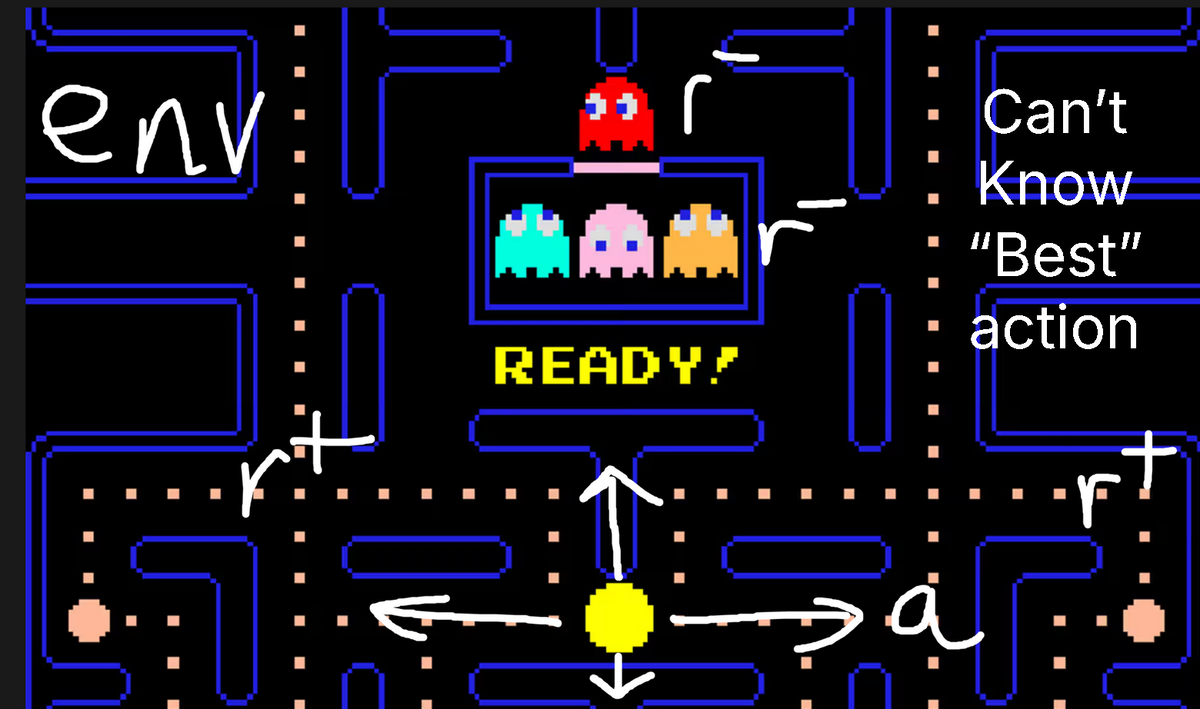

For example, in the Pacman game:

- The **environment** is the game world.

- The **actions** you can take are up, left, right, and down.

- The **reward** is good if you eat a pellet, and bad if you hit one of the squiggly enemies.

- In RL, you can't know the "best action" to take, but you can observe intermediate steps or the final game state (win or lose).

As another example, imagine you are asked: "What is 2 + 2?" (answer: 4). An unaligned language model will spit out 3, 4, C, D, -10, literally anything.

- Better a number than a C or a D, right?

- Better to get three than eight, right?

- Getting 4 is absolutely correct.

We just designed a **reward function**!
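The graded rules above can be written as a tiny reward function. This is a minimal sketch: the function name and the exact scores (-1 for anything that is not a number, a penalty that shrinks as the number gets closer to 4, +1 for exactly 4) are illustrative assumptions, not values taken from this guide.

```python
import math


def reward_2_plus_2(answer: str) -> float:
    """Toy graded reward for "What is 2 + 2?" (hypothetical scores)."""
    try:
        value = float(answer.strip())
    except ValueError:
        return -1.0  # Not a number at all (e.g. "C" or "D"): worst score.
    if not math.isfinite(value):
        return -1.0  # "nan" or "inf" are not useful numbers either.
    if value == 4:
        return 1.0  # Exactly right.
    # Any number beats a letter (score stays above -1), and closer is better.
    distance = abs(value - 4)
    return -distance / (distance + 1)


for answer in ["3", "4", "C", "D", "-10", "8"]:
    print(answer, round(reward_2_plus_2(answer), 3))
```

The shape of the penalty is a design choice: any monotone function of the distance would encode "3 is better than 8", as long as every number still scores above a non-number.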

🏃 From RLHF, PPO to GRPO and RLVR

OpenAI popularized the concept of RLHF (Reinforcement Learning from Human Feedback), where we train an **"agent"** to produce outputs to a question (the **state**) that humans rate as more useful.

For example, the thumbs up and thumbs down in ChatGPT can be used in the RLHF process.

PPO Formula

The clip(..., 1-ε, 1+ε) term forces PPO not to change the policy too much in one update. There is also a KL term, with β set to > 0, that forces the model not to deviate too far from the reference model.
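For reference, a common textbook form of the clipped PPO objective with a KL penalty is given below. It is written down here as an assumption, since the original formula is not reproduced in this text, and the notation in Unsloth's talk may differ. $r_t(\theta)$ is the probability ratio between the current and old policy, $\hat{A}_t$ is the advantage (estimated with the help of the value model), $\epsilon$ is the clip range, $\beta$ is the KL weight, and $\pi_{\text{ref}}$ is the reference model. Training maximizes $J_{\text{PPO}}$.

$$
J_{\text{PPO}}(\theta) = \mathbb{E}_t\Big[\min\big(r_t(\theta)\,\hat{A}_t,\ \operatorname{clip}(r_t(\theta),\,1-\epsilon,\,1+\epsilon)\,\hat{A}_t\big)\Big] - \beta\, D_{\mathrm{KL}}\big(\pi_\theta \,\|\, \pi_{\text{ref}}\big),
\qquad
r_t(\theta) = \frac{\pi_\theta(a_t \mid s_t)}{\pi_{\theta_{\text{old}}}(a_t \mid s_t)}
$$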

To do RLHF, **PPO** (Proximal Policy Optimization) was developed. Here, the **agent** is the language model. In fact, it is composed of 3 systems:

- The generating policy (the model currently being trained)

- The reference policy (the original model)

- The value model (an average reward estimator)

We use a **reward model** to compute the reward for the current environment, and our goal is to **maximize this reward!**

The PPO formula looks quite complicated because it was designed to be stable. See our AI Engineer talk on RL (2025) for a more in-depth derivation of the math behind PPO.

DeepSeek developed **GRPO** (Group Relative Policy Optimization) to train their R1 reasoning models. The key differences from PPO are:

- **The value model is removed** and replaced with statistics from calling the reward model multiple times.

- **The reward model is removed** and replaced with a custom reward function, which enables RLVR.

This means GRPO is extremely efficient. Previously, PPO needed to train multiple models; now, with the reward model and value model removed, we save memory and speed everything up.

**RLVR** (Reinforcement Learning with Verifiable Rewards) lets us reward the model on tasks whose solutions are easy to verify. For example:

- Math equations can be easily verified, e.g. 2+2 = 4.

- Code output can be verified by checking that it executes correctly.

- Designing verifiable reward functions can be tough, so most examples are math or code.

- Use cases for GRPO aren't limited to code or math: its reasoning process can enhance tasks like email automation, database retrieval, law, and medicine, greatly improving accuracy depending on your dataset and reward function. The trick is to define a **rubric**, i.e. a list of smaller verifiable rewards, rather than one final all-consuming reward. OpenAI, for example, popularized this in its Reinforcement Learning Fine-Tuning (RFT) offering.
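To make "verifiable" concrete, here is a minimal sketch of three reward checks: a math checker that compares the last number in a completion with the expected value, a code checker that runs a Python snippet in a subprocess and compares its printed output, and a small rubric that sums several smaller checks. The function names, weights, and the 200-character limit are hypothetical choices for illustration. Note that running model-generated code in a plain subprocess is not a sandbox; a real setup would need proper isolation.

```python
import re
import subprocess
import sys


def math_reward(completion: str, expected: float) -> float:
    """1.0 if the last number in the completion equals the expected value."""
    numbers = re.findall(r"-?\d+(?:\.\d+)?", completion)
    if not numbers:
        return 0.0
    return 1.0 if float(numbers[-1]) == expected else 0.0


def code_reward(source: str, expected_stdout: str, timeout: float = 5.0) -> float:
    """1.0 if the Python snippet runs successfully and prints the expected output."""
    try:
        result = subprocess.run(
            [sys.executable, "-c", source],
            capture_output=True,
            text=True,
            timeout=timeout,
        )
    except subprocess.TimeoutExpired:
        return 0.0  # Infinite loops or very slow code earn nothing.
    if result.returncode != 0:
        return 0.0  # Crashed or raised an exception.
    return 1.0 if result.stdout.strip() == expected_stdout.strip() else 0.0


def rubric_reward(completion: str, expected: float) -> float:
    """A rubric: several small checks summed, instead of one all-or-nothing score."""
    score = 0.0
    score += 0.5 if re.search(r"\d", completion) else 0.0  # Mentions a number.
    score += 0.5 if len(completion) < 200 else 0.0  # Stays concise.
    score += 2.0 * math_reward(completion, expected)  # Actually correct.
    return score


print(math_reward("The answer is 4", 4))
print(code_reward("print(2 + 2)", "4"))
print(rubric_reward("2 + 2 = 4", 4))
```

The rubric gives partial credit, so a completion that is concise and numeric but wrong still scores above one that is neither, which gives GRPO a smoother signal than a single pass/fail check.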

Why Group Relative?

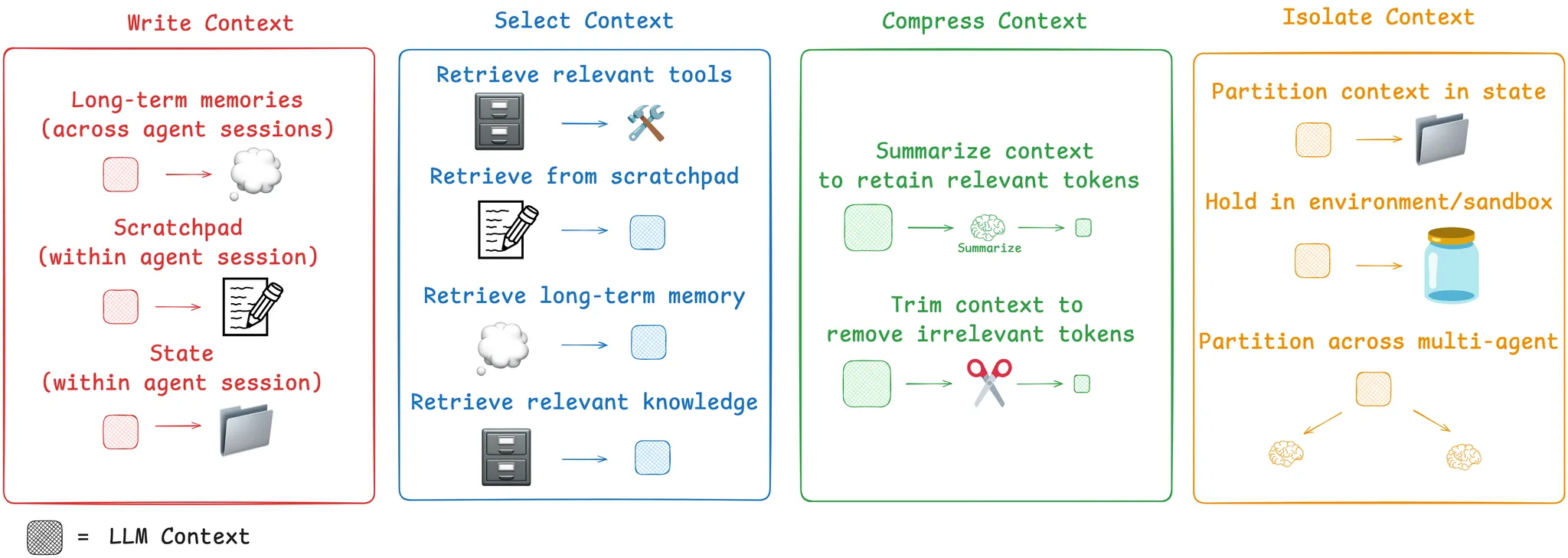

GRPO removes the value model entirely, but we still need to estimate the **"average reward"** given the current state.

The trick is to sample the LLM! We then calculate the average reward from the statistics of the sampling process across multiple different questions.

For example, for the question "What is 2+2?", we sample 4 times. We might get 4, 3, D, C. We then calculate the reward for each of these answers, compute the **average reward** and **standard deviation**, and then **Z-score standardize** them!

This creates the **advantage A**, which we use in place of the value model. This saves a lot of memory!

GRPO advantage calculation
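The z-score step described above can be sketched in a few lines: for a group of $G$ sampled answers with rewards $r_1, \dots, r_G$, each advantage is $A_i = \frac{r_i - \operatorname{mean}(r_1,\dots,r_G)}{\operatorname{std}(r_1,\dots,r_G)}$. This sketch uses the population standard deviation and a small `eps` to avoid dividing by zero; both are assumptions, and implementations differ on these details (some use the sample standard deviation). The rewards assigned to 4, 3, D, C below are hypothetical.

```python
import statistics


def group_advantages(rewards: list[float], eps: float = 1e-4) -> list[float]:
    """Z-score each reward against the mean and std of its own group."""
    mean = statistics.mean(rewards)
    std = statistics.pstdev(rewards)  # Population std of the group.
    return [(r - mean) / (std + eps) for r in rewards]


# Four samples for "What is 2+2?": 4, 3, D, C with hypothetical rewards.
rewards = [1.0, 0.0, -1.0, -1.0]
print([round(a, 2) for a in group_advantages(rewards)])
```

Because the advantages are centred on the group mean, above-average answers get pushed up and below-average ones pushed down, with no separate value model. If every answer in a group gets the same reward, all advantages are 0 and that group provides no learning signal.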

🤞 Luck (or patience) is all you need!

The trick with RL is that you only need two things:

- A question or instruction, e.g. "What is 2+2?" or "Create a Flappy Bird game in Python"

- A reward function and verifier to check whether the output is good or bad.

With just these two, we can essentially **call the language model an infinite number of times** until we get a good answer. For example, for "What is 2+2?", an untrained, bad language model might output:

0, cat, -10, 1928, 3, A, B, 122, 17, 182, 172, A, C, BAHS, %$, #, 9, -192, 12.31 and then suddenly 4.

The reward signal is 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0 and then suddenly it's 1.

So by luck and by chance, RL found the correct answer across many **"rollouts"**. Our goal is to see the good answer 4 more often, and the rest (the bad answers) much less.

So the goal of RL is to be patient: in the limit, if the probability of the correct answer is at least some small nonzero number, it's just a waiting game, and you are 100% certain to encounter the correct answer eventually.
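The "waiting game" argument is the probability of at least one success in $n$ independent tries, $1 - (1 - p)^n$, which approaches 1 as $n$ grows whenever $p > 0$ and stays exactly 0 when $p = 0$. The sketch below treats rollouts as independent draws with a fixed success probability `p`, which is a simplifying assumption: in real RL training, `p` itself changes as the model is updated.

```python
def prob_at_least_one_success(p: float, n: int) -> float:
    """Chance that at least one of n independent rollouts is correct."""
    return 1.0 - (1.0 - p) ** n


# With a 1% chance per rollout, more rollouts make a hit ever more likely.
for n in [1, 10, 100, 1000]:
    print(n, round(prob_at_least_one_success(0.01, n), 4))
```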

That's why I like to call reinforcement learning "Luck is all you need".

Well, a better way to put it would be "patience is all you need" for reinforcement learning.

RL essentially gives us a trick: instead of simply waiting for infinity, we get "bad signals", i.e. bad answers, and we can use them to "steer" the model away from generating bad solutions. This means that even while you wait a long time for a "good" answer to appear, the model is already changing and will try its best not to output bad answers.

In the "What is 2+2?" example:

0, cat, -10, 1928, 3, A, B, 122, 17, 182, 172, A, C, BAHS, %$, #, 9, -192, 12.31 and then suddenly 4.

As we get bad answers, RL nudges the model away from outputting them. Over time, we are carefully "pruning" the model's output distribution, moving it away from bad answers. This means RL is not inefficient: we are not just waiting for infinity, but actively trying to "push" the model as far as possible toward the "correct answer space".

**If the probability is always 0, RL will never work.** This is why people prefer to start RL from an instruction-tuned model, which can already follow instructions partially and reasonably well; this most likely lifts the probability above 0.
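A toy illustration of this "steering": the sketch below treats the model as a softmax over a fixed list of candidate answers (an assumption; a real LLM generates token by token), samples a group of answers, scores each with a 0/1 verifier, and nudges the logits using group-normalized advantages. It is a simplified GRPO-style policy gradient without clipping or a KL term, not Unsloth's implementation; `train_toy_policy` and its hyperparameters are hypothetical. The second call shows the caveat above: if the correct answer can never be sampled, every reward is 0 and nothing is learned.

```python
import math
import random


def softmax(logits: list[float]) -> list[float]:
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def train_toy_policy(answers, correct, steps=200, group_size=8, lr=0.5, seed=0):
    """Toy GRPO-style policy gradient over a fixed list of candidate answers."""
    rng = random.Random(seed)
    logits = [0.0] * len(answers)  # Start from a uniform "model".
    for _ in range(steps):
        probs = softmax(logits)
        group = rng.choices(range(len(answers)), weights=probs, k=group_size)
        rewards = [1.0 if answers[i] == correct else 0.0 for i in group]
        mean = sum(rewards) / group_size
        std = math.sqrt(sum((r - mean) ** 2 for r in rewards) / group_size)
        if std == 0:
            continue  # All rewards equal: zero advantage, nothing to learn.
        for i, r in zip(group, rewards):
            advantage = (r - mean) / std
            # d log softmax(logits)[i] / d logits[j] = onehot(i)[j] - probs[j]
            for j in range(len(logits)):
                grad = (1.0 if j == i else 0.0) - probs[j]
                logits[j] += lr * advantage * grad / group_size
    return softmax(logits)


answers = ["0", "cat", "-10", "3", "A", "C", "9", "12.31", "4"]
after = train_toy_policy(answers, correct="4")
print(f"P('4'): {1 / len(answers):.3f} -> {after[answers.index('4')]:.3f}")

# If "4" can never be sampled, every group gets zero advantage and nothing changes.
never = train_toy_policy(answers[:-1], correct="4")
print(max(never) - min(never))  # 0.0
```

Every group that contains a correct answer raises its logit and lowers the logits of the wrong answers that were sampled, so probability mass is steadily moved away from bad answers rather than waiting for them to disappear on their own.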

🦥 What Unsloth offers to RL

- With just 15GB of VRAM, Unsloth lets you transform any model with up to 17B parameters, such as Llama 3.1 (8B), Phi-4 (14B), Mistral (7B), or Qwen2.5 (7B), into a reasoning model.

- **Minimum requirement:** Just 5GB of VRAM is enough to train your own reasoning model locally (for any model with 1.5B parameters or fewer).

⚡ Tutorial: Train Your Own Reasoning Model with GRPO

GRPO notebooks:

- Qwen3 (4B) - Advanced

- DeepSeek-R1-0528-Qwen3-8B - New

- Llama 3.2 (3B) - Advanced

- Gemma 3 (1B)

- Phi-4 (14B)

- Qwen2.5 (3B)

- Mistral v0.3 (7B)

- Llama 3.1 (8B)

**New feature!** We now support Dr. GRPO and most other recent GRPO techniques. Enable them with the following parameters in `GRPOConfig`:

```python
from trl import GRPOConfig

training_args = GRPOConfig(
    output_dir = "outputs",
    epsilon = 0.2,
    epsilon_high = 0.28,  # one sided
    delta = 1.5,  # two sided
    loss_type = "bnpo",
    # or: loss_type = "grpo",
    # or: loss_type = "dr_grpo",
    mask_truncated_completions = True,
)
```

- If you're not getting any reasoning, make sure you have enough training steps and that your reward function/verifier is working. We provide examples of reward functions here.

- Previous demonstrations showed that you could achieve your own "aha" moment with Qwen2.5 (3B), but it required 2x A100 GPUs (160GB VRAM). Now, with Unsloth, you can achieve the same "aha" moment with a GPU that has just 5GB of VRAM.

- Previously, GRPO only supported full fine-tuning, but we've made it work with QLoRA and LoRA.

- For example, at a **20K context length** with 8 generations per prompt, Unsloth uses only 54.3GB of VRAM for Llama 3.1 (8B), whereas standard implementations (+ Flash Attention 2) require 510.8GB (90% less with Unsloth).

- Note that this isn't fine-tuning DeepSeek's R1 distilled models or tuning with R1's distilled data, which Unsloth already supports. This converts a standard model into a full-fledged reasoning model using GRPO.

In a test example, even though we only trained Phi-4 for 100 steps with GRPO, the results are already clear. The model without GRPO does not produce a thinking token, whereas the model trained with GRPO does, and it also gets the correct answer.

💻 Training with GRPO

For a tutorial on how to transform any open LLM into a reasoning model using Unsloth and GRPO, see here.

How GRPO Trains Models

- For each question-answer pair, the model generates multiple possible responses (e.g., 8 variations).

- Each response is evaluated using reward functions.

- Training steps:

  - If you have 300 rows of data, that's 300 training steps (or 900 steps if you train for 3 epochs).

  - You can increase the number of responses generated per question (e.g., from 8 to 16).

- The model learns by updating its weights at each step.
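The loop described above can be sketched as follows. It assumes one prompt per training step (matching the 300 rows → 300 steps example), and `fake_generate` / `fake_reward` are hypothetical stand-ins for the model and the reward function. The weight update itself is left as a comment rather than implemented, so this only shows the shape of the loop and how steps are counted.

```python
import random
import statistics


def fake_generate(prompt: str, n: int, rng: random.Random) -> list[str]:
    """Hypothetical stand-in for sampling n completions from the model."""
    return [rng.choice(["3", "4", "C", "D"]) for _ in range(n)]


def fake_reward(completion: str, answer: str) -> float:
    """Hypothetical verifier-based reward: 1 if correct, else 0."""
    return 1.0 if completion.strip() == answer else 0.0


def grpo_schedule(dataset, epochs=1, num_generations=8, seed=0):
    """Walk through the GRPO loop: one step per row, num_generations samples each."""
    rng = random.Random(seed)
    steps = 0
    for _ in range(epochs):
        for prompt, answer in dataset:
            completions = fake_generate(prompt, num_generations, rng)
            rewards = [fake_reward(c, answer) for c in completions]
            mean = statistics.mean(rewards)
            std = statistics.pstdev(rewards) or 1.0  # Avoid dividing by zero.
            advantages = [(r - mean) / std for r in rewards]
            # A real trainer would now update the weights using these advantages.
            steps += 1
    return steps


dataset = [("What is 2+2?", "4")] * 300
print(grpo_schedule(dataset, epochs=3))  # 900
```

Raising `num_generations` (e.g. from 8 to 16) makes each step more expensive but gives a better estimate of the group's average reward.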

If you're having trouble getting your model to learn with GRPO, we highly recommend our Advanced GRPO notebook, which has a better reward function; you should see results faster and more often.

Basics/Tips

- Wait for at least **300** steps for the reward to actually increase. To get decent results, you may need to train for at least 12 hours (this is how GRPO works), but keep in mind this isn't mandatory and you can stop at any time.

- For optimal results, use at least **500 rows of data**. You can try with as few as 10 rows, but more data is better.

- Each training run will vary depending on your model, data, reward function/verifier, etc. So although we say 300 steps is the minimum, it may sometimes take 1000 steps or more. It depends on several factors.

- If you're using GRPO with Unsloth locally and run into errors, please also `pip install diffusers`. Please also use the latest version of vLLM.

- It's advised to apply GRPO to a model with at least **1.5B parameters** so it can generate thinking tokens correctly, as smaller models may not.

- For GRPO's **GPU VRAM requirements with QLoRA 4-bit**, the general rule is that the number of model parameters (in billions) roughly equals the VRAM (in GB) you will need. You can use less VRAM, but this is a safe estimate. The longer the context length, the more VRAM you need. LoRA 16-bit will use at least 4x more VRAM.

- **Continuous fine-tuning is possible**: you can just leave GRPO running in the background.

- In the example notebooks, we use the **GSM8K dataset**, currently the most popular choice for R1-style training.

- If you're using a base model, make sure it has a chat template.

- **The more you train with GRPO, the better.** The best part of GRPO is that you don't even need that much data. All you need is a great reward function/verifier, and the more time you spend training, the better your model will get. Expect your reward to increase with the number of steps over time, as shown below:

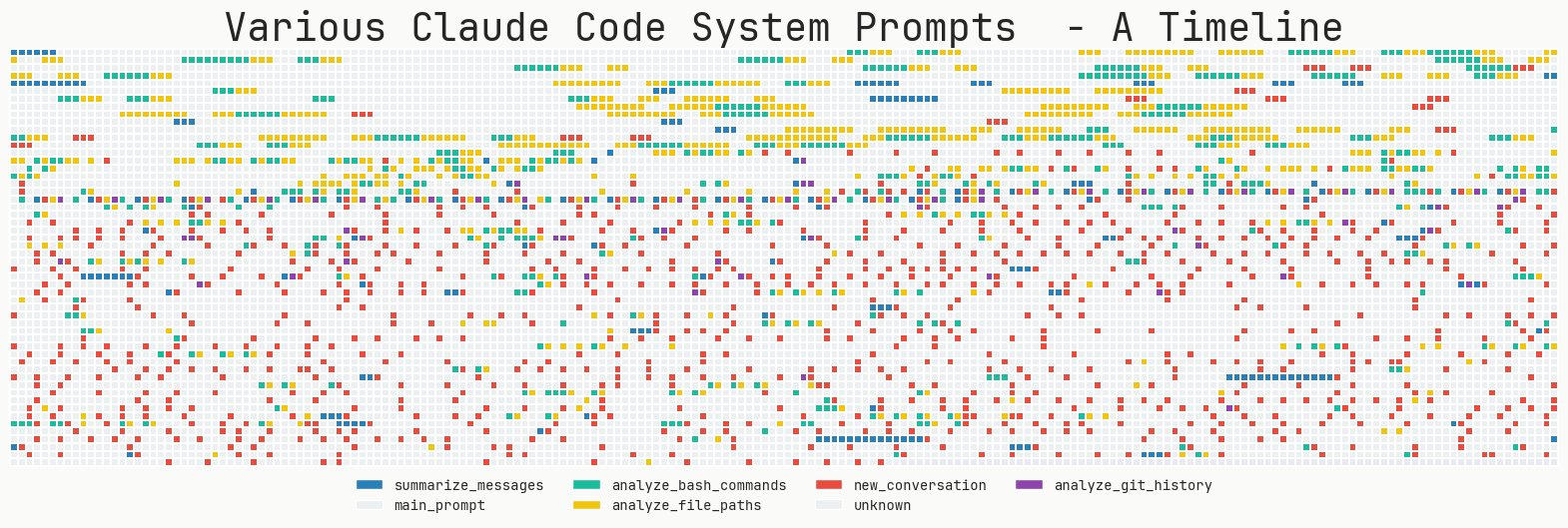
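The VRAM rule of thumb from the tips above, as a tiny helper. It only encodes the two ratios stated in this guide (about 1GB per billion parameters for QLoRA 4-bit, at least 4x that for LoRA 16-bit) and ignores context length, which adds more on top. The function name and mode strings are hypothetical; treat the result as a rough starting estimate, not a measurement.

```python
def estimate_grpo_vram_gb(params_billions: float, mode: str = "qlora4") -> float:
    """Rough VRAM estimate (GB) from this guide's rule of thumb, ignoring context length."""
    if mode == "qlora4":
        return params_billions  # ~1 GB per billion parameters with QLoRA 4-bit.
    if mode == "lora16":
        return 4 * params_billions  # LoRA 16-bit: at least 4x the QLoRA figure.
    raise ValueError(f"unknown mode: {mode!r}")


for size in [1.5, 8, 14]:
    print(f"{size}B: QLoRA 4-bit ~{estimate_grpo_vram_gb(size)} GB, "
          f"LoRA 16-bit >= {estimate_grpo_vram_gb(size, 'lora16')} GB")
```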

GRPO's training loss tracking is now built directly into Unsloth, eliminating the need for external tools like wandb. It contains full logging details for all reward functions, including the total aggregated reward function itself.


📋 Reward Functions / Verifiers

In reinforcement learning, a **reward function** and a **verifier** play distinct roles in evaluating a model's output. In general you can think of them as the same thing; technically they are not, but it doesn't matter much because they are usually used together.

Verifier:

- Determines whether the generated response is correct or incorrect.

- It does not assign a numerical score; it only verifies correctness.

- Example: If a model generates "5" for "2+2", the verifier checks it and labels it "wrong" (since the correct answer is 4).

- A verifier can also execute code (e.g., in Python) to check logic, syntax, and correctness without manual evaluation.

Reward function:

- Converts verification results (or other criteria) into a numerical score.

- Example: If an answer is wrong, it may assign a penalty (-1, -2, etc.), while a correct answer may get a positive score (+1, +2).

- It can also penalize based on criteria beyond correctness, such as excessive length or poor readability.

Key differences:

- A **verifier** checks correctness but does not score.

- A **reward function** assigns a score but doesn't necessarily verify correctness itself.

- A reward function *can* use a verifier, but technically they are not the same thing.
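A minimal sketch of how the two fit together: `verify` only returns True or False, while `reward` uses that verdict and adds a length penalty that has nothing to do with correctness. Both function names, the scores (+2, -1, and -1 for length), and the 100-character limit are hypothetical choices.

```python
def verify(answer: str, expected: str) -> bool:
    """Verifier: only says whether the answer is correct, no score."""
    return answer.strip() == expected.strip()


def reward(answer: str, expected: str, max_chars: int = 100) -> float:
    """Reward function: turns the verifier's verdict plus other criteria into a number."""
    score = 2.0 if verify(answer, expected) else -1.0
    if len(answer) > max_chars:
        score -= 1.0  # Penalize overly long answers, independent of correctness.
    return score


print(verify("5", "4"))
print(reward("5", "4"))
print(reward("4", "4"))
```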

Understanding the reward function

The main goal of GRPO is to maximize rewards and learn how answers are derived, rather than just memorizing and reproducing responses from its training data.

- At each training step, GRPO **adjusts the model weights** to maximize the reward. This process gradually fine-tunes the model.

- Regular fine-tuning (without GRPO) only **maximizes next-word prediction probability** and does not optimize for a reward. GRPO **optimizes for a reward function** rather than just predicting the next word.

- You can **reuse data** across multiple epochs.

- **Default reward functions** can be predefined for a wide range of use cases, or you can ask ChatGPT or a local model to generate them for you.

- There is no single right way to design a reward function or validator - the possibilities are endless. However, they must be well-designed and meaningful, as poorly designed rewards may inadvertently degrade model performance.

🪙 Example of a reward function

You can refer to the examples below. You can feed your generations into an LLM like ChatGPT 4o or Llama 3.1 (8B) and design a reward function and verifier to evaluate them. For example, feed your generations into an LLM of your choice and set a rule: "If the answer sounds too robotic, deduct 3 points." This helps refine outputs based on quality criteria.

Example #1: Simple Arithmetic Tasks

- Question: "2 + 2"

- Answer: "4"

- Reward function 1:

  - If a number is detected → +1

  - If no number is detected → -1

- Reward function 2:

  - If the number matches the correct answer → +3

  - If incorrect → -3

- Total reward: sum of all reward functions
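Example #1 translates directly into code. This is a minimal sketch: it assumes "the number" being checked is the last integer in the completion, and the function names are hypothetical.

```python
import re


def number_detected_reward(completion: str) -> float:
    """Reward function 1: +1 if any number appears, otherwise -1."""
    return 1.0 if re.search(r"-?\d+", completion) else -1.0


def correct_answer_reward(completion: str, answer: str) -> float:
    """Reward function 2: +3 if the last number matches the answer, otherwise -3."""
    numbers = re.findall(r"-?\d+", completion)
    return 3.0 if numbers and numbers[-1] == answer else -3.0


def total_reward(completion: str, answer: str) -> float:
    """Total reward: sum of all reward functions."""
    return number_detected_reward(completion) + correct_answer_reward(completion, answer)


for completion in ["4", "The answer is 5", "C"]:
    print(repr(completion), total_reward(completion, "4"))
```

Splitting the score into two functions means a wrong number (-2 total) still beats no number at all (-4 total), which is exactly the ordering the list above describes.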

Example #2: E-mail Automation Tasks

- Question: inbound e-mail

- Answers: outbound e-mail

- Reward function:

- If the answer contains the required keywords → +1

- If the answer matches the desired response exactly → +1

- If the response is too long → -1

- If the recipient's name is included → +1

- If signature block exists (phone, e-mail, address) → +1
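A sketch of Example #2 as a single reward function. The `EmailTarget` fields, the 150-word limit, and the signature check are hypothetical: in particular, the sketch assumes a signature block counts as present when both a phone-like number and an e-mail address appear, and it does not try to detect a postal address.

```python
import re
from dataclasses import dataclass


@dataclass
class EmailTarget:
    """Hypothetical per-example targets for one inbound e-mail."""
    required_keywords: list[str]
    desired_response: str
    recipient_name: str
    max_words: int = 150


def email_reward(response: str, target: EmailTarget) -> float:
    score = 0.0
    text = response.lower()
    if all(k.lower() in text for k in target.required_keywords):
        score += 1.0  # Contains the required keywords.
    if response.strip() == target.desired_response.strip():
        score += 1.0  # Matches the desired response exactly.
    if len(response.split()) > target.max_words:
        score -= 1.0  # Response is too long.
    if target.recipient_name in response:
        score += 1.0  # Addresses the recipient by name.
    # Crude signature check (assumption): a phone-like number and an e-mail address.
    has_phone = re.search(r"\+?\d[\d\s().-]{6,}\d", response) is not None
    has_email = re.search(r"[\w.+-]+@[\w-]+\.[\w.]+", response) is not None
    if has_phone and has_email:
        score += 1.0
    return score


target = EmailTarget(["refund", "order"], "Hi Sam, your refund is on its way.", "Sam")
reply = (
    "Hi Sam,\nYour refund for order 1234 is on its way.\n\n"
    "Best,\nAlex\n+1 555 123 4567\nalex@example.com"
)
print(email_reward(reply, target))  # 3.0
```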

Unsloth proximity-based reward function

If you've looked at our **Advanced GRPO Colab notebook**, you'll notice we created a **custom proximity-based reward function** built completely from scratch, designed to reward answers that are closer to the correct one. This flexible function can be applied to a wide range of tasks.

- In our example, we enable reasoning in Qwen3 (Base) and guide it toward specific tasks.

- Apply a pre-finetuning strategy to avoid GRPO's default tendency to learn only the formatting.

- Use regex-based matching to improve evaluation accuracy.

- Create custom GRPO templates beyond generic prompts like `think`, e.g. `<start_working_out></end_working_out>`.

- Apply proximity-based scoring: the model gets more reward for closer answers (e.g., predicting 9 instead of 10 is better than predicting 3), while outliers are penalized.
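The idea of proximity-based scoring can be sketched with tiered relative-error bands. This is not the notebook's function: the extraction rule (take the last number in the completion), the bands (10% and 20%), and the scores are all hypothetical choices that simply reproduce the behaviour described above, where 9 beats 3 when the answer is 10.

```python
import re
from typing import Optional


def extract_last_number(text: str) -> Optional[float]:
    """Pull the last number out of a completion (hypothetical extraction rule)."""
    matches = re.findall(r"-?\d+(?:\.\d+)?", text)
    return float(matches[-1]) if matches else None


def proximity_reward(completion: str, true_answer: float) -> float:
    """Tiered reward: exact > close > far; unparseable answers are penalized."""
    guess = extract_last_number(completion)
    if guess is None:
        return -2.0  # No number at all.
    if guess == true_answer:
        return 3.0
    if true_answer == 0:
        return -1.5  # Relative error is undefined; treat any miss as far off.
    relative_error = abs(guess - true_answer) / abs(true_answer)
    if relative_error <= 0.1:
        return 1.5
    if relative_error <= 0.2:
        return 0.5
    return -1.5  # Outliers are penalized.


for guess in ["10", "9", "8.5", "3", "no idea"]:
    print(guess, proximity_reward(guess, 10))
```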

GSM8K reward function

In our other examples, we use the existing GSM8K reward functions by @willccbb, which are popular and have proven quite effective:

- `correctness_reward_func` - Rewards answers that exactly match the label.

- `int_reward_func` - Encourages integer-only answers.

- `soft_format_reward_func` - Checks the structure but allows minor newline mismatches.

- `strict_format_reward_func` - Ensures the response structure matches the prompt, including newlines.

- `xmlcount_reward_func` - Ensures exactly one of each XML tag in the response.
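For illustration, here are simplified, hypothetical re-implementations in the spirit of these five functions. They are not @willccbb's code: they take plain strings instead of chat-formatted completions, assume a `<reasoning>`/`<answer>` tag layout, and use made-up reward weights. Each function returns one score per completion; a trainer would sum them.

```python
import re

# Assumed output layout (hypothetical):
# <reasoning>\n...\n</reasoning>\n<answer>\n...\n</answer>


def extract_answer(text: str) -> str:
    """Return the text inside <answer>...</answer>, or "" if missing."""
    match = re.search(r"<answer>(.*?)</answer>", text, re.DOTALL)
    return match.group(1).strip() if match else ""


def correctness_reward(completions: list[str], answers: list[str]) -> list[float]:
    """Reward answers that exactly match the label."""
    return [2.0 if extract_answer(c) == a else 0.0 for c, a in zip(completions, answers)]


def int_reward(completions: list[str]) -> list[float]:
    """Reward answers that are plain non-negative integers."""
    return [0.5 if extract_answer(c).isdigit() else 0.0 for c in completions]


def strict_format_reward(completions: list[str]) -> list[float]:
    """Require the exact layout, including line breaks."""
    pattern = r"^<reasoning>\n.*?\n</reasoning>\n<answer>\n.*?\n</answer>\n?$"
    return [0.5 if re.match(pattern, c, re.DOTALL) else 0.0 for c in completions]


def soft_format_reward(completions: list[str]) -> list[float]:
    """Check the tag structure but tolerate different whitespace and line breaks."""
    pattern = r"<reasoning>.*?</reasoning>\s*<answer>.*?</answer>"
    return [0.5 if re.search(pattern, c, re.DOTALL) else 0.0 for c in completions]


def xmlcount_reward(completions: list[str]) -> list[float]:
    """Give partial credit for each tag that appears exactly once."""
    tags = ["<reasoning>", "</reasoning>", "<answer>", "</answer>"]
    return [0.125 * sum(c.count(t) == 1 for t in tags) for c in completions]


good = "<reasoning>\n2 + 2 is 4\n</reasoning>\n<answer>\n4\n</answer>\n"
print(correctness_reward([good, "4"], ["4", "4"]))  # [2.0, 0.0]
```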

🧮 Using vLLM

You can now use vLLM directly in your fine-tuning stack, which allows for much higher throughput and lets you fine-tune and run inference on the model at the same time! On a 1x A100 40GB, expect ~4000 tokens/s with Unsloth's dynamic 4-bit quant of Llama 3.2 3B Instruct. On a 16GB Tesla T4 (free Colab GPU), you can get 300 tokens/s.

We also magically removed the double memory usage that occurs when loading vLLM and Unsloth together, saving around 5GB for Llama 3.1 8B and 3GB for Llama 3.2 3B. Unsloth could already fine-tune Llama 3.3 70B Instruct on a single 48GB GPU, with the Llama 3.3 70B weights taking up 40GB of VRAM. Without removing the double memory usage, loading Unsloth and vLLM together would require >= 80GB of VRAM.

But with Unsloth, you can still fine-tune and get the benefits of fast inference in one package, in under 48GB of VRAM! To use fast inference, first install vLLM, then instantiate Unsloth with `fast_inference`:

```bash
pip install unsloth vllm
```

```python
from unsloth import FastLanguageModel

model, tokenizer = FastLanguageModel.from_pretrained(
    model_name = "unsloth/Llama-3.2-3B-Instruct",
    fast_inference = True,
)
model.fast_generate(["Hello!"])
```

✅ GRPO Requirements Guide

When you use Unsloth for GRPO, we smartly reduce VRAM usage by over 90% compared to standard implementations with Flash Attention 2, using a variety of tricks! For example, at a 20K context length with 8 generations per prompt, Unsloth uses only 54.3GB of VRAM for Llama 3.1 8B, whereas standard implementations require 510.8GB (90% less with Unsloth).

- For GRPO's **GPU VRAM requirements with QLoRA 4-bit**, the general rule is that the number of model parameters (in billions) roughly equals the VRAM (in GB) you will need. You can use less VRAM, but this is a safe estimate. The longer the context length, the more VRAM you need. LoRA 16-bit will use at least 4x more VRAM.

- Our new memory-efficient linear kernels for GRPO cut memory usage by 8x or more. This saves 68.5GB of memory, while actually being faster with the help of `torch.compile`!

- We leverage the smart Unsloth gradient checkpointing algorithm we released a while ago. It asynchronously offloads intermediate activations to system RAM while being only 1% slower. This saves 52GB of memory.

- Unlike the implementations in other packages, Unsloth also uses the same GPU / CUDA memory space as the underlying inference engine (vLLM). This saves 16GB of memory.

| Metric | Unsloth | Standard + FA2 |
|---|---|---|
| Training memory cost (GB) | 42 | 414 |
| GRPO memory cost (GB) | 9.8 | 78.3 |
| Inference cost (GB) | 0 | 16 |
| Inference KV cache at 20K context (GB) | 2.5 | 2.5 |
| Total memory usage (GB) | 54.33 (90% less) | 510.8 |

In a typical standard GRPO implementation, you need to create 2 logit tensors of size (8, 20K) over the vocabulary to calculate the GRPO loss. This takes 2 * 2 bytes * 8 (number of generations) * 20K (context length) * 128256 (vocabulary size) = 78.3GB.

Unsloth cuts memory usage by 8x for long-context GRPO, so for a 20K context length we only need an extra 9.8GB of VRAM!

We also need to keep the KV cache in 16-bit. Llama 3.1 8B has 32 layers, and both K and V have size 1024. So the memory usage for a 20K context length = 2 * 2 bytes * 32 layers * 20K context length * 1024 = 2.5GB per batch. We would set vLLM's batch size to 8, but we leave it at 1 in our calculations to save VRAM; otherwise you would need 20GB for the KV cache.
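The memory arithmetic above can be checked directly. This sketch assumes "20K" means 20,480 tokens and that "GB" here means binary gigabytes (2^30 bytes), which is what makes the figures come out to 78.3 and 2.5; the vocabulary size, layer count, and K/V size are the ones stated in the text.

```python
GiB = 1024**3  # The guide's "GB" figures work out as binary gigabytes.

num_generations = 8
context_length = 20 * 1024  # "20K" taken as 20,480 tokens (assumption).
vocab_size = 128_256  # Llama 3.1 vocabulary size.
bytes_per_value = 2  # 16-bit floats.

# Two logit tensors of shape (num_generations, context_length, vocab_size).
logits_bytes = 2 * bytes_per_value * num_generations * context_length * vocab_size
print(f"GRPO logits: {logits_bytes / GiB:.1f} GB")  # 78.3
print(f"With an 8x reduction: {logits_bytes / GiB / 8:.1f} GB")  # 9.8

# KV cache: K and V, 16-bit, 32 layers, 20K tokens, size 1024 each.
num_layers, kv_size = 32, 1024
kv_bytes = 2 * bytes_per_value * num_layers * context_length * kv_size
print(f"KV cache (batch 1): {kv_bytes / GiB:.1f} GB")  # 2.5
print(f"KV cache (batch 8): {8 * kv_bytes / GiB:.1f} GB")  # 20.0
```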