When building AI systems such as RAG pipelines or AI agents, retrieval quality sets the upper limit on what the system can achieve. Developers typically rely on two dominant retrieval techniques: keyword search and semantic search.

- Keyword search (e.g. BM25). It is fast and good at exact matching, but recall drops as soon as the wording of the user's question changes.

- Semantic search. It captures the deeper meaning of text through vector embeddings, which lets it handle conceptual queries.

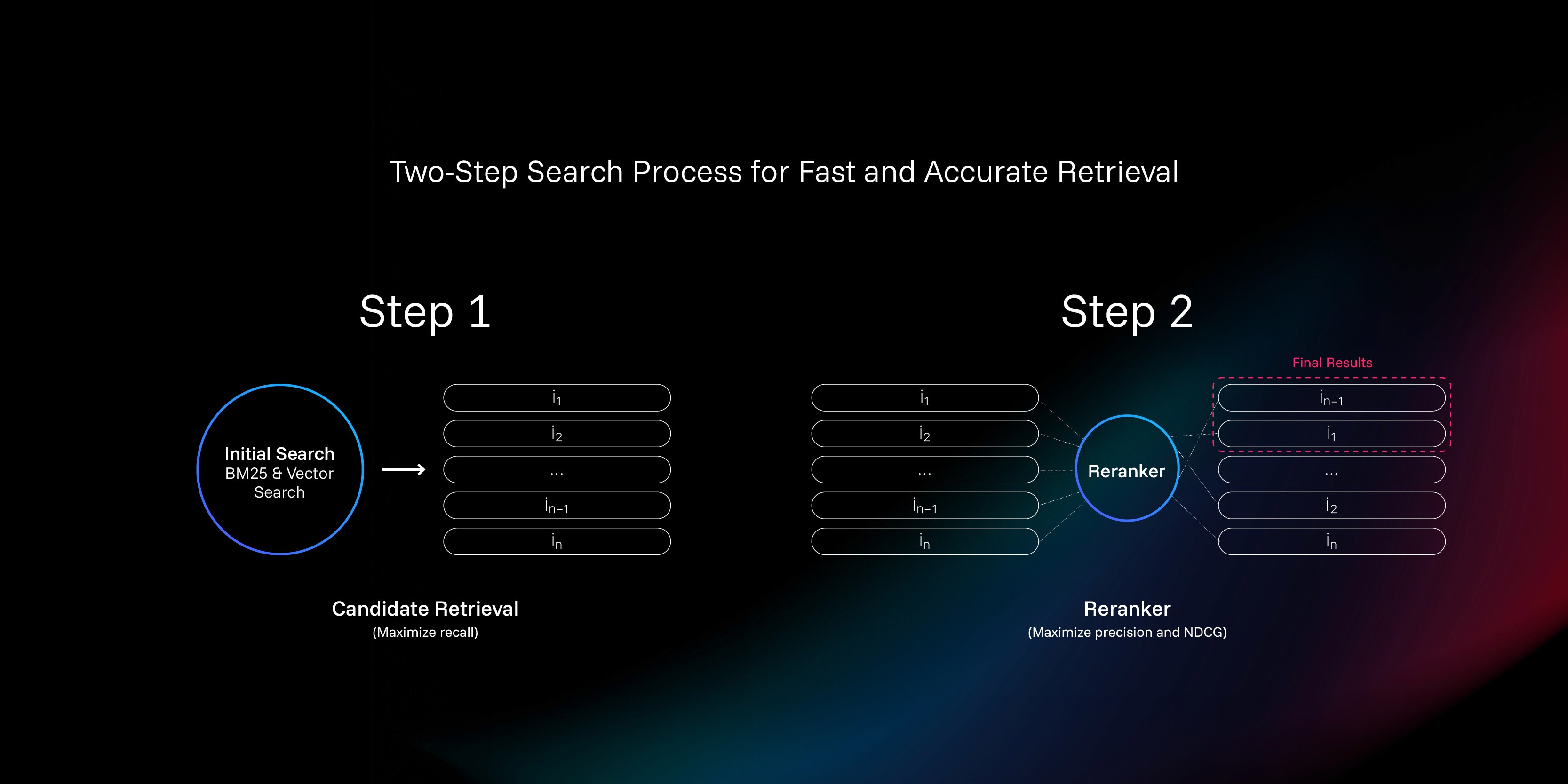

A common practice is to combine the two to maximize recall. However, high recall is not the same as high precision. Even if the correct answer is somewhere among a large number of recalled documents, a document ranked 67th will almost never be seen by a large language model or a user. The reranker was developed to solve this "needle in a haystack" problem.
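To make the gap between recall and ranking concrete, here is a minimal sketch. The document IDs and the `recall_at_k` helper are illustrative assumptions, not part of the original article; the only detail taken from the text is a correct answer sitting at rank 67.

```python
from typing import Sequence, Set


def recall_at_k(ranked_ids: Sequence[str], relevant_ids: Set[str], k: int) -> float:
    """Fraction of relevant documents that appear in the top k results."""
    if not relevant_ids:
        return 0.0
    hits = sum(1 for doc_id in ranked_ids[:k] if doc_id in relevant_ids)
    return hits / len(relevant_ids)


# Hypothetical result list of 100 documents; the only correct answer is at rank 67.
ranked = [f"doc-{rank}" for rank in range(1, 101)]
relevant = {"doc-67"}

recall_top_100 = recall_at_k(ranked, relevant, k=100)  # the answer was retrieved
recall_top_10 = recall_at_k(ranked, relevant, k=10)    # but it is not in the top 10
```

Recall over the full candidate set is perfect here, yet the answer is missing from the handful of results that would actually be passed to an LLM.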

What is a reranker?

You can think of a reranker as a "reviewer" in the information retrieval process.

Initial keyword or semantic search works like an open audition: it quickly sifts through a huge volume of documents to find a few hundred relevant candidates. This first pass is relatively crude, though, because documents are encoded independently of the query. The reranker, acting as the reviewer, takes the user's query together with the full text of each candidate document, compares them closely, scores them, and produces a ranking that is more reliable and closer to the user's real intent. Because it sees the query and the document at the same time, it understands context far better than the first-pass stage.
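The two-stage flow described above can be sketched as follows. This is only an illustration: `toy_overlap_score` is a hypothetical stand-in for a trained reranker (a real one would be a neural model that reads the query and document together), and all names here are assumptions.

```python
from typing import Callable, List, Tuple


def rerank(
    query: str,
    candidates: List[str],
    score_fn: Callable[[str, str], float],
    top_k: int = 3,
) -> List[Tuple[str, float]]:
    """Score every (query, candidate) pair jointly and return the top_k by score."""
    scored = [(doc, score_fn(query, doc)) for doc in candidates]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


def toy_overlap_score(query: str, doc: str) -> float:
    """Hypothetical scorer: fraction of query tokens that also occur in the document."""
    q_tokens = set(query.lower().split())
    d_tokens = set(doc.lower().split())
    return len(q_tokens & d_tokens) / max(len(q_tokens), 1)


# Candidates as they might come back from a first-pass retriever (made-up texts).
candidates = [
    "gravitational waves observed",
    "the nobel prize in physics 2017",
    "cooking recipes",
]
top = rerank("nobel prize physics", candidates, toy_overlap_score, top_k=2)
```

The key design point is that `score_fn` receives the query and the document at once, unlike the first-pass retriever, which encodes documents without knowing the query.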

This article introduces a reranker training method that drops traditional score annotations in favor of the ELO rating system widely used in chess and esports.

The heart of the matter: unreliability of scoring

The goal of training a reranker is to build a function f(q, d) that produces an accurate relevance score s for any query q and document d. In theory, given a large amount of (q, d, s) data, a neural network can be trained with supervised learning.

The problem is that this score s is extremely difficult to obtain.

The dilemma of existing approaches: binary labeling and the "false negative" catastrophe

The current mainstream approach is to use manually labeled pairs of "positive" (relevant) and "negative" (irrelevant) data. Positive examples are easy to find, but the construction of negative examples is a big problem.

One approach is to sample documents at random from the whole corpus and assume they are probably not relevant. But this is like asking a boxing champion to fight an ordinary person: the trained model cannot handle the subtle cases that "seem somewhat related but are actually useless".

Another approach is to use BM25 or vector search results as a source of negative examples. But this leads to the "false negative" disaster: how do you know that a document labeled as a negative example is really not relevant? The answer is that you don't. In many cases, a document labeled as a negative example is actually more relevant than the so-called positive example.

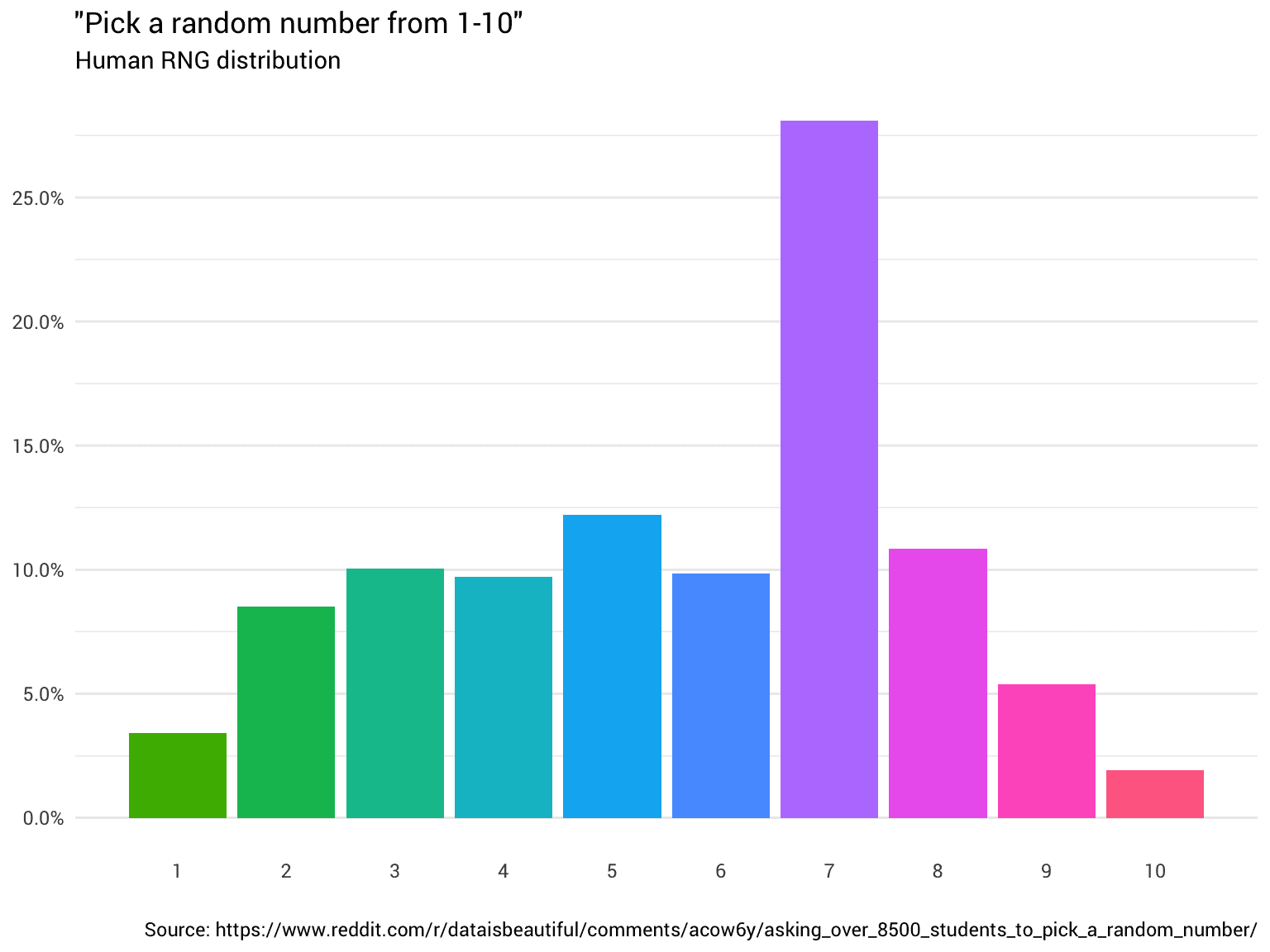

The ambiguity of this scoring system is the fundamental problem. The human brain is inherently bad at giving things absolute scores.

Query. "Who won the 2017 Nobel Prize in Physics?"

Document. "Gravitational waves were first observed by LIGO in 2015."

What score should this document get? Some may think the year is wrong and score it low, but physics enthusiasts know that the 2017 Nobel Prize went to the very people who detected gravitational waves, and would therefore score it high. This large subjective difference makes absolute scoring very noisy.

The solution: moving from "rating" to "comparing"

If absolute ratings are unreliable, can we just compare? The human brain, while not good at absolute assessments, is extremely good at relative judgments.

Query. "Who won the 2017 Nobel Prize in Physics?"

Document 1. The Nobel Prize was awarded to those who discovered gravitational waves in 2015.

Document 2. Gravitational waves were first observed in September 2015 by the LIGO gravitational wave detector.

Almost everyone would agree that document 1 is more relevant than document 2. Pairwise comparisons therefore give us labeled data with a very high signal-to-noise ratio. This is exactly the core idea of the ELO rating system: it never asks "how strong are you", only "which of you two is stronger". With a large number of head-to-head results, we can compute a reasonably accurate ranking for each "player" (document).

Now, the problem translates into how to take a large number of pairwise comparison results and convert them into a usable vector of absolute scores.

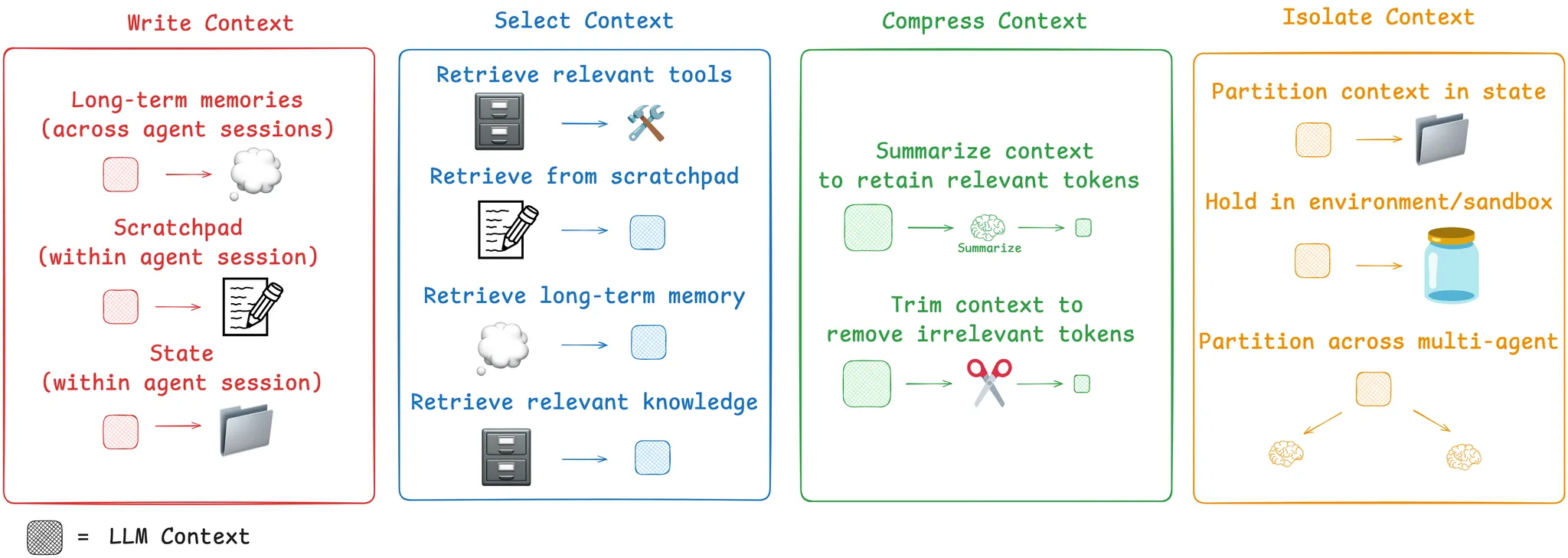

Overview of the training process

Based on this, an innovative training pipeline was designed:

- Triplet sampling and labeling. For each query, first retrieve 100 candidate documents. Randomly sample triplets (q, d_i, d_j) and have an ensemble of large language models (LLMs) judge which of d_i and d_j is more relevant.

- Training a pairwise comparison model. Use the LLM-labeled data to train a lightweight pairwise reranker that can efficiently predict which of any two documents is more relevant.

- Computing ELO scores. For each query's candidate documents, run multiple rounds of "simulated matches" with the model trained in the previous step, then use the ELO algorithm to compute a rating score for each document.

- Training the final model. Use the ELO scores as the "ground truth" to train a standard pointwise reranker that predicts a document's relevance score directly.

- Reinforcement learning fine-tuning. After supervised learning, reinforcement learning lets the model further refine its ranking strategy through trial and error to improve final performance.

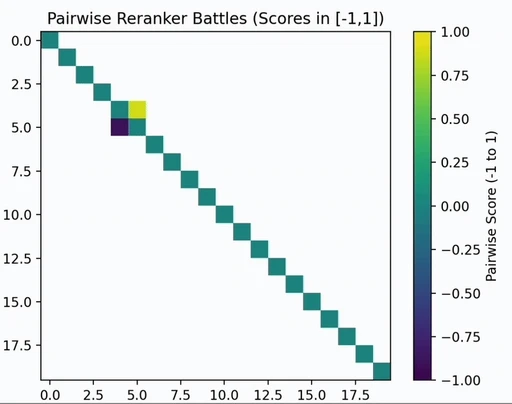

Core technology: pairwise comparisons and ELO ratings

Training pairwise comparators

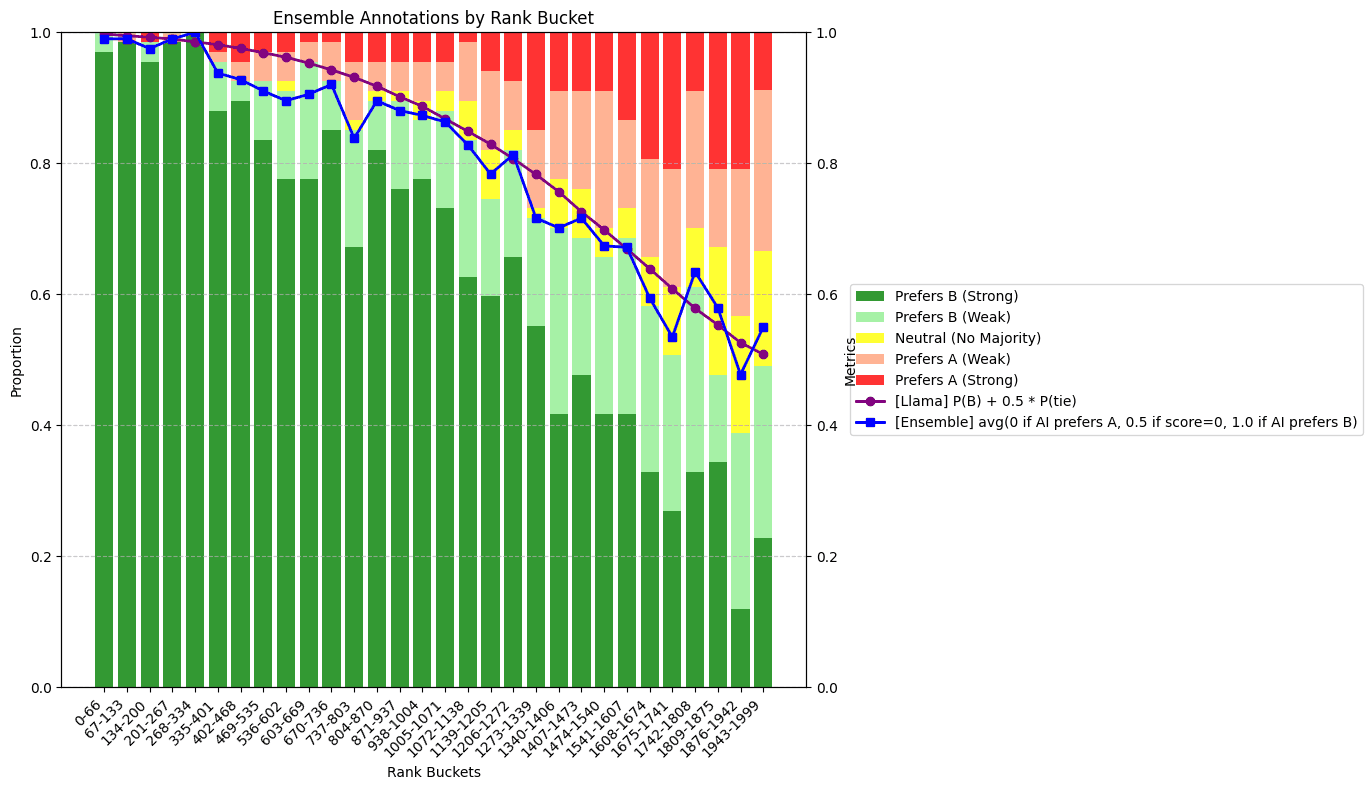

First, the research team used an ensemble of three LLMs to judge a large number of document pairs (d_i, d_j), producing a preference score in [0, 1] for each pair. This process is expensive, so the goal is not to use the ensemble directly at inference time but to generate high-quality training data.

This data was then used to fine-tune a lightweight open-source model to train an efficient "pairwise comparator".
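The labeling step can be sketched roughly as below. The article does not say how the three LLM judgments are combined, so averaging is an assumption here, and the `Judge` callables are hypothetical placeholders for real LLM calls.

```python
import random
from typing import Callable, List, Sequence, Tuple

# Hypothetical judge signature: (query, doc_i, doc_j) -> preference for doc_i in [0, 1].
Judge = Callable[[str, str, str], float]


def sample_pairs(n_docs: int, n_pairs: int, seed: int = 0) -> List[Tuple[int, int]]:
    """Randomly sample index pairs (i, j) with i != j from n_docs candidates."""
    rng = random.Random(seed)
    pairs = []
    for _ in range(n_pairs):
        i, j = rng.sample(range(n_docs), 2)  # two distinct indices
        pairs.append((i, j))
    return pairs


def ensemble_preference(query: str, doc_i: str, doc_j: str, judges: Sequence[Judge]) -> float:
    """Average the judges' preferences (assumed combination rule, not from the article)."""
    votes = [judge(query, doc_i, doc_j) for judge in judges]
    return sum(votes) / len(votes)


# Stub judges standing in for three LLMs: two prefer doc_i, one prefers doc_j.
stub_judges: List[Judge] = [
    lambda q, a, b: 1.0,
    lambda q, a, b: 1.0,
    lambda q, a, b: 0.0,
]
label = ensemble_preference("query", "doc i", "doc j", stub_judges)
```

Each resulting `(query, doc_i, doc_j, label)` record would then serve as one training example for the lightweight pairwise comparator.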

Getting an ELO Rating

The ELO model predicts the probability that player i beats player j from the difference between their rating scores, e_i - e_j. The prediction formula is:

$$p_{ij} = \frac{p_i}{p_i + p_j}$$

where $p_i = 10^{e_i / 400}$. This formula ties win probabilities to rating scores.
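As a quick numerical check of the prediction formula, the sketch below computes the win probability in two algebraically equivalent ways. The function names are illustrative.

```python
def elo_win_probability(e_i: float, e_j: float) -> float:
    """P(i beats j) = p_i / (p_i + p_j) with p = 10 ** (e / 400)."""
    p_i = 10 ** (e_i / 400)
    p_j = 10 ** (e_j / 400)
    return p_i / (p_i + p_j)


def elo_win_probability_from_gap(e_i: float, e_j: float) -> float:
    """Same quantity written in terms of the rating difference only."""
    return 1.0 / (1.0 + 10 ** ((e_j - e_i) / 400))


equal_players = elo_win_probability(1500, 1500)   # 0.5
gap_of_400 = elo_win_probability(1900, 1500)      # 10 / 11, about 0.909
```

Only the difference e_i - e_j matters, so adding the same constant to every rating leaves all predictions unchanged.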

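Given a large number of pairwise match results (generated by the comparator from the previous step), maximum likelihood estimation can be used to fit the ELO scores e_i, e_j, ... that best explain those results. The loss function is:

$$\mathcal{L} = -\sum_{i,j} w_{ij} \log\frac{p_i}{p_i + p_j} = \sum_{i,j} w_{ij} \log\left(1 + e^{e_j - e_i}\right)$$

Here $w_{ij}$ weights the outcome in which document i beats document j. The second form is written in natural-log units, that is, with $p_i = e^{e_i}$; under the base-10, 400-point convention above, the exponent is scaled by $\ln(10)/400$.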
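A minimal sketch of fitting ratings by minimizing this loss with plain gradient descent, in natural-log units (win probability is a sigmoid of the rating difference). The optimizer, step size, zero-mean centering, and the example data are all assumptions; the article does not describe how the fit is actually performed.

```python
import math
from typing import Dict, List, Tuple


def fit_elo(
    wins: Dict[Tuple[int, int], float],
    n_players: int,
    steps: int = 2000,
    lr: float = 0.1,
) -> List[float]:
    """Minimize sum over (i, j) of w_ij * log(1 + exp(e_j - e_i)) by gradient descent.

    wins[(i, j)] is the weight w_ij of the outcome "i beat j".
    """
    e = [0.0] * n_players
    for _ in range(steps):
        grad = [0.0] * n_players
        for (i, j), w in wins.items():
            # Predicted probability of the opposite outcome (j beats i).
            p_j_beats_i = 1.0 / (1.0 + math.exp(e[i] - e[j]))
            grad[i] -= w * p_j_beats_i
            grad[j] += w * p_j_beats_i
        e = [rating - lr * g for rating, g in zip(e, grad)]
        # Ratings are only defined up to a constant shift, so keep them zero-mean.
        mean = sum(e) / n_players
        e = [rating - mean for rating in e]
    return e


# Made-up results: 0 usually beats 1, 1 usually beats 2, 0 usually beats 2.
wins = {(0, 1): 3.0, (1, 0): 1.0, (1, 2): 3.0, (2, 1): 1.0, (0, 2): 3.0, (2, 0): 1.0}
ratings = fit_elo(wins, n_players=3)
```

Note that if some document wins every comparison, the maximum likelihood rating is unbounded, so a practical implementation would likely need regularization or a step limit.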

In practice, running all n² comparisons over the 100 documents for each query is unrealistic. The study found that sampling only O(n) comparisons (e.g., by constructing a few randomized tournament cycles) is enough to obtain ELO scores nearly identical to those from the full set of comparisons.
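One way to read "a few randomized tournament cycles" is sketched below: shuffle the documents, then pair each one with its successor, wrapping around at the end. This interpretation and the function name are assumptions; the article does not give the exact sampling scheme.

```python
import random
from typing import List, Tuple


def sample_cycle_pairs(n_docs: int, n_cycles: int, seed: int = 0) -> List[Tuple[int, int]]:
    """Build n_cycles random cycles over the documents and return their edges.

    Each cycle contributes n_docs comparisons, so the total is n_cycles * n_docs,
    which is O(n) for a fixed number of cycles.
    """
    rng = random.Random(seed)
    pairs: List[Tuple[int, int]] = []
    for _ in range(n_cycles):
        order = list(range(n_docs))
        rng.shuffle(order)
        for pos in range(n_docs):
            pairs.append((order[pos], order[(pos + 1) % n_docs]))
    return pairs


pairs = sample_cycle_pairs(n_docs=100, n_cycles=4)
n_sampled = len(pairs)          # 400 comparisons
n_all_pairs = 100 * 99 // 2     # 4950 unordered pairs in the full comparison
```

Because every cycle visits every document, each document takes part in exactly two comparisons per cycle and the comparison graph stays connected, which is what lets all ratings be placed on one scale.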

Cross-query bias adjustment

The ELO computation above is done within a single query, which creates a new problem:

- For a query with no good answer, the least bad document still gets a high relative ELO score (top of a weak class).

- For a query with many good answers, a fairly good document may get a low relative ELO score (bottom of an honors class).

This can seriously mislead the training of the final model. To solve this problem, a "cross-query bias" b is introduced to calibrate the score baseline across different queries.

The core idea is to have the model compare not only "two answers to the same question" but also "two different questions, each with its own answer", i.e. to judge which of (q_1, d_1) and (q_2, d_2) is the more relevant pairing. This "apples to oranges" comparison is much noisier, but it provides the key information needed to calibrate the difficulty of different queries. A more complex ELO model can then compute the required bias b for each query; the formula is roughly as follows:

P(P₁ > P₂) = (p₁ + b₁) / ((p₁ + b₁) + (p₂ + b₂))

Training a single-point model

The steps above yield a function f(q, d) = elo(q, d) + b(q) that produces high-quality, meaningful absolute scores.
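The effect of the per-query bias can be illustrated with a small sketch of f(q, d) = elo(q, d) + b(q). All numbers and names below are made up for illustration; they are not values from the article.

```python
from typing import Dict, Tuple


def absolute_scores(
    elo_by_query: Dict[str, Dict[str, float]],
    bias_by_query: Dict[str, float],
) -> Dict[Tuple[str, str], float]:
    """Combine within-query ELO scores with a per-query bias: f(q, d) = elo(q, d) + b(q)."""
    return {
        (query, doc): elo + bias_by_query[query]
        for query, docs in elo_by_query.items()
        for doc, elo in docs.items()
    }


# Two hypothetical queries whose within-query ELO scores look identical.
elo_by_query = {
    "query with no good answer": {"least bad doc": 1.0, "bad doc": -1.0},
    "query with many good answers": {"excellent doc": 1.0, "good doc": -1.0},
}
# Hypothetical biases: the first query's candidates are weak overall, the second's strong.
bias_by_query = {
    "query with no good answer": -2.0,
    "query with many good answers": 2.0,
}
scores = absolute_scores(elo_by_query, bias_by_query)
```

After the shift, the lowest-ranked document of the strong query scores above the top-ranked document of the weak query, which is the calibration the within-query scores alone cannot express.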

The final step is to use the dataset generated by this function for supervised fine-tuning of the final reranker model with a standard mean squared error loss. The research team found that fine-tuning models from the Qwen family produced the best results, which gave rise to the zerank-1 and zerank-1-small models.

This approach, which uses mathematical modeling through the ELO system to generate high-quality training data, is a distinctive and effective direction in reranker research. It addresses at the root the noise and bottlenecks that traditional methods incur by relying on unreliable manual absolute scoring.