AI-model-comparison is a browser-based web tool that lets users compare responses from two different large language models (LLMs) side by side. After the user enters a question, the tool sends requests to the two configured model APIs at the same time and displays the returned results next to each other. The tool runs entirely in the user's browser and requires no back-end server; sensitive information such as the user's API key is used only locally to call the configured APIs and is not uploaded to any other server. It is designed primarily for developers, researchers, and AI enthusiasts who need to evaluate and select the most suitable AI model for a specific task, and its interface makes the differences between models readily apparent.

Features

- Parallel comparison: Enter a question once, and the tool calls two different AI models in parallel and displays their answers side by side for direct comparison.

- Multi-turn dialogue: Supports continuous follow-up questions. After the first answer, you can keep entering questions, and the tool sends each request with the complete conversation history for a more in-depth comparison.

- Detailed metrics: Alongside each answer, the tool shows key information such as the response time and the number of tokens consumed by each model, providing data for performance evaluation.

- Conversation History: All conversations are automatically recorded and users can view the complete context at any time for easy review and analysis.

- Configuration autosave: The user's API configuration information (excluding the API key) is automatically saved locally in the browser and does not need to be refilled the next time it is used.

- Connection test: A one-click test quickly verifies whether the API address and key can connect successfully, simplifying the configuration process.

- Quick operation: Press Ctrl+Enter to start a comparison; double-click an answer returned by a model to copy it to the clipboard.

- Pure front-end operation: The entire tool consists only of HTML, CSS and JavaScript. There is no back-end service to install; just open the file in your browser and use it.

Usage Guide

AI-model-comparison is implemented purely on the front end, so it is simple to use: there is no installation or deployment process, and a modern browser is all you need to run it.

Preparation

Before using it, you need to prepare the following information:

- API information for AI models: You need at least one (two recommended for comparison) language model API compatible with the OpenAI API format. For each one, you need the following three pieces of information:

  - API Endpoint (the API interface address)
  - Model Name (the name of the model)
  - API Key (the API key)

Common services and their configuration examples are listed below:

- OpenAI Official API:

  - API Endpoint: https://api.openai.com/v1/chat/completions
  - Model Name: gpt-4o or gpt-3.5-turbo
  - API Key: your OpenAI account key

- OpenRouter (platform for aggregating multiple model services):

  - API Endpoint: https://openrouter.ai/api/v1/chat/completions
  - Model Name: openai/gpt-4o-mini
  - API Key: your OpenRouter account key

- Locally deployed models or third-party proxy services:

- If you have deployed a model locally with a tool such as Ollama or vLLM and it exposes an OpenAI-compatible API server, you can fill in the local address, for example http://localhost:8000/v1/chat/completions.

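The three values above map onto a single HTTP request in the OpenAI chat-completions format. The following is a minimal sketch in Python, used here only for illustration (the tool itself is written in JavaScript and its actual request code is not shown in this article). The function name `build_chat_request` and the key `sk-placeholder` are hypothetical; the request is built but not sent.

```python
import json
import urllib.request


def build_chat_request(endpoint: str, model: str, api_key: str,
                       prompt: str) -> urllib.request.Request:
    """Build (but do not send) a POST request in the OpenAI chat-completions format."""
    payload = {
        "model": model,  # the "Model Name" field
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        endpoint,  # the "API Endpoint" field
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",  # the "API Key" field
        },
        method="POST",
    )


# Example using the OpenRouter values listed above; the key is a dummy placeholder.
request = build_chat_request(
    "https://openrouter.ai/api/v1/chat/completions",
    "openai/gpt-4o-mini",
    "sk-placeholder",
    "Hello",
)
# urllib.request.urlopen(request) would actually send it.
```

Switching providers only changes the three arguments, which is why any OpenAI-compatible service can be plugged in.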

Workflow

Step 1: Download and open the tool

- Visit the project's GitHub page: https://github.com/hubhubgogo/AI-model-comparison

- Click the green Code button, then select Download ZIP to download the entire project to your computer.

- Unzip the downloaded ZIP file; you will see index.html, style.css, script.js, and other files.

- Open index.html directly in your browser (Chrome, Firefox, Safari or Edge recommended), and the tool interface loads.

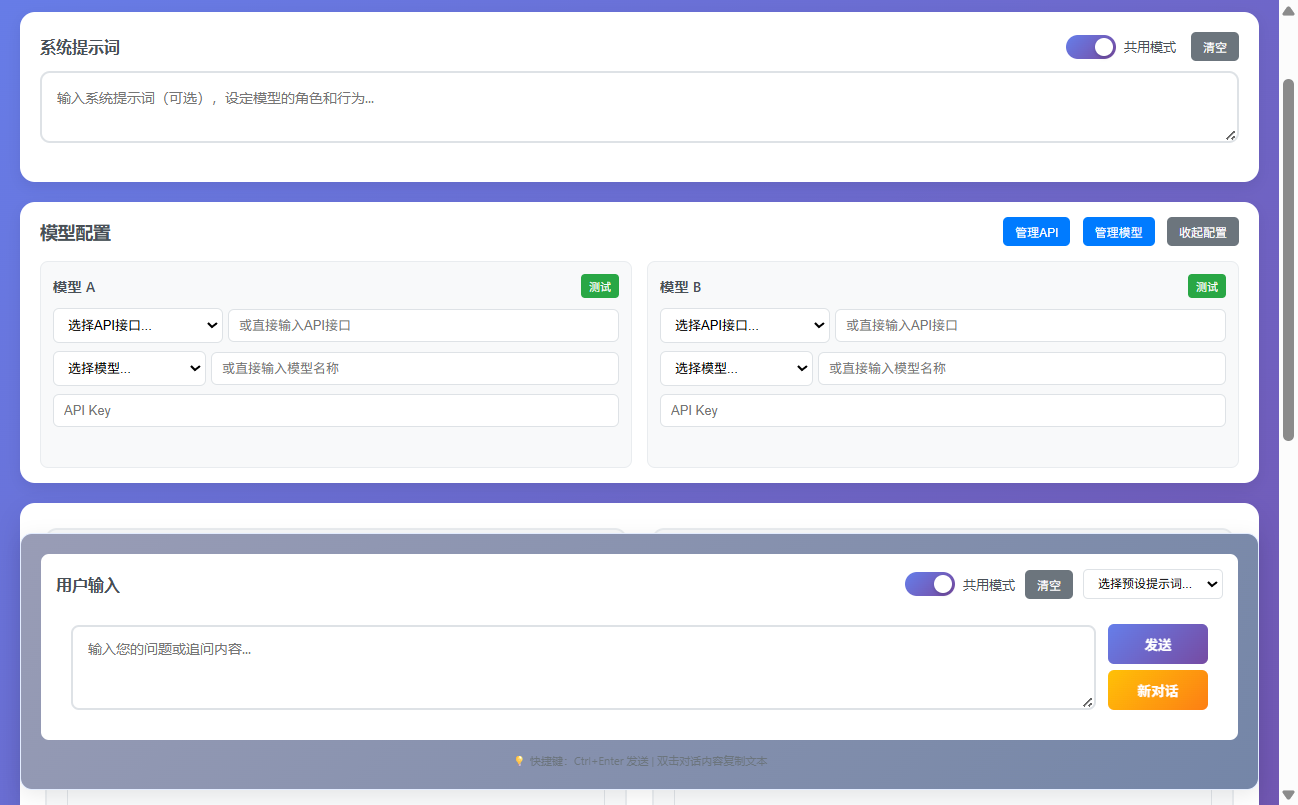

Step 2: Configure the Model API

- The tool interface is divided into left and right columns, each representing a model to be compared.

- In the "Model A" configuration area on the left, fill in the API Endpoint, Model Name, and API Key you prepared.

- Similarly, in the "Model B" configuration area on the right, fill in the API information for another model. You can also use the same API with a different model name for comparison (e.g., Model A uses gpt-4o and Model B uses gpt-3.5-turbo).

- Once the fields are filled in, you can click the "Test Connection" button below each configuration area. The tool sends a simple request to verify that the API configuration is correct. If it is, the button turns green and displays "Connection Successful"; if the test fails, an error message is shown to help you find the problem.
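When a connection test fails, the HTTP status code of the response usually points to the cause. The following Python sketch shows one way to turn a status code into a hint. It is an illustrative assumption, not the tool's actual logic: the function name `describe_test_result` and every message except "Connection Successful" are hypothetical, and individual providers may use status codes differently.

```python
def describe_test_result(status_code: int) -> str:
    """Map an HTTP status code from a test request to a human-readable hint."""
    if status_code == 200:
        return "Connection Successful"
    if status_code in (401, 403):
        return "Authentication failed: check the API key"
    if status_code == 404:
        return "Not found: check the API endpoint and the model name"
    if status_code == 429:
        return "Rate limited or out of quota: retry later"
    if 500 <= status_code < 600:
        return "Server error on the provider side"
    return f"Unexpected status code {status_code}"


for code in (200, 401, 404):
    print(code, "->", describe_test_result(code))
```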

Step 3: Enter the prompts and start comparing

- System Prompt: This is an optional input box. Here you can set the AI model's role, behavioral guidelines, or response style. For example, typing "You are a senior software engineer" will make the model answer from a more technical perspective. This setting takes effect for the entire conversation.

- User Prompt: This is the required main input box. Enter the question you want to ask or the task you want the model to perform, for example, "Please write a quick sort algorithm in Python".

- Once you have finished typing, click the "Start Comparison" button in the center of the page, or press the shortcut Ctrl+Enter.

Step 4: View and analyze the results

- After clicking the button, the tool sends a request to each of the two configured API addresses.

- The results returned by the models are displayed side by side in the left and right dialog boxes, so you can see at a glance which model's answer is more accurate, more detailed, or closer to your requirements.

- Above each answer, metadata such as the model's response time and the number of tokens used is displayed, which helps you evaluate the model in terms of performance and cost.
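The idea behind this step (two requests issued in parallel, each timed, with token usage read from the response) can be sketched as follows. This is a Python illustration under stated assumptions, not the tool's JavaScript code: `timed_call`, `compare`, and `fake_model` are hypothetical names, the `send` callables stand in for real HTTP calls, and the response shape (`choices[0].message.content`, `usage.total_tokens`) follows the OpenAI chat-completions format.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def timed_call(send: Callable[[str], dict], prompt: str) -> dict:
    """Call one model, recording elapsed time and reported token usage."""
    start = time.perf_counter()
    response = send(prompt)
    elapsed = time.perf_counter() - start
    return {
        "answer": response["choices"][0]["message"]["content"],
        "seconds": elapsed,
        # Some servers omit "usage"; None is returned in that case.
        "total_tokens": response.get("usage", {}).get("total_tokens"),
    }


def compare(send_a: Callable[[str], dict], send_b: Callable[[str], dict],
            prompt: str) -> tuple:
    """Run both calls concurrently so neither waits for the other."""
    with ThreadPoolExecutor(max_workers=2) as pool:
        future_a = pool.submit(timed_call, send_a, prompt)
        future_b = pool.submit(timed_call, send_b, prompt)
        return future_a.result(), future_b.result()


def fake_model(name: str) -> Callable[[str], dict]:
    """Stand-in for a real API call; returns an OpenAI-shaped response."""
    def send(prompt: str) -> dict:
        return {
            "choices": [{"message": {"role": "assistant",
                                     "content": f"{name}: {prompt}"}}],
            "usage": {"prompt_tokens": 1, "completion_tokens": 2,
                      "total_tokens": 3},
        }
    return send


result_a, result_b = compare(fake_model("A"), fake_model("B"), "Hi")
print(result_a["answer"], result_a["total_tokens"])
print(result_b["answer"], result_b["total_tokens"])
```

Timing each call separately keeps the response times comparable even though both requests are in flight at the same time.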

Step 5: Multiple rounds of follow-up questions

- Once the first comparison results are available, a new "Continue" input box appears below the input box.

- You can enter follow-up questions here, such as "Please add detailed comments to the code you just wrote".

- Click "Continue" or press Ctrl+Enter again. The tool sends the entire conversation history (the system prompt, your first question, the model's first answer, and your follow-up question) to both models.

- In this way, you can hold multiple consecutive rounds of conversation to test each model's performance on complex, ongoing tasks in depth.
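In the OpenAI chat format, the conversation history described above is an ordered list of messages with `system`, `user`, and `assistant` roles. The following Python sketch shows how such a list could grow from round to round. The helper names `start_history` and `add_follow_up` are hypothetical, and the sketch assumes each model receives a history containing its own earlier answers; the tool's actual implementation may differ.

```python
def start_history(system_prompt: str, user_prompt: str) -> list:
    """First round: optional system prompt followed by the user's question."""
    messages = []
    if system_prompt:
        messages.append({"role": "system", "content": system_prompt})
    messages.append({"role": "user", "content": user_prompt})
    return messages


def add_follow_up(messages: list, assistant_answer: str, follow_up: str) -> list:
    """Later rounds: append the model's last answer and the new question."""
    return messages + [
        {"role": "assistant", "content": assistant_answer},
        {"role": "user", "content": follow_up},
    ]


history = start_history(
    "You are a senior software engineer",
    "Please write a quick sort algorithm in Python",
)
history = add_follow_up(
    history,
    "def quick_sort(items): ...",  # placeholder for the model's first answer
    "Please add detailed comments to the code you just wrote",
)
print([message["role"] for message in history])
```

The whole list is sent as the `messages` field of the next request, which is how the model keeps the context of earlier rounds.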

Step 6: Manage the conversation

- Copy content: If you are satisfied with a model's answer, double-click the text area of the answer and its contents are copied to the clipboard.

- Start a new conversation: To start over, click the "New Conversation" button on the page. This clears the current conversation history so you can begin a new round of comparison testing from scratch.

Application Scenarios

- Model Selection

Before developing an AI application, developers need to choose the language model that best suits their business scenario. This tool can be used to test different models (e.g., GPT-4o vs. Claude 3 Sonnet) on specific tasks (e.g., code generation, content creation, customer service Q&A) and to choose based on real-world output quality, response speed, and cost.

- Prompt Engineering

For AI application developers and researchers, the quality of the prompt directly affects the model's output. They can use the same model on both sides, configure different versions of the system prompt or user prompt for the left and right columns, and quickly compare and iterate to find the prompt wording that gives the best results.

- Model Capability Assessment

When analyzing newly released models, researchers and AI enthusiasts can use the tool to compare the new models with existing mainstream models in areas such as logical reasoning, knowledge, and safety compliance, gathering first-hand material for model evaluation reports.

- Education & Presentation

When teaching or presenting AI-related technology, this tool can show the audience the differences between AI models directly, for example by comparing a base model with a fine-tuned model on domain-specific knowledge, which makes the concepts concrete and easy to understand.

FAQ

- Is this tool secure? Will my API key be compromised?

The tool is a pure front-end application: all code runs in your browser, and your API key is used only to send requests directly from your browser to the API service provider you configured. It is not saved or transferred to any third-party server. For maximum security, do not use the tool on public or untrusted computers.

- Why is there no response or a network error when I click "Test Connection" or "Start Comparison"?

There are several common causes. First, check that the API Endpoint address is filled in correctly. Second, make sure your computer can reach the API address; some APIs (such as the official OpenAI API) may require a specific network environment to access. Finally, check that your API Key is correct and valid.

- What AI models does this tool support?

It supports any model service that provides an interface compatible with the OpenAI API format. This includes the official OpenAI models, the Microsoft Azure OpenAI service, the many models on the OpenRouter platform, and many open-source models that can be deployed through local servers such as Ollama.

- Is it possible to export the conversation history?

The current version cannot export the entire conversation history with one click. However, you can save the information you need manually by double-clicking any answer to copy its content.