vllm-cli is a command-line interface tool for vLLM, designed to make deploying and managing large language models easier. It provides both an interactive menu interface and a traditional command-line mode. Users can manage local and remote models, use preset or custom configuration profiles, and monitor the status of the model server in real time. For developers who need to quickly test different models locally or integrate model serving into automation scripts, vllm-cli offers an efficient, easy-to-use solution. It also includes built-in system information checks and a log viewer to help users locate problems quickly.

Feature List

- Interactive mode: Provides a feature-rich terminal interface that users navigate through menus, lowering the barrier to entry.

- Command-line mode: Supports direct commands for easy integration into automation scripts and workflows.

- Model management: Automatically discovers and manages model files stored locally.

- Remote model support: Loads and runs models directly from the HuggingFace Hub, with no need to download them in advance.

- Configuration profiles: Includes built-in profiles optimized for different scenarios (e.g., high throughput, low memory) and also supports user-defined ones.

- Server monitoring: Shows the status of the vLLM server in real time, including GPU utilization and log output.

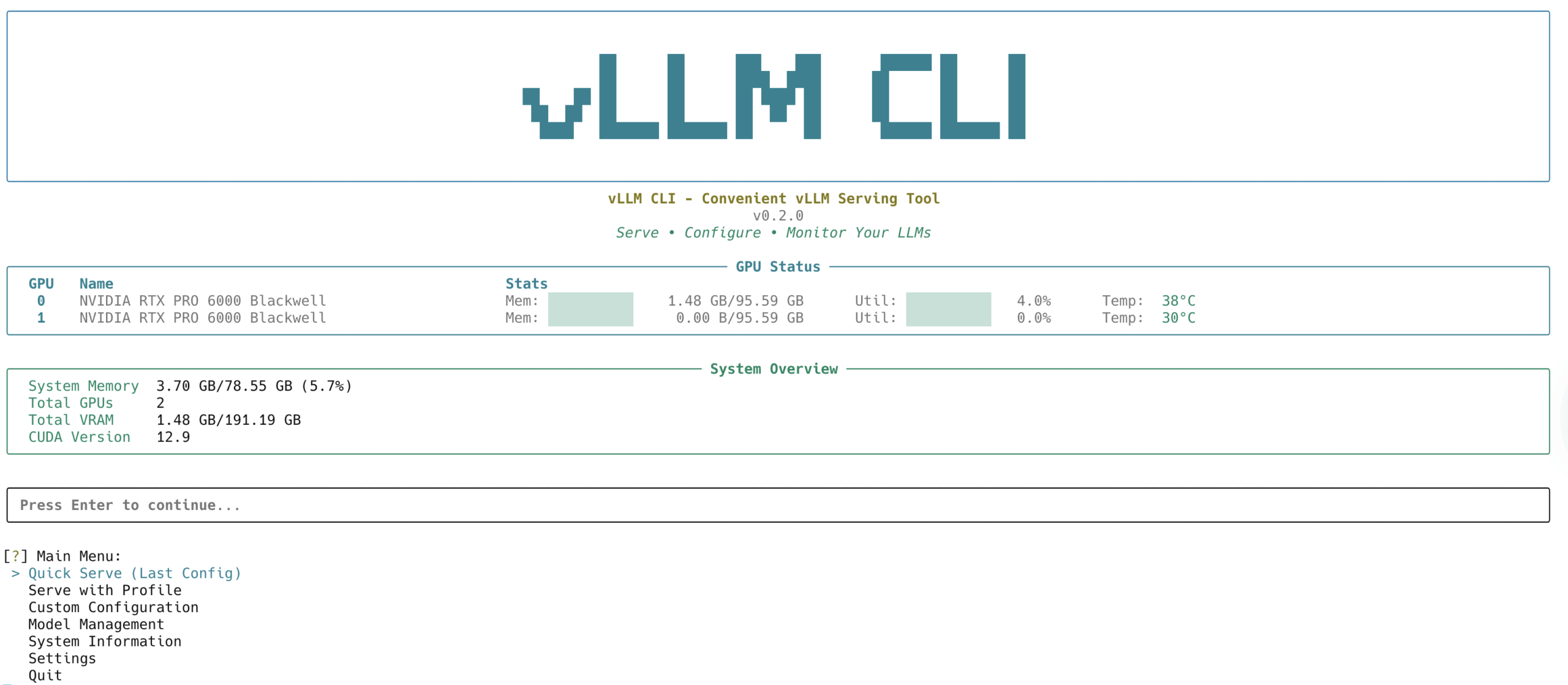

- System information: Checks and displays GPU, memory, and CUDA compatibility information.

- Log viewer: When the server fails to start, the full log file can be viewed to troubleshoot errors.

- LoRA support: Allows one or more LoRA adapters to be mounted when the base model is loaded.

Usage Guide

vllm-cli is designed to simplify deploying large language models with vLLM. The installation and usage steps below will help you get started quickly.

1. Installation

Prerequisites

Before installing, make sure your system meets the following requirements:

- Python 3.11 or later.

- A CUDA-enabled NVIDIA GPU.

- The vLLM core package has been installed.
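The prerequisites above can be checked from a script before installing. The following is a minimal sketch and not part of vllm-cli itself: the helper name `check_prerequisites` is hypothetical, and it treats finding the `nvidia-smi` executable on the PATH as a rough proxy for a usable NVIDIA GPU, which is an assumption.

```python
import importlib.util
import shutil
import sys


def check_prerequisites(min_python=(3, 11)):
    """Return rough pass/fail checks for the prerequisites listed above."""
    return {
        # The interpreter running this script must be new enough.
        "python": sys.version_info >= min_python,
        # nvidia-smi ships with the NVIDIA driver; finding it on PATH is only
        # a proxy for "a CUDA-enabled NVIDIA GPU is available" (assumption).
        "nvidia_gpu": shutil.which("nvidia-smi") is not None,
        # find_spec locates the package without importing it.
        "vllm": importlib.util.find_spec("vllm") is not None,
    }


if __name__ == "__main__":
    for name, ok in check_prerequisites().items():
        print(f"{name}: {'ok' if ok else 'missing'}")
```

A failed check only shows that something is missing from the current Python environment. It does not verify that the installed CUDA and vLLM versions are compatible with each other.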

Installing from PyPI

The easiest way to install is from the official PyPI repositories via pip:

pip install vllm-cli

Install from source

If you want to try the latest features, you can install from the GitHub source.

First, clone the project repository locally:

git clone https://github.com/Chen-zexi/vllm-cli.git

cd vllm-cli

Then, install the required dependency libraries. It is recommended to perform these operations in a clean virtual environment.

# Install dependencies

pip install -r requirements.txt

pip install hf-model-tool

# Install in development (editable) mode

pip install -e .
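After either installation route, you can confirm that the package is visible to the current Python environment. This is a small sketch using only the standard library. It assumes the distribution name is `vllm-cli` (the name used with pip above) and that the installed executable is also called `vllm-cli`. The helper name `installed_version` is hypothetical.

```python
import shutil
from importlib import metadata


def installed_version(dist_name="vllm-cli"):
    """Return the installed version string of a distribution, or None if absent."""
    try:
        return metadata.version(dist_name)
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    version = installed_version()
    on_path = shutil.which("vllm-cli") is not None
    print(f"vllm-cli version: {version or 'not installed'}")
    print(f"vllm-cli executable on PATH: {on_path}")
```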

2. Usage

vllm-cli provides two modes of operation: an interactive interface and command line instructions.

Interactive mode

This is the best way for beginners to get started. Start by typing the following command directly into the terminal:

vllm-cli

Upon startup, you'll see a welcome screen with menu-driven options that walk you through all the steps of model selection, configuration, and service startup.

- Model Selection: The interface lists locally discovered models and remote models on the HuggingFace Hub. You can directly select one for deployment.

- Quick Start: If you have successfully run a model before, this feature automatically loads the last configuration for one-click startup.

- Customized Configuration: Enter the Advanced Configuration menu, where you can adjust dozens of vLLM parameters including quantization method, tensor parallel size, and more.

- Server Monitoring: Once the service is started, you can see real-time GPU utilization, server status, and log streams in the monitoring interface.
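To give a sense of the GPU figures shown on such a monitoring screen, the sketch below reads similar numbers from `nvidia-smi`. It is not how vllm-cli collects them, which this article does not describe. The function names are hypothetical, and the parser assumes the `csv,noheader,nounits` output format with the four fields queried here.

```python
import subprocess

QUERY_FIELDS = ["index", "utilization.gpu", "memory.used", "memory.total"]


def _to_int(value):
    """Convert a numeric field to int; return None for values like '[N/A]'."""
    return int(value) if value.isdigit() else None


def parse_gpu_csv(text):
    """Parse `nvidia-smi --format=csv,noheader,nounits` output into dicts."""
    gpus = []
    for line in text.strip().splitlines():
        index, util, used, total = [field.strip() for field in line.split(",")]
        gpus.append({
            "index": _to_int(index),
            "utilization_pct": _to_int(util),
            "memory_used_mib": _to_int(used),
            "memory_total_mib": _to_int(total),
        })
    return gpus


def query_gpus():
    """Run nvidia-smi once and return one dict per GPU."""
    out = subprocess.run(
        ["nvidia-smi", f"--query-gpu={','.join(QUERY_FIELDS)}",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_gpu_csv(out)
```

Calling `query_gpus()` in a loop with a short sleep gives a crude live view. It raises `FileNotFoundError` if `nvidia-smi` is not installed.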

Command-line mode

Command-line mode is suited to automation scripts and advanced users. The core command is serve.

Basic usage

Start a model service using the default configuration:

vllm-cli serve <MODEL_NAME>

Here, <MODEL_NAME> is the name of the model, e.g. Qwen/Qwen2-1.5B-Instruct.

Using preset configurations

You can use the --profile parameter to specify a built-in optimization configuration. For example, use the high_throughput configuration to maximize performance:

vllm-cli serve <MODEL_NAME> --profile high_throughput
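When the serve command is driven from a script, it helps to build the argument list in one place. The sketch below uses only the `serve` subcommand and the `--profile` flag shown above. The function names and the `extra_args` parameter are hypothetical, and the article does not say whether `vllm-cli serve` stays in the foreground, so launching it with `Popen` is an assumption.

```python
import shlex
import subprocess


def build_serve_command(model, profile=None, extra_args=()):
    """Assemble the argv list for `vllm-cli serve`."""
    cmd = ["vllm-cli", "serve", model]
    if profile:
        cmd += ["--profile", profile]
    # Any further flags are passed through unchanged.
    cmd += list(extra_args)
    return cmd


def start_server(model, profile=None, extra_args=()):
    """Launch the server as a child process and return the Popen handle."""
    cmd = build_serve_command(model, profile, extra_args)
    print("Running:", shlex.join(cmd))
    return subprocess.Popen(cmd)
```

For example, `build_serve_command("Qwen/Qwen2-1.5B-Instruct", profile="high_throughput")` yields the same command line as the one shown above. Passing a list instead of a shell string avoids quoting problems with unusual model names.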

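Built-in profiles include:

- `standard`: Minimal configuration with smart defaults.

- `moe_optimized`: Optimized for MoE (Mixture of Experts) models.

- `high_throughput`: Performance configuration aimed at maximum request throughput.

- `low_memory`: For memory-constrained environments, for example by enabling FP8 quantization.

Passing custom parameters

You can also pass any parameter supported by vLLM directly on the command line. For example, to specify AWQ quantization together with a tensor parallel size of 2:

```bash
vllm-cli serve <MODEL_NAME> --quantization awq --tensor-parallel-size 2
```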

Other common commands

- List available models:

vllm-cli models

- Display system information:

vllm-cli info

- Check for running services:

vllm-cli status

- Stop a service (the port number must be specified):

vllm-cli stop --port 8000
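These commands can also be called from an automation script. The sketch below wraps them with `subprocess`. The function names and the injectable `runner` parameter are hypothetical. Treating exit code 0 as success is an assumption, since the article does not document vllm-cli's exit codes or output format.

```python
import subprocess


def _subprocess_runner(argv):
    """Run argv and return (exit_code, stdout)."""
    result = subprocess.run(argv, capture_output=True, text=True)
    return result.returncode, result.stdout


def run_cli(args, runner=_subprocess_runner):
    """Run `vllm-cli <args>` through the given runner."""
    return runner(["vllm-cli", *args])


def stop_server(port, runner=_subprocess_runner):
    """Stop the service on `port`; True if the command exited with code 0.

    Assumption: a zero exit code means the stop request succeeded.
    """
    code, _ = run_cli(["stop", "--port", str(port)], runner=runner)
    return code == 0
```

Taking the runner as a parameter lets the wrappers be tested without a GPU or a running server. In normal use the default runner calls the real executable, for example `run_cli(["status"])`.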

3. Configuration files

The configuration files for vllm-cli are stored in the ~/.config/vllm-cli/ directory under the user's home directory:

- config.yaml: The main configuration file.

- user_profiles.json: User-defined configuration profiles.

- cache.json: Caches model lists and system information to improve performance.
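A script can locate these files without knowing their internal layout. The sketch below only resolves the paths named above and parses `user_profiles.json` as generic JSON. The function names are hypothetical, and nothing is assumed about the schema of the profiles file.

```python
import json
from pathlib import Path

# Directory named in the text above.
CONFIG_DIR = Path.home() / ".config" / "vllm-cli"


def config_paths(config_dir=CONFIG_DIR):
    """Return the three configuration file paths described above."""
    return {
        "config": config_dir / "config.yaml",
        "user_profiles": config_dir / "user_profiles.json",
        "cache": config_dir / "cache.json",
    }


def load_user_profiles(config_dir=CONFIG_DIR):
    """Return the parsed user_profiles.json, or None if the file is absent."""
    path = config_paths(config_dir)["user_profiles"]
    if not path.exists():
        return None
    with path.open(encoding="utf-8") as fh:
        return json.load(fh)
```

Reading `config.yaml` would need a YAML parser such as PyYAML, which is left out here to keep the example within the standard library.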

When encountering problems such as model loading failures, the tool provides the option for you to view the logs directly, which is very useful for debugging.

Application Scenarios

- Local development and model evaluation

Researchers and developers can quickly deploy and switch between different large language models for algorithm validation, functional testing, and performance evaluation in their local environments without having to write complex server deployment code.

- Automated deployment scripts

Using its command-line mode, vllm-cli can be integrated into CI/CD pipelines or automated ops scripts. For example, when a new model finishes training, a script can be triggered automatically to deploy the model and benchmark it.

- Teaching and demonstration

In teaching or product demonstration scenarios, the interactive interface makes it easy to launch a large language model service and show the model's behavior to others without dealing with the underlying configuration details.

- Lightweight application backend

For some internal tools or lightweight applications, you can use vllm-cli to quickly set up a stable large language model inference backend for small-scale use.
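For the automation and backend scenarios above, a script usually needs to wait until the server is ready and then send requests to it. The sketch below assumes that vllm-cli starts vLLM's OpenAI-compatible HTTP server, which exposes `/health` and `/v1/completions`, and that it listens on port 8000 (the port used in the stop example). This article does not confirm either assumption. All function names are hypothetical.

```python
import json
import time
import urllib.error
import urllib.request


def http_ok(url, timeout=2.0):
    """Return True if a GET to `url` answers with HTTP 200."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status == 200
    except (urllib.error.URLError, OSError):
        return False


def wait_until_ready(base_url, timeout_s=300.0, interval_s=2.0, probe=http_ok):
    """Poll `<base_url>/health` until it succeeds or the timeout expires."""
    deadline = time.monotonic() + timeout_s
    while True:
        if probe(f"{base_url}/health"):
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval_s)


def build_completion_request(base_url, model, prompt, max_tokens=32):
    """Build (but do not send) a POST request for /v1/completions."""
    body = json.dumps({"model": model, "prompt": prompt, "max_tokens": max_tokens})
    return urllib.request.Request(
        f"{base_url}/v1/completions",
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
```

A CI job could call `wait_until_ready("http://localhost:8000")` after starting the server, then pass the result of `build_completion_request(...)` to `urllib.request.urlopen` and time the responses as a simple benchmark.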

FAQ

- What types of hardware does vllm-cli support?

Currently, vllm-cli primarily supports NVIDIA GPUs with CUDA. Support for AMD GPUs is still on the development roadmap.

- What should I do if a model fails to load?

First, use the tool's log viewer to check the detailed error messages, which usually indicate what the problem is. Second, verify that your GPU model and vLLM version are compatible with the model. Finally, check the official vLLM documentation to see whether the model requires special startup parameters, such as a specific quantization method or trusting remote code.

- How does the tool discover my local HuggingFace models?

vllm-cli integrates a helper tool called hf-model-tool. It automatically scans HuggingFace's default cache directory, as well as any other model directories configured manually by the user, to discover and manage all model files stored locally.

- Can I use it without a GPU?

No. vllm-cli relies on the vLLM engine, which is designed to run large language models efficiently on GPUs, so an NVIDIA GPU with CUDA support is required.
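The local model discovery mentioned in the answers above can be approximated with a short directory scan. This is not the hf-model-tool implementation, only an illustration that relies on the HuggingFace Hub cache layout, where each downloaded model lives in a folder named `models--<org>--<name>`. The function names are hypothetical, and the cache lookup is simplified (it ignores `XDG_CACHE_HOME`).

```python
import os
from pathlib import Path


def default_hf_cache():
    """Resolve the Hub cache directory (simplified: HF_HUB_CACHE, then HF_HOME)."""
    if "HF_HUB_CACHE" in os.environ:
        return Path(os.environ["HF_HUB_CACHE"])
    hf_home = os.environ.get("HF_HOME", str(Path.home() / ".cache" / "huggingface"))
    return Path(hf_home) / "hub"


def list_cached_models(cache_dir=None):
    """Return repo IDs (e.g. 'Qwen/Qwen2-1.5B-Instruct') found in the cache."""
    cache_dir = Path(cache_dir) if cache_dir else default_hf_cache()
    if not cache_dir.is_dir():
        return []
    prefix = "models--"
    # Folder names encode '/' as '--'; names that themselves contain '--'
    # would be decoded ambiguously by this simple replacement.
    return sorted(
        entry.name[len(prefix):].replace("--", "/")
        for entry in cache_dir.iterdir()
        if entry.is_dir() and entry.name.startswith(prefix)
    )
```

Calling `list_cached_models()` with no argument scans the default cache. Passing a path scans a custom model directory that follows the same naming layout.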