TaskingAI is an open source platform that helps developers build AI-native applications. It simplifies the development of applications based on Large Language Models (LLMs) by providing an intuitive interface, powerful APIs, and a flexible modular design. The platform supports a wide range of mainstream model providers, such as OpenAI and Anthropic, and allows the integration of local models. TaskingAI emphasizes asynchronous high performance, plug-in extensions, and multi-tenant support, making it suitable for everyone from beginners to professional developers. Users can rapidly deploy the Community Edition via Docker or use the cloud service for development. The platform also provides a Python client SDK that makes it easy for developers to manage and invoke AI functions. TaskingAI is committed to making AI application development easier and more efficient, with an active community that encourages users to contribute.

Feature List

- Supports a wide range of Large Language Models (LLMs), including OpenAI, Claude, and Mistral, and can integrate local models through tools such as Ollama.

- Provides a rich set of plug-ins, such as Google search, website content reading, and stock data retrieval, and supports user-defined tools.

- Includes a built-in RAG (Retrieval-Augmented Generation) system for easy handling and management of external data.

- Supports asynchronous processing, using FastAPI to handle concurrent requests with high performance.

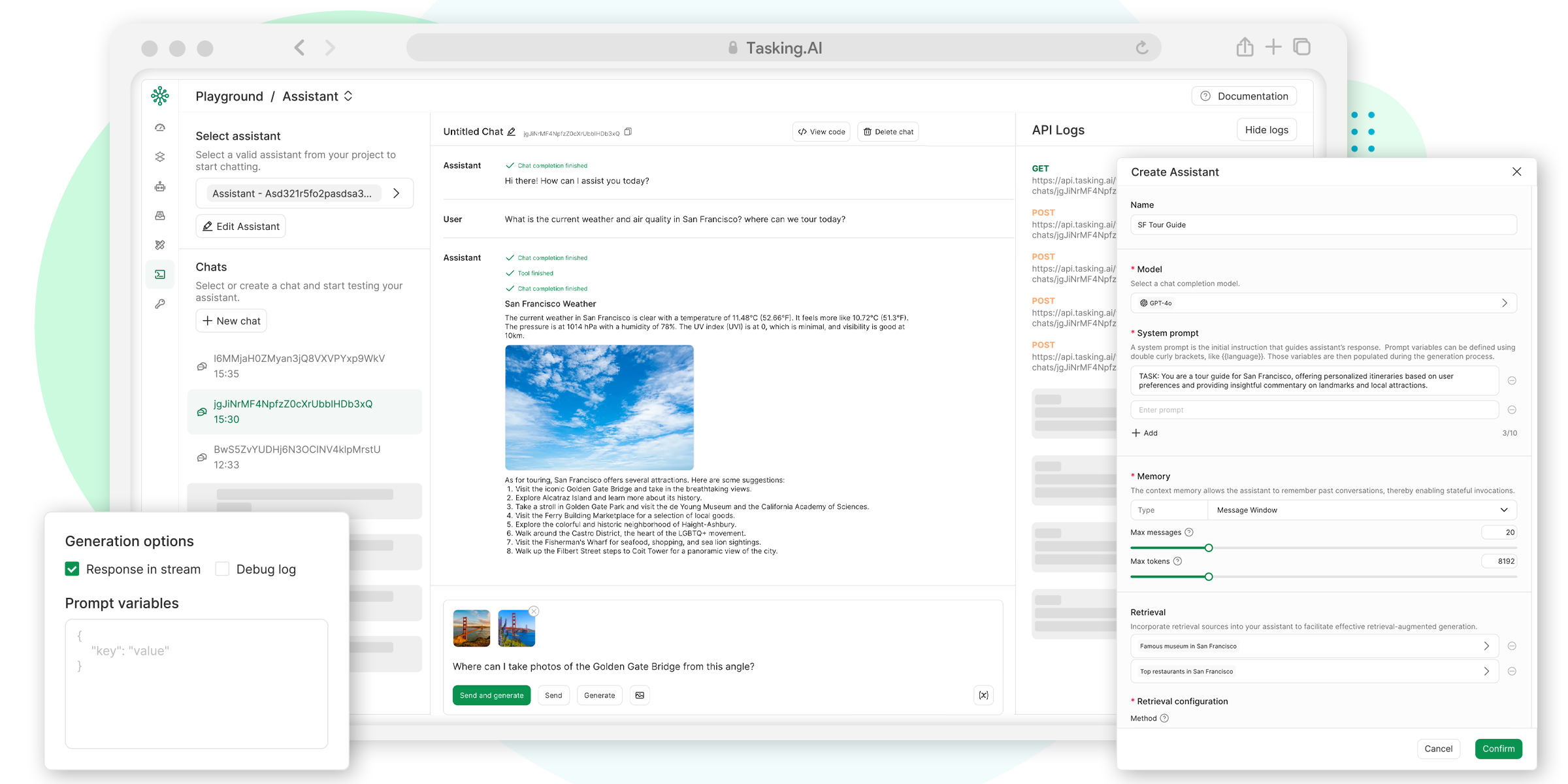

- Provides an intuitive UI console to simplify project management and testing workflows.

- Supports multi-tenant application development for rapid deployment and scaling.

- Provides a unified API interface for easy management of models, tools and data.

- Supports OpenAI-compatible chat completion APIs to simplify task creation and invocation.

Usage Guide

TaskingAI is a powerful platform for developers to quickly build AI-native applications. Below is a detailed installation and usage guide to help users get started quickly.

Installation process

TaskingAI Community Edition can be quickly deployed via Docker and is suitable for local development or testing. Here are the specific steps:

- Prepare the environment

- Install Docker and Docker Compose.

- Make sure Python 3.8 or above is installed.

- Optional: create a Python virtual environment to isolate dependencies.

- Download TaskingAI Community Edition

- Visit the GitHub repository at https://github.com/TaskingAI/TaskingAI.

- Clone the repository locally:

      git clone https://github.com/TaskingAI/TaskingAI.git

- Go to the project directory:

      cd TaskingAI

- Configure environment variables

- In the project root directory, create the .env file to set the API key and other configuration. Example:

      TASKINGAI_API_KEY=your_api_key
      TASKINGAI_HOST=http://localhost:8080

- API keys are available from the TaskingAI console (the cloud version requires account registration).

- Start the services

- Start the TaskingAI service using Docker Compose:

      cd docker
      docker-compose -p taskingai pull
      docker-compose -p taskingai --env-file .env up -d

- After the service has started, open http://localhost:8080 to view the console.

- Install the Python client SDK

- Install the TaskingAI Python client using pip:

      pip install taskingai

- Initialize the SDK:

      import taskingai

      # Point the client at the local Community Edition service
      taskingai.init(api_key="YOUR_API_KEY", host="http://localhost:8080")
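To avoid hard-coding credentials, the values from the .env file described in the configuration step can be read and passed to the SDK. The following is a minimal sketch: `parse_env` and `init_kwargs` are hypothetical helper names, not part of the TaskingAI SDK, and the parser handles only simple KEY=VALUE lines.

```python
from typing import Dict


def parse_env(text: str) -> Dict[str, str]:
    """Parse simple KEY=VALUE lines, skipping blank lines and # comments."""
    values: Dict[str, str] = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop surrounding whitespace and optional quotes around the value
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def init_kwargs(env: Dict[str, str]) -> Dict[str, str]:
    """Map the .env variable names to the arguments of taskingai.init()."""
    return {
        "api_key": env["TASKINGAI_API_KEY"],
        # Fall back to the local console address when no host is configured
        "host": env.get("TASKINGAI_HOST", "http://localhost:8080"),
    }


sample = "TASKINGAI_API_KEY=your_api_key\nTASKINGAI_HOST=http://localhost:8080\n"
settings = init_kwargs(parse_env(sample))
print(settings)
```

With the SDK installed, `taskingai.init(**settings)` could then take the place of the hard-coded call; in practice the text would come from reading the .env file rather than from the `sample` string.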

Main Functions

The core features of TaskingAI include model integration, plugin usage, RAG system and API calls. Below are the specific operation guidelines:

- Model integration

TaskingAI supports a wide range of large language models. Users can select a model (e.g. Claude, Mistral) in the console or specify a model ID via the API. For example:

    from openai import OpenAI

    # TaskingAI exposes an OpenAI-compatible endpoint, so the OpenAI client can be reused
    client = OpenAI(
        api_key="YOUR_TASKINGAI_API_KEY",
        base_url="https://oapi.tasking.ai/v1",
    )

    response = client.chat.completions.create(
        model="YOUR_MODEL_ID",
        messages=[{"role": "user", "content": "Hello, how is the weather today?"}],
    )
    print(response)

- In the console, users can test the model's responses directly and adjust parameters such as temperature or maximum output length.
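The temperature and maximum output length that the console exposes can usually also be set per request. The following is a minimal sketch, assuming that TaskingAI's OpenAI-compatible endpoint honors the standard `temperature` and `max_tokens` parameters of the OpenAI chat completions API (this is not confirmed here). `ask` is a hypothetical helper, and a small stand-in client is used so the snippet runs without network access.

```python
from types import SimpleNamespace


def ask(client, model_id, prompt, temperature=0.7, max_tokens=256):
    """Send one user message and return the assistant's reply text."""
    response = client.chat.completions.create(
        model=model_id,
        messages=[{"role": "user", "content": prompt}],
        temperature=temperature,  # assumed to be honored by the endpoint
        max_tokens=max_tokens,    # assumed to be honored by the endpoint
    )
    return response.choices[0].message.content


class _FakeCompletions:
    """Stand-in for client.chat.completions that echoes the prompt back."""

    def create(self, model, messages, temperature, max_tokens):
        reply = SimpleNamespace(content=f"echo: {messages[-1]['content']}")
        return SimpleNamespace(choices=[SimpleNamespace(message=reply)])


# Mimics the attribute layout of the OpenAI client: client.chat.completions
fake_client = SimpleNamespace(chat=SimpleNamespace(completions=_FakeCompletions()))
print(ask(fake_client, "YOUR_MODEL_ID", "Hello"))
```

Passing a real `OpenAI(api_key=..., base_url="https://oapi.tasking.ai/v1")` client in place of `fake_client` would send the request to TaskingAI instead of the stand-in.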

- Plug-in use

TaskingAI offers a variety of built-in plug-ins such as Google search and website content reading. Users can also develop custom plug-ins. Steps:

- Select or add a plug-in on the Plug-ins page of the console.

- Call the plug-in through the API. For example, calling the Google search plug-in:

      import taskingai

      result = taskingai.plugin.google_search(query="latest AI technology")
      print(result)

- Custom plug-ins need to follow TaskingAI's tool development documentation; upload the code and bind it to the AI agent.

- RAG system operation

The RAG (Retrieval-Augmented Generation) system is used to process external data. Users can create data collections and retrieve content:

- Create a collection:

      import taskingai

      coll = taskingai.retrieval.create_collection(
          embedding_model_id="YOUR_EMBEDDING_MODEL_ID",
          capacity=1000,
      )
      print(f"Collection ID: {coll.collection_id}")

- Add a record:

      record = taskingai.retrieval.create_record(
          collection_id=coll.collection_id,
          type="text",
          content="Artificial intelligence is changing the world.",
          # Split the text into 200-token chunks that overlap by 20 tokens
          text_splitter={"type": "token", "chunk_size": 200, "chunk_overlap": 20},
      )
      print(f"Record ID: {record.record_id}")

- Retrieve a record:

      retrieved_record = taskingai.retrieval.get_record(
          collection_id=coll.collection_id,
          record_id=record.record_id,
      )
      print(f"Retrieved content: {retrieved_record.content}")
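The `text_splitter` settings in the record example control how text is cut into chunks before embedding. To illustrate what `chunk_size` and `chunk_overlap` mean, here is a minimal sketch of a sliding-window splitter. It is not TaskingAI's implementation: whitespace-separated words stand in for real tokens, and `split_tokens` is a hypothetical name.

```python
from typing import List


def split_tokens(tokens: List[str], chunk_size: int, chunk_overlap: int) -> List[List[str]]:
    """Cut tokens into windows of chunk_size that share chunk_overlap tokens."""
    if chunk_size <= 0 or not 0 <= chunk_overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= chunk_overlap < chunk_size")
    step = chunk_size - chunk_overlap  # how far each window advances
    chunks: List[List[str]] = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + chunk_size])
        if start + chunk_size >= len(tokens):
            break  # this window already reaches the end of the text
    return chunks


words = "one two three four five six seven eight nine ten".split()
for chunk in split_tokens(words, chunk_size=4, chunk_overlap=1):
    print(" ".join(chunk))
```

Each chunk repeats the last word of the previous one, which is the role the overlap plays: content that straddles a chunk boundary still appears intact in at least one chunk.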

- UI Console Usage

- After logging in to the console, go to the "Projects" page and create a new project.

- Select or configure a large language model on the Model screen.

- Run the workflow on the Test page to see the AI output in real time.

- Use the "Logging" feature to monitor API calls and performance.

Featured Functions

TaskingAI's distinguishing features include asynchronous processing and multi-tenant support:

- Asynchronous processing: The architecture is asynchronous and based on FastAPI, which suits high-concurrency scenarios. No additional configuration is needed; API calls are handled asynchronously by default. For example, when multiple AI agent tasks are created, the system processes the requests in parallel.

- Multi-tenant support: Suited to applications that serve multiple users. Users can assign models and plug-ins to different tenants in the console to ensure data isolation. To configure:

- Add a new tenant on the Tenant Management screen of the console.

- Assign separate API keys and model configurations to each tenant.
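On the client side, taking advantage of the server's asynchronous request handling means issuing requests concurrently rather than one after another. The sketch below shows that pattern with `asyncio.gather`. `fake_request` is a hypothetical stand-in that only sleeps; a real application would await an HTTP call to the TaskingAI API at that point.

```python
import asyncio
import time
from typing import List


async def fake_request(prompt: str) -> str:
    """Hypothetical stand-in for one API call; sleeps instead of using the network."""
    await asyncio.sleep(0.1)
    return f"done: {prompt}"


async def run_all(prompts: List[str]) -> List[str]:
    """Start every request at once and collect the results in input order."""
    return await asyncio.gather(*(fake_request(p) for p in prompts))


started = time.perf_counter()
results = asyncio.run(run_all(["task 1", "task 2", "task 3"]))
elapsed = time.perf_counter() - started
print(results)
print(f"elapsed: {elapsed:.2f}s")  # roughly one request's latency, not three
```

Because the three coroutines wait at the same time, the total time stays close to that of a single request, which is the behavior a concurrent server makes worthwhile.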

Notes

- Keep your API key secure and do not expose it.

- When deploying with Docker, check that port 8080 is not already in use.

- Cloud version users need to register for an account and visit https://www.tasking.ai to obtain an API key.
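The port check can be scripted before starting Docker Compose. This is a minimal sketch using only the Python standard library; `port_in_use` is a hypothetical helper, and it only detects a listener that is reachable on the given host.

```python
import socket


def port_in_use(port: int, host: str = "127.0.0.1") -> bool:
    """Return True if something accepts TCP connections on host:port."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as probe:
        probe.settimeout(1.0)
        # connect_ex returns 0 on success instead of raising an exception
        return probe.connect_ex((host, port)) == 0


if port_in_use(8080):
    print("Port 8080 is already in use; stop the other service or remap the port.")
else:
    print("Port 8080 is free.")
```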

Application Scenarios

- Development of AI chatbots

Developers can use TaskingAI to quickly build chatbots based on large language models, supporting multi-round conversations and external data retrieval, suitable for customer service or education scenarios.

- Automated data analysis

With plug-ins and the RAG system, TaskingAI can extract data from web pages or documents and combine it with AI analytics to generate reports or insights, suitable for market researchers.

- Rapid prototyping

Startups or individual developers can use TaskingAI's UI console and APIs to quickly build AI application prototypes and validate business ideas.

- Education and training

Educational institutions can use TaskingAI to create interactive learning assistants that help students look up concepts or generate practice questions.

FAQ

- What models does TaskingAI support?

TaskingAI supports a wide range of models such as OpenAI, Claude, and Mistral, and can integrate local models through tools such as Ollama.

- How do I get started with TaskingAI?

Download the Community Edition and deploy it via Docker, or sign up for the cloud version to get an API key. Then install the Python SDK to call the API.

- Is programming experience required?

Beginners can work through the UI console, while developers can implement more complex functionality through the API and SDK.

- What is the difference between the TaskingAI Community Edition and the cloud version?

The Community Edition is free and requires local deployment; the cloud version provides hosting services and is suitable for rapid development.