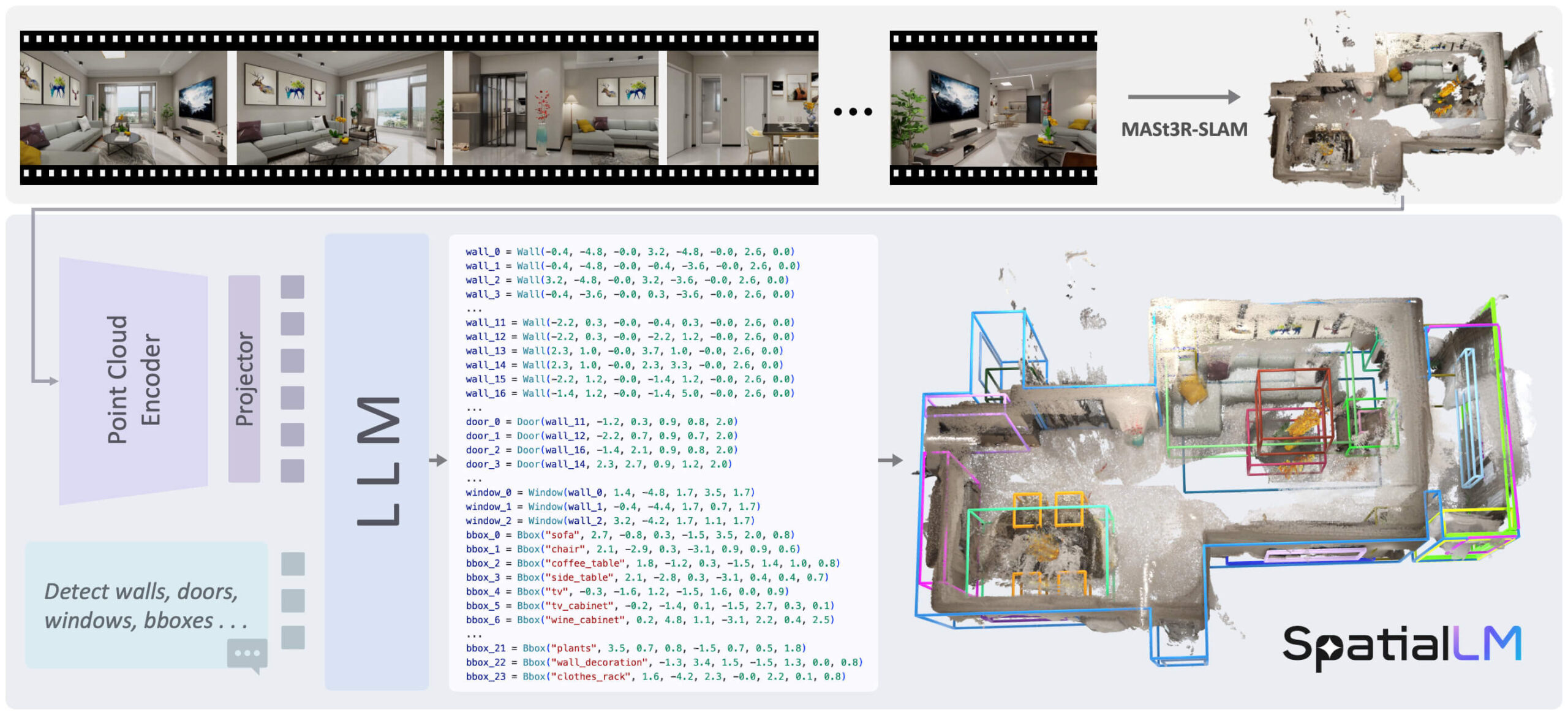

SpatialLM is a large language model designed specifically for processing three-dimensional (3D) point cloud data. Its core function is to understand unstructured 3D geometric data and transform it into structured 3D scene representations. These structured outputs contain architectural elements (e.g., walls, doors, windows) as well as bounding boxes of objects with orientation and their semantic categories. Unlike many approaches that require specific devices to capture data, SpatialLM can process point clouds from multiple data sources, such as monocular video sequences, RGBD images, and laser radar (LiDAR) sensors. The model effectively connects 3D geometric data and structured representations, providing high-level semantic understanding capabilities for embodied robotics, automated navigation, and other applications requiring complex 3D scene analysis.

Function List

- Processing 3D point clouds: Ability to analyze and understand 3D point clouds directly as input.

- Generate structured scenarios: Output structured 3D scene information, including building layouts and object positions.

- Identify architectural elements:: Accurately detect and model basic structures such as walls, doors, windows, etc. in the interior.

- 3D object detection:: Recognize objects such as furniture in the scene and generate bounding boxes with orientation, dimensions and semantic categories (e.g. "bed", "chair").

- Multi-source data compatibility: Support for processing point cloud data generated from different devices (e.g., regular cameras, depth cameras, LIDAR).

- Customized category detection: The user can specify categories of objects of interest and the model will detect and output only those specific categories of objects.

- Multiple model versions:: Multiple versions based on different underlying models such as Llama and Qwen are available, for example

SpatialLM1.1-Llama-1B和SpatialLM1.1-Qwen-0.5B。

Using Help

The process of using SpatialLM model mainly includes environment installation, data preparation and running inference. The following are the detailed steps.

Environmental requirements

Before you begin, make sure your system environment meets the following requirements:

- Python: 3.11

- Pytorch: 2.4.1

- CUDA: 12.4

1. Installation process

First, clone the code repository and go to the project directory, then use Conda to create and activate a standalone Python environment.

# 克隆仓库

git clone https://github.com/manycore-research/SpatialLM.git

# 进入项目目录

cd SpatialLM

# 使用Conda创建名为 "spatiallm" 的环境

conda create -n spatiallm python=3.11

# 激活环境

conda activate spatiallm

# 安装CUDA工具包

conda install -y -c nvidia/label/cuda-12.4.0 cuda-toolkit conda-forge::sparsehash

Next, use thepoetrytool installs the project's dependency libraries.

# 安装poetry

pip install poetry && poetry config virtualenvs.create false --local

# 安装项目主要依赖

poetry install

Depending on the version of SpatialLM you are using, there are additional specific dependency libraries that need to be installed.

SpatialLM version 1.0 depends on.

# 该命令会编译torchsparse,可能需要一些时间

poe install-torchsparse

SpatialLM version 1.1 depends on.

# 该命令会编译flash-attn,可能需要一些时间

poe install-sonata

2. Operational reasoning

Before running the inference, the input data needs to be prepared.SpatialLM requires the input point cloud data to be axis-aligned, i.e., the Z-axis is vertically oriented upwards.

Download sample data:

The project provides a test dataset that you can usehuggingface-clitool to download a sample point cloud file.

huggingface-cli download manycore-research/SpatialLM-Testset pcd/scene0000_00.ply --repo-type dataset --local-dir .

Execute reasoning commands:

Use the following command to process the downloaded point cloud file to generate a structured scene description file.

python inference.py --point_cloud pcd/scene0000_00.ply --output scene0000_00.txt --model_path manycore-research/SpatialLM1.1-Qwen-0.5B

```- `point_cloud`: 指定输入的点云文件路径。

- `output`: 指定输出的结构化文本文件路径。

- `model_path`: 指定使用的模型,可以从Hugging Face选择不同版本。

### 3. 按指定类别检测物体

SpatialLM 1.1版本支持用户指定想要检测的物体类别。例如,如果你只想在场景中检测“床(bed)”和“床头柜(nightstand)”,可以使用以下命令:

```bash

python inference.py --point_cloud pcd/scene0000_00.ply --output scene0000_00.txt --model_path manycore-research/SpatialLM1.1-Qwen-0.5B --detect_type object --category bed nightstand

detect_type object: Set the task type to 3D object detection only.category bed nightstand: Specify that only the categories "bed" and "nightstand" are to be tested.

4. Visualization of results

To visualize the effect of the model output, you can use thereruntool for visualization.

First, convert the model output text file to Rerun format:

python visualize.py --point_cloud pcd/scene0000_00.ply --layout scene0000_00.txt --save scene0000_00.rrd

layout: Specify the reasoning step to generate the.txtLayout files.save: Specify the output of the.rrdVisualize the file path.

Then, use rerun to view the results:

rerun scene0000_00.rrd

This command launches a visualization window showing the original point cloud as well as the walls, doors, windows, and object bounding boxes predicted by the model.

application scenario

- Embodied intelligence and robotics

Robots can utilize SpatialLM to understand the indoor environment in which they are located. For example, by analyzing the point cloud of a room, a robot can identify where doors, obstacles, sofas, and tables are located exactly, allowing for smarter path planning and interaction tasks. - Automatic navigation and map building

In indoor auto-navigation applications, such as service robots in shopping malls or warehouses, SpatialLM can help to quickly build structured 3D maps from sensor data, which can provide richer semantic information than traditional SLAM methods. - Architecture, Engineering and Construction (AEC)

Architects or engineers can use portable scanning equipment to quickly scan a room and then automatically generate a structured model of that room, including walls, door and window locations, etc. with SpatialLM, greatly simplifying the interior mapping and modeling process. - Augmented Reality (AR) & Gaming

AR applications require a precise understanding of the spatial layout of the real world in order to place virtual objects realistically in the environment.SpatialLM provides this precise scene understanding, allowing virtual furniture or game characters to interact naturally with the real scene.

QA

- What is SpatialLM?

SpatialLM is a 3D big language model that understands 3D point cloud data and outputs structured descriptions of interior scenes, including architectural elements such as walls, doors and windows, and objects with annotations. - What types of input data can this model handle?

It can process 3D point cloud data from a variety of sources, including data captured via monocular camera video, RGBD cameras such as Kinect, and laser radar (LiDAR) sensors. - What kind of hardware configuration is required to use SpatialLM?

As it involves the computation of deep learning models, it is recommended to run it on a machine equipped with an NVIDIA GPU and make sure that the corresponding version of the CUDA toolkit (e.g. CUDA 12.4) is installed. - How does SpatialLM differ from other 3D scene understanding methods?

It combines the power of a large language model to directly generate structured, semantically labeled output, not just geometric information. In addition, it supports user-specified categories for detection, providing greater flexibility. - What is the format of the model output file?

The model inference generates a.txtA text file which describes in a structured way the position, size, orientation and category information of each element (wall, door, object, etc.) in the scene.