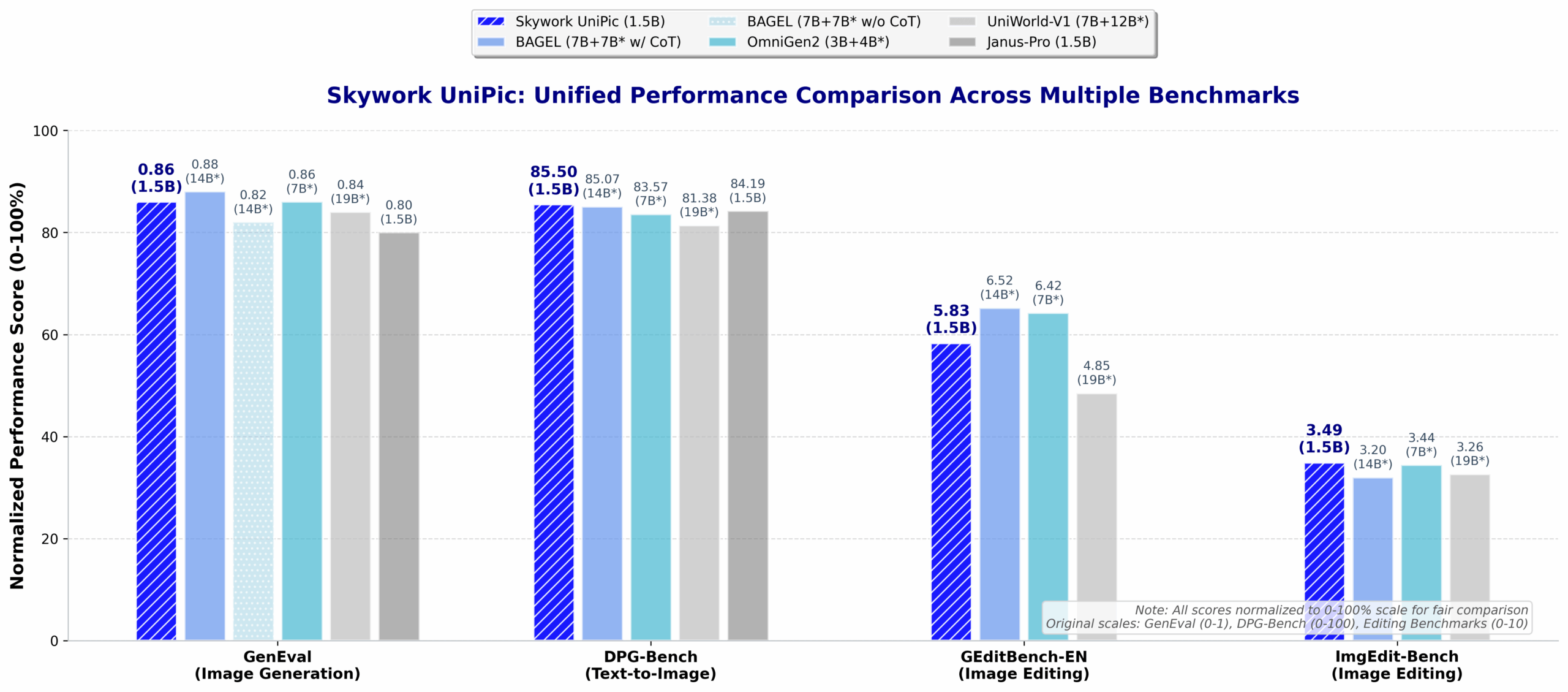

SkyworkUniPic is an open-source multimodal model developed by SkyworkAI for image understanding, text-to-image generation, and image editing. It handles all three vision-language tasks with a single 1.5-billion-parameter architecture. Users can run 1024×1024 pixel image generation and editing on consumer GPUs such as the RTX 4090. UniPic scores 0.86 on GenEval and 85.5 on DPG-Bench, which makes it a practical choice for developers exploring visual AI applications. The project code and model weights are released under the MIT license, which permits free use and modification, with the code on GitHub and the weights on Hugging Face.

Feature List

- Image understanding: Analyzes the content of input images to answer questions about them or extract information.

- Text to image: Generates high-quality 1024×1024 pixel images from text descriptions.

- Image editing: Modifies an image according to text instructions, such as replacing specific elements or adjusting the style.

- Consumer-grade hardware support: Runs on GPUs such as the RTX 4090 without specialized equipment.

- Open-source model weights: Provides pre-trained weights that developers can download and customize directly.

Usage Guide

Installation

Installing and running UniPic requires a basic Python environment and a GPU. The detailed installation steps are as follows:

- Clone the repository:

Open a terminal and run the following commands to clone the UniPic repository:

    git clone https://github.com/SkyworkAI/UniPic
    cd UniPic

- Create a virtual environment:

Create a Python 3.10.14 environment with conda to keep dependencies isolated:

    conda create -n unipic python=3.10.14
    conda activate unipic

- Install dependencies:

Install the Python libraries the project needs:

    pip install -r requirements.txt

- Download model weights:

UniPic provides pre-trained model weights, which must be downloaded from Hugging Face. Run the following commands:

    pip install -U "huggingface_hub[cli]"
    huggingface-cli download Skywork/Skywork-UniPic-1.5B --local-dir checkpoint --repo-type model

- Set environment variables:

Add the project path to PYTHONPATH so the scripts can run:

    export PYTHONPATH=./:$PYTHONPATH
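Before running any script, it can help to confirm that the steps above left the working directory in the expected state. The following is a minimal sketch, not part of the UniPic repository: `preflight` and `EXPECTED_FILES` are hypothetical names, the file paths are taken from the commands in this guide, and treating any interpreter other than Python 3.10 as a problem is an assumption based on the conda step.

```python
import sys
from pathlib import Path

# Paths taken from the commands in this guide; adjust them if your layout differs.
EXPECTED_FILES = [
    "requirements.txt",
    "scripts/text2image.py",
    "scripts/image_edit.py",
    "configs/models/qwen2_5_1_5b_kl16_mar_h.py",
    "checkpoint/pytorch_model.bin",
]


def preflight(repo_root=".", version=None):
    """Return a list of problems found; an empty list means every check passed."""
    problems = []

    # Assumption: the guide pins Python 3.10.14, so any other minor version is flagged.
    major, minor = (version or sys.version_info)[:2]
    if (major, minor) != (3, 10):
        problems.append(f"Python 3.10 expected, found {major}.{minor}")

    # Check that the repository files and the downloaded checkpoint are present.
    root = Path(repo_root)
    for rel in EXPECTED_FILES:
        if not (root / rel).is_file():
            problems.append(f"missing file: {rel}")
    return problems
```

Calling `preflight(".")` from the cloned UniPic directory and printing each returned string gives a quick summary of what still needs to be set up.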

Feature Workflows

UniPic supports three main functions: image understanding, text-to-image generation, and image editing. Each is described in detail below:

1. Text to image

You can generate a 1024×1024 pixel image from a text description. For example, to generate an image of a golden retriever standing on the grass in a park:

- Procedure:

Run the following command, specifying the model config file, the checkpoint path, and the text prompt:

    python scripts/text2image.py configs/models/qwen2_5_1_5b_kl16_mar_h.py \
        --checkpoint checkpoint/pytorch_model.bin \
        --image_size 1024 \
        --prompt "A glossy-coated golden retriever stands on the park lawn beside a life-sized penguin statue." \
        --output output.jpg

- Notes:

- Image generation currently supports only 1024×1024 pixels.

- Text prompts should be clear and specific for better results.

- The output image is saved to the file given by --output, here output.jpg.
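To generate several images in a row, the command above can be wrapped in a small Python helper. This is a sketch under stated assumptions: `build_text2image_command` and `generate_all` are hypothetical helper names, not part of the repository, and the only flags used are the ones shown in the command above. It assumes it is run from the repository root with the environment already activated.

```python
import subprocess
import sys

# Config and checkpoint paths as used in the command shown in this guide.
CONFIG = "configs/models/qwen2_5_1_5b_kl16_mar_h.py"
CHECKPOINT = "checkpoint/pytorch_model.bin"


def build_text2image_command(prompt, output, image_size=1024):
    """Build the argument list for scripts/text2image.py."""
    if image_size != 1024:
        raise ValueError("only 1024x1024 generation is currently supported")
    if not prompt.strip():
        raise ValueError("prompt must not be empty")
    return [
        sys.executable, "scripts/text2image.py", CONFIG,
        "--checkpoint", CHECKPOINT,
        "--image_size", str(image_size),
        "--prompt", prompt,
        "--output", output,
    ]


def generate_all(prompts, prefix="output"):
    """Run the script once per prompt, writing <prefix>_0.jpg, <prefix>_1.jpg, ..."""
    outputs = []
    for i, prompt in enumerate(prompts):
        out = f"{prefix}_{i}.jpg"
        # check=True raises CalledProcessError if the script exits with an error.
        subprocess.run(build_text2image_command(prompt, out), check=True)
        outputs.append(out)
    return outputs
```

Passing the arguments as a list avoids shell quoting problems with prompts that contain quotes or punctuation. Note that each call reloads the model, so this is convenient rather than fast.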

2. Image editing

UniPic lets you modify an existing image with text instructions. For example, to replace the stars in an image with candles:

- Procedure:

Prepare an input image (e.g. data/sample.png), then run the following command:

    python scripts/image_edit.py configs/models/qwen2_5_1_5b_kl16_mar_h.py \
        --checkpoint checkpoint/pytorch_model.bin \
        --image_size 1024 \
        --image data/sample.png \
        --prompt "Replace the stars with the candle." \
        --output output.jpg

- Notes:

- The input image should be 1024×1024 pixels.

- Text instructions should clearly describe the modification, such as replacing, adding, or removing elements.

- The edited image is saved as output.jpg.
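Because the input image should be 1024×1024 pixels, it can be worth checking its size before starting an edit. The sketch below reads the width and height directly from a PNG file header using only the standard library. `png_size` and `check_edit_input` are hypothetical helper names, and the check covers PNG files only; other formats such as JPEG would need a different reader or an image library.

```python
import struct

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def png_size(path):
    """Return (width, height) read from the IHDR chunk of a PNG file."""
    with open(path, "rb") as f:
        header = f.read(24)
    # Layout: 8-byte signature, 4-byte chunk length, 4-byte chunk type "IHDR",
    # then width and height as big-endian unsigned 32-bit integers.
    if len(header) < 24 or header[:8] != PNG_SIGNATURE or header[12:16] != b"IHDR":
        raise ValueError(f"{path} is not a valid PNG file")
    width, height = struct.unpack(">II", header[16:24])
    return width, height


def check_edit_input(path, expected=1024):
    """Return True if the PNG at `path` is expected x expected pixels."""
    return png_size(path) == (expected, expected)
```

For example, `check_edit_input("data/sample.png")` returns `False` for an image that needs resizing or cropping before it is passed to the editing script.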

3. Image understanding

UniPic can analyze image content and answer questions about it. The repository does not currently include a standalone image understanding script, but developers can build their own implementation on top of the model weights and the Qwen2.5 framework.

- Suggested approach:

- Use Hugging Face's Transformers library to load the model.

- Prepare the image and question and call the model's inference interface to get the answer.

- Refer to SkyworkAI's documentation or community examples to implement specific features.

Other Useful Tips

- Hardware requirements: An NVIDIA RTX 4090 or higher-end GPU with at least 24GB of video memory is recommended.

- Troubleshooting: If you encounter a dependency conflict, check the Python version and CUDA compatibility.

- Community support: Visit the Issues page of the project's GitHub repository to browse frequently asked questions or submit a new issue.

- Model fine-tuning: Developers can fine-tune the model weights to fit specific tasks or datasets.
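The hardware recommendation can be checked programmatically. The sketch below assumes PyTorch is installed, since it uses `torch.cuda.get_device_properties`. `meets_vram_recommendation` and `report_gpu` are hypothetical helper names, and the one-GiB tolerance is an assumption added because a card sold as 24GB may report slightly less total memory than 24 GiB.

```python
GIB = 1024 ** 3


def meets_vram_recommendation(total_bytes, recommended_gib=24, tolerance_gib=1.0):
    """Return True if total_bytes is within tolerance of the recommended VRAM."""
    return total_bytes >= (recommended_gib - tolerance_gib) * GIB


def report_gpu():
    """Describe the first CUDA device and whether it meets the recommendation."""
    try:
        import torch  # imported lazily so the pure check above works without it
    except ImportError:
        return "PyTorch is not installed"
    if not torch.cuda.is_available():
        return "no CUDA device visible"
    props = torch.cuda.get_device_properties(0)
    status = "ok" if meets_vram_recommendation(props.total_memory) else "below recommendation"
    return f"{props.name}: {props.total_memory / GIB:.1f} GiB ({status})"
```

Printing the result of `report_gpu()` inside the activated environment shows the detected card and its memory. A "no CUDA device visible" result usually points to the driver or CUDA compatibility issue mentioned in the troubleshooting tip.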

Application Scenarios

- Content creation

UniPic helps bloggers, designers, and other creators generate high-quality images. For example, it can generate promotional images that match a brand's style or create illustrations based on article content.

- Education and research

Researchers can use UniPic to explore the capabilities of multimodal AI. Students can learn image processing and generation techniques from the open-source code.

- E-commerce and advertising

Merchants can use UniPic to edit product images, such as changing the background or adding promotional elements to enhance visual appeal.

- Game development

Developers can generate game scenes or character concept art to iterate quickly on design ideas.

FAQ

- What image resolutions does UniPic support?

Currently, only 1024×1024 pixels are supported for image generation and editing.

- Do I need a specialized GPU to run UniPic?

No. Consumer GPUs (e.g. the RTX 4090) can run it, and 24GB or more of video memory is recommended.

- How do I get the model weights?

Download them from Hugging Face by running the command huggingface-cli download Skywork/Skywork-UniPic-1.5B.

- Can UniPic be used commercially?

Yes. UniPic is released under the MIT license, which permits commercial use, modification, and distribution.

- What is the quality of image generation?

UniPic scores 0.86 on GenEval and 85.5 on DPG-Bench, outperforming some larger models on these benchmarks.