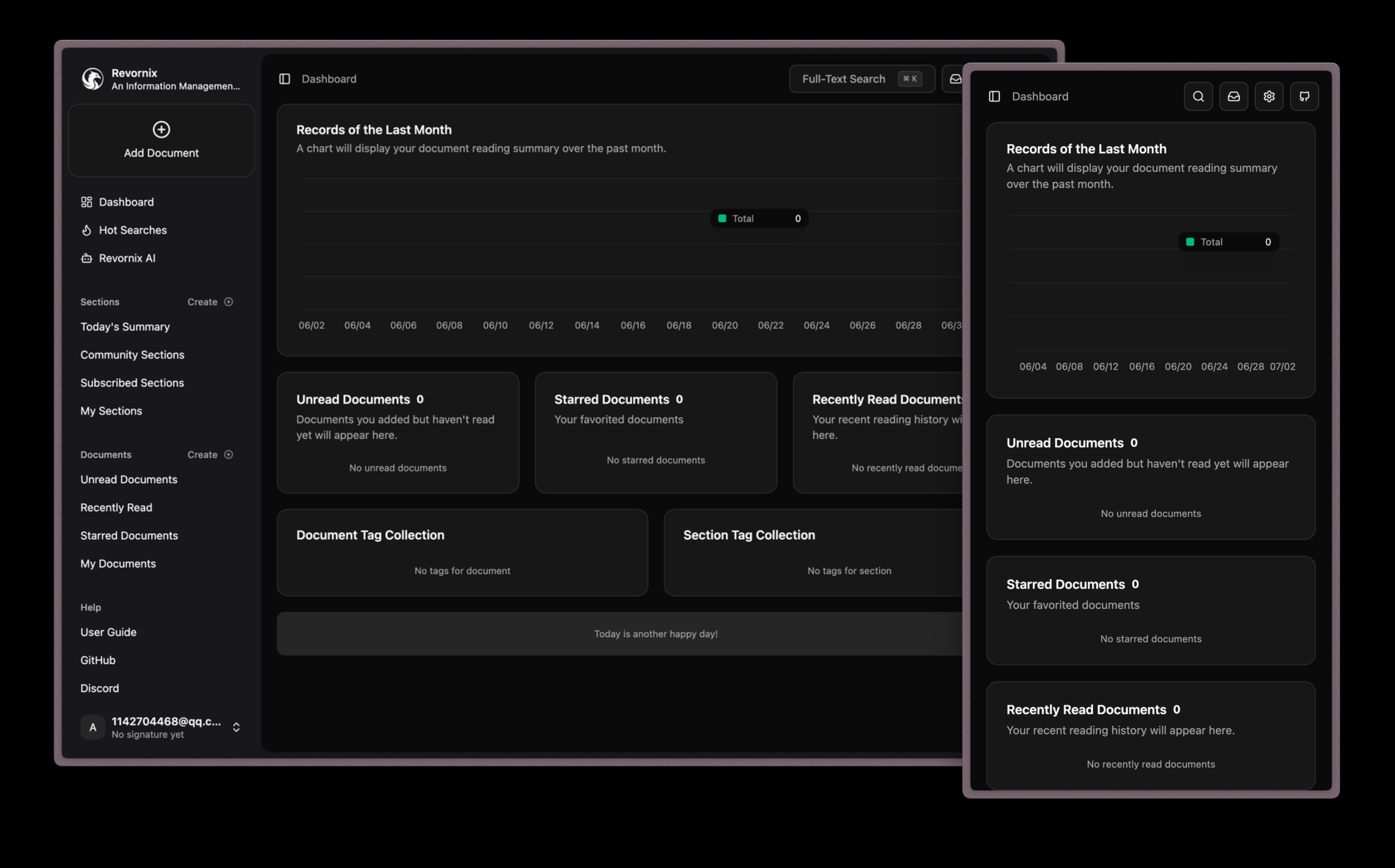

Revornix is an information management tool for the AI era. It lets users consolidate information and documents from different sources, then interact with and analyze them through built-in AI assistants. At the heart of the tool is its Multimodal Collaborative Processing (MCP) client, which supports automatic daily summarization of collected documents and information into structured reports. Revornix is an open source project that users can deploy on their own servers to keep their data private. It supports multi-tenant use, meaning that multiple users can share one system at the same time while each keeps an independent document database. The tool also lets users connect a variety of large language models (they must be compatible with the OpenAI API), so that users can choose the model best suited to processing and interacting with their own documents.

Feature List

- Cross-platform content aggregation: Supports centralized collection of content from a variety of sources such as news sites, blogs, and forums. A Web version is currently available, with support for iOS and WeChat Mini Programs planned.

- Document Processing and Vectorization: Using multimodal large model technology, uploaded or collected documents (e.g., PDF, Word) are converted into a uniform Markdown format, vectorized, and stored in the Milvus vector database to support later semantic retrieval and content analysis.

- Built-in intelligent assistant (MCP): Provides an AI assistant powered by built-in Multimodal Co-Processing (MCP) technology that allows users to talk to and interact directly with their own document libraries, with support for switching between different language models.

- Multi-tenant architecture: The system natively supports multiple users, with each user having a separate document space and database, ensuring data isolation and privacy.

- Localization and Open Source: The project code is completely open source and all data is stored locally by the user, avoiding the risk of data leakage.

- Highly customizable: Users can freely configure and swap the back-end large language model (LLM); any model that is compatible with OpenAI's API can be connected.

- Customized message notification: Users can set up rules to allow the system to send customized message notifications via email, phone, etc.

- Responsive and multilingual interface: The interface works well on both desktop and mobile devices, and is available in Chinese and English.
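
To make "compatible with OpenAI's API" in the customization item above more concrete, here is a minimal sketch of a request in the OpenAI chat-completions shape, which can be used to check a provider before configuring it. The function names `chat_payload` and `chat_completion` are hypothetical helpers, not part of Revornix, and the base URL, API key, and model name are placeholders you would supply yourself.

```shell
# Build a minimal chat-completions request body.
# Assumption: MODEL and PROMPT contain no characters that need JSON escaping.
chat_payload() {
  printf '{"model":"%s","messages":[{"role":"user","content":"%s"}]}' "$1" "$2"
}

# POST the body to BASE_URL/chat/completions with a bearer token.
# BASE_URL is expected to include the version prefix, e.g. https://api.openai.com/v1
chat_completion() {
  local base_url="$1" api_key="$2" model="$3" prompt="$4"
  curl -fsS "${base_url%/}/chat/completions" \
    -H "Content-Type: application/json" \
    -H "Authorization: Bearer ${api_key}" \
    -d "$(chat_payload "$model" "$prompt")"
}

# Example call (placeholders, not real values):
#   chat_completion "https://api.openai.com/v1" "$API_KEY" "your-model-name" "Say hello"
```

If a third-party or self-hosted service answers this request with a JSON response, it is likely to work with tools that expect the OpenAI interface, although individual providers may differ in the parameters they support.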

Usage Guide

Revornix recommends using Docker for deployment, which simplifies environment configuration and allows users to get programs up and running quickly.

Getting started quickly with Docker (recommended)

- Clone Code Repository

First, clone the Revornix code from GitHub to your local computer or server. Open a terminal (command line tool) and execute the following command:

    git clone git@github.com:Qingyon-AI/Revornix.git

Then, go to the project directory:

    cd Revornix

- Configuring Environment Variables

In the project root directory, there is a folder named `envs`, which contains templates for the environment variable configuration files required by each service. You need to copy each template file (the ones ending in `.example`) to a working configuration file.

Execute the following commands:

    cp ./envs/.api.env.example ./envs/.api.env
    cp ./envs/.file.env.example ./envs/.file.env
    cp ./envs/.celery.env.example ./envs/.celery.env
    cp ./envs/.hot.env.example ./envs/.hot.env
    cp ./envs/.mcp.env.example ./envs/.mcp.env
    cp ./envs/.web.env.example ./web/.env

In most cases, you only need to configure one key parameter. Use a text editor (e.g. `vim` or `nano`) to open one of the configuration files, for example:

    nano ./envs/.api.env

Find the `SECRET_KEY` parameter. You need to set it to a complex, random string, which is used as the key for user authentication and encryption.
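
One way to produce such a string and apply it without hand-editing each file is sketched below. It assumes the env files use plain `SECRET_KEY=value` lines, which should be checked against the actual templates; `generate_key` and `set_secret_key` are hypothetical helper names, not part of Revornix.

```shell
# Print 64 hex characters (32 random bytes) read from /dev/urandom.
generate_key() {
  head -c 32 /dev/urandom | od -An -tx1 | tr -d ' \n'
}

# set_secret_key KEY FILE...
# Rewrite the SECRET_KEY line in every listed file that already has one.
# Assumption: lines look like SECRET_KEY=value. KEY must not contain "|",
# which is used as the sed delimiter (a hex key never does).
set_secret_key() {
  local key="$1"
  shift
  local file
  for file in "$@"; do
    if grep -q '^SECRET_KEY=' "$file"; then
      # Portable in-place edit: write a temp file, then replace the original.
      sed "s|^SECRET_KEY=.*|SECRET_KEY=${key}|" "$file" > "${file}.tmp"
      mv "${file}.tmp" "$file"
    fi
  done
}

# Example, run from the project root:
#   key="$(generate_key)"
#   set_secret_key "$key" ./envs/.api.env ./envs/.file.env ./envs/.celery.env \
#     ./envs/.hot.env ./envs/.mcp.env
```

Writing one generated value to every file in a single pass keeps the copies identical. Files without a `SECRET_KEY` line are left untouched.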

Important note: the `SECRET_KEY` value must be exactly the same in the configuration files of all services; otherwise the services cannot validate each other's user authentication, and logins will fail. Other parameters can keep their default values for now unless you have a specific need.

- Starting services

After completing the environment variable configuration, you can use Docker Compose to pull all the necessary images and start all the services with a single command. Execute it in the project root directory:

    docker compose up -d

The `-d` parameter makes the services run in the background.

The startup process takes some time, as it has to download multiple Docker images and start multiple containers (backend, database, AI model services, and so on) in sequence. It usually takes 3 to 5 minutes.

- Accessing the front-end page

Once all the services have started successfully, open http://localhost in your browser to view and use Revornix's front-end interface.

Note: Because the back-end service takes a long time to start, requests may fail or data may not load when you first open the front-end page. This is normal. You can check the logs of the core backend service (`api`) to confirm that it is ready with the following command:

    docker compose logs api

When you see a log entry indicating that the service has started successfully, refresh the front-end page and it will work.
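
Instead of refreshing by hand, the wait can be scripted with a small retry loop. This is a sketch only: `retry` is a hypothetical helper, and the example polls the front-end URL mentioned above. A response from the front end does not prove that the `api` service is ready, so the logs remain the authoritative check.

```shell
# retry ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
# Run COMMAND until it succeeds or ATTEMPTS runs have failed.
# Returns 0 on the first success, 1 if every attempt fails.
retry() {
  local attempts="$1" delay="$2"
  shift 2
  local i=1
  while [ "$i" -le "$attempts" ]; do
    if "$@"; then
      return 0
    fi
    i=$((i + 1))
    # Sleep only when another attempt will follow.
    if [ "$i" -le "$attempts" ]; then
      sleep "$delay"
    fi
  done
  return 1
}

# Example: poll the front end every 10 seconds, up to 30 times (about 5 minutes).
#   retry 30 10 curl -fsS -o /dev/null http://localhost && echo "front end is responding"
```

The attempt count and delay are chosen to roughly cover the 3 to 5 minute startup window described earlier; adjust them for slower machines or networks.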

Manual deployment method

For developers who wish to customize more deeply or understand the system architecture, the project also supports manual deployment. The detailed manual deployment process can be found in the official documentation.

Application Scenarios

- Personal knowledge management

Users can bring web articles, blog posts, eBooks, and local PDF documents into Revornix. The system automatically converts these documents into a uniform format and vectorizes them. Users can quickly find the information they need by talking to the built-in AI assistant, or have the assistant summarize specific topics and generate reports based on the content of the entire knowledge base.

- Team information sharing and collaboration

In a team, different members can store collected industry information, competitor analyses, technical documents, and similar material in Revornix. Because the system supports multi-tenancy, each member can have their own space or set up a shared space. Managers can use the daily summary function to quickly see what team members are following, while team members can ask the AI assistant questions to get answers from the team's knowledge base, which improves collaboration efficiency.

- Vertical Information Monitoring

Users who need to follow developments in a specific field (e.g. finance, technology, healthcare) over the long term can use Revornix to aggregate information from different news sources and forums. By setting up keywords and notification rules, they can have the system alert them automatically via email or other means when important information appears, and generate a daily summary of hot topics, which saves time spent filtering and reading.

FAQ

- How is Revornix different from other knowledge management software?

Revornix's core differentiator is its built-in MCP (Multimodal Collaborative Processing) client and deep integration with large language models. It doesn't just store documents; it emphasizes "interacting" with their content. You can ask your knowledge base questions and have it summarize or analyze material, as if you were talking to a person. In addition, being open source and locally deployable makes it a better fit for privacy-conscious users.

- Do I need to have my own AI model to use Revornix?

You do not need to host a model yourself, but you do need access to one. Revornix itself does not provide AI models; instead, it offers an open model access framework. Users can connect any language model service that is compatible with the OpenAI API, whether that is a model officially provided by OpenAI or another third-party or self-deployed open source model (e.g., Llama, Mistral).

- What is MCP?

MCP stands for "Multi-modal Co-pilot", also rendered as Multi-modal Co-processing or Multi-modal Co-driving. In Revornix, it refers to an intelligent assistant that can understand and process multiple types of data (e.g., text, with possible future support for images) and interact with the user. It can invoke different tools and models to carry out the user's commands, such as summarizing documents and answering questions.

- Are there high server requirements for deploying Revornix?

A basic deployment is not very demanding, but processing a large number of documents or running more powerful local AI models places higher requirements on the server's CPU, memory, and graphics card (especially for AI computation). The official recommendation is to deploy with Docker, which simplifies environment dependencies, but the server itself still needs enough baseline performance to run Docker.