Understanding customer behavior at scale is a core challenge for modern financial institutions. When millions of users generate billions of transactions, the ability to interpret that data directly determines the success or failure of product recommendations, fraud detection, risk assessment, and user experience.

In the past, the financial industry has relied on traditional machine learning methods based on tabular data. Under this approach, engineers manually convert raw transaction data into structured "features" such as income level, spending categories, or number of transactions, and then feed these features into predictive models. While this approach works, it has two serious drawbacks. First, manually constructing features is time-consuming and fragile, and depends heavily on the experience of domain experts. Second, the features generalize poorly: features designed for credit risk are hard to reuse for fraud detection, which leads to duplicated effort across teams.

To overcome these limitations, Nubank turned to a technique that is transforming natural language processing and computer vision: the foundation model. Instead of relying on hand-crafted features, foundation models use self-supervised learning to learn general-purpose "embeddings" directly from massive amounts of raw transaction data. These embeddings capture deep patterns of user behavior in a compact and expressive form.

Nubank's goal is to process trillions of transactions and extract a general-purpose user representation that can power a variety of downstream tasks such as credit modeling, personalized recommendations, and anomaly detection. In this way, the company hopes to unify modeling standards, reduce repetitive feature engineering, and improve predictive performance across the board.

In this article, we take an in-depth look at the technology Nubank uses to build and deploy these foundation models, tracing the complete lifecycle from data representation and model architecture to pre-training, fine-tuning, and integration with traditional tabular systems.

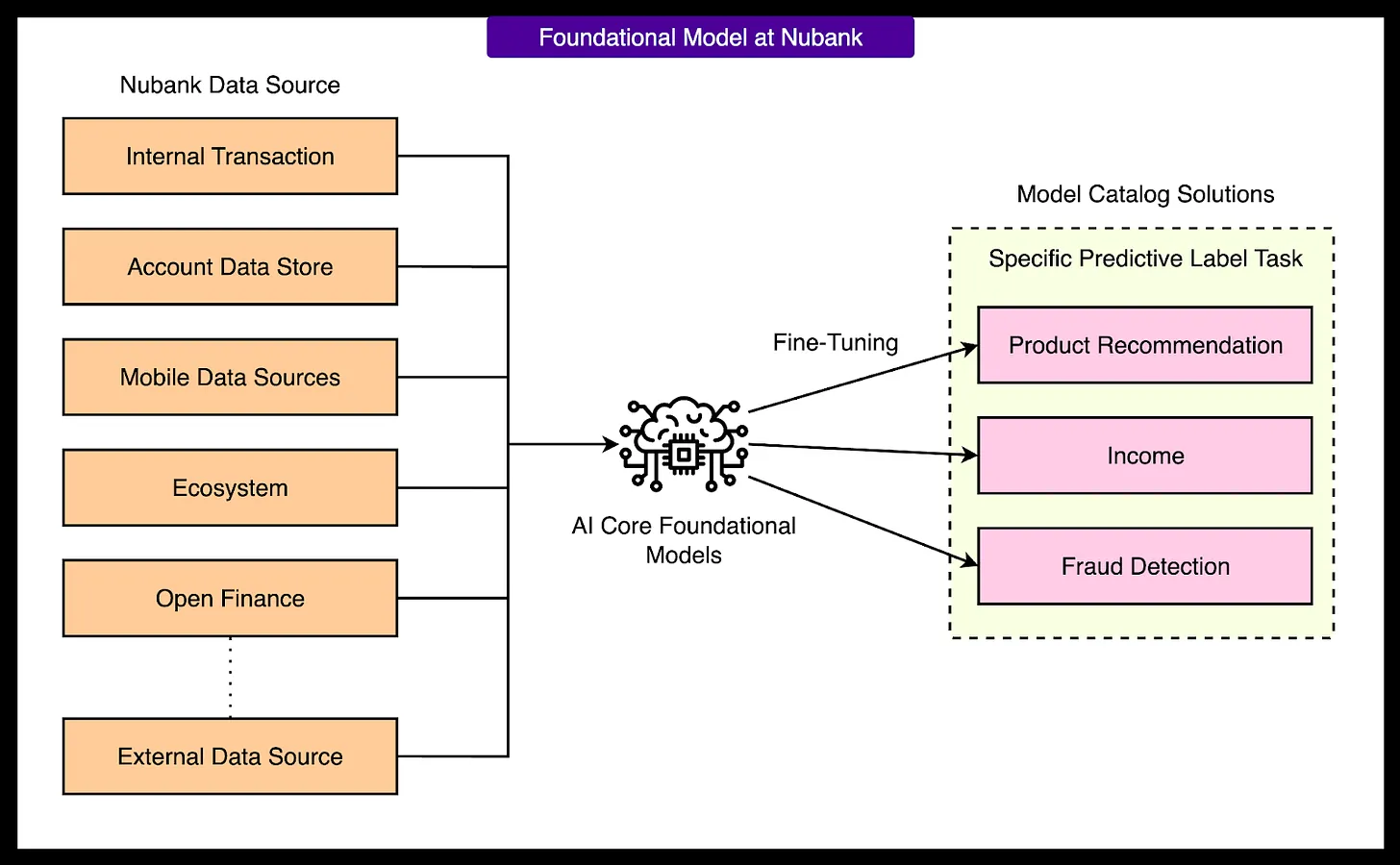

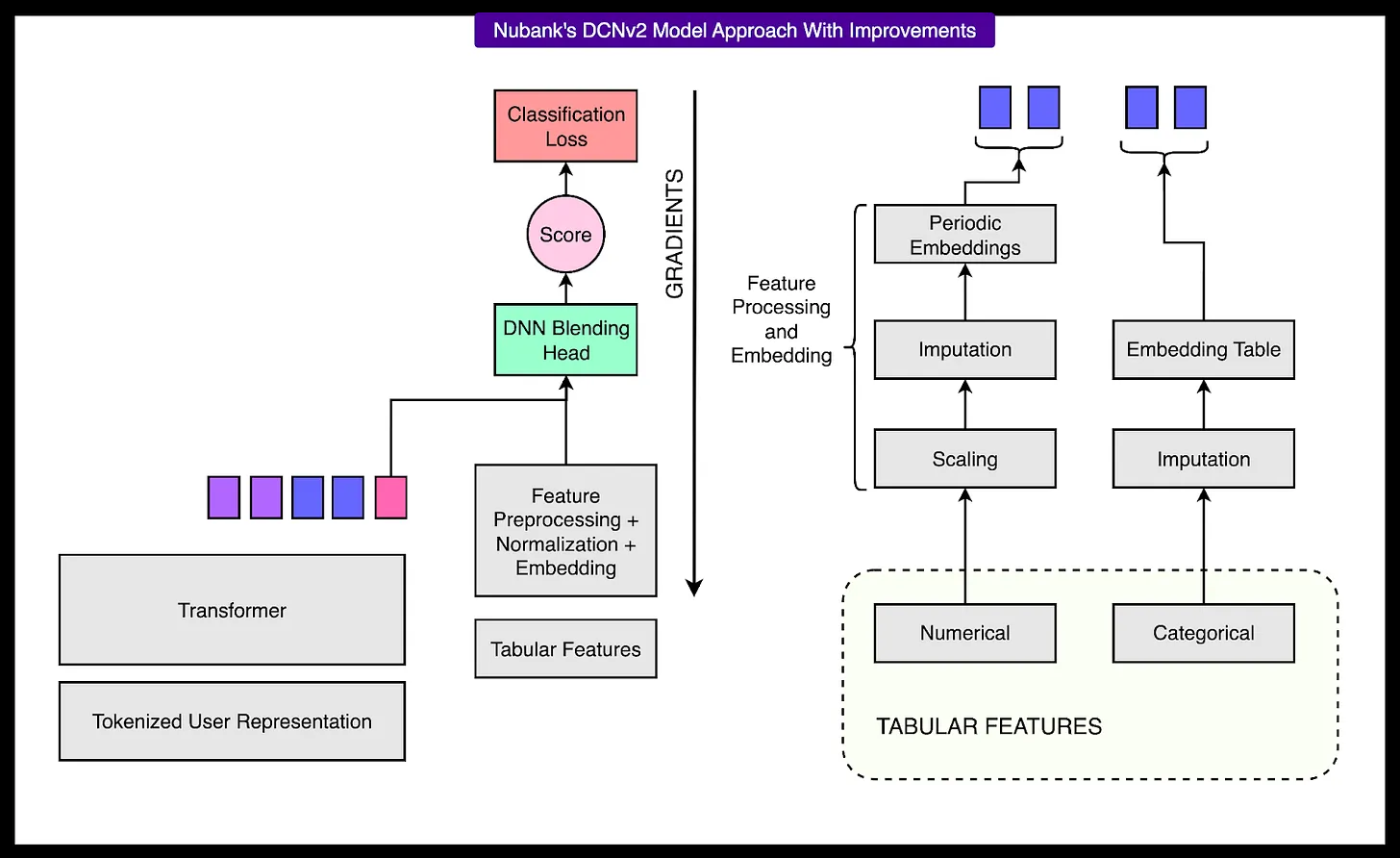

Nubank's overall system architecture

Nubank's foundation model system is designed to extract general-purpose user representations from massive volumes of financial data. These representations, called "embeddings", are then used widely in business scenarios such as credit scoring, product recommendation, and fraud detection. The whole architecture is centered on a Transformer model and consists of several key stages.

1 - Transaction data ingestion

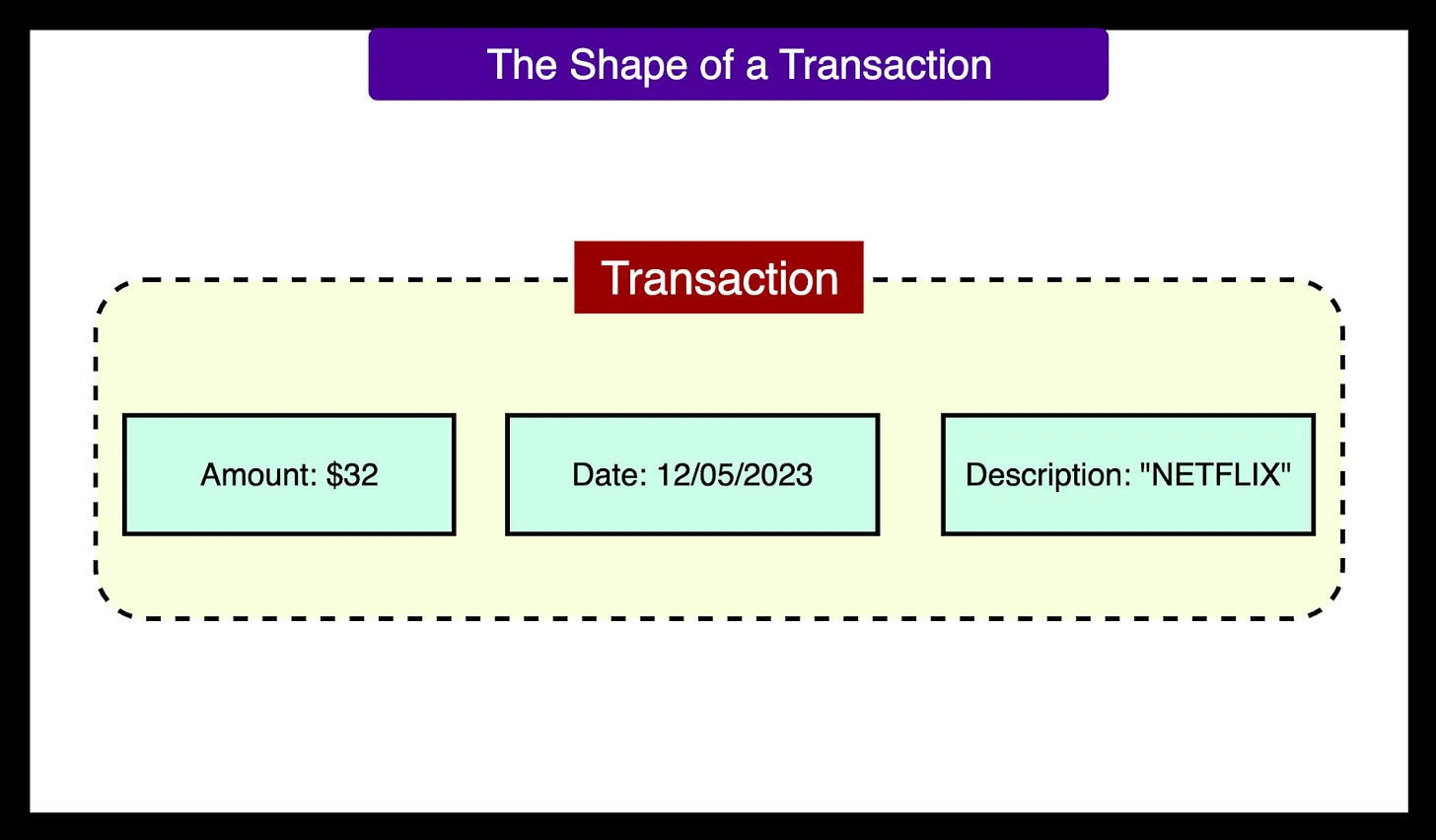

The starting point for the system is the collection of raw transaction data for each customer, including information such as transaction amounts, timestamps, and merchant descriptions. The amount of data is enormous, covering trillions of transactions from over 100 million users. Each user has a chronological sequence of transactions, which is critical for the model to understand how the user's spending behavior evolves.

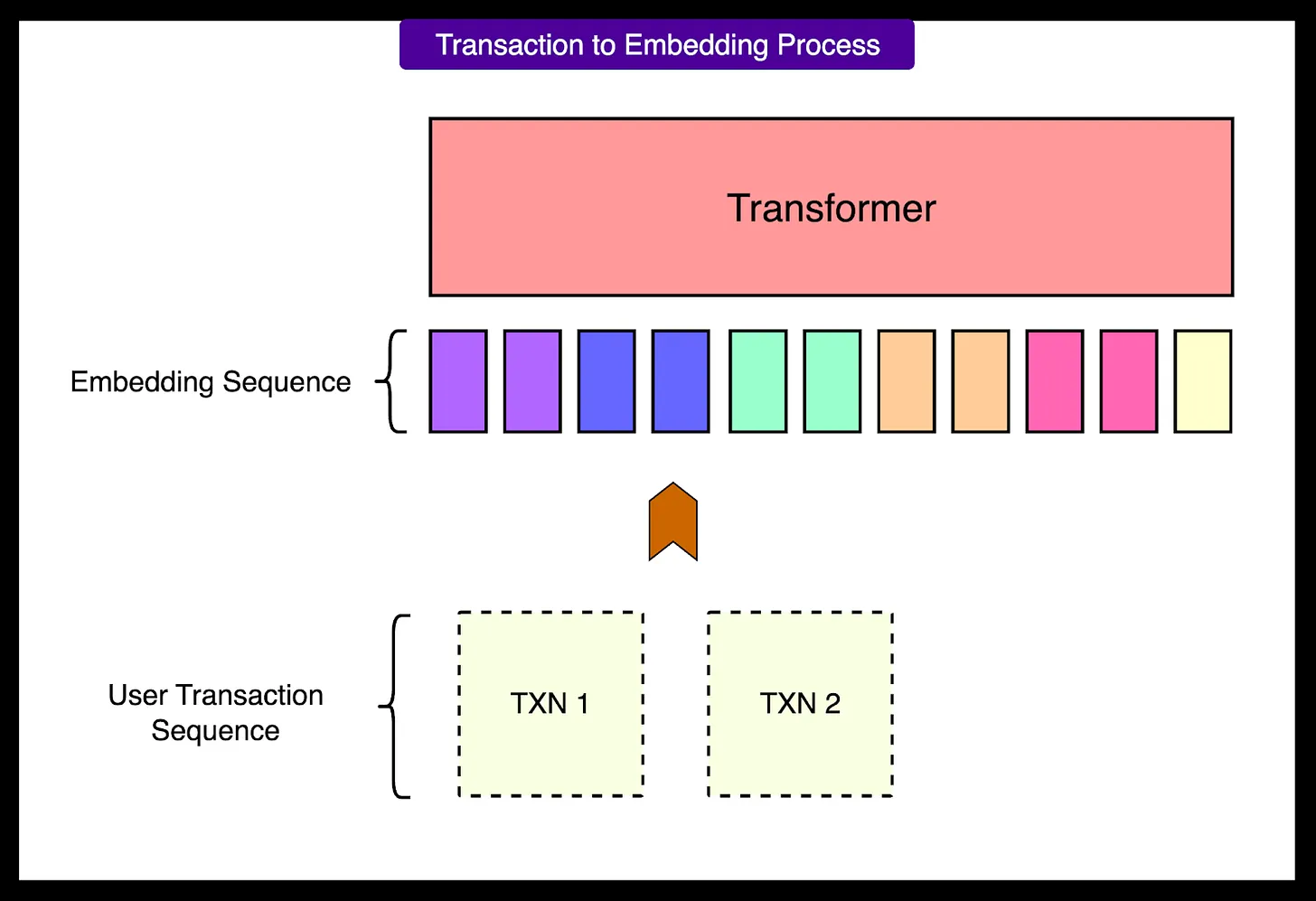

2 - Embedding Interface

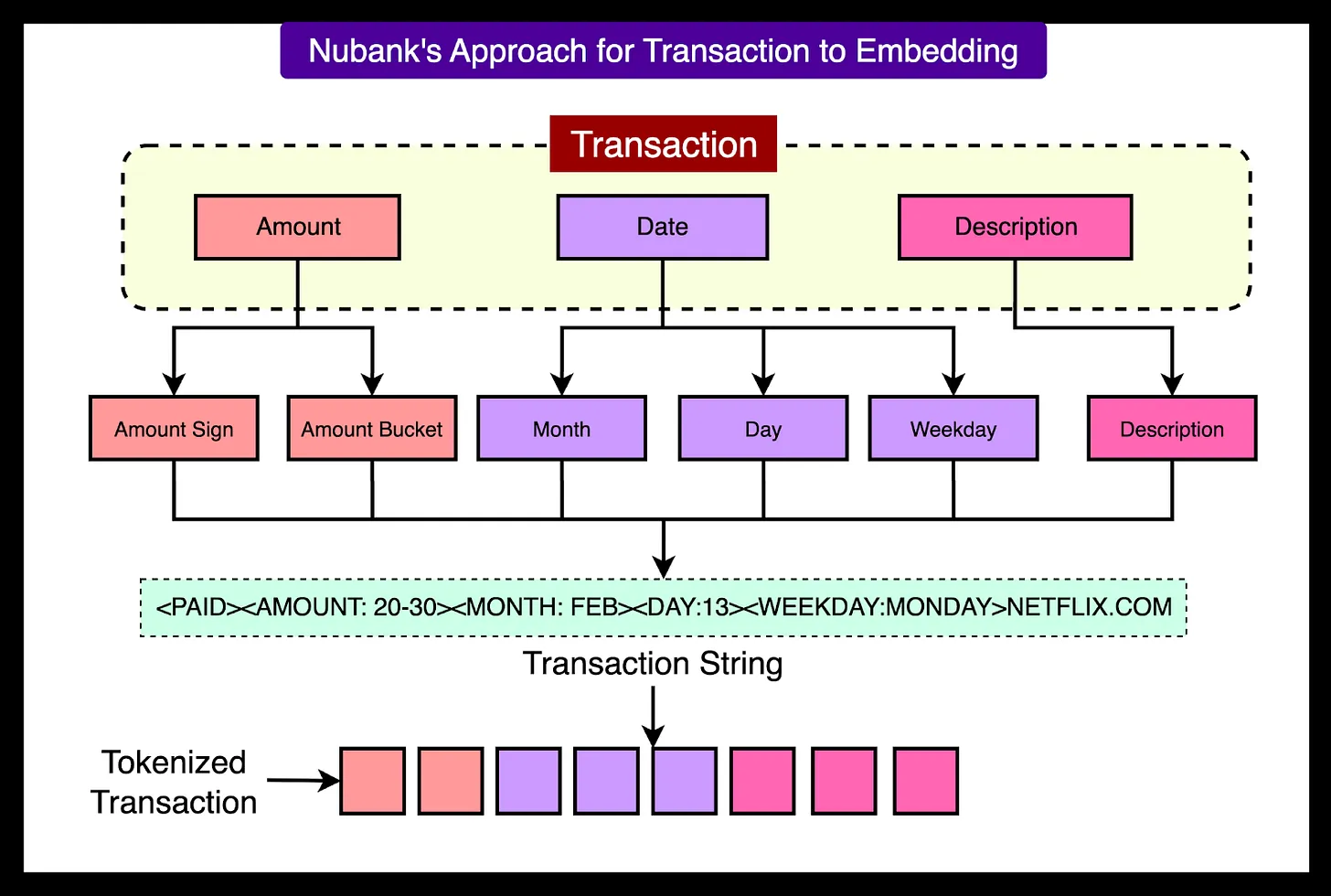

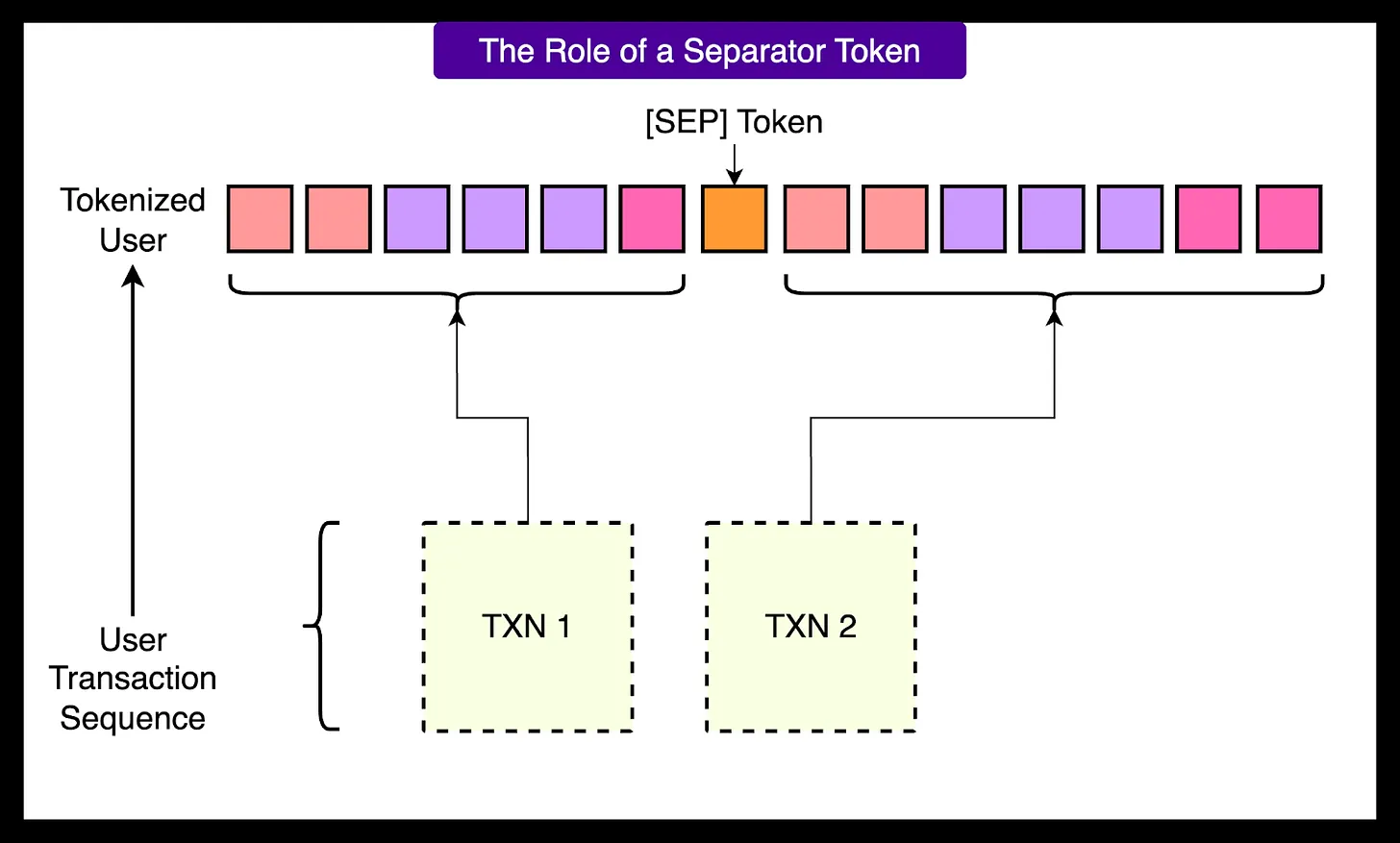

The raw transaction data needs to be converted into a format that the Transformer model understands. Nubank uses a hybrid encoding strategy in which each transaction is treated as a structured sequence of tokens.

Each transaction is broken down into several key elements:

- Amount sign: A categorical token indicates whether a transaction is positive (e.g., a deposit) or negative (e.g., a purchase).

- Amount bin: Transaction amounts are quantized into pre-defined "bins" to reduce the variance of the values.

- Date tokens: Date information such as the month, the day of the week, and the day of the month is also converted into separate tokens.

- Merchant description: A standard text tokenizer (e.g., Byte Pair Encoding) splits the merchant name into multiple subword tokens.

This tokenized sequence preserves the structure and semantics of the original data while keeping the input sequence compact. Compactness matters because the computational cost of the attention mechanism in a Transformer grows with the square of the input length.
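The four elements above can be sketched in a few lines of Python. This is a minimal illustration rather than Nubank's implementation: the bin edges, the token names, and the fixed-size `split_merchant` chunker (a stand-in for a real subword tokenizer such as BPE) are all assumptions made for the example.

```python
from datetime import date

# Hypothetical bin edges for absolute amounts; real bins would be derived from data.
AMOUNT_BIN_EDGES = [1, 10, 50, 100, 500, 1000]


def amount_bin(amount: float) -> int:
    """Return the index of the first bin edge the absolute amount falls below."""
    value = abs(amount)
    for index, edge in enumerate(AMOUNT_BIN_EDGES):
        if value < edge:
            return index
    return len(AMOUNT_BIN_EDGES)  # overflow bin for very large amounts


def split_merchant(description: str, piece_len: int = 4) -> list[str]:
    """Stand-in for a subword tokenizer such as BPE: fixed-size character chunks."""
    pieces = []
    for word in description.upper().split():
        pieces.extend(word[i:i + piece_len] for i in range(0, len(word), piece_len))
    return pieces


def tokenize_transaction(amount: float, when: date, description: str) -> list[str]:
    """Encode one transaction as sign, amount-bin, date, and merchant tokens."""
    sign = "<SIGN_POS>" if amount >= 0 else "<SIGN_NEG>"
    return [
        sign,
        f"<AMT_BIN_{amount_bin(amount)}>",
        f"<MONTH_{when.month}>",
        f"<WEEKDAY_{when.weekday()}>",  # Monday is 0
        f"<DAY_{when.day}>",
        *split_merchant(description),
    ]


tokens = tokenize_transaction(-32.40, date(2023, 5, 12), "NETFLIX")
print(tokens)
```

In this sketch the structured fields always cost five tokens, so the sequence length is driven mainly by the merchant description.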

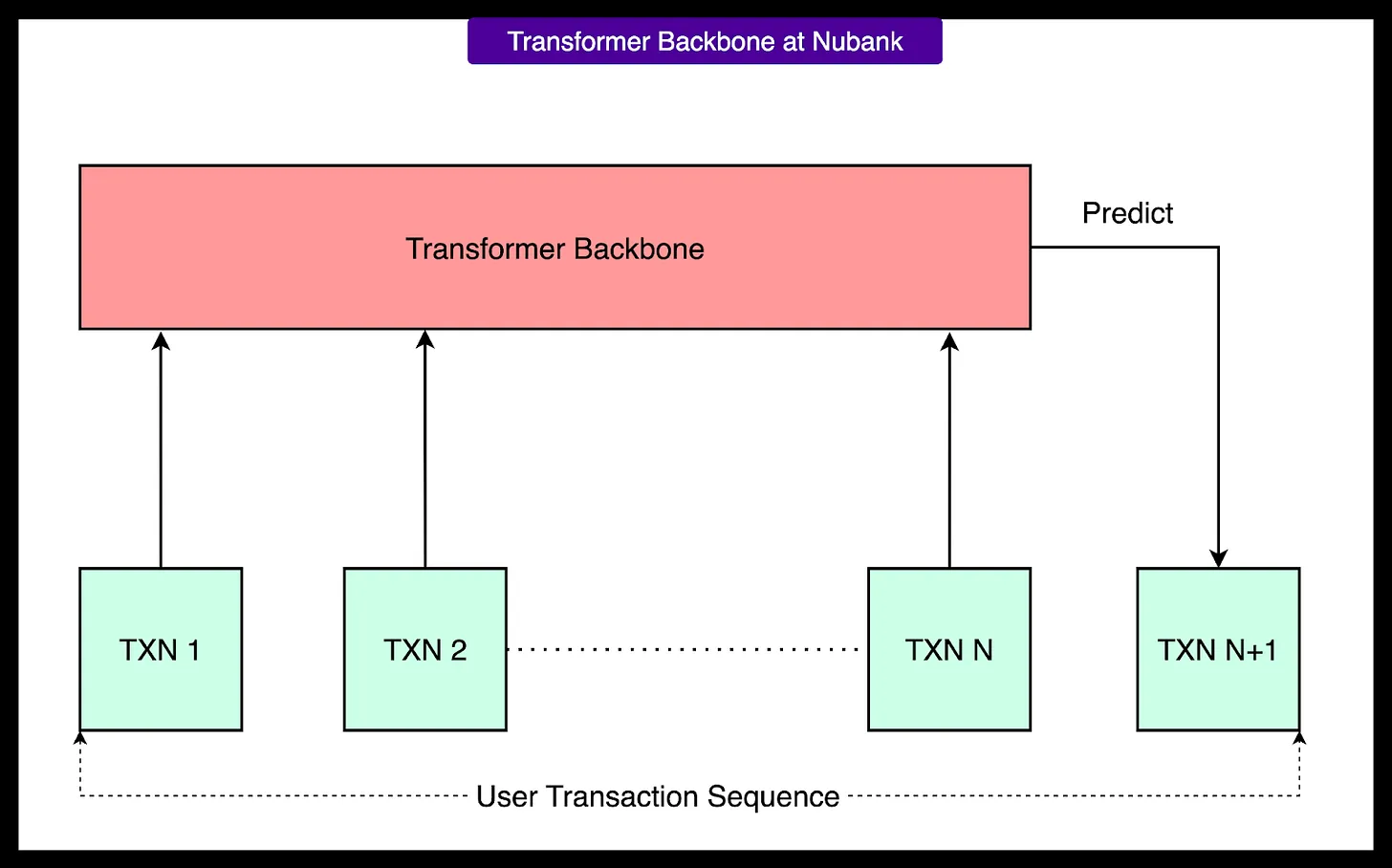

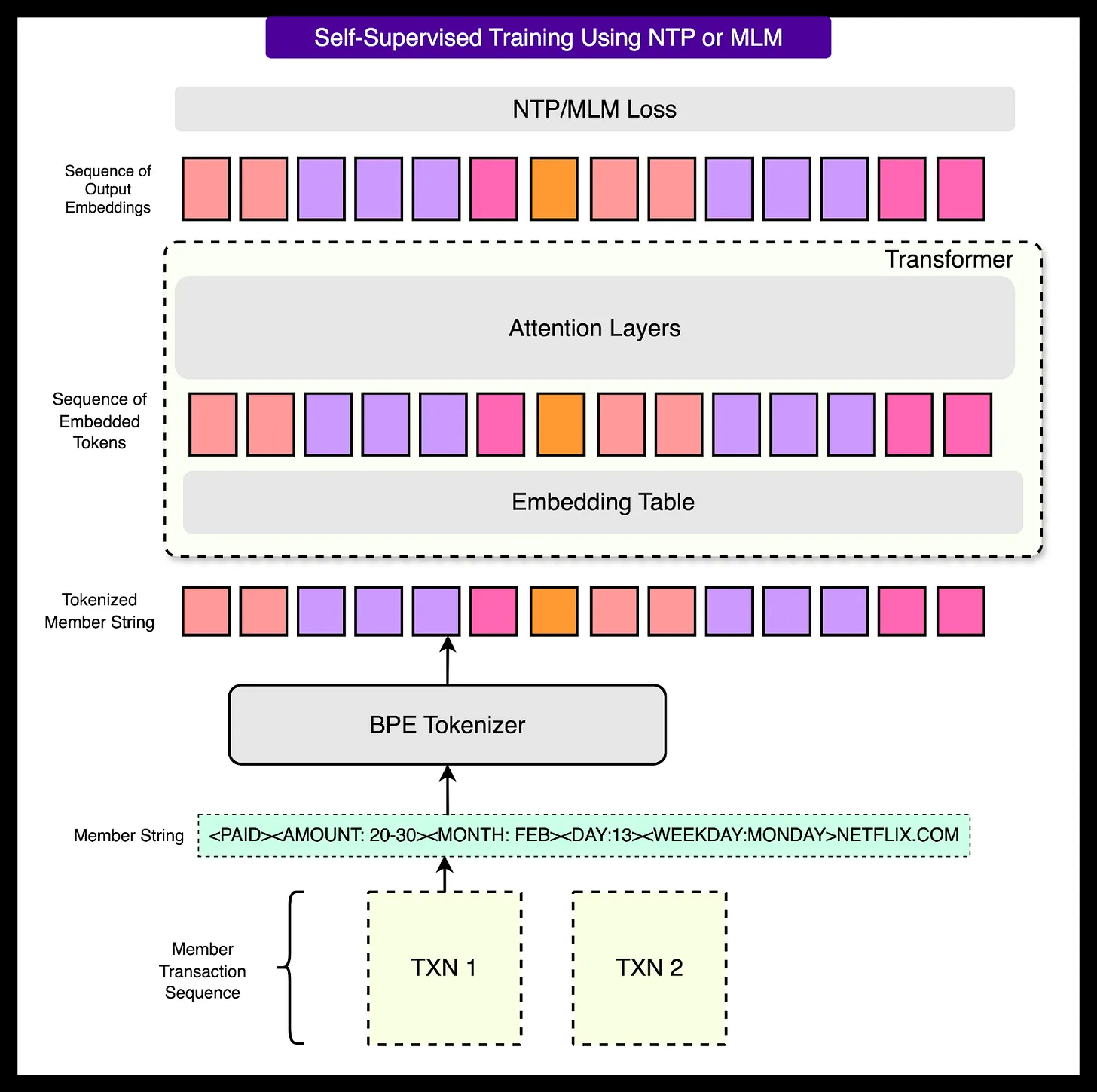

3 - Transformer Backbone

The tokenized transaction sequence is fed into the Transformer model. Nubank experimented with a variety of Transformer variants to optimize performance. These models are trained with self-supervised learning, without any manually labeled data, using two main types of objectives:

- Masked Language Modeling (MLM): A portion of the tokens in a transaction sequence is hidden, and the model is trained to predict the hidden tokens.

- Next Token Prediction (NTP): Let the model learn to predict the next token in the sequence.

The Transformer's output is a fixed-length user embedding, usually taken from the hidden state of the last token.
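Taking the embedding from the last token can be illustrated as follows. This is a minimal sketch with plain Python lists standing in for the model's hidden states; the padding mask and the function name `last_token_embedding` are assumptions for the example, not details from Nubank.

```python
def last_token_embedding(hidden_states, attention_mask):
    """Return the hidden state of the last non-padding token.

    hidden_states: one vector (list of floats) per token position.
    attention_mask: 1 for real tokens, 0 for padding (assumed convention).
    """
    real_positions = [i for i, keep in enumerate(attention_mask) if keep]
    if not real_positions:
        raise ValueError("sequence contains no real tokens")
    return hidden_states[real_positions[-1]]


# Three real tokens followed by one padding position.
hidden = [[0.1, 0.2], [0.3, 0.4], [0.5, 0.6], [0.0, 0.0]]
mask = [1, 1, 1, 0]
user_embedding = last_token_embedding(hidden, mask)
print(user_embedding)
```

Whatever the length of the transaction history, the result has the same dimensionality as a single hidden state, which is what makes the embedding fixed-length.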

4 - Self-supervised training

The model is trained on massive, unlabeled transaction data. Since no manual labeling is required, the system can utilize the entire transaction history of each user. By constantly predicting the missing or future parts of a user's transaction sequence, the model autonomously learns valuable patterns of financial behavior, such as spending cycles, recurring payments, and unusual transactions. As a simplified example, the model sees "coffee, lunch, then..." and tries to guess that "dinner" is next.

The scale of the training data and the number of model parameters are both critical. As model size and context window grow, performance improves significantly. For example, switching from a baseline MLM model to a large causal Transformer with an optimized attention layer improved downstream task performance by more than 7 percentage points.

5 - Downstream fine-tuning and integration

Once pre-training of the foundation model is complete, it can be fine-tuned for specific tasks. This is usually done by adding a prediction head on top of the Transformer and training it on labeled data. For example, in a credit default prediction task, known default labels would be used to fine-tune the model.
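The idea of a prediction head can be sketched with a logistic layer trained on top of user embeddings. This is a deliberately simplified illustration: it keeps the embeddings fixed and trains only the head, whereas full fine-tuning would also update the Transformer weights. The toy embeddings, labels, learning rate, and function names are all assumptions.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, embedding):
    """Probability of the positive class (e.g., default) for one user embedding."""
    logit = sum(w * x for w, x in zip(weights, embedding)) + bias
    return sigmoid(logit)


def train_head(embeddings, labels, lr=0.5, epochs=200):
    """Fit a logistic prediction head with plain stochastic gradient descent."""
    weights = [0.0] * len(embeddings[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(embeddings, labels):
            error = predict(weights, bias, x) - y  # gradient of log loss w.r.t. the logit
            weights = [w - lr * error * xi for w, xi in zip(weights, x)]
            bias -= lr * error
    return weights, bias


# Toy, made-up embeddings with known labels (1 = defaulted, 0 = repaid).
embeddings = [[0.9, 0.1], [0.8, 0.2], [0.1, 0.9], [0.2, 0.8]]
labels = [1, 1, 0, 0]
weights, bias = train_head(embeddings, labels)
print(predict(weights, bias, [0.85, 0.15]))
```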

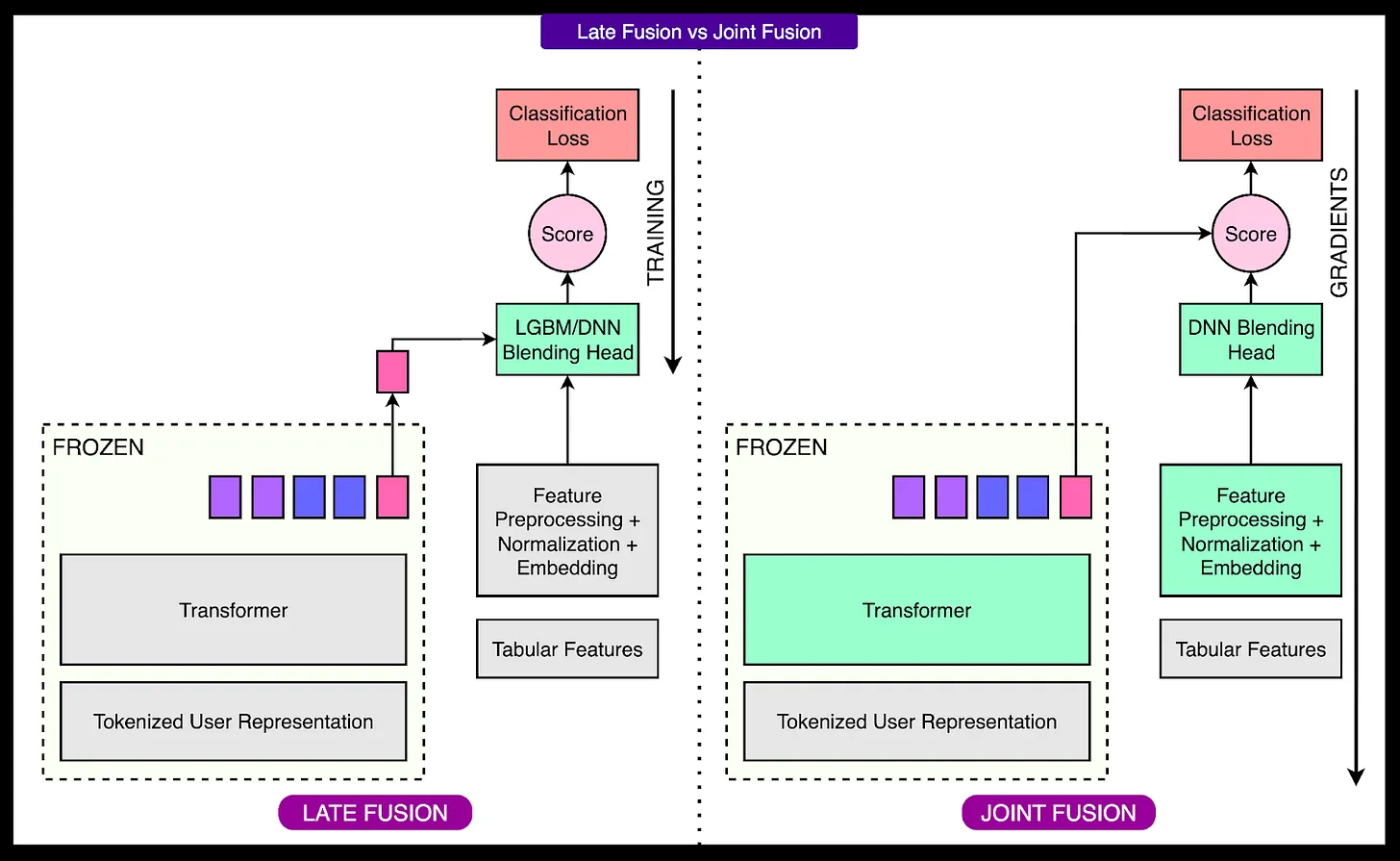

To integrate with existing systems, Nubank fuses the model-generated user embeddings with manually engineered tabular features. This fusion is realized in two ways:

- Late Fusion: Models such as LightGBM combine the embeddings with tabular data, but the two are trained separately.

- Joint Fusion: A deep neural network (specifically the DCNv2 architecture) trains the Transformer and the tabular data model together in an end-to-end system.

6 - Centralized Model Library

To make the entire architecture usable across the company, Nubank created a centralized AI platform. The platform stores pre-trained foundation models and provides a standardized fine-tuning process. Internal teams can access these models directly, incorporate their own business features, and deploy fine-tuned versions without having to train from scratch. This centralized management accelerates development and reduces redundant use of resources.

Converting transaction data into model-readable sequences

Preparing transaction data for a Transformer model poses two main challenges:

- Mixed Data Types: A single transaction contains structured fields (e.g., amount and date) and text fields (e.g., merchant name), which are difficult to represent uniformly in plain text or in a purely structured manner.

- High cardinality and cold start: The diversity of transactions is extremely high, with new combinations arising from different merchants, locations, or amounts. If a separate ID is assigned to each unique transaction, the vocabulary becomes extremely large, making the model difficult to train and unable to handle transactions that were not seen during training (the cold-start problem).

To address these challenges, Nubank explored several strategies for converting transactions into token sequences.

Option 1: ID-based representation

This approach assigns a numerical ID to each unique transaction, similar to what is done in recommender systems. While simple and efficient, this approach has obvious drawbacks: the number of unique transaction combinations is too large for the ID space to be manageable; at the same time, the model is not able to handle new unseen transactions.
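Both drawbacks show up even in a toy version of the ID-based scheme. The sketch below is an illustration under assumptions: a transaction is reduced to a `(merchant, amount)` pair, and `UNKNOWN_ID` is a hypothetical fallback for anything not seen during training.

```python
UNKNOWN_ID = 0  # hypothetical fallback ID for transactions never seen in training


def build_id_vocab(transactions):
    """Assign a fresh integer ID to every unique transaction tuple."""
    vocab = {}
    for tx in transactions:
        if tx not in vocab:
            vocab[tx] = len(vocab) + 1
    return vocab


def encode(vocab, tx):
    """Look up a transaction ID, falling back to UNKNOWN_ID for unseen ones."""
    return vocab.get(tx, UNKNOWN_ID)


training = [
    ("NETFLIX", 32.40),
    ("NETFLIX", 32.40),  # exact repeat: reuses the same ID
    ("UBER", 12.10),
    ("UBER", 12.15),     # five cents more: already a brand-new ID
]
vocab = build_id_vocab(training)

print(len(vocab))                          # vocabulary grows with every new combination
print(encode(vocab, ("SPOTIFY", 9.99)))    # unseen transaction collapses to UNKNOWN_ID
```

Two nearly identical rides get unrelated IDs, and a merchant that appears after training carries no information at all.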

Option 2: Everything as text

This method treats each transaction as a piece of natural language text, such as "description=NETFLIX amount=32.40 date=2023-05-12". This representation is very general and can handle transactions in any format. However, it is extremely computationally expensive. Converting structured fields into long text sequences creates a large number of unnecessary tokens, which makes the Transformer's attention cost skyrocket and slows down training.
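A rough count makes the cost concrete. The sketch below uses a crude regular-expression splitter as a stand-in for a real text tokenizer and compares it with a hypothetical seven-token compact encoding of the same transaction. Both tokenizations are assumptions for illustration, and the quadratic ratio covers only the attention term of the total cost.

```python
import re

text = "description=NETFLIX amount=32.40 date=2023-05-12"

# Crude stand-in for a text tokenizer: runs of letters, runs of digits,
# and each punctuation character become separate tokens.
text_tokens = re.findall(r"[A-Za-z]+|\d+|[^\sA-Za-z\d]", text)

# Hypothetical compact field-token encoding of the same transaction.
compact_tokens = ["<SIGN_NEG>", "<AMT_BIN_2>", "<MONTH_5>", "<WEEKDAY_4>",
                  "<DAY_12>", "NETF", "LIX"]


def attention_cost_ratio(n_long: int, n_short: int) -> float:
    """Relative attention cost, assuming cost grows with the square of length."""
    return (n_long / n_short) ** 2


ratio = attention_cost_ratio(len(text_tokens), len(compact_tokens))
print(len(text_tokens), len(compact_tokens), round(ratio, 2))
```

Because the gap is per transaction, it compounds over a history of thousands of transactions per user.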

Option 3: Hybrid coding scheme (Nubank's choice)

To balance generality and efficiency, Nubank developed a hybrid encoding strategy. It decomposes each transaction into a compact set of discrete field tokens: the amount sign, the amount bin, the date, and the merchant description after subword tokenization.

This hybrid approach preserves key structured information in a compact format, generalizes to new inputs, and keeps computational costs under control. Once each transaction is tokenized in this way, a user's complete transaction history can be concatenated into one long sequence and used as the input to the Transformer.
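Concatenating a history into one input sequence might look like the sketch below. The `max_tokens` budget, and the choice to drop the oldest whole transactions when the budget is exceeded, are assumptions added for illustration; the source does not say how Nubank handles histories longer than the context window.

```python
def build_user_sequence(transactions, max_tokens):
    """Concatenate per-transaction token lists in chronological order.

    transactions: iterable of (iso_date, tokens) pairs in any order.
    max_tokens: assumed context budget; the most recent whole transactions
    that fit are kept and older ones are dropped.
    """
    ordered = sorted(transactions, key=lambda item: item[0])
    kept, used = [], 0
    for _, tokens in reversed(ordered):  # walk from newest to oldest
        if used + len(tokens) > max_tokens:
            break
        kept.append(tokens)
        used += len(tokens)
    # Restore chronological order before flattening.
    return [token for tokens in reversed(kept) for token in tokens]


history = [
    ("2023-05-12", ["<SIGN_NEG>", "<AMT_BIN_2>", "NETF", "LIX"]),
    ("2023-05-01", ["<SIGN_POS>", "<AMT_BIN_6>", "SALA", "RY"]),
    ("2023-05-13", ["<SIGN_NEG>", "<AMT_BIN_1>", "CAFE"]),
]
sequence = build_user_sequence(history, max_tokens=8)
print(sequence)
```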

Training the foundation model

Nubank's engineers train the model with self-supervised learning, which means the model learns directly from transaction sequences without any manual labeling. This approach allows the system to leverage massive amounts of historical transaction data from millions of users.

Two main types of training objectives are used:

- Next Token Prediction (NTP): The model predicts the next token in the sequence based on the preceding tokens. Much like a language model predicting the next word in a sentence, this teaches the model the flow and structure of transaction behavior.

- Masked Language Modeling (MLM): Randomly hide some tokens in a sequence and train the model to guess the "covered" tokens. This forces the model to understand the context and learn deeper relationships between tokens, such as the connection between the day of the week and the type of purchase, or the merchant name and the amount of the transaction.
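The two objectives differ mainly in how inputs and targets are built from the same token sequence, which the following sketch shows. The `<MASK>` token name, the 15% masking rate (a common convention from language modeling), and the use of `None` for unscored positions are assumptions, not details from Nubank.

```python
import random

MASK = "<MASK>"  # hypothetical mask token


def next_token_pairs(tokens):
    """NTP: the target at each position is simply the following token."""
    return tokens[:-1], tokens[1:]


def mask_tokens(tokens, mask_prob=0.15, seed=0):
    """MLM: hide random tokens; only hidden positions carry a target."""
    rng = random.Random(seed)
    inputs, targets = [], []
    for token in tokens:
        if rng.random() < mask_prob:
            inputs.append(MASK)
            targets.append(token)
        else:
            inputs.append(token)
            targets.append(None)  # position is not scored
    return inputs, targets


sequence = ["<SIGN_NEG>", "<AMT_BIN_2>", "<MONTH_5>", "<WEEKDAY_4>", "NETF", "LIX"]

ntp_inputs, ntp_targets = next_token_pairs(sequence)
mlm_inputs, mlm_targets = mask_tokens(sequence, mask_prob=0.3, seed=42)
print(ntp_inputs, ntp_targets)
print(mlm_inputs, mlm_targets)
```

NTP only ever looks at earlier tokens, while MLM lets the model use context on both sides of each hidden position.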

Fusing sequence embedding with tabular data

While foundation models based on transaction sequences can capture complex behavioral patterns, many financial systems still rely on structured tabular data, such as information from credit bureaus or user profiles. To make full use of both data sources, they must be fused effectively.

Late fusion (baseline method)

In late fusion, the "frozen" embeddings generated by the pre-trained foundation model are combined with the tabular features and then fed into a traditional machine learning model such as LightGBM or XGBoost. This approach is simple to implement, but because the foundation model is trained independently, its embeddings cannot meaningfully interact with the tabular data during training, which limits the upper bound of overall performance.
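At the data level, late fusion amounts to concatenating each user's frozen embedding with that user's tabular features. The sketch below shows only this step. The feature names and values are made up, and the resulting rows are what would then be passed to a gradient-boosted tree model, which is not trained here.

```python
def late_fusion_row(user_embedding, tabular_features):
    """Concatenate a frozen embedding with tabular features into one flat row."""
    return list(user_embedding) + list(tabular_features)


def fused_column_names(embedding_dim, tabular_names):
    """Name the embedding dimensions so they sit alongside the named tabular columns."""
    return [f"emb_{i}" for i in range(embedding_dim)] + list(tabular_names)


# Made-up values: a 3-dimensional embedding plus two hypothetical tabular features.
tabular_names = ["bureau_score", "account_age_months"]
embeddings = [[0.12, -0.40, 0.88], [0.05, 0.31, -0.27]]
tabular = [[710, 18], [645, 3]]

columns = fused_column_names(len(embeddings[0]), tabular_names)
rows = [late_fusion_row(e, t) for e, t in zip(embeddings, tabular)]
print(columns)
print(rows[0])
```

Since the embeddings are computed before this step and never updated afterwards, the downstream model can only use whatever the pre-trained model happened to encode.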

Joint fusion (recommended method)

To overcome this limitation, Nubank developed a joint fusion architecture. This approach trains the Transformer and the model that processes tabular data simultaneously in an end-to-end system. In this way, the model learns to extract information from the transaction sequences that complements the tabular data, and the two components are jointly optimized for the same prediction objective.

Nubank chose the DCNv2 (Deep & Cross Network v2) architecture to process the tabular features. DCNv2 is a deep neural network designed specifically for structured inputs that efficiently captures cross-interactions between features.
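The cross-interaction mechanism comes from DCNv2's cross layer, which computes x_{l+1} = x_0 ⊙ (W x_l + b) + x_l, where x_0 is the layer-0 input and ⊙ is element-wise multiplication. The sketch below implements one such layer in plain Python to show the arithmetic. The input values and weights are arbitrary, and how Nubank wires this layer together with the Transformer output is not specified in the source.

```python
def cross_layer(x0, xl, weight, bias):
    """One DCNv2 cross layer: x0 * (W @ xl + b) + xl, element-wise.

    x0: original input features; xl: output of the previous cross layer.
    weight: square matrix as a list of rows; bias: one value per feature.
    """
    projected = [
        sum(w * x for w, x in zip(row, xl)) + b
        for row, b in zip(weight, bias)
    ]
    return [x0_i * p_i + xl_i for x0_i, p_i, xl_i in zip(x0, projected, xl)]


# Arbitrary two-feature example; the first layer uses xl = x0.
x0 = [1.0, 2.0]
weight = [[0.0, 1.0],
          [1.0, 0.0]]  # swaps the two features, so each one meets the other
bias = [0.5, -0.5]

x1 = cross_layer(x0, x0, weight, bias)
x2 = cross_layer(x0, x1, weight, bias)  # stacking raises the interaction order
print(x1, x2)
```

Each layer multiplies the original features by a learned projection of the current ones, so explicit feature products appear without hand-crafting them, and the residual term keeps lower-order information.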

Nubank's work with foundation models represents a major leap forward in how financial institutions understand and serve their customers. By moving away from manual feature engineering and embracing self-supervised learning on raw transaction data, Nubank has built a modeling system that is both scalable and expressive.

Key to this success is how the system fits into Nubank's broader AI infrastructure. Instead of building isolated models for each scenario, Nubank developed a centralized AI platform. Teams can choose between two models depending on their needs: a model that uses only transaction sequence embeddings, and a hybrid model that combines embeddings with structured tabular features using the joint fusion architecture.

This flexibility is critical. Some teams can plug user embeddings directly into their existing, well-established tabular models; others may rely entirely on the Transformer-based sequence model for new tasks. The architecture is also compatible with future data sources such as app usage patterns or customer support chats. This is not just a technical proof of concept, but a production-grade solution that delivers measurable benefits in core financial prediction tasks.