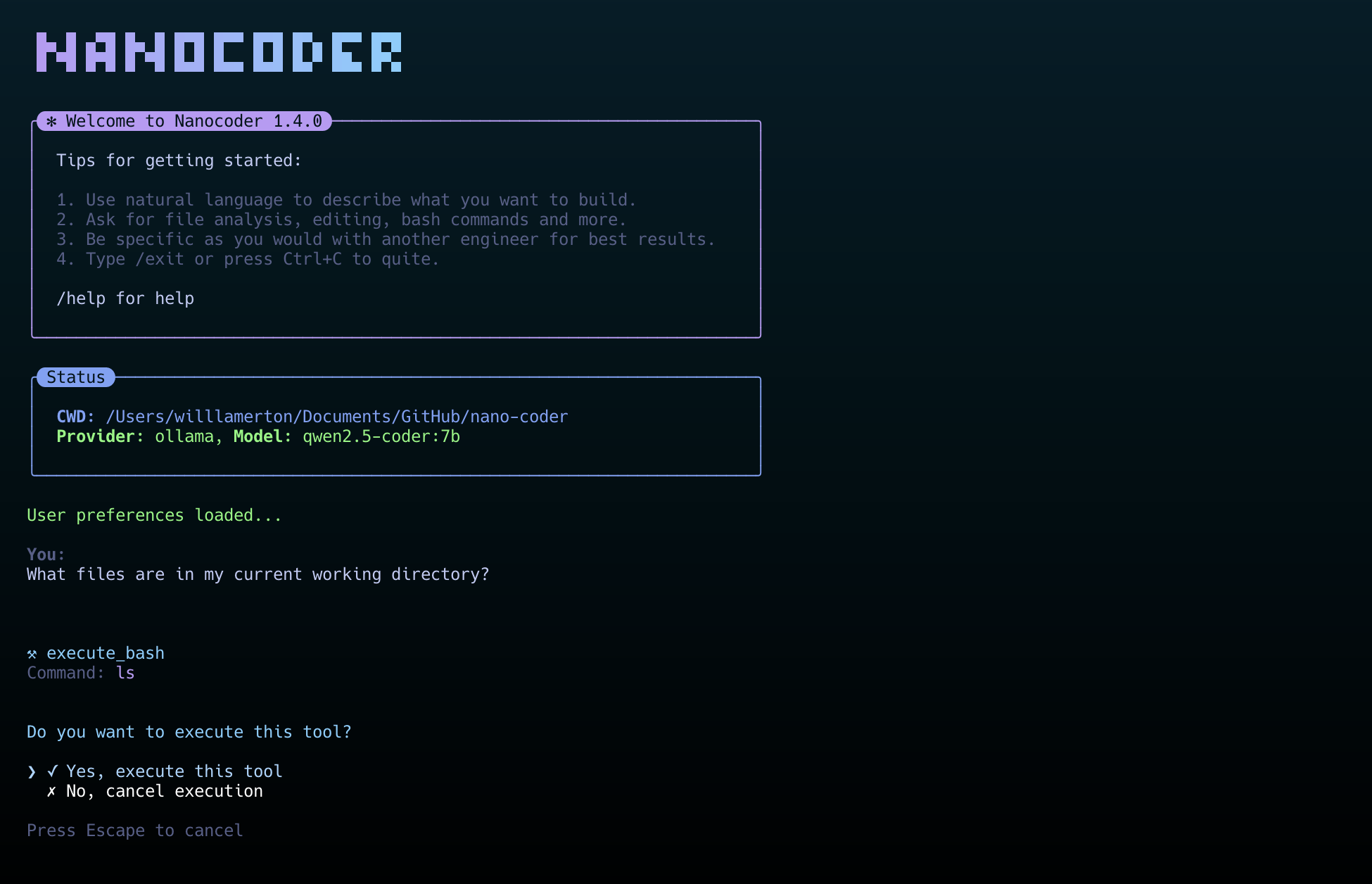

nanocoder is an AI programming tool that runs in a local terminal, built around a "local first" philosophy that protects user privacy and data security. It lets developers interact with AI on their own computers through a command-line interface to write, review, and refactor code. nanocoder supports a wide range of AI model providers: users can connect to Ollama, which runs entirely locally, for offline code generation, or configure OpenRouter or any OpenAI-compatible API to use more powerful cloud-based models. It also has a built-in tool system that lets the AI read and write files and execute terminal commands directly, and it can connect to external services such as file systems, GitHub repositories, and web search via the Model Context Protocol (MCP), further extending its capabilities.

Function List

- Support for multiple AI service providers: It is possible to connect to locally running Ollama, to OpenRouter in the cloud, and to any service compatible with the OpenAI API (e.g. LM Studio, vLLM, etc.).

- Advanced Tooling System: Built-in tools for file manipulation, execution of bash commands, etc. AI can perform operations such as reading and writing files or running code with the user's permission.

- Model Context Protocol (MCP): Support for connecting to MCP servers, which extends AI's tooling capabilities, such as access to the file system, GitHub, Brave search, and memory repositories.

- Custom command system: In the project's `.nanocoder/commands` directory, users can create reusable commands with parameters as Markdown files, which simplifies highly repetitive tasks such as generating tests or running code reviews.

- Intelligent user experience: Provides command auto-completion, conversation history, and session state retention, and displays the AI's thinking process, token usage, and execution time in real time as a task runs.

- Project-level configuration: By placing an `agents.config.json` file in a project, a different AI model or API key can be set for each project for easy management.

- Developer friendly: The entire project is built with TypeScript, with a clear code structure that is easy to extend. It also provides detailed debugging tools and log-level control.

Using Help

nanocoder is a tool that runs in the terminal (command line interface). The following section describes in detail how to install and use it.

Installation process

1. Environment preparation

Before installing nanocoder, make sure that Node.js (version 18 or later) and the npm package manager are installed on your computer. npm is usually installed together with Node.js.

2. Global installation

A global installation is recommended so that you can run nanocoder from any directory on your computer. Open your terminal and enter the following command:

npm install -g @motesoftware/nanocoder

Once the installation is complete, you can start it from any project folder by typing the `nanocoder` command.

Configuring the AI model

The strength of nanocoder is that it can connect to different AI models. Select and configure an AI service according to your needs. The configuration is stored in a file called `agents.config.json`, which you place in the root directory of your project.

Option 1: Use Ollama (local model)

This is the most privacy-preserving option, as the model runs entirely on your own computer.

- First, you need to install and run Ollama.

- Then, download a model from the Ollama model library. For example, to download the lightweight `qwen2:0.5b` model:

  ollama pull qwen2:0.5b

- After completing the steps above, run `nanocoder` directly. It will automatically detect your local Ollama service and connect to it.
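To give a feel for what detecting a local Ollama service can involve, here is a minimal TypeScript sketch. It assumes Ollama's default address `http://localhost:11434` and its `/api/tags` endpoint, which lists locally pulled models. The names `FetchLike`, `parseModelNames`, and `listLocalModels` are hypothetical, and this is not nanocoder's actual detection code.

```typescript
// Minimal shape of the fetch function the sketch relies on, so it can be
// exercised with a stub as well as with the fetch built into Node.js 18+.
type FetchLike = (url: string) => Promise<{ ok: boolean; json(): Promise<unknown> }>;

// Ollama's default local address (assumed to be unchanged on your machine).
const OLLAMA_URL = "http://localhost:11434";

// Extract model names from an /api/tags response body: { models: [{ name }] }.
function parseModelNames(body: unknown): string[] {
  if (typeof body !== "object" || body === null) return [];
  const models = (body as { models?: unknown }).models;
  if (!Array.isArray(models)) return [];
  return models
    .map((m) => (typeof m === "object" && m !== null ? (m as { name?: unknown }).name : undefined))
    .filter((name): name is string => typeof name === "string");
}

// Returns the locally available model names, or null if Ollama is unreachable.
async function listLocalModels(
  fetchFn: FetchLike,
  baseUrl: string = OLLAMA_URL,
): Promise<string[] | null> {
  try {
    const res = await fetchFn(`${baseUrl}/api/tags`);
    return res.ok ? parseModelNames(await res.json()) : null;
  } catch {
    return null; // e.g. connection refused: Ollama is probably not running
  }
}
```

Under Node.js 18 or later, the built-in `fetch` can be passed in directly, for example `listLocalModels(fetch)`. A `null` result suggests that Ollama is not running, and an empty array suggests that no model has been pulled yet.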

Option 2: Use OpenRouter (cloud model)

OpenRouter provides access to a wide range of AI models through a single service, such as GPT-4o and Claude 3.

- In your project root directory, create an `agents.config.json` file.

- Copy the following into the file, replace `your-api-key-here` with your own OpenRouter API key, and fill in the `models` field with the names of the models you want to use.

      {
        "nanocoder": {
          "openRouter": {
            "apiKey": "your-api-key-here",
            "models": ["openai/gpt-4o", "anthropic/claude-3-opus"]
          }
        }
      }

Option 3: Use OpenAI-compatible APIs (local or remote)

If the AI service you are using (e.g., LM Studio, vLLM, or a service you built yourself) provides an OpenAI-compatible API interface, it can also be configured.

- Similarly, create an `agents.config.json` file in the project root directory.

- Fill in the following, replacing the `baseUrl` value with your service address and adding an API key if the service requires one.

      {
        "nanocoder": {
          "openAICompatible": {
            "baseUrl": "http://localhost:1234",
            "apiKey": "optional-api-key",
            "models": ["model-1", "model-2"]
          }
        }
      }
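The configuration shapes from the JSON examples above can be written down as TypeScript types, which makes the expected fields explicit and allows a quick sanity check before launching the tool. This is a sketch based only on the examples in this article: the type names and the `checkAgentsConfig` helper are hypothetical, and the real schema may accept more fields.

```typescript
// Field names mirror the JSON examples in this article; everything else is assumed.
interface OpenRouterConfig {
  apiKey: string;
  models: string[];
}

interface OpenAICompatibleConfig {
  baseUrl: string;
  apiKey?: string; // optional, e.g. for a local server that needs no key
  models: string[];
}

interface AgentsConfig {
  nanocoder: {
    openRouter?: OpenRouterConfig;
    openAICompatible?: OpenAICompatibleConfig;
  };
}

// Parse the file's text and report configuration problems as readable messages.
function checkAgentsConfig(jsonText: string): string[] {
  let parsed: Partial<AgentsConfig> | null = null;
  try {
    parsed = JSON.parse(jsonText) as Partial<AgentsConfig> | null;
  } catch {
    return ["agents.config.json is not valid JSON"];
  }
  const section = parsed?.nanocoder;
  if (!section) return ['missing top-level "nanocoder" object'];

  const problems: string[] = [];
  const { openRouter, openAICompatible } = section;
  if (openRouter) {
    if (!openRouter.apiKey || openRouter.apiKey === "your-api-key-here") {
      problems.push("openRouter.apiKey is missing or still the placeholder");
    }
    if (!Array.isArray(openRouter.models) || openRouter.models.length === 0) {
      problems.push("openRouter.models must list at least one model");
    }
  }
  if (openAICompatible) {
    if (!/^https?:\/\//.test(String(openAICompatible.baseUrl))) {
      problems.push("openAICompatible.baseUrl must start with http:// or https://");
    }
    if (!Array.isArray(openAICompatible.models) || openAICompatible.models.length === 0) {
      problems.push("openAICompatible.models must list at least one model");
    }
  }
  return problems;
}
```

An empty result means the sketch found nothing obviously wrong. It does not verify that the key is valid or that the listed models exist.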

Basic commands

After launching nanocoder, you are taken to an interactive chat interface. You can either describe what you need in natural language or control the program with built-in commands that begin with a slash (`/`).

- `/help`: Displays a list of all available commands.

- `/provider`: Switches the AI service provider, e.g. from Ollama to OpenRouter.

- `/model`: Switches between the models offered by the current service provider.

- `/clear`: Clears the current chat and starts a new session.

- `/debug`: Toggles the log display level to show detailed information about program operation.

- `/exit`: Exits the nanocoder program.
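The split between natural-language input and slash commands can be illustrated with a small TypeScript sketch. The built-in names come from the list above; the `parseInput` function and its result type are hypothetical and only show one plausible way to classify a line of input, not how nanocoder does it internally.

```typescript
// Built-in commands listed in this article.
const BUILT_IN = ["help", "provider", "model", "clear", "debug", "exit"] as const;
type BuiltIn = (typeof BUILT_IN)[number];

type ParsedInput =
  | { kind: "builtin"; name: BuiltIn; rest: string }
  | { kind: "custom"; name: string; rest: string }
  | { kind: "prompt"; text: string };

// Classify one line of user input.
function parseInput(line: string): ParsedInput {
  const trimmed = line.trim();
  // Anything that does not start with a slash is a natural-language prompt.
  if (!trimmed.startsWith("/")) return { kind: "prompt", text: trimmed };

  // First word after the slash is the command name, the remainder its arguments.
  const [head = "", ...tail] = trimmed.slice(1).split(/\s+/);
  const rest = tail.join(" ");
  const builtin = BUILT_IN.find((c) => c === head);
  return builtin
    ? { kind: "builtin", name: builtin, rest }
    : { kind: "custom", name: head, rest }; // assumed to be a user-defined command
}
```

In this sketch, `/model` is classified as a built-in command, while an unknown name such as `/test` falls through to the custom-command case.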

Using custom commands

Custom commands are a distinctive feature of nanocoder that let you turn common, complex tasks into templates.

- In the root directory of your project, create a folder named `.nanocoder`, and inside it create another folder named `commands`.

- In the `.nanocoder/commands/` directory, create a Markdown file, for example `test.md`.

- In this file, you can define the command's description, aliases, and parameters. For example, to create a command that generates unit tests:

      ---
      description: "Generate comprehensive unit tests for the specified component"
      aliases: ["testing", "spec"]
      parameters:
        - name: "component"
          description: "Name of the component or function to test"
          required: true
      ---
      Generate comprehensive unit tests for {{component}}. The tests should cover the following:
      - Behavior under normal conditions
      - Boundary conditions and error handling
      - Mocking of external dependencies
      - Clear test descriptions

- After saving the file, you can use the new command in nanocoder. For example, to generate tests for `UserService`, enter:

  /test component="UserService"

The program automatically replaces `{{component}}` with `UserService` and then sends the full prompt to the AI model to perform the task.
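The substitution step described above can be sketched in a few lines of TypeScript. This is an illustration under stated assumptions, not nanocoder's implementation: `parseArgs` and `renderTemplate` are hypothetical helpers, and the sketch only handles the `key="value"` argument syntax and `{{name}}` placeholders shown in this article.

```typescript
// Parse arguments of the form key="value" (the syntax shown in this article).
function parseArgs(argText: string): Record<string, string> {
  const args: Record<string, string> = {};
  const pattern = /(\w+)="([^"]*)"/g;
  let match: RegExpExecArray | null;
  while ((match = pattern.exec(argText)) !== null) {
    const [, key = "", value = ""] = match;
    args[key] = value;
  }
  return args;
}

// Replace each {{name}} placeholder; fail loudly when a value is missing.
function renderTemplate(template: string, args: Record<string, string>): string {
  return template.replace(/\{\{\s*(\w+)\s*\}\}/g, (_whole, name: string) => {
    const value = args[name];
    if (value === undefined) {
      throw new Error(`Missing required parameter: ${name}`);
    }
    return value;
  });
}

// Example: the arguments typed after "/test" fill in the template body.
const prompt = renderTemplate(
  "Generate comprehensive unit tests for {{component}}.",
  parseArgs('component="UserService"'),
);
// prompt === "Generate comprehensive unit tests for UserService."
```

Throwing on a missing value mirrors the `required: true` flag in the example command file. A fuller version would read that flag from the front matter instead of treating every placeholder as required.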

Application scenarios

- Quickly generate code snippets

  When developers need to write a new function or module, they can describe the requirement to nanocoder directly in the terminal, for example, "Create a TypeScript function to parse CSV files", and the AI generates the corresponding code.

- Code review and refactoring

  Existing code can be handed to nanocoder for review and improvement suggestions. Custom refactoring commands such as `/refactor:dry` can also be used to have the AI optimize the code according to the "don't repeat yourself" principle.

- Writing unit tests

  Writing unit test cases for existing functions or components is one of the more tedious parts of software development. With a custom `/test` command, developers only need to provide the component name for the AI to write the test code, improving development efficiency.

- Learning a new programming language or framework

  When approaching unfamiliar technology, you can treat nanocoder as an on-call programming teacher: ask it questions, request code examples, or have it explain the purpose and workings of a piece of code.

QA

- Which operating systems does nanocoder support?

  nanocoder is built on Node.js, so it runs on all major operating systems, including Windows, macOS, and Linux, as long as Node.js is installed.

- What are the requirements for using a local Ollama model?

  You first need to install and run Ollama on your computer. Hardware requirements depend mainly on the size of the model you run: the larger the model (e.g. more than 7 billion parameters), the more RAM it needs, and a capable graphics card (GPU) helps keep generation fast.

- Are my API key and code data secure?

  nanocoder is designed with "local first" in mind. When you use Ollama, all data and computation stay on your local computer and do not pass through any external server. When you use a cloud service such as OpenRouter, your code and prompts are sent to the service provider via its API, so they are subject to that provider's data privacy policy. The `agents.config.json` configuration file is saved in your local project directory and is not uploaded.

- How is nanocoder different from other AI programming tools?

  First, nanocoder runs purely in the command-line terminal, which makes it ideal for developers who are used to keyboard-driven terminal workflows. Second, it emphasizes local-first operation and user control, giving users the freedom to choose between a local model and a cloud-based model, with fine-grained management via project-level configuration files.