GLM-4.5V: A multimodal dialog model capable of understanding images and videos and generating code

GLM-4.5V is a new-generation vision-language model (VLM) developed by Zhipu AI (Z.AI). It is built on the flagship text model GLM-4.5-Air and uses a Mixture-of-Experts (MoE) architecture with 106 billion total parameters, of which 12 billion are active. GLM-4.5V not only processes images and text, but also understands visual...

Step3: An efficient open-source large model for multimodal content generation

Step3 is an open-source multimodal large model project developed by StepFun and hosted on GitHub. It aims to provide efficient, cost-effective generation of text, image, and speech content. The project is centered on a 321-billion-parameter Mixture-of-Experts (MoE) model with 38 billion active parameters, optimized for inference speed and performance, suitable for...

AutoArk: A Multi-Agent AI Platform for Collaborating on Complex Tasks

AutoArk is an artificial intelligence company whose core technology is its self-developed end-to-end multimodal model EVA-1, which outperforms GPT-4o on a number of international benchmarks. Based on EVA-1, AutoArk has further built a multi-agent framework called "ArkAgentOS". This framework...

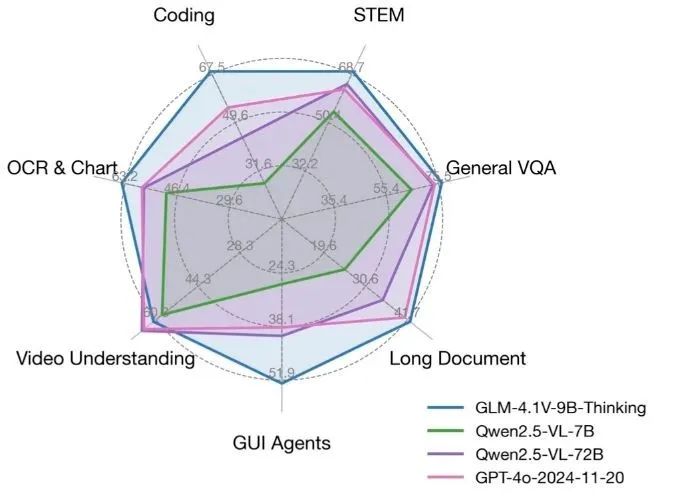

GLM-4.1V-Thinking: An open-source visual reasoning model for complex multimodal tasks

GLM-4.1V-Thinking is an open-source vision-language model developed by the KEG Lab at Tsinghua University (THUDM) that focuses on multimodal reasoning. Built on the GLM-4-9B-0414 base model, GLM-4.1V-Thinking uses reinforcement learning and a "chain-of-thought" reasoning mechanism to...

Gemma 3n

Google is extending its push for inclusive AI with the release of Gemma 3 and Gemma 3 QAT, open-source models that run on a single cloud or desktop accelerator. If Gemma 3 brought powerful cloud and desktop capabilities to developers, this May 20, 2025 release...

BAGEL

BAGEL is an open-source multimodal foundation model developed by the ByteDance Seed team and hosted on GitHub. It integrates text comprehension, image generation, and image editing to support cross-modal tasks. The model has 7B active parameters (14B in total) and uses Mixture-of-Tra...

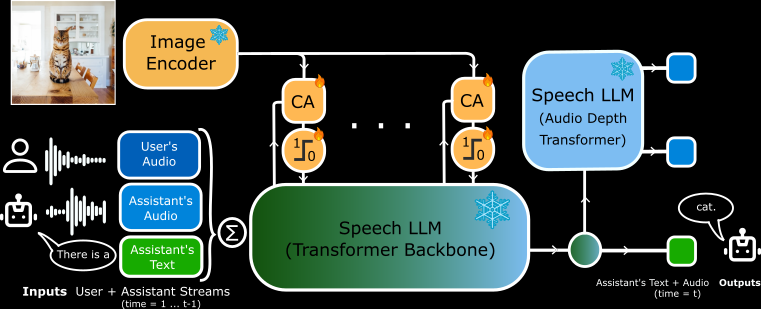

MoshiVis

MoshiVis is an open-source project developed by Kyutai Labs and hosted on GitHub. It builds on the Moshi speech-text model (7B parameters), adding about 206 million new adapter parameters and a frozen PaliGemma2 vision encoder (400M parameters), allowing the model...

Qwen2.5-Omni

Qwen2.5-Omni is an open-source multimodal AI model developed by the Qwen team at Alibaba Cloud. It accepts text, images, audio, and video as input and generates text or natural speech responses in real time. The model was released on March 26, 2025, and the code and model files are hosted on GitHu...

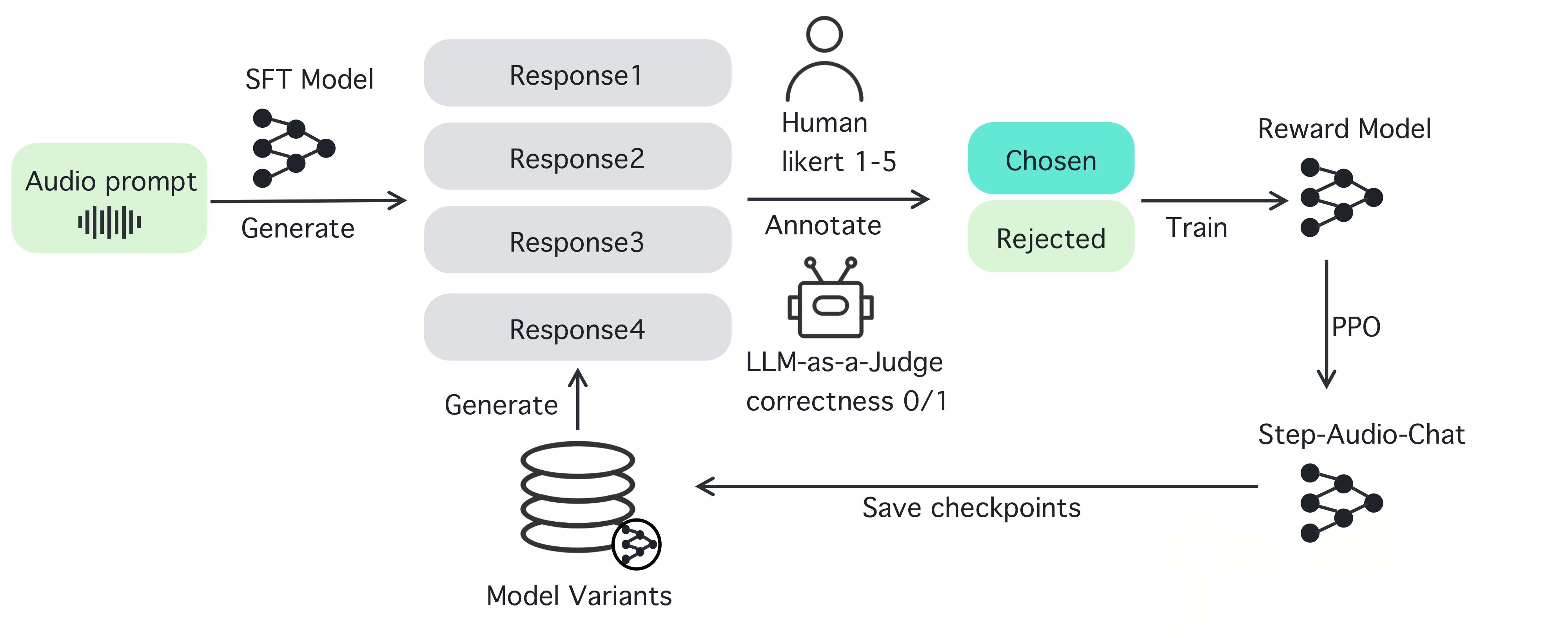

Step-Audio

Step-Audio is an open-source intelligent voice interaction framework designed to provide out-of-the-box speech understanding and generation for production environments. It supports multilingual dialogue (e.g., Chinese, English, Japanese), emotional speech (e.g., happy, sad), regional dialects (e.g., Cantonese, Sichuanese), and adjustable speech rate and rhythmic styles (e.g., rap). Step-...

VITA

VITA is a leading open-source interactive multimodal large language model project that pioneered truly full-modal interaction. In August 2024 the project released VITA-1.0, the first open-source interactive full-modal large language model. In December 2024, the project launched a major upgrade, VITA-1.5,...

Megrez-3B-Omni

Infini-Megrez is an edge intelligence solution developed by Infinigence AI that aims to achieve efficient multimodal understanding and analysis through hardware-software co-design. At the core of the project is the Megrez-3B model, which supports integrated image, text, and audio understanding with high accuracy and fast inference...
