Publishing rules automate the generation of article topics (titles) and provide contextual references during the article generation phase. Set up publishing rules and generate topics according to the real optimization goals of the website rather than using them blindly. It is recommended to test them on a small scale early on and check whether the generated topics meet expectations. The main operation of the plugin...

1. First, configure the APIs used to generate articles. The APIs added under custom API configuration and pre-built API configuration are used to generate text content such as topics, articles, and article structure. 1.1 Custom APIs compatible with the OpenAI format are supported. Remember to fill in the /v1/chat/completions part...
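As an illustration of the reminder about the `/v1/chat/completions` part, here is a minimal sketch of a helper that completes a base URL before it is saved. The function name `complete_endpoint` and the example host are hypothetical, and this is not the plugin's own code; it only shows one way to make sure the path is present.

```python
from urllib.parse import urlsplit, urlunsplit

# Path expected by OpenAI-compatible chat endpoints.
CHAT_PATH = "/v1/chat/completions"


def complete_endpoint(url: str) -> str:
    """Return the URL with the chat-completions path appended if it is missing."""
    parts = urlsplit(url.strip())
    path = parts.path.rstrip("/")
    if path.endswith(CHAT_PATH):
        full_path = path  # already complete
    elif path.endswith("/v1"):
        full_path = path + "/chat/completions"  # only the version prefix was given
    else:
        full_path = path + CHAT_PATH  # bare host or custom prefix
    return urlunsplit(
        (parts.scheme, parts.netloc, full_path, parts.query, parts.fragment)
    )


# Hypothetical host, used only for illustration.
print(complete_endpoint("https://api.example.com"))
```

The helper leaves an already complete URL unchanged, so it can be applied safely each time the setting is saved.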

1. Start by creating a simple rule. Creating a rule prepares the material needed before topics (article titles) are created, and it is the starting point of the entire article generation process. Here I use the simplest type of rule, "Random Category", to generate a rule. Explanation of the following configuration: randomly use the names and descriptions of 10 categories ...

1.1 Operational Guidelines. 1.1 Multi-language selection: multi-language selection is valid only for the channels shown in the figure. 1.2 Chinese keyword mining techniques: it is recommended to use only Google and Baidu. For keywords that mix Chinese and English, it is recommended to write the English in lowercase and separate multiple words with spaces. Keyword expansion techniques: use a large model to get the basis ...

0. Required: configure the site categories completely and in detail. The category name, alias (English), and description must all be detailed. AI Content Manager relies heavily on the category name and its detailed description to control the direction of the generated content and to select the appropriate category automatically. After setting up the categories, please click once to insert...

This is a theme exclusive to AI Content Generation Manager; the template cannot be used directly. After enabling it, all related settings are under "Theme Settings". 1. Enable secondary domain name access. When should secondary domain name access be enabled? When you already have a website and want to boost the weight of the main site and attract traffic; or for individual...

Well, by this point we have over 1000 lines in our markdown file. This one is mostly for fun.

If you've been waiting for an introduction to humanlayer, then this is it. If you already apply Element 6 (Start/Pause/Resume via a simple API) and Element 7 (Contacting humans via tool calls), then you are ready to integrate this element. Allow the user to start/pause/resume from s...

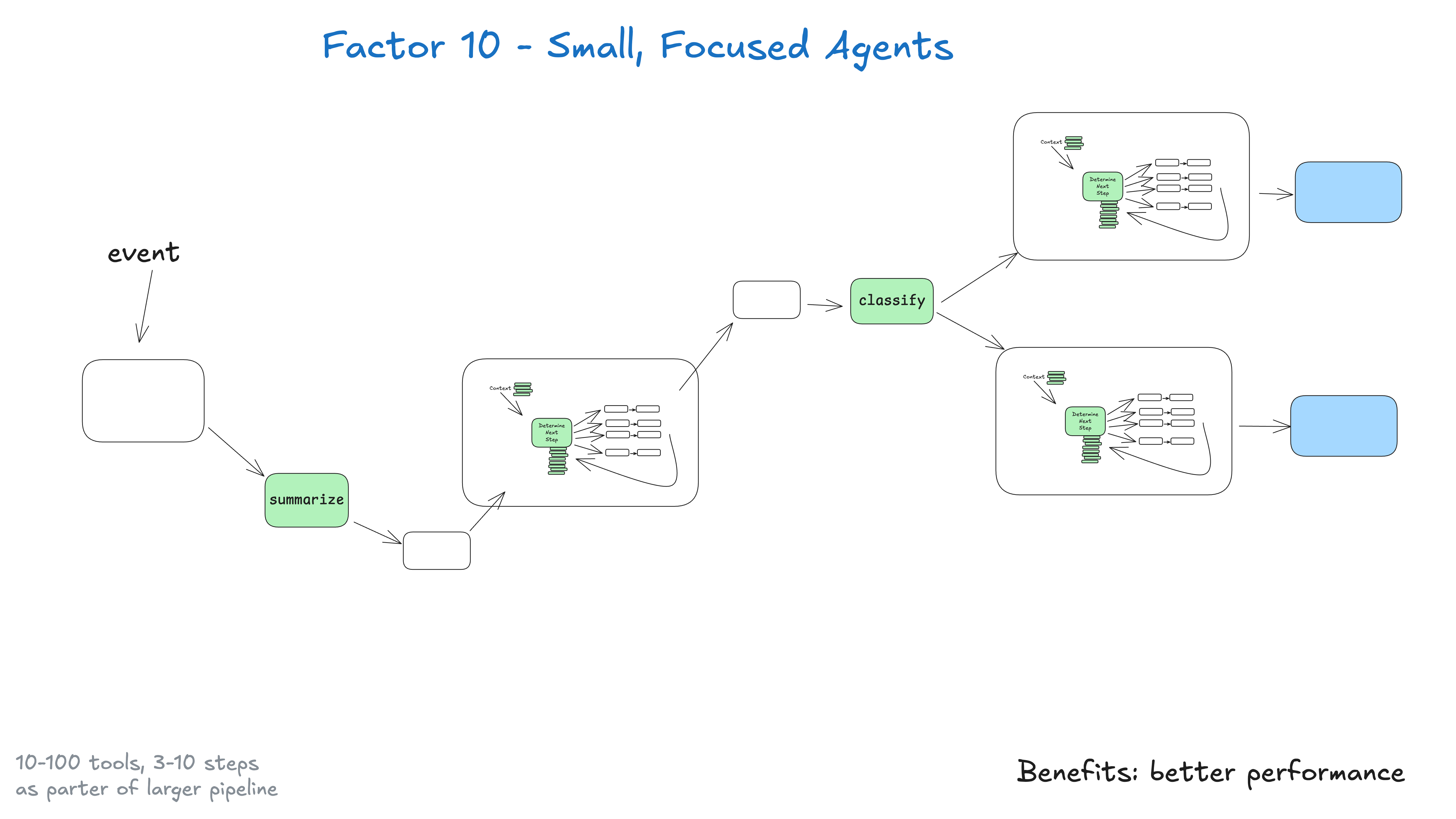

Rather than building monolithic agents that try to do everything, build small, focused agents that each do one thing well. Agents are just one building block in a larger, largely deterministic system. The key insight here is the limitation of large language models: the larger and more complex the task, the...

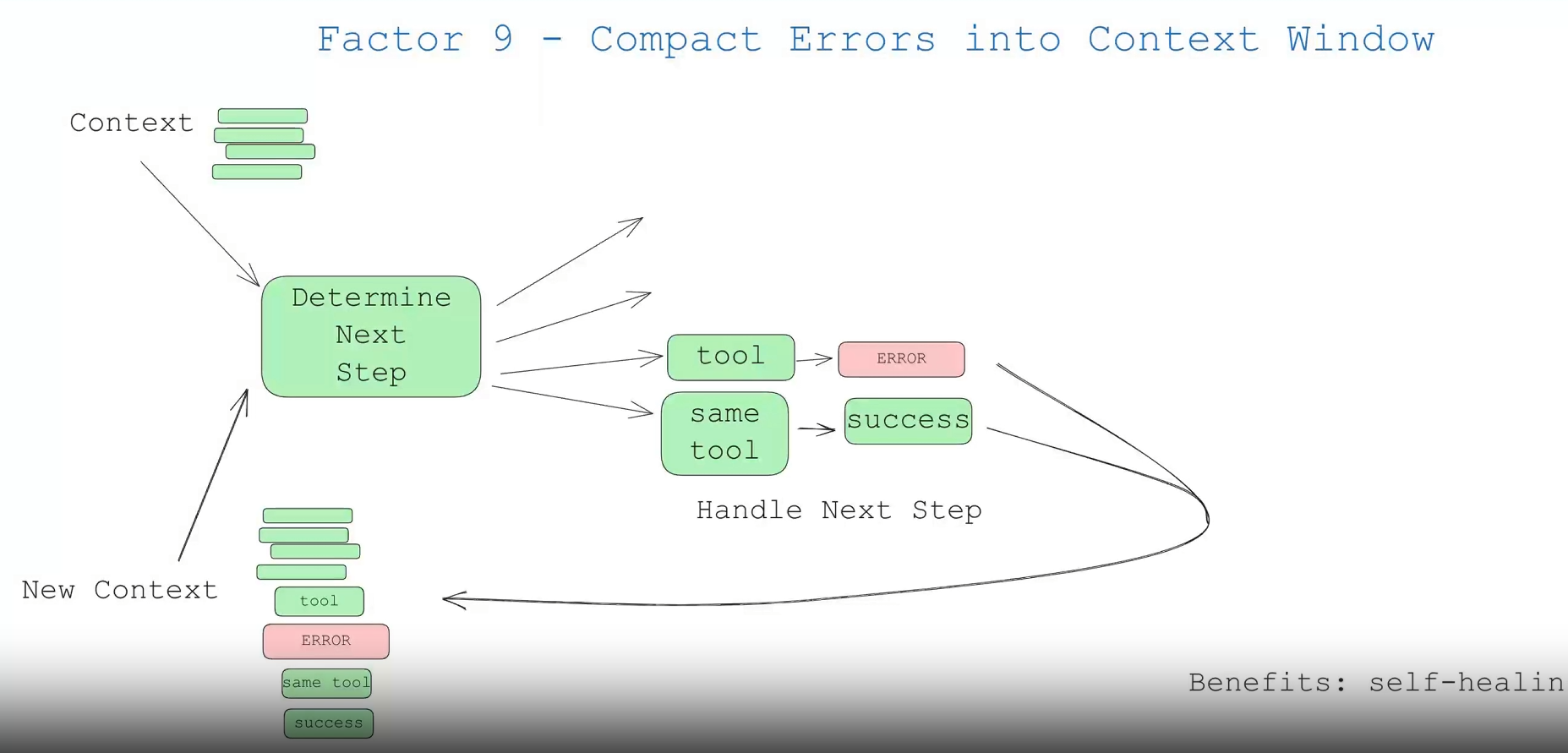

This is a small point, but worth mentioning. One of the benefits of an agent is "self-healing": for short tasks, a large language model (LLM) may call a tool that fails. There is a good chance that a good LLM will be able to read an error message or stack trace and...
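A minimal sketch of this self-healing idea: when a tool raises, the error text is appended to the thread so the model can see it on the next step. The event shapes, the `fake_llm` stand-in, and the cap of three consecutive errors are all assumptions made for illustration, not a prescribed implementation.

```python
from typing import Callable


def run_with_error_feedback(
    next_step: Callable[[list[dict]], dict],
    run_tool: Callable[[dict], str],
    max_consecutive_errors: int = 3,  # assumed limit, tune for your use case
) -> list[dict]:
    """Run the loop, feeding tool errors back into the thread."""
    thread: list[dict] = []
    consecutive_errors = 0
    while True:
        step = next_step(thread)
        thread.append({"type": "tool_call", "data": step})
        if step["intent"] == "done":
            return thread
        try:
            result = run_tool(step)
        except Exception as exc:
            consecutive_errors += 1
            # Keep the error short: type and message, not the full trace.
            thread.append({"type": "error", "data": f"{type(exc).__name__}: {exc}"})
            if consecutive_errors >= max_consecutive_errors:
                thread.append({"type": "escalate", "data": "too many consecutive errors"})
                return thread
            continue
        consecutive_errors = 0
        thread.append({"type": "tool_result", "data": result})


def fake_llm(thread: list[dict]) -> dict:
    """Stand-in for a real model: corrects its argument after seeing an error."""
    if not thread:
        return {"intent": "divide", "a": 1, "b": 0}
    if thread[-1]["type"] == "error":
        return {"intent": "divide", "a": 1, "b": 2}
    return {"intent": "done"}


def divide_tool(step: dict) -> str:
    return str(step["a"] / step["b"])


events = run_with_error_feedback(fake_llm, divide_tool)
print([event["type"] for event in events])
```

Resetting the counter after a successful call means only an unbroken run of failures triggers escalation.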

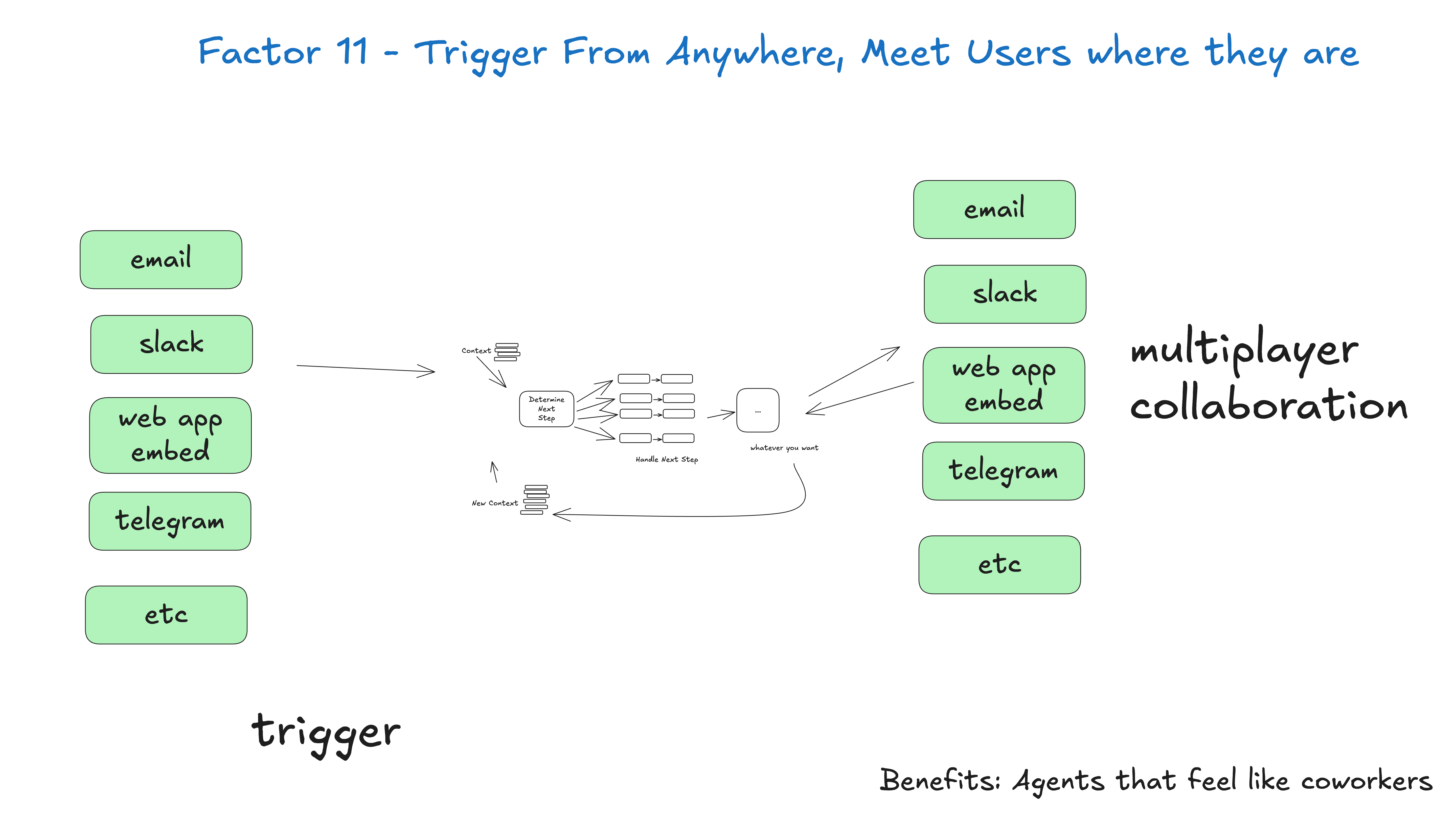

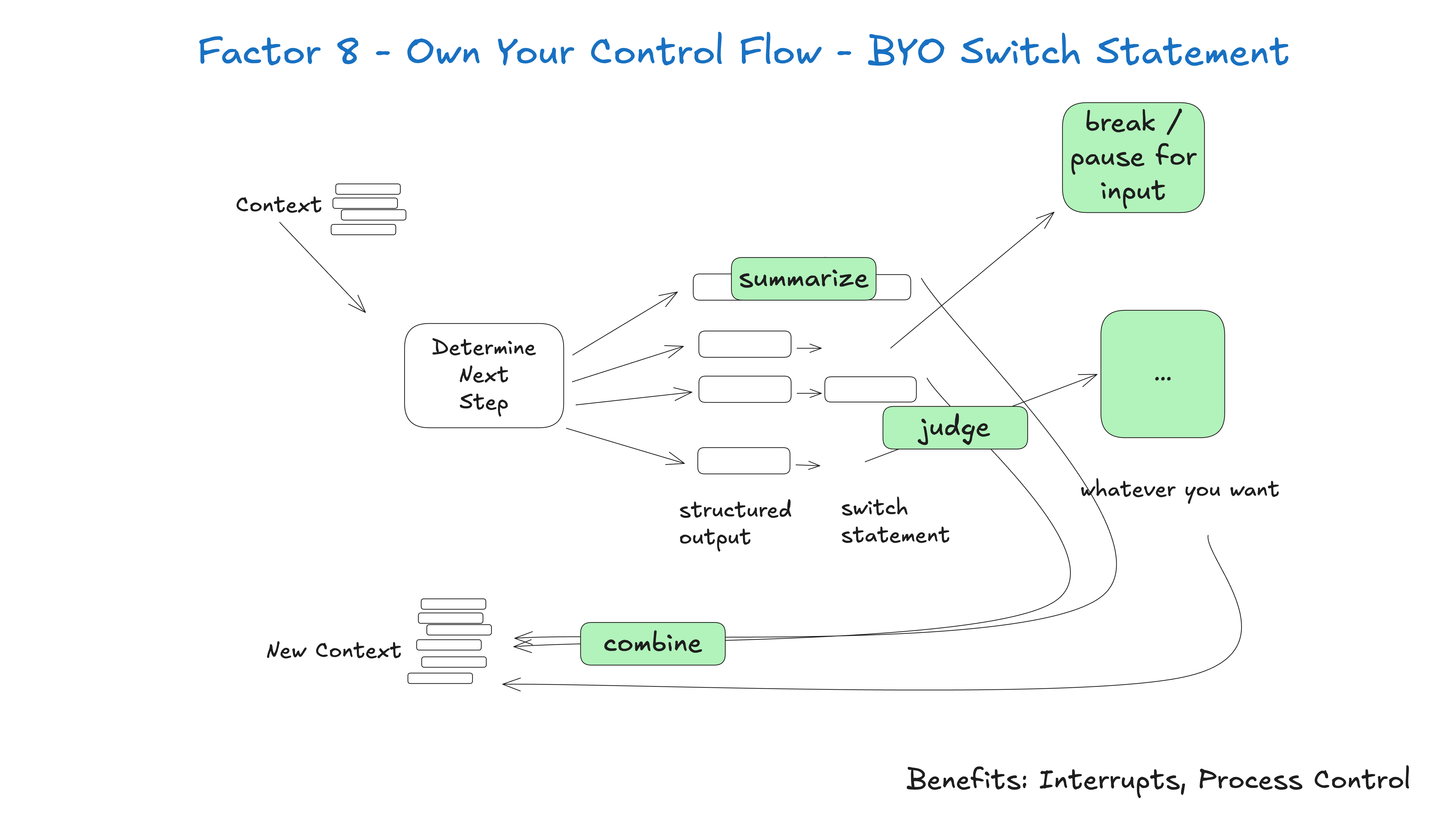

If you are in control of your own control flow, you can implement many interesting features. Build custom control structures that fit your particular use case. Specifically, certain types of tool calls might be a reason to jump out of a loop, wait for a human to respond, or wait for another long-running task (e.g., a training pipeline)...
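A minimal sketch of such a custom control structure, assuming a thread of dict events and two hypothetical intents that should pause the loop rather than run inline. The intent names and the step limit are illustrative assumptions.

```python
from typing import Callable

# Hypothetical intents that hand control to a human or a long-running job.
PAUSE_INTENTS = {"request_human_input", "start_training_pipeline"}


def run_until_pause(
    thread: list[dict],
    next_step: Callable[[list[dict]], dict],
    tools: dict[str, Callable[[dict], str]],
    max_steps: int = 20,
) -> str:
    """Drive the loop and return 'done', 'paused', or 'step_limit'."""
    for _ in range(max_steps):
        step = next_step(thread)
        thread.append({"type": "tool_call", "data": step})
        intent = step["intent"]
        if intent == "done":
            return "done"
        if intent in PAUSE_INTENTS:
            # Break out of the loop: the answer will arrive later.
            return "paused"
        # Ordinary tools run synchronously and the loop continues.
        thread.append({"type": "tool_result", "data": tools[intent](step)})
    return "step_limit"


# Scripted steps stand in for a real model.
script = iter(
    [
        {"intent": "lookup", "query": "status"},
        {"intent": "request_human_input", "question": "Deploy now?"},
    ]
)
thread: list[dict] = []
status = run_until_pause(thread, lambda _thread: next(script), {"lookup": lambda step: "ok"})
print(status, len(thread))
```

Because the thread is passed in and mutated, the caller can persist it at the pause point and continue later with the same function.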

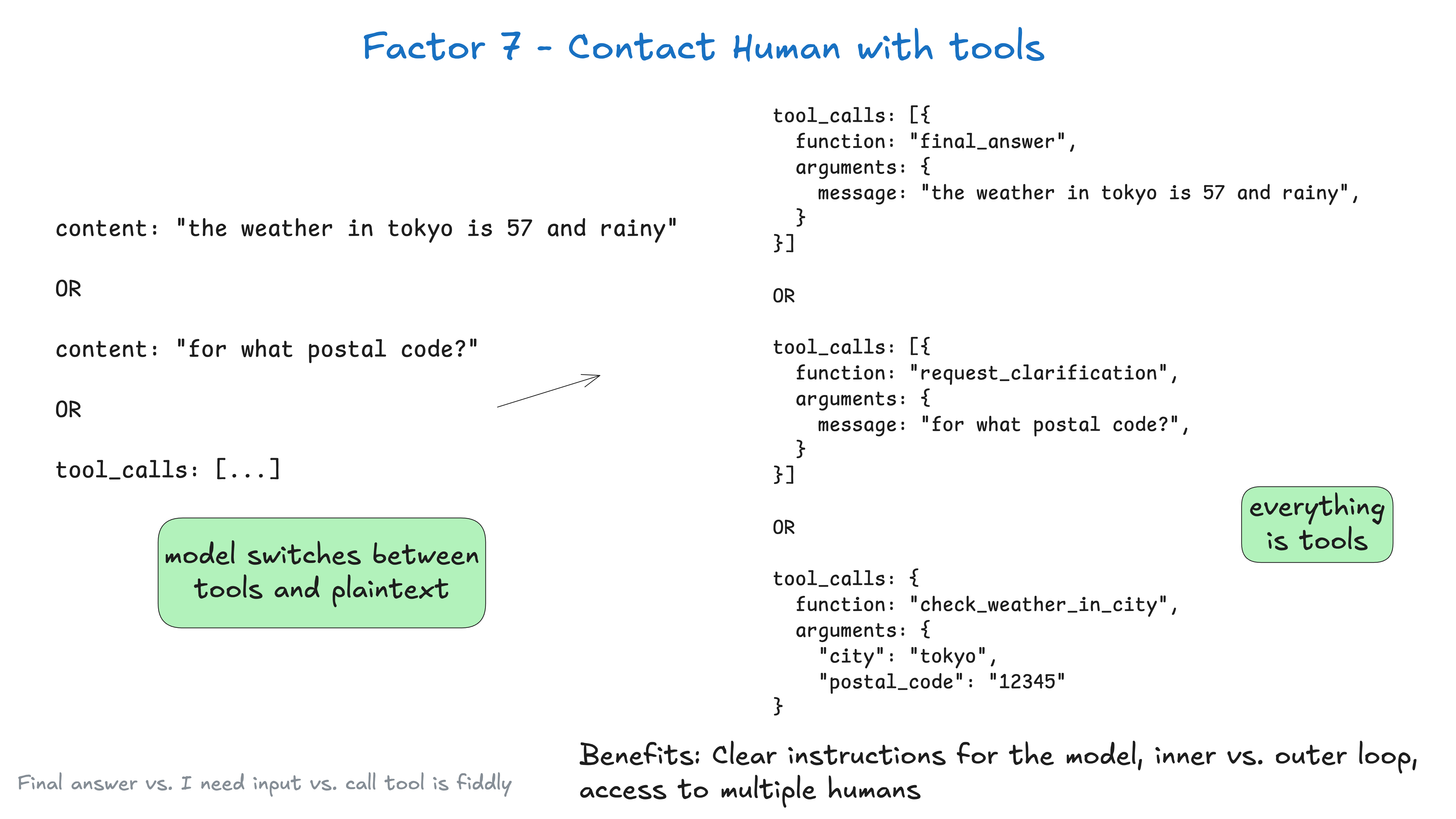

By default, large language model (LLM) APIs rely on a fundamentally high-stakes token choice: do we return plain text content, or do we return structured data? You put a lot of weight on the choice of that first token, which in the case of the weather in tokyo...

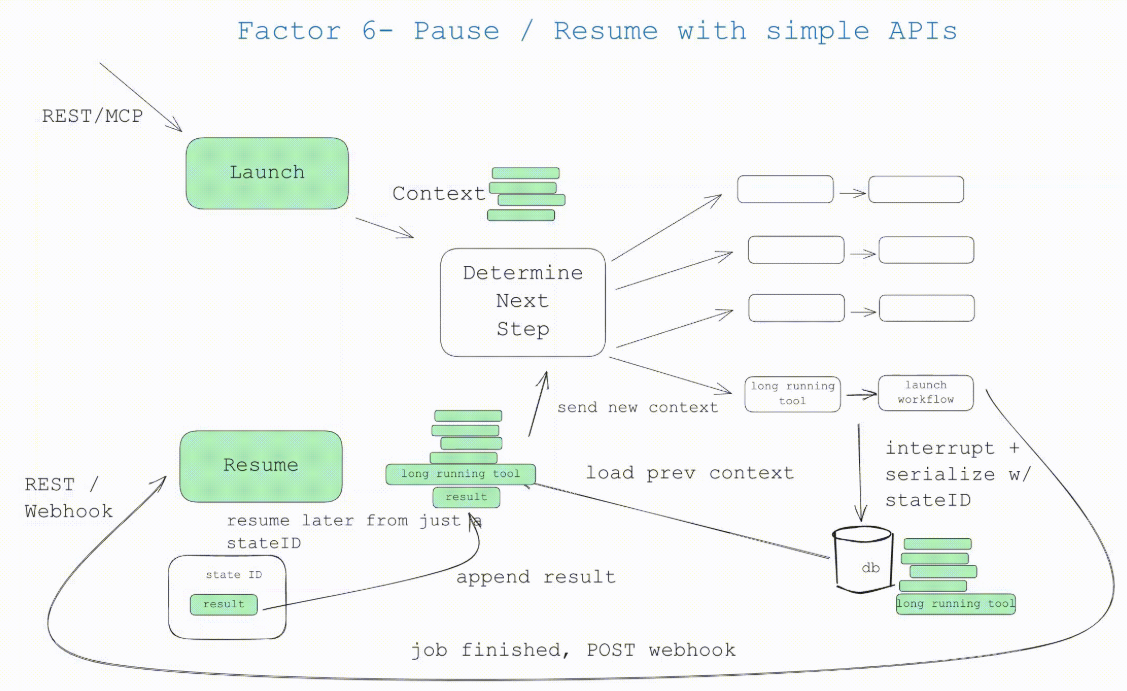

Agents are programs, and we expect to be able to start, query, resume, and stop them in some way. Users, applications, pipelines, and other agents should be able to start an agent easily with a simple API. When long-running operations need to be performed, agents and the deterministic code that orchestrates them...
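A minimal sketch of what a start/pause/resume surface could look like, assuming the agent's state is a list of events stored as JSON. `ThreadStore` is an in-memory stand-in for a real database, and the function names are illustrative.

```python
import json
import uuid


class ThreadStore:
    """In-memory stand-in for a database; threads are kept as JSON strings."""

    def __init__(self) -> None:
        self._rows: dict[str, str] = {}

    def save(self, thread_id: str, events: list[dict]) -> None:
        self._rows[thread_id] = json.dumps(events)

    def load(self, thread_id: str) -> list[dict]:
        return json.loads(self._rows[thread_id])


store = ThreadStore()


def start(message: str) -> str:
    """Create a new thread and return its id."""
    thread_id = uuid.uuid4().hex
    store.save(thread_id, [{"type": "user_message", "data": message}])
    return thread_id


def pause(thread_id: str, reason: str) -> None:
    """Record why the agent stopped so it can be picked up later."""
    events = store.load(thread_id)
    events.append({"type": "paused", "data": reason})
    store.save(thread_id, events)


def resume(thread_id: str, payload: str) -> list[dict]:
    """Append the external response and return the thread for the next step."""
    events = store.load(thread_id)
    events.append({"type": "resumed", "data": payload})
    store.save(thread_id, events)
    return events


thread_id = start("deploy the backend")
pause(thread_id, "awaiting approval")
resumed = resume(thread_id, "approved")
print([event["type"] for event in resumed])
```

Since every call round-trips through serialized state, the same three functions could sit behind HTTP handlers or a webhook without changes to the agent loop.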

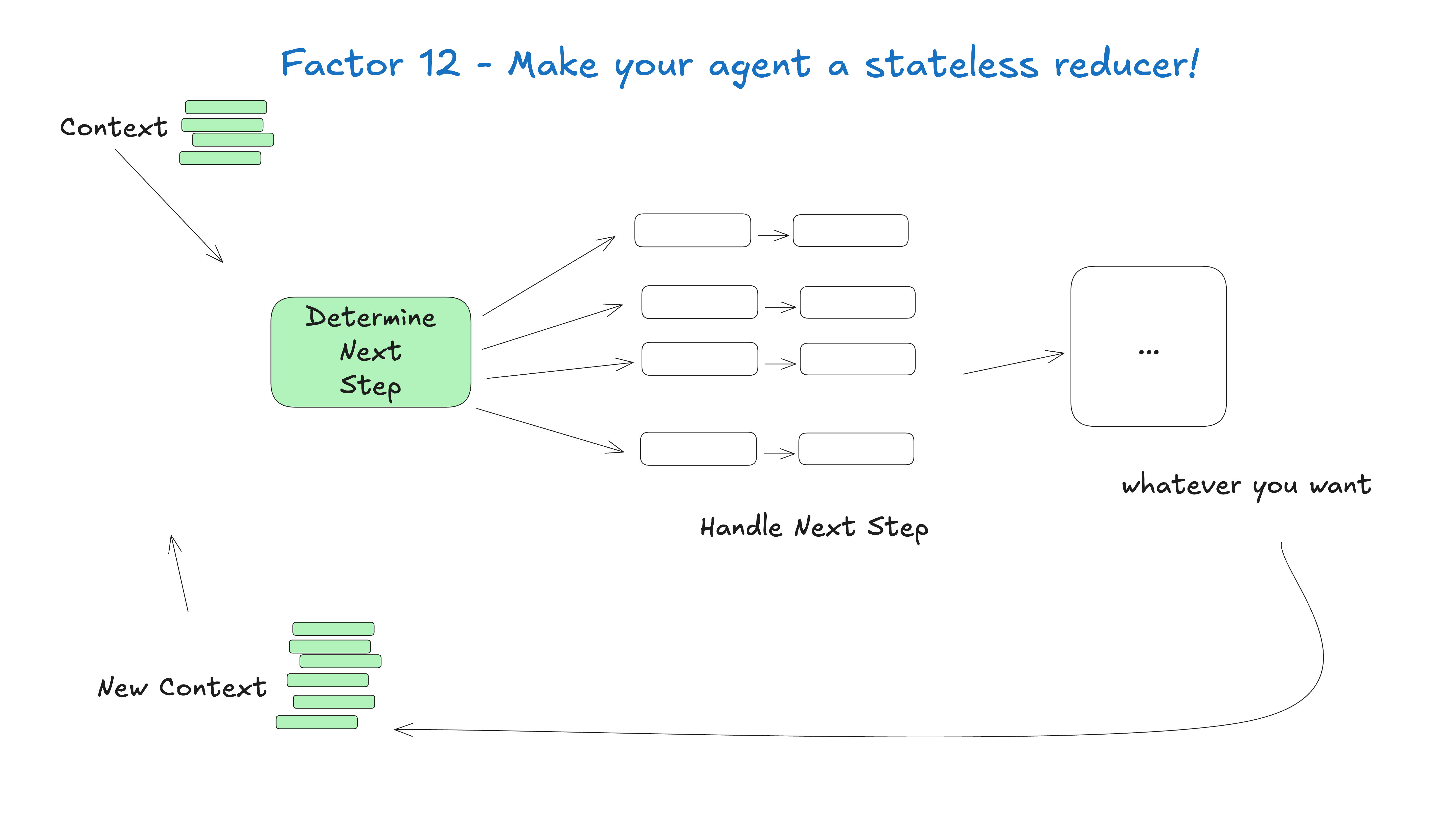

Even outside of the AI space, many infrastructure systems try to separate the "execution state" from the "business state". For AI applications, this can involve complex abstractions to keep track of information such as the current step, next step, wait state, number of retries, and so on. This separation introduces complexity, and while it may be worthwhile,...
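One way to avoid tracking execution state separately is to derive it from the event history on demand. The sketch below assumes a thread of dict events with hypothetical type names (`tool_call`, `tool_result`, `error`, `human_request`); it is an illustration of the idea, not a fixed schema.

```python
def derive_state(events: list[dict]) -> dict:
    """Compute execution state from the event history instead of storing it."""
    retries = 0
    # Count errors since the last successful tool result.
    for event in reversed(events):
        if event["type"] == "tool_result":
            break
        if event["type"] == "error":
            retries += 1
    return {
        "steps_taken": sum(1 for e in events if e["type"] == "tool_call"),
        "retries": retries,
        "waiting_for_human": bool(events) and events[-1]["type"] == "human_request",
    }


history = [
    {"type": "tool_call", "data": "deploy"},
    {"type": "error", "data": "timeout"},
    {"type": "tool_call", "data": "deploy"},
    {"type": "error", "data": "timeout"},
]
print(derive_state(history))
```

With this approach the thread is the single source of truth, so serializing the thread is enough to restore both the business data and the position in the workflow.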

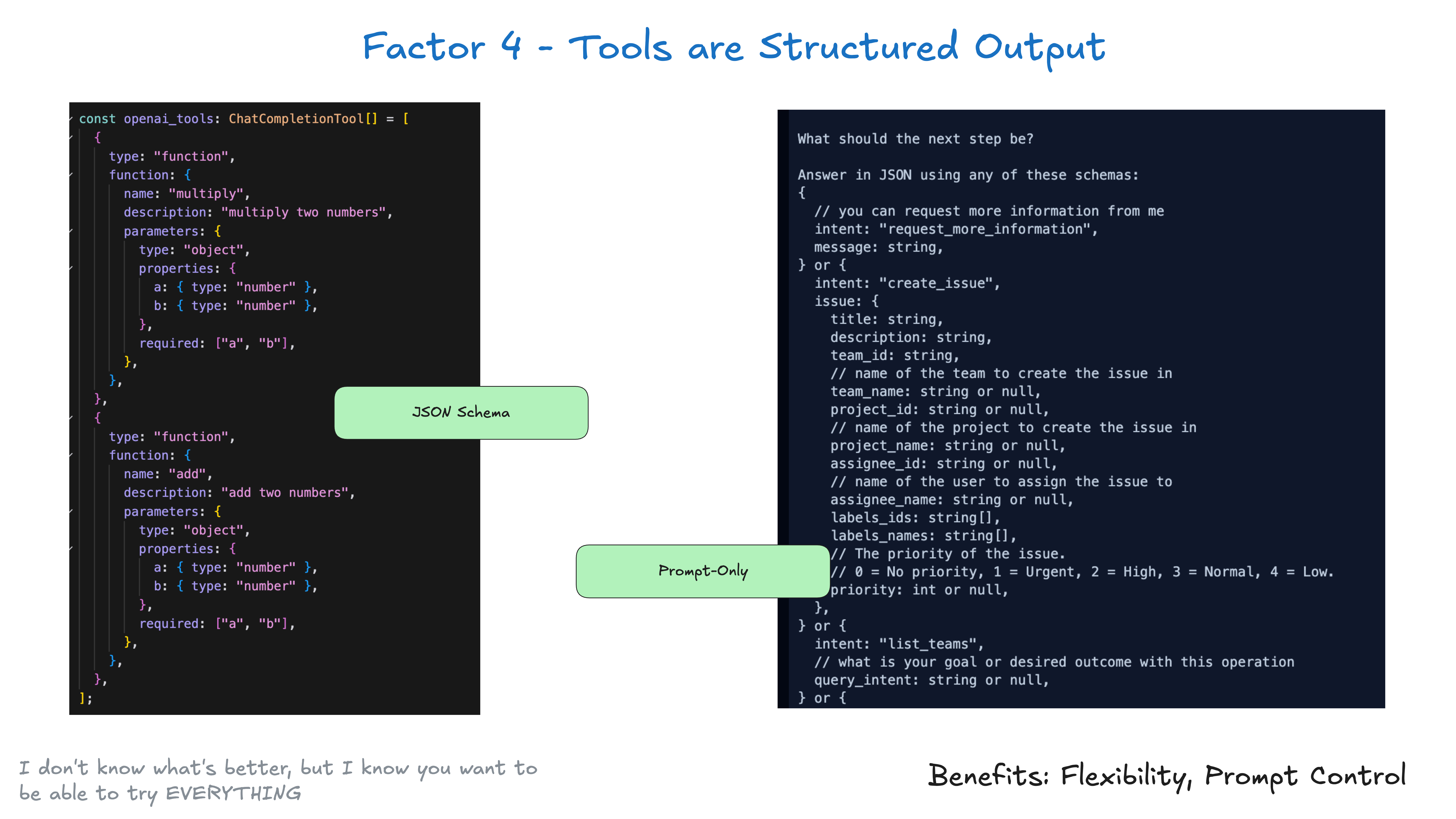

Tools need not be complex. At their core, they are just structured output from your large language model (LLM) that triggers deterministic code. For example, suppose you have two tools, CreateIssue and SearchIssues. Asking an LLM to "use one of the multiple tools" is really asking it to output...
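A minimal sketch of the CreateIssue / SearchIssues example, assuming the model emits a JSON object with an `intent` field. The field names and the `execute` handler are assumptions for illustration; only the two tool names come from the text.

```python
import json
from dataclasses import dataclass


@dataclass
class CreateIssue:
    title: str
    description: str = ""


@dataclass
class SearchIssues:
    query: str


# Maps the assumed "intent" value in the model output to a tool type.
TOOLS = {"create_issue": CreateIssue, "search_issues": SearchIssues}


def parse_tool_call(llm_output: str):
    """Turn the model's JSON output into a typed tool call."""
    payload = json.loads(llm_output)
    intent = payload.pop("intent")
    return TOOLS[intent](**payload)


def execute(call) -> str:
    """Deterministic code triggered by the structured output."""
    if isinstance(call, CreateIssue):
        return f"created issue: {call.title}"
    if isinstance(call, SearchIssues):
        return f"searched for: {call.query}"
    raise ValueError(f"unknown tool call: {call!r}")


# A hand-written string stands in for real model output.
result = execute(parse_tool_call('{"intent": "search_issues", "query": "login bug"}'))
print(result)
```

The model only chooses which object to emit; everything after parsing is ordinary code that can be tested without a model in the loop.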

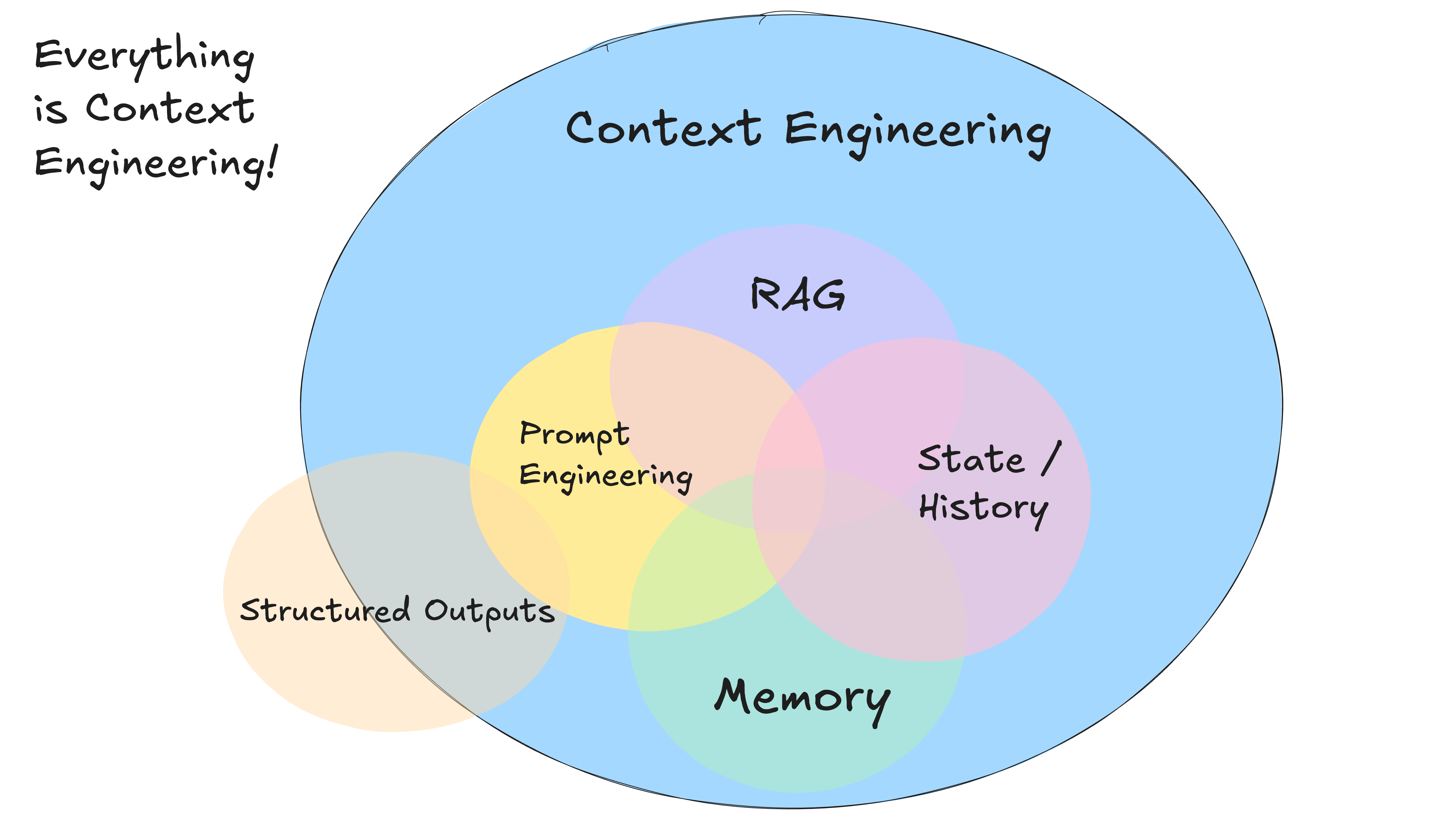

You don't have to use a standardized, message-based format to deliver context to the large language model (LLM). At any given moment, your input to the LLM in an AI agent is, "Here's everything that's happened so far, and here's what to do next." It's all context engineering. The LLM is...
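As one example of a non-message-based format, the sketch below renders a thread of events into a single tagged text block that can be passed as one prompt. The tag-per-event layout and the event fields are assumptions chosen for illustration; any format that suits your use case works.

```python
def render_event(event: dict) -> str:
    """Render one event as a tagged block named after its type."""
    data = event["data"]
    if isinstance(data, dict):
        body = "\n".join(f"{key}: {value}" for key, value in data.items())
    else:
        body = str(data)
    tag = event["type"]
    return f"<{tag}>\n{body}\n</{tag}>"


def render_thread(events: list[dict]) -> str:
    """Join all events into one context string for the model."""
    return "\n\n".join(render_event(event) for event in events)


context = render_thread(
    [
        {"type": "user_message", "data": "deploy backend"},
        {"type": "list_tags", "data": {"intent": "list_tags"}},
    ]
)
print(context)
```

Owning this function makes it easy to experiment with denser formats, drop resolved errors, or redact sensitive fields before anything reaches the model.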

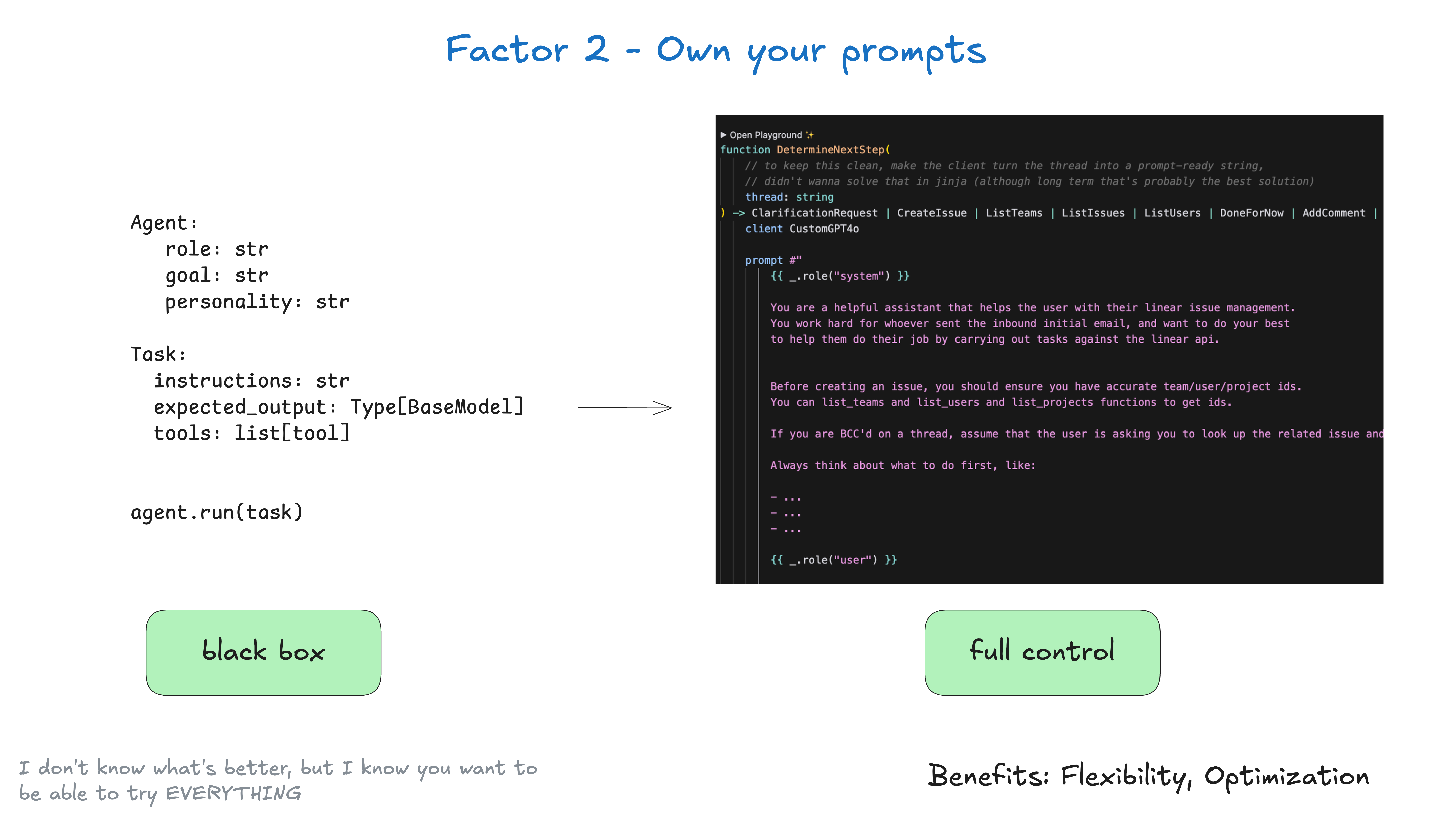

Don't outsource your input prompt engineering to a framework. By the way, this is far from being novel advice. Some frameworks provide a "black box" approach like this: agent = Agent(role="...", goal="...", personality="...", tools=...

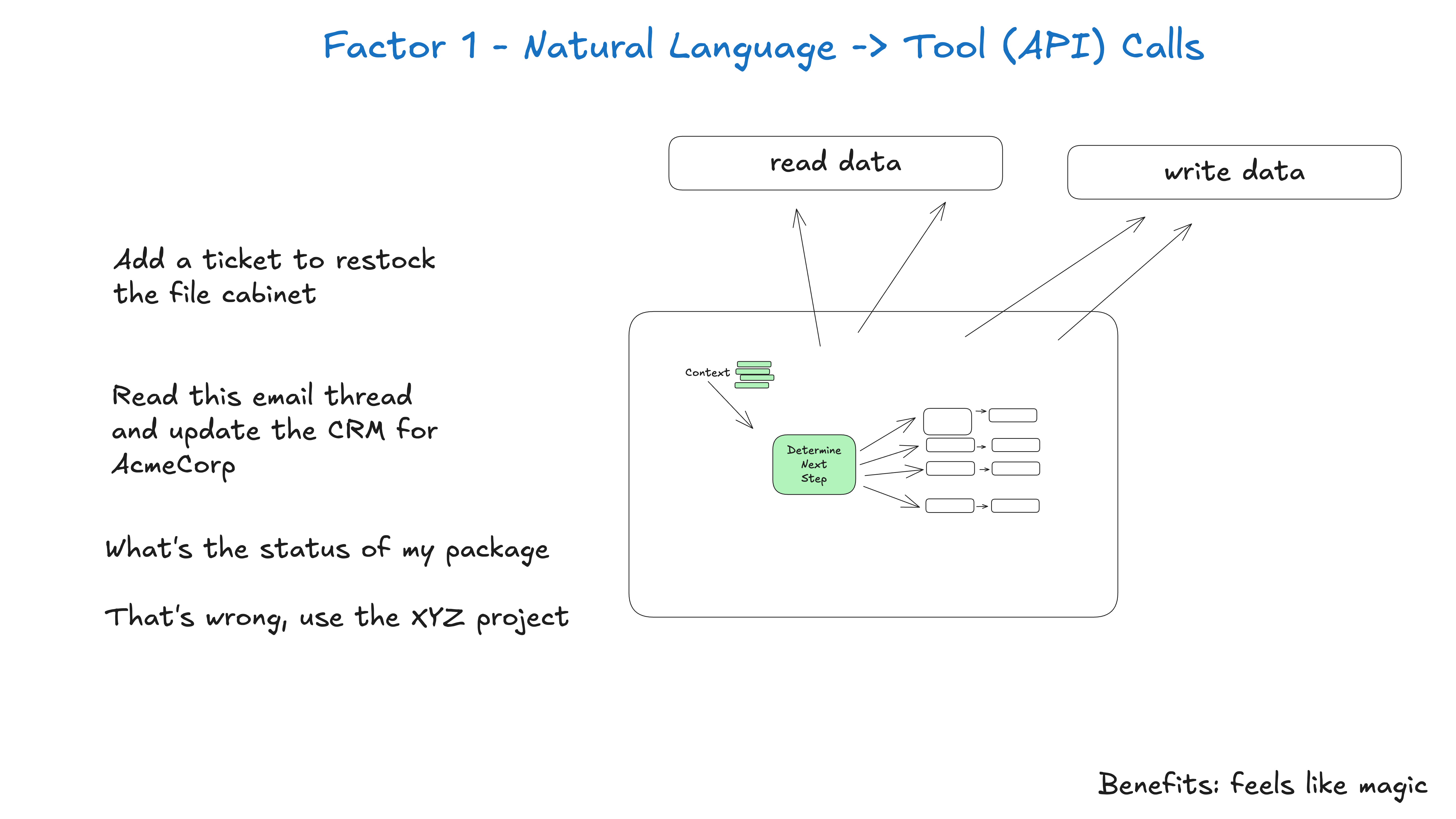

One of the most common patterns when building agents is converting natural language into structured tool calls. This is a powerful pattern that allows you to build agents that can reason about tasks and execute them. This pattern, when applied atomically, is to take a phrase (e.g.) that you can use for Ter...

Detailed version: how we got here. You don't have to listen to me. Whether you're new to agents or a grumpy veteran like me, I'm going to try to convince you to ditch most of your pre-existing views on AI agents, take a step back, and rethink them from first principles. (As...
