After releasing Gemma 3, a family of open models that run on a single cloud or desktop accelerator, followed by its quantization-aware training (QAT) variants, Google is expanding its push toward pervasive AI. If Gemma 3 brought powerful cloud and desktop capabilities to developers, the preview release of Gemma 3n on May 20, 2025 makes its ambitions for real-time, on-device AI on mobile clear: the goal is for the phones, tablets, and laptops we use every day to run high-performance AI directly.

To drive the next generation of on-device AI and support diverse application scenarios, including further enhancements to Gemini Nano's capabilities, Google's engineering team has built a new cutting-edge architecture. This architecture, reportedly developed in close collaboration with mobile hardware leaders such as Qualcomm, MediaTek, and Samsung's System LSI business, is optimized for lightning-fast responses and multimodal AI processing, and is designed to deliver a truly personal and private intelligent experience directly on the device.

Gemma 3n is the first open model built on this groundbreaking shared architecture, and developers can try the technology in an early preview starting today. Notably, the same architecture will also power the next generation of Gemini Nano, bringing enhancements to a wide range of features in Google apps and its on-device ecosystem; it is scheduled to arrive later this year. In other words, by getting started with Gemma 3n, developers are previewing the underlying technology that is coming to mainstream platforms such as Android and Chrome.

Note: This chart ranks AI models by Chatbot Arena Elo score; higher scores (the numbers at the top) indicate stronger user preference. Gemma 3n ranks highly among mainstream proprietary and open models.

A core innovation of Gemma 3n is Google DeepMind's Per-Layer Embeddings (PLE) technique, which significantly reduces the model's memory (RAM) usage. Although the models' raw parameter counts are 5 billion (5B) and 8 billion (8B), PLE lets them run with memory overhead comparable to 2B and 4B models, with dynamic memory footprints of only 2GB and 3GB respectively. This is a major benefit for memory-constrained mobile devices. More technical details are available in the official documentation.

By exploring Gemma 3n, developers can get a sneak peek at the core capabilities of this open source model and the mobile-first architectural innovations coming to the Android and Chrome platforms with Gemini Nano.

Key Capabilities of Gemma 3n

Gemma 3n is designed for fast, low-footprint AI experiences that run locally, with the following key features:

- Optimized on-device performance and efficiency: Compared with the Gemma 3 4B model, Gemma 3n starts responding approximately 1.5x faster on mobile devices, with significantly better output quality. This is made possible by innovations such as Per-Layer Embeddings (PLE), key-value cache sharing (KVC sharing), and advanced activation quantization, which together reduce the memory footprint.

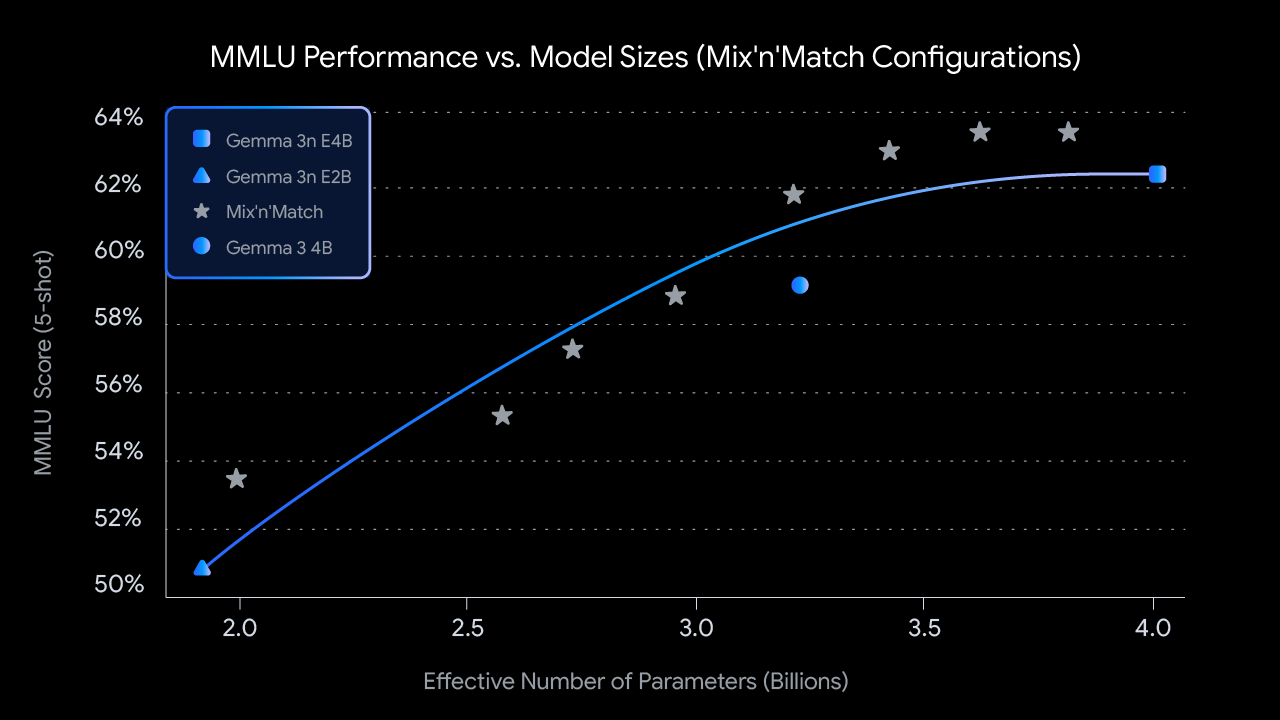

- Many-in-1 flexibility: Thanks to MatFormer training, the Gemma 3n model with a 4B active memory footprint natively includes a nested, industry-leading submodel with a 2B active memory footprint. This design lets the model dynamically trade off performance and quality without hosting multiple independent models. Gemma 3n also introduces a "mix'n'match" capability that lets developers dynamically create submodels from the 4B model to best fit specific use cases and their quality and latency requirements. More details on this research will be disclosed in a forthcoming technical report.

- Privacy first and offline availability: Because everything runs locally, features respect user privacy and keep working reliably even without a network connection.

- Expanded multimodal understanding with audio: Gemma 3n understands and processes audio, text, and images, and also offers significantly enhanced video understanding. Its audio capabilities let the model perform high-quality automatic speech recognition (transcription) and translation (speech to translated text). In addition, the model accepts interleaved inputs across modalities, enabling it to understand complex multimodal interactions. (Public implementation coming soon.)

- Enhanced multilingual capabilities: Gemma 3n has improved multilingual performance, especially in Japanese, German, Korean, Spanish, and French, achieving 50.1% on the WMT benchmark measured with ChrF. WMT (Workshop on Machine Translation) is an important evaluation in the field of machine translation, and ChrF (character n-gram F-score) is a commonly used machine translation metric.
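The first bullet above credits part of the memory savings to activation quantization. The source does not say which scheme Gemma 3n uses, so the following is only a generic illustration of the idea: a symmetric, per-tensor int8 quantize/dequantize round trip in NumPy. The function names `quantize_int8` and `dequantize_int8` are hypothetical.

```python
import numpy as np


def quantize_int8(x: np.ndarray) -> tuple[np.ndarray, float]:
    """Symmetric per-tensor int8 quantization (generic sketch, not Gemma 3n's scheme)."""
    max_abs = float(np.max(np.abs(x)))
    # Map [-max_abs, max_abs] onto [-127, 127]; avoid a zero scale for all-zero input.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(x / scale), -127, 127).astype(np.int8)
    return q, scale


def dequantize_int8(q: np.ndarray, scale: float) -> np.ndarray:
    """Recover an approximate float32 tensor from int8 values and the shared scale."""
    return q.astype(np.float32) * scale


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    activations = rng.standard_normal((4, 8)).astype(np.float32)
    q, scale = quantize_int8(activations)
    restored = dequantize_int8(q, scale)
    print("bytes fp32:", activations.nbytes, "bytes int8:", q.nbytes)
    print("max abs error:", float(np.max(np.abs(activations - restored))))
```

Int8 activations take a quarter of the bytes of float32 ones; in exchange, each element carries a rounding error of at most half the scale.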
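The ChrF score cited above rates a translation by how many character n-grams it shares with a reference. A simplified, sentence-level sketch of the standard definition: character n-gram precision and recall are averaged over n = 1 to 6 (ignoring whitespace) and combined into an F-score with β = 2, which favors recall. This is an illustration only; the helper names are hypothetical, and the reported benchmark number comes from the official evaluation, not from this code.

```python
from collections import Counter


def char_ngrams(text: str, n: int) -> Counter:
    """Count character n-grams, ignoring whitespace (chrF's default behavior)."""
    chars = "".join(text.split())
    return Counter(chars[i:i + n] for i in range(len(chars) - n + 1))


def chrf(hypothesis: str, reference: str, max_n: int = 6, beta: float = 2.0) -> float:
    """Simplified sentence-level chrF on a 0-100 scale."""
    precisions, recalls = [], []
    for n in range(1, max_n + 1):
        hyp, ref = char_ngrams(hypothesis, n), char_ngrams(reference, n)
        if not hyp or not ref:
            continue  # skip n-gram orders longer than one of the strings
        matches = sum((hyp & ref).values())  # clipped matches: min count per n-gram
        precisions.append(matches / sum(hyp.values()))
        recalls.append(matches / sum(ref.values()))
    if not precisions:
        return 0.0
    p = sum(precisions) / len(precisions)
    r = sum(recalls) / len(recalls)
    if p == 0 and r == 0:
        return 0.0
    b2 = beta ** 2
    return 100 * (1 + b2) * p * r / (b2 * p + r)


if __name__ == "__main__":
    print(chrf("the cat sat on the mat", "the cat sat on the mat"))
    print(chrf("the cat sat on a mat", "the cat sat on the mat"))
```

For published numbers, use a reference implementation such as sacreBLEU's chrF, which also aggregates statistics at the corpus level.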

Note: This chart shows the MMLU performance of Gemma 3n's mix'n'match (pretrained) capability at different model sizes. MMLU (Massive Multitask Language Understanding) is a comprehensive benchmark of language understanding.
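The nesting behind the "Many-in-1" bullet and the mix'n'match sizes in this chart can be sketched with a stack of feed-forward blocks in which a narrower variant simply uses the first `width` hidden units of the full weight matrices, so smaller submodels need no separate weights. The sketch assumes that prefix-slicing scheme, a ReLU activation, a residual connection, and toy dimensions; choosing a different width per layer stands in for mix'n'match. It illustrates the Matryoshka nesting idea and is not Gemma 3n's actual layer code; `NestedFFN` and `run_stack` are hypothetical names.

```python
import numpy as np


class NestedFFN:
    """Feed-forward block whose narrower variants reuse a prefix of the hidden units."""

    def __init__(self, d_model: int, d_hidden: int, rng: np.random.Generator):
        self.w_in = rng.standard_normal((d_model, d_hidden)) / np.sqrt(d_model)
        self.w_out = rng.standard_normal((d_hidden, d_model)) / np.sqrt(d_hidden)

    def __call__(self, x: np.ndarray, width: int) -> np.ndarray:
        # Use only the first `width` hidden units: the small model lives inside the big one.
        h = np.maximum(x @ self.w_in[:, :width], 0.0)
        return x + h @ self.w_out[:width, :]  # residual connection


def run_stack(layers, x, widths):
    """Mix'n'match: each layer may run at a different nested width."""
    for layer, width in zip(layers, widths):
        x = layer(x, width)
    return x


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    layers = [NestedFFN(16, 64, rng) for _ in range(4)]
    x = rng.standard_normal((2, 16))
    full = run_stack(layers, x, [64, 64, 64, 64])   # largest model
    small = run_stack(layers, x, [32, 32, 32, 32])  # nested submodel, same weights
    mixed = run_stack(layers, x, [64, 32, 48, 32])  # intermediate size
    print(full.shape, small.shape, mixed.shape)
```

Because every width shares a prefix of the same weights, only the largest weight set has to be stored, and the per-layer widths become a knob for trading quality against compute.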

Unlocking the potential for new mobile experiences

Gemma 3n is expected to power a new wave of intelligent mobile apps by:

- Building Real-Time Interactive Experiences: The ability to understand and respond to real-time visual and auditory cues from the user's environment.

- Enabling deeper understanding: Combining audio, image, video, and text inputs, processed privately on the device, to generate more context-aware text.

- Developing advanced audio-centric applications: Including real-time speech transcription, translation, and rich voice-driven interactions.

The following video outlines the types of experiences you can build:

Building responsibly, together

A commitment to responsible AI development is essential. Like all models in the Gemma family, Gemma 3n has undergone rigorous safety evaluations, data governance, and alignment fine-tuning in accordance with Google's safety policies. Google says it approaches open models with careful risk assessment and continues to refine its practices as the AI landscape evolves.

In-depth analysis of Gemma 3n model parameters

Gemma 3n is a generative AI model optimized for everyday devices such as phones, laptops, and tablets. The model includes innovations in parameter-efficient processing, such as the Per-Layer Embedding (PLE) parameter caching mentioned above and the MatFormer model architecture, which provides the flexibility to reduce compute and memory requirements. The models can process audio input as well as text and visual data.

Preview stage and licensing: Gemma 3n is currently in early preview. Users can try it and learn more in Google AI Studio and Google AI Edge. Like other Gemma models, Gemma 3n provides open weights and is licensed for responsible commercial use, allowing developers to fine-tune and deploy it in their own projects and applications.

At a technical level, Gemma 3n's key features break down as follows:

- Audio input: Processes sound data for speech recognition, translation, and audio data analysis.

- Visual and text input: Multimodal capabilities allow it to process visual, audio and textual information to help understand and analyze the world around it.

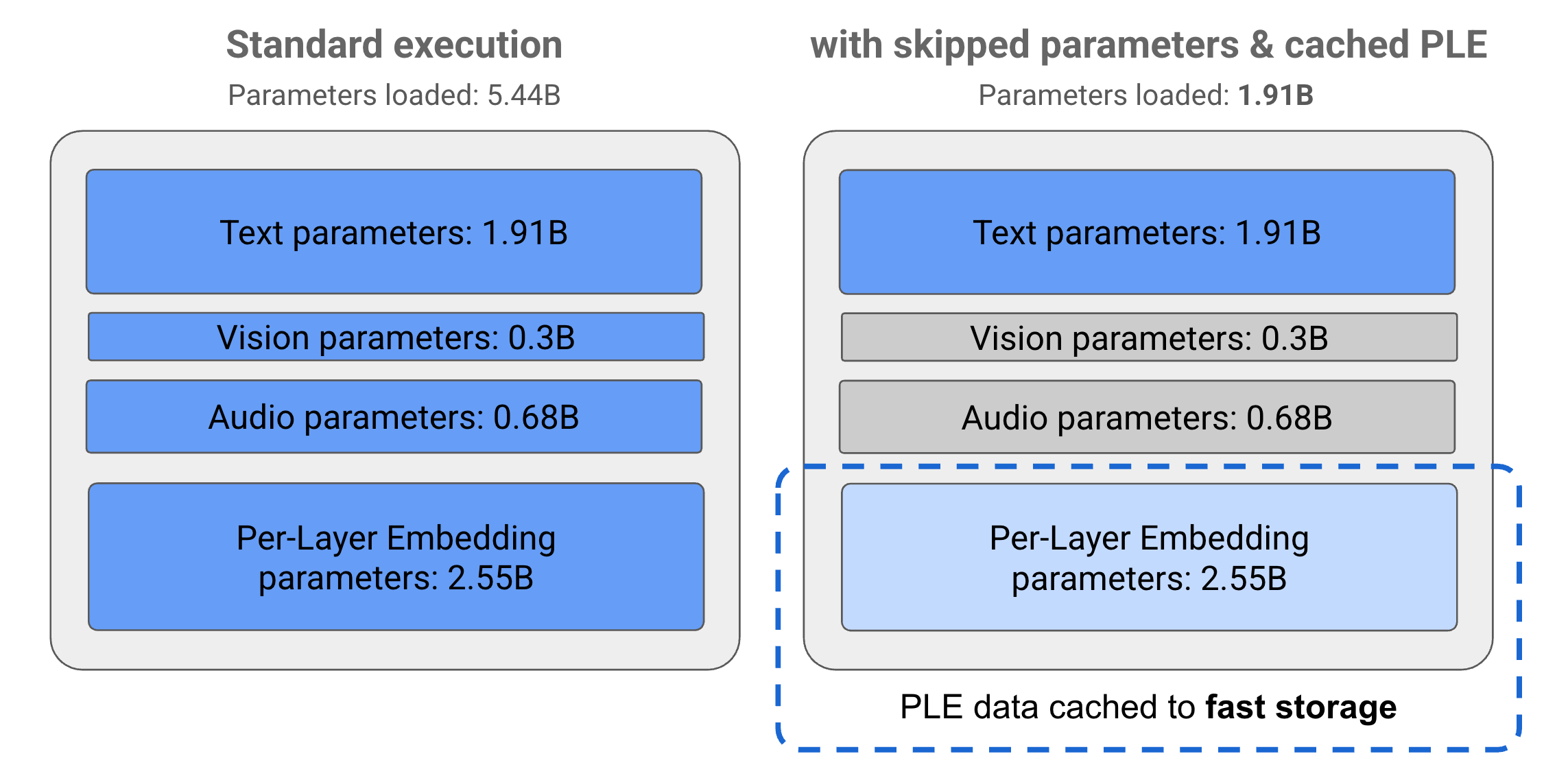

- PLE caching: The Per-Layer Embedding (PLE) parameters contained in the model can be cached to fast local storage to reduce memory costs at runtime. PLE data is used during model execution to create data that enhances the performance of each model layer. Because the PLE parameters are kept out of the model's main memory space, this reduces resource consumption while still improving response quality.

- MatFormer architecture: A Matryoshka Transformer ("nested" Transformer) architecture that nests smaller models inside a larger one. These nested submodels can serve a request without activating all parameters of the outer model. Running only the smaller core model inside a MatFormer model can significantly reduce computational cost, response time, and energy consumption. For Gemma 3n, the E4B model contains the parameters of the E2B model. The architecture also lets developers select parameters to assemble models of intermediate sizes between 2B and 4B. More details can be found in the MatFormer research paper.

- Conditional parameter loading: Similar to PLE parameters, certain parameters in the model (for example, audio or visual parameters) can be skipped when loading the model into memory, to minimize memory load. These parameters can be loaded dynamically at runtime if the device has the required resources. Overall, parameter skipping further reduces the runtime memory required by Gemma 3n models, allowing them to run on a wider range of devices and letting developers use resources more efficiently for less demanding tasks.

- Broad language support: Trained on data in over 140 languages.

- 32K token context: Provides sufficient input context length for analyzing data and processing tasks.
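The PLE caching described above, keeping a large per-layer table on fast storage and pulling in only the rows needed as each layer runs, can be sketched with a NumPy memory-mapped file. The table layout (layers × vocabulary × dimension), the `PLECache` class, and the toy "add then tanh" layer are all assumptions for illustration; the source does not describe Gemma 3n's on-disk format or how PLE data is combined with each layer.

```python
import os
import tempfile

import numpy as np


class PLECache:
    """Hypothetical per-layer embedding store kept on disk and read on demand."""

    def __init__(self, path: str):
        # mmap_mode="r" maps the file instead of reading it all into RAM.
        self.table = np.load(path, mmap_mode="r")  # shape: (layers, vocab, dim)

    def lookup(self, layer: int, token_ids: np.ndarray) -> np.ndarray:
        # Indexing copies only the requested rows of one layer into memory.
        return np.asarray(self.table[layer, token_ids], dtype=np.float32)


def forward(hidden: np.ndarray, token_ids: np.ndarray, cache: PLECache) -> np.ndarray:
    """Toy forward pass: each 'layer' adds its per-layer embedding, then applies tanh."""
    for layer in range(cache.table.shape[0]):
        hidden = np.tanh(hidden + cache.lookup(layer, token_ids))
    return hidden


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    num_layers, vocab, dim = 4, 1000, 8
    path = os.path.join(tempfile.mkdtemp(), "ple_table.npy")
    np.save(path, rng.standard_normal((num_layers, vocab, dim)).astype(np.float16))

    cache = PLECache(path)
    tokens = np.array([5, 42, 7])
    hidden = np.zeros((len(tokens), dim), dtype=np.float32)
    print(forward(hidden, tokens, cache).shape)
```

The point of the design is that resident memory scales with the number of tokens being processed, not with the size of the full table, which stays on storage.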

Note: For more information, see the Android Gemini Nano developer documentation.

The Mystery of Model Parameters and "Effective Parameters"

Gemma 3n models are named with parameter counts such as E2B and E4B, which are lower than the total number of parameters the models contain. The E prefix indicates that the models can operate with a reduced set of Effective parameters. This reduced-parameter operation is made possible by the flexible parameter techniques built into Gemma 3n models, which help them run efficiently on lower-resource devices.

The parameters of Gemma 3n models fall into four main groups: text parameters, visual parameters, audio parameters, and Per-Layer Embedding (PLE) parameters. In the standard execution mode of the E2B model, over 5 billion parameters are loaded at runtime. However, by using parameter skipping and PLE caching, the model can run with an effective memory load of just under 2 billion (1.91B) parameters, as shown in the figure below.

Figure 1. Gemma 3n E2B model parameters in standard execution mode vs. using PLE caching and parameter skipping techniques to achieve effectively lower parameter loads.

Using these parameter offloading and selective activation techniques, the model can run with a very lean set of parameters, or activate additional parameters to handle other data types such as images and audio. These features let users adjust model functionality to device capabilities or task requirements.
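The bookkeeping behind "effective parameters" can be sketched as a tracker that keeps parameter groups out of memory until a task needs them, and that never counts groups served from fast storage. The group names mirror the four categories above, but the sizes in the usage example are made-up placeholders, not Gemma 3n's real breakdown. `ParameterGroups` and its methods are hypothetical and only count parameters; they do not load real weights.

```python
from dataclasses import dataclass, field


@dataclass
class ParameterGroups:
    """Tracks which parameter groups are resident in memory (counting only, no real weights)."""

    sizes: dict[str, int]          # parameters per group
    cached_to_storage: set[str]    # groups served from fast storage (e.g. PLE), never resident
    resident: set[str] = field(default_factory=set)

    def load_for_task(self, modalities: set[str]) -> None:
        # Text weights are always needed; other groups load only when the task uses them.
        wanted = {"text"} | modalities
        self.resident = {g for g in wanted if g in self.sizes and g not in self.cached_to_storage}

    def total(self) -> int:
        return sum(self.sizes.values())

    def effective(self) -> int:
        return sum(self.sizes[g] for g in self.resident)


if __name__ == "__main__":
    groups = ParameterGroups(
        sizes={"text": 1_000, "vision": 300, "audio": 200, "ple": 2_500},  # placeholder numbers
        cached_to_storage={"ple"},
    )
    groups.load_for_task(set())
    print(groups.total(), groups.effective())  # 4000 1000
    groups.load_for_task({"audio"})
    print(groups.effective())  # 1200
```

The gap between `total()` and `effective()` is the kind of gap the E prefix describes: the model owns more parameters than it keeps in memory for a given task.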

Getting Started: Previewing Gemma 3n

Gemma 3n is now available in early preview:

- Cloud-based exploration with Google AI Studio: Try Gemma 3n directly in your browser in Google AI Studio, with no setup required, and explore its text input capabilities instantly.

- On-device development with Google AI Edge: For developers who want to integrate Gemma 3n locally, Google AI Edge provides the tools and libraries to do so. Developers can start using its text and image understanding/generation capabilities today.

Gemma 3n marks a new step in popularizing cutting-edge, efficient AI. As the technology opens up, it's exciting to see what innovations it will bring to the developer community, starting with today's preview.

You can explore this announcement and all Google I/O 2025 updates on io.google starting May 22.

Interested in starting to build? Why not start with the Getting Started Guide for Gemma Models.