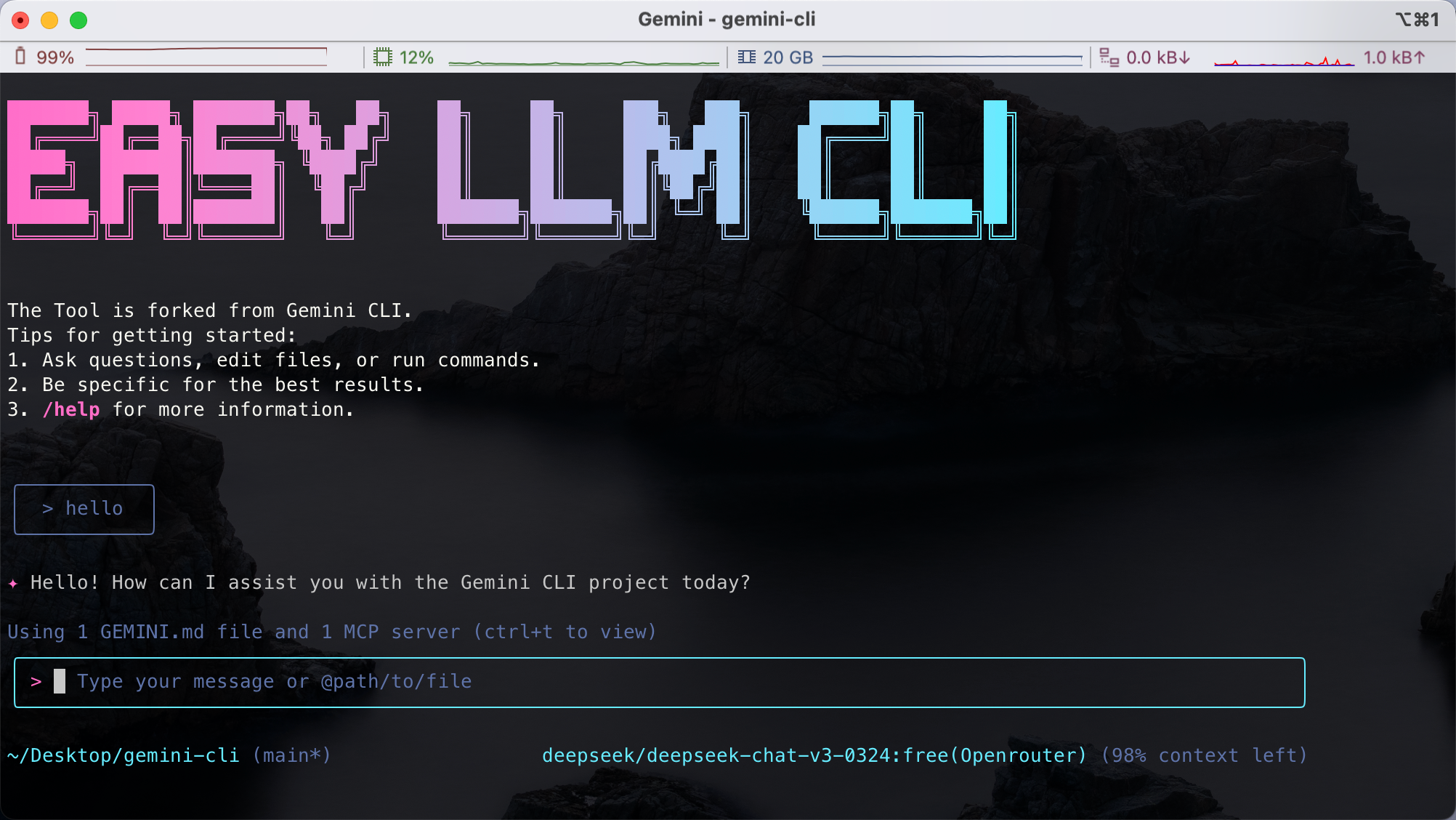

easy-llm-cli is an open-source command-line tool based on Google's Gemini CLI. It was developed to help developers invoke multiple large language models (LLMs) with simple commands. It supports the APIs of OpenAI, Gemini, Claude, and many other models, and is compatible with any model that follows the OpenAI API format. From the terminal, users can quickly generate text, analyze code, process multimodal inputs (such as images or PDFs), and even automate complex development tasks. The tool is lightweight, modular, and supports custom configuration, making it suitable for local or production environments. The project is hosted on GitHub, has an active community and detailed documentation, and is easy to get started with.

Function List

- Supports a variety of large language models, including Gemini, OpenAI, Claude, Qwen, and more.

- Provides a command line interface to quickly generate text, answer questions, or process input.

- Supports multimodal input and analyzes non-text content such as images and PDFs.

- Provides code analysis features to generate code comments or summarize codebase changes.

- Supports automated workflows such as processing Git commits and generating reports or charts.

- Allows customized model configuration and flexible switching of LLM providers via environment variables.

- Supports MCP servers, connecting external tools to extend functionality.

- Open source project with support for community contributions and local deployment.

Usage Guide

Installation process

To use easy-llm-cli locally, you need to complete the following installation steps. Make sure your system meets the minimum requirements: Node.js 20 or higher.

1. Install Node.js

Visit the official Node.js website to download and install Node.js (version 20.x or higher is recommended). Run the following command to check the version:

node -v
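The version check above can also be scripted. The following is a minimal sketch, assuming `node -v` prints a string such as `v20.11.1`; `node_version_ok` is a hypothetical helper name and not part of easy-llm-cli.

```bash
# Hypothetical helper: succeeds when a Node.js version string (e.g. "v20.11.1")
# has a major version of at least 20.
node_version_ok() {
  local version="${1#v}"        # strip the leading "v"
  local major="${version%%.*}"  # keep everything before the first dot
  [ "$major" -ge 20 ] 2>/dev/null
}

# Only call node if it is actually on the PATH.
if command -v node >/dev/null 2>&1 && node_version_ok "$(node -v)"; then
  echo "Node.js $(node -v) meets the requirement"
else
  echo "Node.js 20 or higher is required"
fi
```

Keeping the comparison in a function that takes the version string as an argument makes it easy to reuse in a setup script without calling `node` more than once.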

2. Quick run

There is no need to clone the repository; you can run the CLI directly with npx:

```bash
npx easy-llm-cli
```

This will automatically download and launch the tool for a quick trial.

3. Global installation (recommended)

If you plan to use it for a long period of time, it is recommended to install it globally:

npm install -g easy-llm-cli

After the installation is complete, run the following command to start it:

elc

4. Installation from source (optional)

If you need to customize the tool or contribute code, you can clone the repository:

git clone https://github.com/ConardLi/easy-llm-cli.git

cd easy-llm-cli

npm install

npm run start

5. Configuring API keys

easy-llm-cli needs to be configured with your LLM provider's API key. For example, to use OpenAI's API:

export CUSTOM_LLM_PROVIDER="openai"

export CUSTOM_LLM_API_KEY="sk-xxxxxxxxxxxxxxxxxxxx"

export CUSTOM_LLM_ENDPOINT="https://api.openai.com/v1"

export CUSTOM_LLM_MODEL_NAME="gpt-4o-mini"

Replace `sk-xxxxxxxxxxxxxxxxxxxx` with your actual key. You can add these commands to your `.bashrc` or `.zshrc` to persist the configuration.

6. Docker installation (optional)

If you prefer containerized deployment, you can use Docker:

docker build -t easy-llm-cli .

docker run -d -p 1717:1717 --name easy-llm-cli easy-llm-cli

Make sure that port 1717 is not already in use.
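Before launching the tool, it can help to confirm that the environment variables from the API key step are actually set. The sketch below assumes the four variables from the OpenAI example are the ones your setup needs; `missing_vars` is a hypothetical helper, not a command provided by easy-llm-cli.

```bash
# Hypothetical helper (not part of easy-llm-cli): prints the name of every
# variable passed as an argument that is unset or empty.
missing_vars() {
  local name value
  for name in "$@"; do
    # Look up the variable whose name is stored in $name.
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "$name"
    fi
  done
}

missing="$(missing_vars CUSTOM_LLM_PROVIDER CUSTOM_LLM_API_KEY CUSTOM_LLM_ENDPOINT CUSTOM_LLM_MODEL_NAME)"
if [ -n "$missing" ]; then
  # Unquoted on purpose so the names print on a single line.
  echo "Missing configuration:" $missing
else
  echo "All listed CUSTOM_LLM_* variables are set"
fi
```

Adjust the list of names passed to `missing_vars` if your provider does not need all four.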

Main Functions

The core of easy-llm-cli is invoking large language models from the command line to accomplish text generation, code analysis, and automation tasks. The following sections describe how to do this in detail:

1. Text generation

Run the following command to generate text:

elc "Write a 200-word introduction to Python"

- Operating Instructions: `elc` is the tool's startup command, followed by a prompt. The configured model (e.g. Gemini or OpenAI) is used by default. You can switch models via environment variables.

- Output: The model returns a 200-word introduction to Python in a clear format.

2. Code analysis

Run the following command in the project directory to analyze the code base:

cd my-project

elc "Summarize yesterday's Git commits"

- Operating Instructions: The tool scans the Git history of the current directory and generates a summary of the commits.

- Output: Returns commit descriptions grouped by time or feature.

3. Multimodal inputs

easy-llm-cli can process images and PDFs. For example, to extract text from a PDF:

elc "Extract the text from the PDF" -f document.pdf

- Operating Instructions: The `-f` parameter specifies the file path. Supported formats include PDF and images (JPEG, PNG, etc.).

- Output: Returns the extracted text content.

4. Automated workflows

Through an MCP server, the tool can connect to external systems. For example, to generate a chart of the Git history:

elc "Generate a bar chart of Git commits from the past 7 days"

- Operating Instructions: An MCP server needs to be configured (e.g. `@antv/mcp-server-chart`). The tool calls the model to generate the chart data and renders it via MCP.

- Output: Returns bar chart data or the rendered visualization.

5. Customized model configuration

You can switch to other models, such as Claude, via environment variables:

export CUSTOM_LLM_PROVIDER="openrouter"

export CUSTOM_LLM_API_KEY="your-openrouter-key"

export CUSTOM_LLM_MODEL_NAME="claude-sonnet-4"

elc "Analyze what this code does" < myfile.py

- Operating Instructions: After setting the environment variables, run the command to use the new model.

- Output: Returns the Claude model's analysis of the code.
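If you switch providers often, the exports shown in this guide can be wrapped in a small shell function. This is a minimal sketch under stated assumptions: `use_llm_profile` is a hypothetical helper, and `OPENAI_KEY` and `OPENROUTER_KEY` are placeholder variable names for wherever you keep your keys; easy-llm-cli itself does not read them. The provider, endpoint, and model values are the ones used in the examples above.

```bash
# Hypothetical helper: exports the CUSTOM_LLM_* variables for a named profile.
# OPENAI_KEY and OPENROUTER_KEY are placeholder names, not read by the tool.
use_llm_profile() {
  case "$1" in
    openai)
      export CUSTOM_LLM_PROVIDER="openai"
      export CUSTOM_LLM_API_KEY="${OPENAI_KEY:-}"
      export CUSTOM_LLM_ENDPOINT="https://api.openai.com/v1"
      export CUSTOM_LLM_MODEL_NAME="gpt-4o-mini"
      ;;
    openrouter)
      export CUSTOM_LLM_PROVIDER="openrouter"
      export CUSTOM_LLM_API_KEY="${OPENROUTER_KEY:-}"
      export CUSTOM_LLM_MODEL_NAME="claude-sonnet-4"
      # The OpenRouter example above sets no endpoint, so clear any stale
      # value left over from another profile; set your own here if needed.
      unset CUSTOM_LLM_ENDPOINT
      ;;
    *)
      echo "unknown profile: $1" >&2
      return 1
      ;;
  esac
}

use_llm_profile openrouter && echo "Now using $CUSTOM_LLM_MODEL_NAME via $CUSTOM_LLM_PROVIDER"
```

Placing the function in `.bashrc` or `.zshrc` makes a switch a single command, for example `use_llm_profile openai`, before running `elc`.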

Featured Functions

1. Multimodal support

easy-llm-cli can handle multimodal input such as images and PDFs. For example, to generate an application from a sketch:

elc "Generate web application code based on sketch.jpg" -f sketch.jpg

- Operating Instructions: Upload a design sketch and the model will generate the corresponding HTML/CSS/JavaScript code.

- Output: Returns a complete web application code skeleton.

2. Automated tasks

The tool can handle complex development tasks. For example, automating Git operations:

elc "Implement the code for GitHub Issue #123"

- Operating Instructions: The tool reads the issue description, generates the implementation code, and submits a pull request.

- Output: Returns the generated code and a link to the PR.

3. Programmatic integration

Developers can integrate easy-llm-cli into their code. For example, using Node.js:

import { ElcAgent } from 'easy-llm-cli';

const agent = new ElcAgent({

model: 'gpt-4o-mini',

apiKey: 'your-api-key',

endpoint: 'https://api.openai.com/v1'

});

const result = await agent.run('Generate a bar chart of the sales data');

console.log(result);

- Operating Instructions: Call the model through the `ElcAgent` class, which is suitable for embedding in automation scripts.

- Output: Returns the processing results, such as chart data.

Notes

- Ensure that the API key is valid and that the network connection is stable.

- Multimodal functionality requires model support (see the official test table). For example, Gemini-2.5-pro and GPT-4.1 support multimodality, while some models, such as DeepSeek-R1, do not.

- When running complex tasks, check that `CUSTOM_LLM_MAX_TOKENS` is sufficient (default 8192).

- If you encounter problems, check the troubleshooting documentation or run:

elc logs
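The token limit mentioned in the notes can be inspected before a long task. The sketch below assumes the 8192 default stated above applies whenever `CUSTOM_LLM_MAX_TOKENS` is unset; `check_max_tokens` is a hypothetical helper, not an easy-llm-cli command.

```bash
# Hypothetical helper: prints the token limit that would be in effect,
# falling back to the documented default of 8192 when the variable is unset.
check_max_tokens() {
  local value="${CUSTOM_LLM_MAX_TOKENS:-8192}"
  case "$value" in
    *[!0-9]*)
      # Anything other than digits is rejected.
      echo "CUSTOM_LLM_MAX_TOKENS must be a whole number, got: $value" >&2
      return 1
      ;;
  esac
  echo "$value"
}

limit="$(check_max_tokens)" && echo "Token limit in effect: $limit"
```

To raise the limit for a single session, export a larger value, for example `export CUSTOM_LLM_MAX_TOKENS=16384`, before running `elc`.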

Application Scenarios

- Codebase analysis

Developers can run `elc "Describe the system architecture"` in a new project to quickly understand the code structure, which is useful when taking over an unfamiliar project.

- Generating application prototypes

Designers can upload a sketch or PDF and run `elc "Generate a web application"` to quickly get a runnable code prototype.

- Automating DevOps tasks

DevOps engineers can run `elc "Generate a report of Git commits from the last 7 days"` to automatically summarize the team's progress.

- Document processing

Researchers can use `elc "Extract the key information from the PDF" -f paper.pdf` to quickly organize the content of academic papers.

FAQ

- What models does easy-llm-cli support?

It supports Gemini, OpenAI, Claude, Qwen, and many others, as well as any custom model that follows the OpenAI API format. See the official test table for details.

- How do I handle multimodal inputs?

Use the `-f` parameter to upload an image or PDF, for example `elc "Extract the text" -f file.pdf`. Make sure the model supports multimodality (e.g. Gemini-2.5-pro).

- Is a paid API required?

Remote models require a valid API key (e.g. a paid key for OpenAI or OpenRouter). Local models such as Qwen2.5-7B-Instruct do not incur additional fees.

- How do I debug errors?

Run `elc logs` to view the logs, or consult the troubleshooting documentation for help.