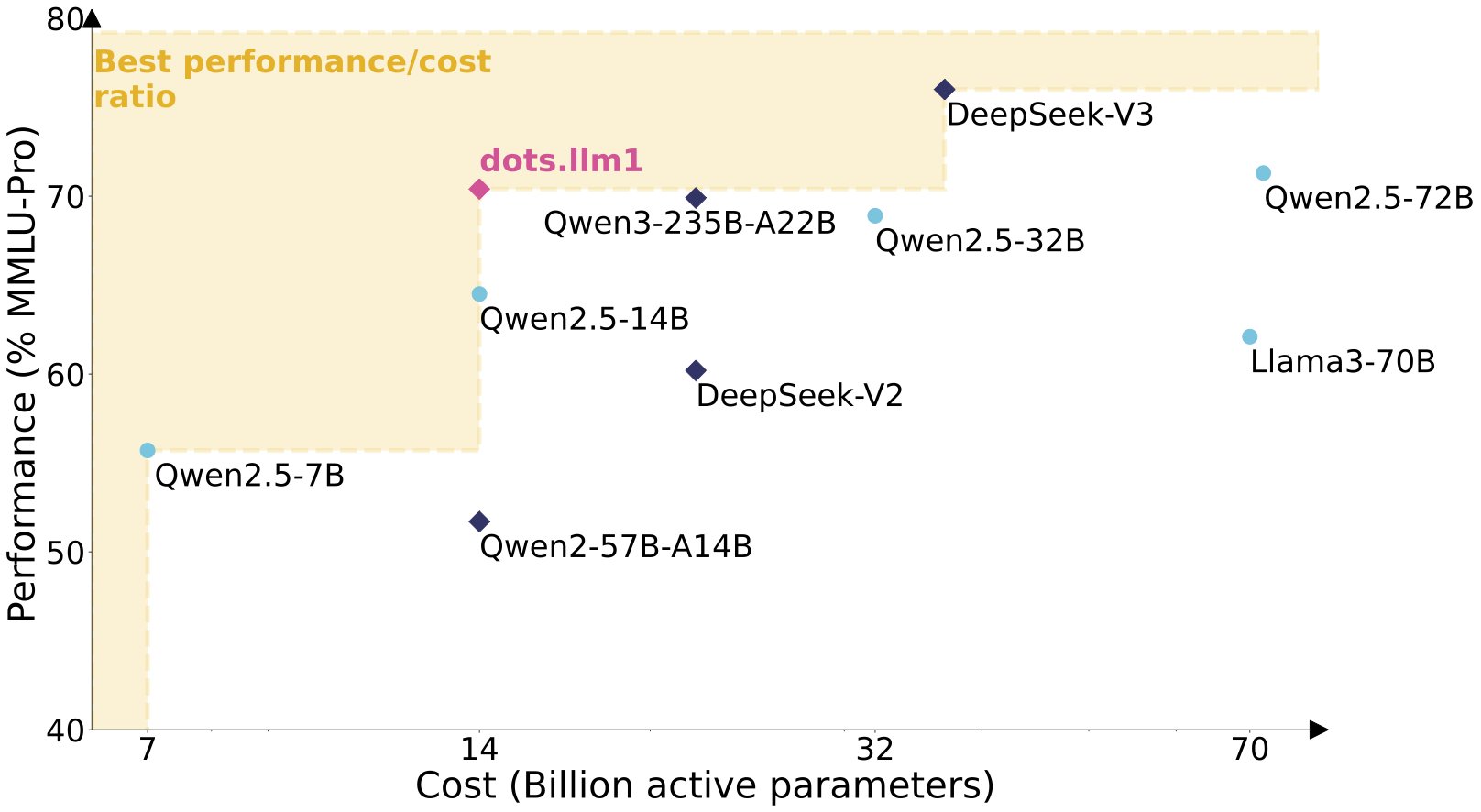

rednote-hilab/dots.llm1.base is the base version of dots.llm1, the first large language model open-sourced by Xiaohongshu (RedNote), and is hosted on Hugging Face. The model uses a Mixture of Experts (MoE) architecture with 142 billion total parameters, of which only 14 billion are activated per token during inference, balancing strong performance against low compute cost. dots.llm1 was trained on 11.2 trillion tokens of high-quality, non-synthetic data and scored an average of 91.3 on Chinese-language benchmarks, outperforming DeepSeek V2, DeepSeek V3, and Alibaba's Qwen2.5 series. It supports a context length of 32,768 tokens and suits tasks such as text generation and dialogue. The model page provides weights, configuration files, and usage examples for developers to integrate and study.

Function List

- Generate high-quality text for dialog, article continuation, and code generation.

- Uses an MoE architecture that activates only 14 billion parameters per token during inference, reducing computational cost.

- Supports contexts of up to 32,768 tokens, suitable for long documents or complex tasks.

- Provides intermediate training checkpoints every 1 trillion tokens for studying training dynamics.

- Supports Docker and vLLM deployment for high-throughput inference.

- Works with Hugging Face Transformers, with Python code examples provided.

- Strong Chinese-language performance, with benchmark scores exceeding those of several mainstream open-source models.
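To put the "14 billion of 142 billion" figure in perspective, the sketch below applies a common rule of thumb: a forward pass costs roughly 2 FLOPs per parameter touched, per token. The rule and the constant names are assumptions for illustration, not numbers published for dots.llm1, and the estimate ignores attention cost over long contexts.

```python
# Rough per-token forward-pass compute using the rule of thumb
# FLOPs ~= 2 x (parameters touched per token). Attention-score FLOPs over
# the context are ignored, so treat results as order-of-magnitude only.
TOTAL_PARAMS = 142e9   # all experts included
ACTIVE_PARAMS = 14e9   # parameters activated per token


def forward_flops_per_token(params: float) -> float:
    """Approximate forward-pass FLOPs for one token (assumed 2 FLOPs/param)."""
    return 2.0 * params


active_fraction = ACTIVE_PARAMS / TOTAL_PARAMS
moe_flops = forward_flops_per_token(ACTIVE_PARAMS)
dense_flops = forward_flops_per_token(TOTAL_PARAMS)  # hypothetical dense model of equal size

print(f"active fraction: {active_fraction:.1%}")          # 9.9%
print(f"MoE:   {moe_flops / 1e9:.0f} GFLOPs per token")     # 28
print(f"dense: {dense_flops / 1e9:.0f} GFLOPs per token")   # 284
```

Under this approximation, each token costs about a tenth of what a dense model with the same total parameter count would.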

Usage Guide

Installation and Deployment

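To use dots.llm1.base, prepare a GPU computing environment with enough memory to hold the full model. Although only 14 billion parameters are active per token, all 142 billion must be loaded, which comes to roughly 284 GB at 2 bytes per parameter (bf16); the commands below therefore shard the model across 8 GPUs with tensor parallelism. The deployment steps are as follows: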
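A back-of-the-envelope weight-memory estimate helps with sizing hardware. The sketch below assumes 2 bytes per parameter for bf16, decimal gigabytes, and perfectly even tensor-parallel sharding; the function names are hypothetical, and KV cache, activations, and framework overhead come on top of these figures.

```python
# Weight-only memory estimate for dots.llm1 (assumptions: decimal GB,
# even sharding across GPUs; KV cache and activations are NOT included).
TOTAL_PARAMS = 142e9
BYTES_PER_PARAM = {"bf16": 2, "fp32": 4}


def weight_gb(params: float, dtype: str) -> float:
    """Total weight footprint in gigabytes (1 GB = 1e9 bytes)."""
    return params * BYTES_PER_PARAM[dtype] / 1e9


def per_gpu_gb(params: float, dtype: str, tensor_parallel: int) -> float:
    """Weights per GPU when the model is split evenly across `tensor_parallel` GPUs."""
    return weight_gb(params, dtype) / tensor_parallel


print(weight_gb(TOTAL_PARAMS, "bf16"))      # 284.0
print(per_gpu_gb(TOTAL_PARAMS, "bf16", 8))  # 35.5
```

With `--tensor-parallel-size 8`, each GPU holds on the order of 35 GB of weights before any runtime buffers.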

1. Deployment via Docker

Docker is the officially recommended deployment method and supports GPU acceleration and high-throughput inference. The steps are as follows:

- Make sure Docker and NVIDIA Container Toolkit are installed.

- Pull and run the Docker image:

```bash
docker run --gpus all \
  -v ~/.cache/huggingface:/root/.cache/huggingface \
  -p 8000:8000 \
  --ipc=host \
  rednotehilab/dots1:vllm-openai-v0.9.0.1 \
  --model rednote-hilab/dots.llm1.base \
  --tensor-parallel-size 8 \
  --trust-remote-code \
  --served-model-name dots1
```

- Test that the service is working:

```bash
curl http://localhost:8000/v1/chat/completions \
  -H "Content-Type: application/json" \
  -d '{
    "model": "dots1",
    "messages": [
      {"role": "system", "content": "You are a helpful assistant."},
      {"role": "user", "content": "What is MoE in AI?"}
    ],
    "max_tokens": 32,
    "temperature": 0
  }'
```

A successful response indicates that the service is running normally.
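The same request can be sent from Python. This sketch uses only the standard library (`urllib.request`) and assumes the server from the Docker step is listening on `localhost:8000` with the served model name `dots1`; `build_request` and `ask` are hypothetical helper names, and the response is read from the OpenAI-compatible `choices[0].message.content` field.

```python
import json
import urllib.request

# Assumed endpoint from the Docker command above (port 8000, model name "dots1").
BASE_URL = "http://localhost:8000/v1/chat/completions"


def build_request(question: str, model: str = "dots1", max_tokens: int = 32,
                  temperature: float = 0.0, url: str = BASE_URL) -> urllib.request.Request:
    """Build a POST request mirroring the curl example."""
    payload = {
        "model": model,
        "messages": [
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": question},
        ],
        "max_tokens": max_tokens,
        "temperature": temperature,
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(question: str, timeout: float = 60.0) -> str:
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(build_request(question), timeout=timeout) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With the server running, `print(ask("What is MoE in AI?"))` prints the model's reply; splitting request construction from sending makes the payload easy to inspect without a live server.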

2. Using Hugging Face Transformers

If you are not using Docker, you can load the model directly in Python:

- Install the dependencies:

```bash
pip install transformers torch accelerate
```

- Load the model and tokenizer:

```python
import torch
from transformers import AutoTokenizer, AutoModelForCausalLM

model_name = "rednote-hilab/dots.llm1.base"

tokenizer = AutoTokenizer.from_pretrained(model_name)
# bfloat16 halves memory relative to float32; device_map="auto" spreads layers across the available GPUs
model = AutoModelForCausalLM.from_pretrained(model_name, device_map="auto", torch_dtype=torch.bfloat16)
```

- Perform text generation:

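```python
text = "人工智能中的MoE架构是什么?"  # "What is the MoE architecture in AI?"
inputs = tokenizer(text, return_tensors="pt")
outputs = model.generate(**inputs.to(model.device), max_new_tokens=100)
# The decoded string includes the prompt, since the base model continues the input text
result = tokenizer.decode(outputs[0], skip_special_tokens=True)
print(result)
```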

3. High-throughput inference with vLLM

vLLM is suited to large-scale inference workloads. Install vLLM and start the server:

```bash
vllm serve rednote-hilab/dots.llm1.base --port 8000 --tensor-parallel-size 8
```

Main Functions

Text Generation

dots.llm1.base generates coherent text and suits tasks such as academic writing and article continuation. Steps:

- Prepare input text, such as technical documentation or a problem description.

- Run generation through the Python code or the Docker service, setting `max_new_tokens` (or `max_tokens` for the HTTP API) to control output length.

- Check the output to make sure it is logically coherent.
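Because the prompt and the generated tokens share the 32,768-token context window, one way to choose `max_new_tokens` is to clamp it against the prompt length. `clamp_max_new_tokens` is a hypothetical helper, not part of Transformers, and it assumes the window size stated above.

```python
# Hypothetical helper: keep prompt + output within the context window.
CONTEXT_WINDOW = 32_768  # context length stated for dots.llm1


def clamp_max_new_tokens(prompt_tokens: int, requested: int,
                         context_window: int = CONTEXT_WINDOW) -> int:
    """Return the largest max_new_tokens <= requested that still fits."""
    if prompt_tokens >= context_window:
        raise ValueError(
            f"prompt ({prompt_tokens} tokens) already fills the {context_window}-token window"
        )
    return max(0, min(requested, context_window - prompt_tokens))
```

In the Transformers example, the prompt length is `inputs["input_ids"].shape[1]`, so the call could become `model.generate(**inputs.to(model.device), max_new_tokens=clamp_max_new_tokens(inputs["input_ids"].shape[1], 100))`.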

Dialogue Tasks

With prompt engineering, the model can carry out dialogue. Example:

```python
messages = [{"role": "user", "content": "讲解MoE架构的核心原理。"}]  # "Explain the core principles of the MoE architecture."
input_tensor = tokenizer.apply_chat_template(messages, add_generation_prompt=True, return_tensors="pt")
outputs = model.generate(input_tensor.to(model.device), max_new_tokens=200)
# Decode only the newly generated tokens, skipping the prompt
result = tokenizer.decode(outputs[0][input_tensor.shape[1]:], skip_special_tokens=True)
print(result)
```
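For multi-turn conversations, the `messages` list grows with every turn and can eventually exceed the context window. The sketch below is one hypothetical way to trim it: keep system messages, then keep the most recent turns that fit a token budget. `trim_history` is an illustrative name, `count_tokens` is any callable you supply (for example `lambda s: len(tokenizer.encode(s))`), and the count covers message content only, not chat-template overhead.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


def trim_history(messages: List[Message],
                 count_tokens: Callable[[str], int],
                 budget: int) -> List[Message]:
    """Drop the oldest non-system turns until the conversation fits `budget` tokens.

    System messages are always kept. Turns are kept newest-first and trimming
    stops at the first turn that does not fit, so the kept history stays contiguous.
    """
    system = [m for m in messages if m["role"] == "system"]
    turns = [m for m in messages if m["role"] != "system"]
    used = sum(count_tokens(m["content"]) for m in system)
    kept: List[Message] = []
    for m in reversed(turns):  # walk from newest to oldest
        cost = count_tokens(m["content"])
        if used + cost > budget:
            break
        kept.append(m)
        used += cost
    return system + list(reversed(kept))
```

Note that the trimmed history may begin with an assistant turn; some chat templates expect a user turn first, so you may want to drop a leading assistant message before calling `apply_chat_template`.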

Research on Training Dynamics

The model provides intermediate checkpoints every 1 trillion tokens, which researchers can download from Hugging Face's dots1 collection to analyze how MoE models evolve during training.

Model Architecture Features

dots.llm1 uses a decoder-only Transformer architecture in which the feed-forward network is replaced by an MoE layer containing 128 routed experts and 2 shared experts. For each input token, 6 routed experts are selected dynamically and the 2 shared experts are always applied, so 8 expert networks are active in total. The model uses the SwiGLU activation function to better capture complex relationships in the data. The attention layers use multi-head attention (MHA) combined with RMSNorm normalization to improve numerical stability. For load balancing, dynamic bias terms adjust expert selection so that the expert networks are used more evenly.
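The routing described above can be sketched for a single token as follows. The expert counts (128 routed, top-6, 2 shared) come from the text; everything else is an assumption: sigmoid affinity scores, a bias that affects only which experts are ranked highest (not their gate weights), and a sign-based bias update. This mirrors the auxiliary-loss-free balancing scheme described for DeepSeek-V3, and dots.llm1's exact scoring and update rules may differ. The experts here are toy scalar functions standing in for SwiGLU MLPs.

```python
import math
from typing import Callable, Dict, List

NUM_ROUTED_EXPERTS = 128  # from the text
TOP_K = 6                 # routed experts selected per token (from the text)
NUM_SHARED_EXPERTS = 2    # always-active shared experts (from the text)
ACTIVE_EXPERTS_PER_TOKEN = TOP_K + NUM_SHARED_EXPERTS  # 8

Expert = Callable[[float], float]  # toy stand-in for an expert MLP


def route_token(logits: List[float], bias: List[float], top_k: int = TOP_K) -> Dict[int, float]:
    """Pick top_k routed experts for one token; return {expert_id: gate_weight}.

    Assumption: the bias only changes the ranking; gates use unbiased scores.
    """
    scores = [1.0 / (1.0 + math.exp(-x)) for x in logits]  # sigmoid affinities (assumed)
    ranked = sorted(range(len(scores)), key=lambda i: scores[i] + bias[i], reverse=True)
    chosen = ranked[:top_k]
    total = sum(scores[i] for i in chosen)
    return {i: scores[i] / total for i in chosen}  # normalized gate weights


def update_bias(bias: List[float], load: List[int], step: float = 1e-3) -> List[float]:
    """Lower the bias of overloaded experts and raise it for underloaded ones."""
    mean = sum(load) / len(load)
    return [b - step if n > mean else b + step if n < mean else b
            for b, n in zip(bias, load)]


def moe_output(x: float, gates: Dict[int, float],
               routed: List[Expert], shared: List[Expert]) -> float:
    """Shared experts always contribute; routed experts are weighted by their gates."""
    return sum(e(x) for e in shared) + sum(w * routed[i](x) for i, w in gates.items())
```

Keeping the bias out of the gate weights means load balancing steers which experts are chosen without directly distorting how much each chosen expert contributes.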

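Caveats

- Plan for a multi-GPU setup: all 142 billion parameters must be loaded, and the example commands shard the model across 8 GPUs with tensor parallelism.

- The weights are large (142 billion parameters, roughly 284 GB at 2 bytes per parameter), so a stable network connection is needed to download them.

- Use `torch.bfloat16` to improve performance, and check that your hardware supports it.

- The model performs well on Chinese-language tasks, but prompts may need tuning to get good dialogue results.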

Application Scenarios

- Academic research

Researchers can analyze the intermediate checkpoints to explore the training dynamics and parameter efficiency of MoE architectures.

- Content creation

Integrate the model into writing tools to draft articles, reports, or technical documentation.

- Dialogue systems

Build customer-service bots or educational assistants that support long, multi-turn conversations.

- Code assistance

Generate code snippets to help developers write algorithms or scripts quickly.

QA

- What is the difference between dots.llm1.base and the inst version?

The base version is suited to text completion, while the inst version has been instruction-tuned for better dialogue.

- How can inference cost be reduced?

The MoE architecture activates only 14 billion parameters per token, and serving with vLLM and Docker further optimizes resource usage.

- Which languages are supported?

Mainly Chinese and English, with a context length of 32,768 tokens.

- How is the training data kept high-quality?

The 11.2 trillion-token non-synthetic corpus is filtered through a three-stage processing pipeline to retain high-quality content.