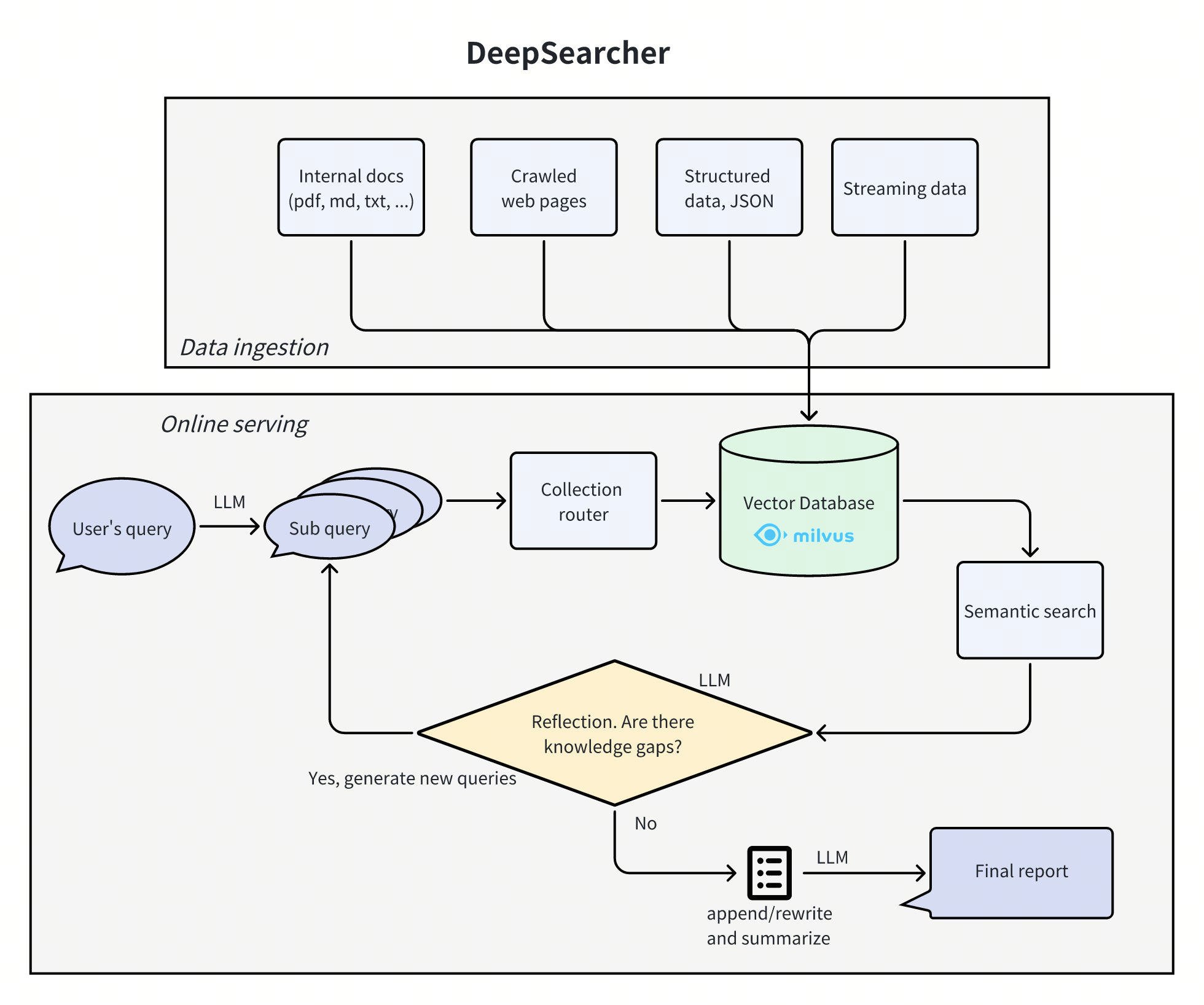

Deep Searcher combines powerful large language models (such as DeepSeek and OpenAI models) with vector databases (e.g., Milvus) to search, evaluate, and reason over private data, producing accurate answers and comprehensive reports. It is designed for enterprise knowledge management, intelligent Q&A systems, and information retrieval. Deep Searcher supports a wide range of embedding models and large language models, and it manages the vector database to keep retrieval efficient and data use secure.
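The core idea is to embed documents as vectors, retrieve the ones closest to a question, and hand them to an LLM as context. The retrieval half can be sketched in plain Python. This is a minimal illustration, not Deep Searcher's code: the hashed bag-of-words `embed` function is a hypothetical stand-in for a real embedding model, and the in-memory list stands in for a vector database such as Milvus.

```python
import math
import re
import zlib

DIM = 256  # toy embedding size; real embedding models produce much richer vectors


def embed(text: str) -> list[float]:
    """Hashed bag-of-words embedding (hypothetical stand-in for a real embedding model)."""
    vec = [0.0] * DIM
    for token in re.findall(r"[a-z0-9]+", text.lower()):
        # crc32 is a stable hash, so the same token always lands in the same bucket
        vec[zlib.crc32(token.encode()) % DIM] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


def cosine(a: list[float], b: list[float]) -> float:
    # Both vectors are L2-normalized, so their dot product is the cosine similarity
    return sum(x * y for x, y in zip(a, b))


def retrieve(question: str, docs: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the question (brute-force search)."""
    q = embed(question)
    ranked = sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


docs = [
    "Vacation policy: employees receive 25 days of paid leave per year.",
    "The VPN must be used when accessing internal systems remotely.",
    "Quarterly revenue grew 12 percent compared with last year.",
]
print(retrieve("How many days of paid leave do employees get?", docs, k=1))
```

In a full pipeline, the retrieved passages would then be inserted into the LLM prompt so the model answers from private data rather than from its training data alone.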

Features

- Private Data Search: Makes full use of internal enterprise data while keeping it secure.

- Vector Database Management: Supports vector databases such as Milvus, which allow data to be partitioned for more efficient retrieval.

- Flexible embedding options: Compatible with multiple embedding models, so you can choose the one best suited to your data.

- Multiple large language model support: Supports large models such as DeepSeek and OpenAI for intelligent Q&A and content generation.

- Document Loader: Supports loading local files; web crawling is planned for a future release.
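Supporting several embedding models and LLMs usually comes down to coding against small interfaces so that backends can be swapped through configuration. The sketch below illustrates that pattern with `typing.Protocol`, using the same (component, provider, settings) shape as the `set_provider_config` call in the usage instructions. The `Embedder` and `ChatModel` interfaces, the `BACKENDS` registry, the `build` helper, and the toy classes are all hypothetical and are not Deep Searcher's internal API.

```python
from typing import Any, Callable, Protocol


class Embedder(Protocol):
    def embed(self, text: str) -> list[float]: ...


class ChatModel(Protocol):
    def generate(self, prompt: str) -> str: ...


class CharCountEmbedder:
    """Toy embedder: counts of the letters a-z (stand-in for a real embedding model)."""

    def embed(self, text: str) -> list[float]:
        lowered = text.lower()
        return [float(lowered.count(chr(c))) for c in range(ord("a"), ord("z") + 1)]


class EchoModel:
    """Toy LLM that echoes the last prompt line (stand-in for DeepSeek, OpenAI, etc.)."""

    def __init__(self, model: str) -> None:
        self.model = model

    def generate(self, prompt: str) -> str:
        return f"[{self.model}] {prompt.splitlines()[-1]}"


# Hypothetical registry keyed by (component, provider); factories receive the settings dict
BACKENDS: dict[tuple[str, str], Callable[..., Any]] = {
    ("embedding", "CharCount"): lambda **settings: CharCountEmbedder(),
    ("llm", "Echo"): lambda **settings: EchoModel(**settings),
}


def build(component: str, provider: str, settings: dict) -> Any:
    """Instantiate a backend from a (component, provider, settings) triple."""
    try:
        factory = BACKENDS[(component, provider)]
    except KeyError:
        raise ValueError(f"unknown {component} provider: {provider}") from None
    return factory(**settings)


llm: ChatModel = build("llm", "Echo", {"model": "demo"})
embedder: Embedder = build("embedding", "CharCount", {})
print(llm.generate("Context...\nSummarize the report."))
```

Because callers depend only on `embed` and `generate`, adding a provider means registering one more factory rather than changing the search logic.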

Usage Guide

Installation process

- Clone the repository:

  ```bash
  git clone https://github.com/zilliztech/deep-searcher.git
  ```

- Create a Python virtual environment (recommended):

  ```bash
  python3 -m venv .venv
  source .venv/bin/activate
  ```

- Install the dependencies:

  ```bash
  cd deep-searcher
  pip install -e .
  ```

- Configure the LLM or Milvus: Edit the `examples/example1.py` file to configure the LLM or Milvus as needed.

- Prepare the data and run the example:

  ```bash
  python examples/example1.py
  ```
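Before running the example, a quick way to confirm that `pip install -e .` made the `deepsearcher` package importable from the active virtual environment is to ask Python's import machinery for it. This sketch uses only the standard library; `is_installed` is a hypothetical helper name.

```python
import importlib.util
import sys


def is_installed(module_name: str) -> bool:
    """True if the top-level module `module_name` can be found by the current interpreter."""
    return importlib.util.find_spec(module_name) is not None


print("Python:", sys.version.split()[0])
print("Interpreter:", sys.executable)  # should point inside .venv when the venv is active
print("deepsearcher importable:", is_installed("deepsearcher"))
```

If the last line prints `False`, the usual cause is that the virtual environment was not activated in the shell that ran `pip install -e .`.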

Instructions for use

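- Configure the LLM: In the `deepsearcher.configuration` module, use the `set_provider_config` method to configure the LLM. For example, to configure the OpenAI `gpt-4o` model:

  ```python
  config.set_provider_config("llm", "OpenAI", {"model": "gpt-4o"})
  ```

  Here `config` is a `Configuration` object from the same module; pass it to `init_config(config=config)` to apply the settings.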

- Load local data: Use the `load_from_local_files` function in the `deepsearcher.offline_loading` module to load local data:

  ```python
  load_from_local_files(paths_or_directory="your_local_path")
  ```

- Query data: Use the `query` function in the `deepsearcher.online_query` module to run a query:

  ```python
  result = query("Write a report about xxx.")
  ```
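Loading local files into a vector database generally means reading each document and splitting it into overlapping chunks that are embedded and stored individually. The following is a hedged, standard-library sketch of that preprocessing step; `split_text`, `load_chunks`, the `.txt`/`.md` extension filter, and the chunk sizes are illustrative assumptions, not the actual behavior of `load_from_local_files`.

```python
from pathlib import Path


def split_text(text: str, chunk_size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into windows of `chunk_size` characters that overlap by `overlap`."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reaches the end of the text
    return chunks


def load_chunks(directory: str, suffixes=(".txt", ".md"), **split_args) -> list[dict]:
    """Walk `directory` recursively and return one record per chunk (hypothetical helper)."""
    records = []
    for path in sorted(Path(directory).rglob("*")):
        if path.is_file() and path.suffix.lower() in suffixes:
            text = path.read_text(encoding="utf-8", errors="ignore")
            for i, chunk in enumerate(split_text(text, **split_args)):
                # Keep the source path so a report can cite where a passage came from
                records.append({"source": str(path), "chunk_id": i, "text": chunk})
    return records
```

For example, `split_text("abcdefghij", chunk_size=4, overlap=1)` returns `["abcd", "defg", "ghij"]`. The overlap means a sentence cut at a chunk boundary still appears intact in at least one chunk when it is shorter than the overlap.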

Feature details

- Private Data Search:

  - Maximize the use of data within the enterprise while ensuring data security.

  - Online content can be integrated when more accurate answers are needed.

- Vector Database Management:

  - Supports vector databases such as Milvus, which allow data partitioning for more efficient retrieval.

  - Support for more vector databases (e.g., FAISS) is planned.

- Flexible embedding options:

  - Compatible with multiple embedding models, so you can choose the one best suited to your data.

- Multiple large language model support:

  - Supports large models such as DeepSeek and OpenAI for intelligent Q&A and content generation.

- Document Loader:

  - Local file loading is supported; web crawling will be added in the future.
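Partitioning lets a search scan only the relevant subset of vectors (for example, one department's documents) instead of the whole collection. The in-memory class below is a hypothetical sketch of that idea using brute-force cosine similarity. In Milvus, partitions are managed by the database itself; none of the names here (`PartitionedStore`, `insert`, `search`) correspond to the Milvus or Deep Searcher API.

```python
import math
from collections import defaultdict
from typing import Optional


class PartitionedStore:
    """Toy vector store whose entries are grouped into named partitions."""

    def __init__(self) -> None:
        self._partitions: dict[str, list[tuple[list[float], str]]] = defaultdict(list)

    def insert(self, partition: str, vector: list[float], payload: str) -> None:
        self._partitions[partition].append((vector, payload))

    @staticmethod
    def _cosine(a: list[float], b: list[float]) -> float:
        dot = sum(x * y for x, y in zip(a, b))
        norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
        return dot / norm if norm else 0.0

    def search(
        self, query: list[float], k: int = 3, partitions: Optional[list[str]] = None
    ) -> list[tuple[float, str]]:
        """Brute-force top-k search, restricted to `partitions` when given."""
        names = partitions if partitions is not None else list(self._partitions)
        hits = [
            (self._cosine(query, vec), payload)
            for name in names
            for vec, payload in self._partitions.get(name, [])  # unknown names yield nothing
        ]
        hits.sort(key=lambda hit: hit[0], reverse=True)
        return hits[:k]


store = PartitionedStore()
store.insert("hr", [1.0, 0.0], "leave policy")
store.insert("finance", [0.9, 0.1], "budget report")
print(store.search([1.0, 0.0], k=1, partitions=["finance"]))
```

Restricting the search to `["finance"]` skips the closer `hr` entry entirely, which is the efficiency (and access-scoping) benefit that partitioning provides at much larger scale.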