Core is an open source tool developed by RedPlanetHQ that provides personalized memory storage for large language models (LLMs). With Core, users can build and manage their own memory graph, saving text, conversations, or other data to a private knowledge base. The tool stores records of user interactions with LLMs as structured memory nodes that can be queried later or connected to other tools.

Core is designed to be simple for both developers and casual users. It is operated through a dashboard and supports both local deployment and a cloud service. The project is under active development, with ongoing work on memory optimization for large models in particular.

Function List

- Memory Storage: Saves user-entered text or conversations to a private knowledge graph.

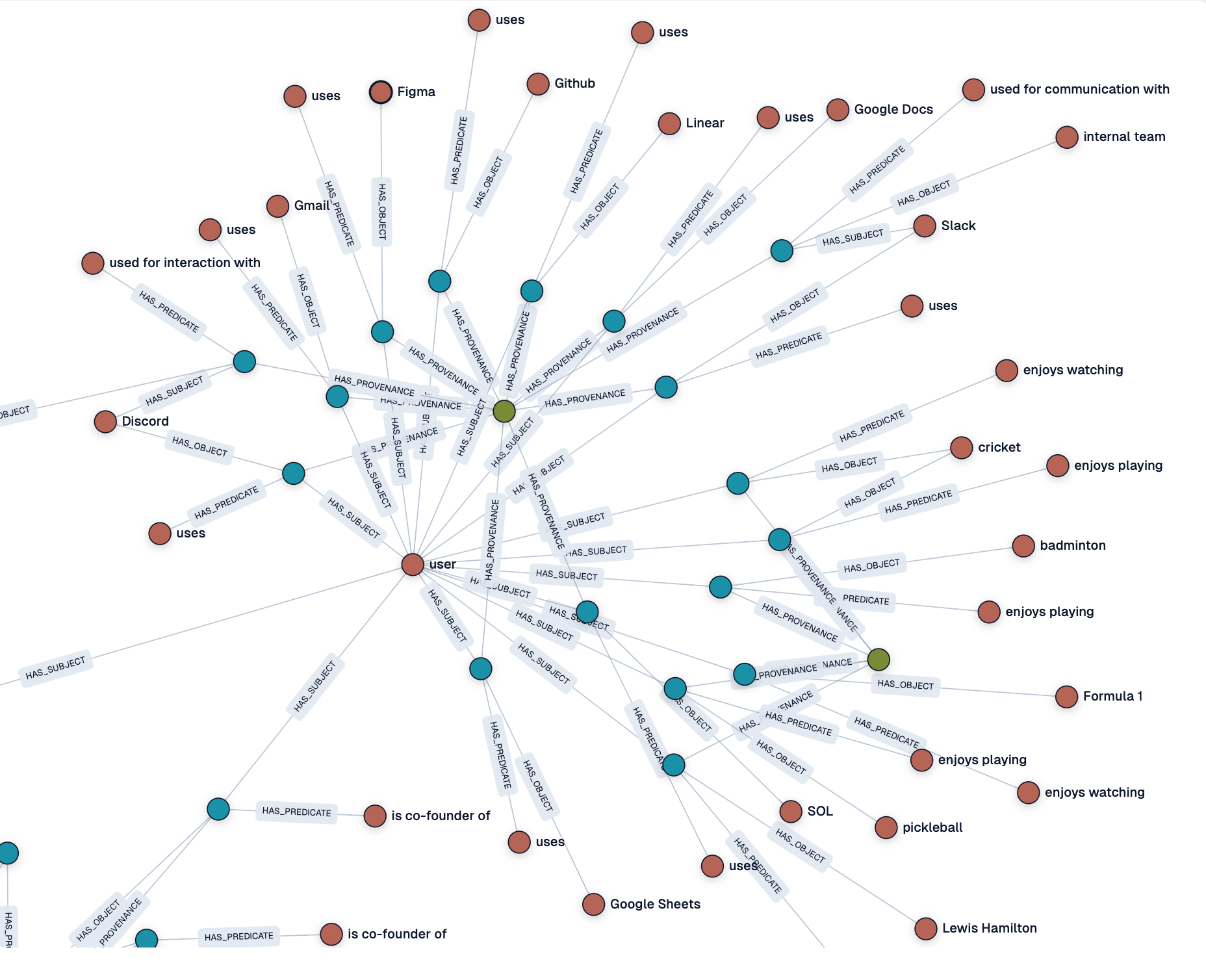

- Knowledge Graph Generation: Automatically transforms inputs into structured memory nodes for easy retrieval.

- Dashboard Management: Provides a visual interface for viewing and managing the nodes and state of the memory graph.

- Local and Cloud Deployment: Users can choose to run Core locally or use the Core Cloud service.

- Integration with large models: Supports interfacing with a variety of large models to store interaction records.

- Log Monitoring: View logs of memory processing in real time to understand the status of data processing.

- Open Source: Users are free to access the code and to take part in developing or customizing features.

Usage Guide

Installation

To use Core, you first need to install and configure your environment. The following are detailed installation and usage steps:

- Clone the repository:

- Open a terminal and run the following command to clone the Core repository:

git clone https://github.com/RedPlanetHQ/core.git

- Go to the project directory:

cd core

- Install dependencies:

- Core runs on Node.js; installing a recent version (16.x or higher) is recommended.

- Install the dependency packages:

npm install

- Configure environment variables:

- In the project root directory, create a .env file and add the following:

API_TOKEN=YOUR_API_TOKEN_HERE

API_BASE_URL=https://core.heysol.ai

SOURCE=cursor

- You obtain the API_TOKEN value after registering an account on the Core Cloud website (https://core.heysol.ai).

- Run the local service:

- Start Core's MCP (Model Context Protocol) server:

npx -y @redplanethq/core-mcp

- Once the service has started, open your browser and visit http://localhost:3000.

- Using Core Cloud (optional):

- If you choose a cloud service, visit https://core.heysol.ai to register and log in to your account.

- Manage memories directly in the cloud dashboard without local deployment.
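Before starting the local service, it can help to confirm that the .env file from the configuration step is complete. The following is a minimal sketch of such a check and is not part of Core itself. The function names `parse_env` and `missing_keys` are hypothetical, and the parser handles only simple KEY=VALUE lines.

```python
from pathlib import Path

# The three keys this article lists for the .env file.
REQUIRED_KEYS = ("API_TOKEN", "API_BASE_URL", "SOURCE")


def parse_env(text: str) -> dict[str, str]:
    """Parse simple KEY=VALUE lines, skipping blank lines and # comments."""
    values: dict[str, str] = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def missing_keys(values: dict[str, str]) -> list[str]:
    """Return required keys that are absent, empty, or still the placeholder."""
    return [
        key
        for key in REQUIRED_KEYS
        if not values.get(key) or values[key] == "YOUR_API_TOKEN_HERE"
    ]


if __name__ == "__main__":
    env_path = Path(".env")
    if env_path.exists():
        problems = missing_keys(parse_env(env_path.read_text()))
        print("Missing or placeholder keys:", problems or "none")
    else:
        print("No .env file found in the current directory")
```

Run the script from the project root so that it picks up the same .env file the service would read.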

Main Functions

1. Adding memories

- Procedure:

- Log in to the dashboard (local or cloud).

- In the input box in the upper right corner, enter the text you want to save, e.g. "I like to play badminton".

- Click the "+Add" button and the text will be sent to the processing queue.

- Check the processing status on the "Logs" screen. When finished, the memory node will be displayed in the dashboard.

- Functional Description:

- A memory node is automatically generated for each added text, and the node contains the text content and metadata (e.g., timestamps).

- These nodes can be connected to form a knowledge graph for subsequent queries or analysis.
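To make the idea of memory nodes and their connections concrete, here is a small illustrative data model. It is a sketch under assumptions and does not reflect Core's internal schema: the class names `MemoryNode` and `MemoryGraph` and all of their fields are hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from uuid import uuid4


@dataclass
class MemoryNode:
    """One saved piece of text plus metadata (hypothetical structure)."""
    text: str
    node_id: str = field(default_factory=lambda: str(uuid4()))
    created_at: datetime = field(
        default_factory=lambda: datetime.now(timezone.utc)
    )


@dataclass
class MemoryGraph:
    """Nodes keyed by id, with undirected edges between node ids."""
    nodes: dict[str, MemoryNode] = field(default_factory=dict)
    edges: set[tuple[str, str]] = field(default_factory=set)

    def add(self, text: str) -> MemoryNode:
        node = MemoryNode(text)
        self.nodes[node.node_id] = node
        return node

    def connect(self, a: MemoryNode, b: MemoryNode) -> None:
        # Sort the endpoints so that (a, b) and (b, a) are the same edge.
        self.edges.add(tuple(sorted((a.node_id, b.node_id))))

    def neighbors(self, node: MemoryNode) -> list[MemoryNode]:
        linked = []
        for left, right in self.edges:
            if node.node_id == left:
                linked.append(self.nodes[right])
            elif node.node_id == right:
                linked.append(self.nodes[left])
        return linked


if __name__ == "__main__":
    graph = MemoryGraph()
    hobby = graph.add("I like to play badminton")
    plan = graph.add("Badminton court is booked for Saturday")
    graph.connect(hobby, plan)
    print([n.text for n in graph.neighbors(hobby)])
```

Each node carries its text and a timestamp, and connecting two nodes records a single undirected edge, which is enough to walk from one memory to related ones.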

2. Viewing and managing memory

- Procedure:

- Open the "Graph" page of the dashboard to see all memory nodes.

- Click on a node to view the details or edit it.

- Use the search function: enter keywords to quickly locate related memories.

- Functional Description:

- The dashboard provides a visual knowledge graph with relationships between nodes at a glance.

- Users can manually adjust node connections or delete unwanted memories.
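The search and delete operations described above can be pictured with a short sketch. It is purely illustrative: memories are modeled as a plain dictionary of id to text, connections as a set of id pairs, and the function names `search` and `delete_memory` are hypothetical rather than part of Core.

```python
def search(memories: dict[str, str], keyword: str) -> list[str]:
    """Return ids of memories whose text contains the keyword, ignoring case."""
    needle = keyword.casefold()
    return [mid for mid, text in memories.items() if needle in text.casefold()]


def delete_memory(
    memories: dict[str, str],
    edges: set[tuple[str, str]],
    memory_id: str,
) -> None:
    """Remove a memory and every connection that touches it."""
    memories.pop(memory_id, None)
    edges.difference_update({edge for edge in edges if memory_id in edge})


if __name__ == "__main__":
    memories = {
        "m1": "I like to play badminton",
        "m2": "Class notes on graph theory",
    }
    edges = {("m1", "m2")}
    print(search(memories, "badminton"))
    delete_memory(memories, edges, "m1")
    print(memories, edges)
```

Deleting a node also drops its edges, so the graph never points at a memory that no longer exists.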

3. Integration with large models

- Procedure:

- On the Settings page, add a rule that automatically saves LLM conversations:

- Open "Settings" -> "User rules" -> "New Rule".

- Add a rule that stores the user's query and the model's response in Core memory after each conversation.

- Set sessionId to the unique identifier (UUID) of the conversation.

- Example configuration (MCP server entry):

{ "mcpServers": { "memory": { "command": "npx", "args": ["-y", "@redplanethq/core-mcp"], "env": { "API_TOKEN": "YOUR_API_TOKEN_HERE", "API_BASE_URL": "https://core.heysol.ai", "SOURCE": "cursor" } } } }


- Functional Description:

- Core automatically captures user interactions with large models and stores them as structured data.

- A variety of large models are supported, but support for Llama models is currently being optimized for better compatibility in the future.
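The example configuration and the sessionId requirement above can also be produced programmatically. The sketch below builds the same JSON structure shown in this section and generates a random UUID for a conversation. The helper names `build_mcp_config` and `new_session_id` are hypothetical, and where the resulting JSON should be saved depends on the client, which this article does not specify.

```python
import json
import uuid


def build_mcp_config(api_token: str, source: str = "cursor") -> dict:
    """Build the same structure as the example configuration in this article."""
    return {
        "mcpServers": {
            "memory": {
                "command": "npx",
                "args": ["-y", "@redplanethq/core-mcp"],
                "env": {
                    "API_TOKEN": api_token,
                    "API_BASE_URL": "https://core.heysol.ai",
                    "SOURCE": source,
                },
            }
        }
    }


def new_session_id() -> str:
    """Generate a random (version 4) UUID to identify one conversation."""
    return str(uuid.uuid4())


if __name__ == "__main__":
    print(json.dumps(build_mcp_config("YOUR_API_TOKEN_HERE"), indent=2))
    print("sessionId:", new_session_id())
```

Generating the file from code keeps the token out of hand-edited JSON and avoids syntax slips such as a missing brace.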

4. Log monitoring

- Procedure:

- Open the "Logs" page of the dashboard.

- View real-time processing logs, including memory additions, processing status, and error messages.

- Functional Description:

- The logging feature helps users to monitor the data processing flow and ensure the reliability of data storage.

- If processing fails, the log provides error details for easy troubleshooting.

Caveats

- Local deployment: Make sure the network is stable and port 3000 is not in use.

- Cloud service: A valid API key is required; check periodically that the key is still valid.

- Llama model support: Core currently has limited compatibility with Llama models, so other mainstream models are recommended for best results.

- Community support: Core is an open source project. If you run into problems, you can submit an issue on GitHub or join the community discussion.
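For the local deployment caveat, one quick way to see whether port 3000 is already taken is to try connecting to it. This is a generic sketch using Python's standard library, not a Core utility, and the function name `port_is_free` is hypothetical. It reports only whether something is accepting TCP connections on the port.

```python
import socket


def port_is_free(port: int, host: str = "127.0.0.1") -> bool:
    """Return True if nothing accepts TCP connections on host:port."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.settimeout(1.0)
        # connect_ex returns 0 when a listener accepted the connection.
        return sock.connect_ex((host, port)) != 0


if __name__ == "__main__":
    if port_is_free(3000):
        print("Port 3000 looks free")
    else:
        print("Port 3000 is already in use")
```

If the port is in use, stop the other process first, since this article assumes the dashboard is reachable at http://localhost:3000.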

Application Scenarios

- Personal knowledge management

- Users can use Core to save daily study notes, inspirations or important conversations to form a personal knowledge base. For example, students can save class notes as memory nodes for easy review.

- Developer Debugging Large Models

- Developers can record interactions with large models, analyze model output, and optimize prompt design. For example, when debugging a chatbot, Core can save all test conversations.

- Teamwork

- Team members can share memory graphs and collaborate on a project knowledge base through Core Cloud. For example, a product team can store user feedback in Core to generate a requirements analysis graph.

- Research assistance

- Researchers can save literature summaries or experimental data to Core, creating a structured research memory for subsequent analysis and citation.

FAQ

- Which large models does Core support?

- Core currently supports many mainstream large models, but compatibility with Llama models is still being optimized. Other models are recommended for best results.

- What is the difference between local deployment and cloud services?

- Local deployment requires manual configuration of the environment, which is suitable for scenarios that require privacy; cloud services do not require installation and work out-of-the-box, which is suitable for getting started quickly.

- How do I ensure the security of my memory data?

- Locally deployed memory data is stored on the user's device with security controlled by the user; cloud services use encrypted transmission with API keys to ensure secure data access.

- Is Core completely free?

- Core is an open source project and is free for local deployment; cloud services may involve subscription fees, so check the official website for pricing.