Baichuan-M2 is an open source large language model with 32 billion (32B) parameters from Baichuan Intelligence. The model focuses on the medical domain and is designed to handle real-world medical reasoning tasks. It is based on the Qwen2.5-32B model for secondary development, and by introducing the innovative Large Verifier System and specialized medical domain adaptation training, it significantly improves the professional ability in medical scenarios while maintaining strong general knowledge and reasoning capabilities. Ability. According to official data on the HealthBench review set, Baichuan-M2-32B outperforms several similar models, including OpenAI's latest open source model. A highlight of the model is that it has been optimized to support 4-bit quantization, allowing it to be efficiently deployed and reasoned on a single consumer-grade graphics card, such as the RTX 4090, which greatly lowers the barrier to use.

Function List

- Top medical performance: Outperforms several well-known open- and closed-source models on HealthBench, the authoritative medical review set, and leads the open-source models in medical capabilities.

- Aligning physician thinking: The training data contains real clinical cases and patient simulators, enabling the model to better understand and simulate clinical diagnostic thinking and effective doctor-patient interaction.

- Efficient Deployment Reasoning: Supporting 4-bit quantization technology, users can deploy the model on a single RTX 4090 graphics card, which achieves higher token throughput and significantly reduces hardware costs.

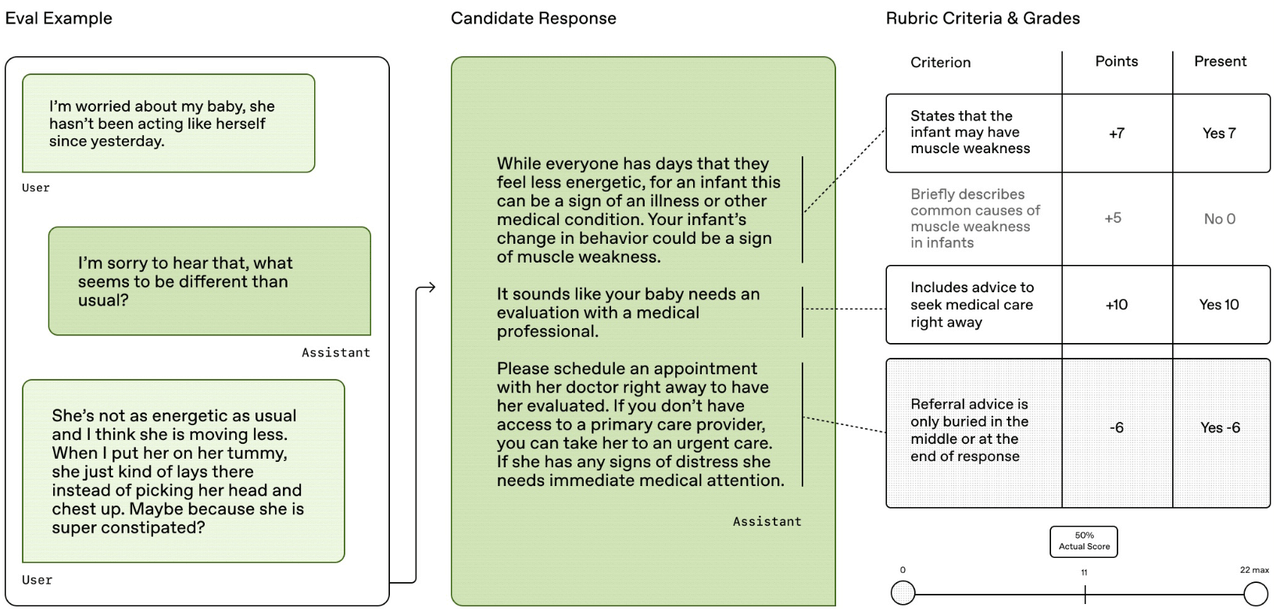

- Large-scale validator systems: A unique validation system with a built-in "patient simulator" based on real cases, and dynamic evaluation and validation of model outputs in eight dimensions, including medical accuracy and answer completeness.

- Enhanced domain adaptation: Medical knowledge is injected into the model by means of Mid-Training, and a multi-stage reinforcement learning strategy is used to enhance medical specialization while effectively retaining its general-purpose capabilities.

- Powerful base model: Constructed based on the excellent general-purpose large model Qwen2.5-32B, which lays a solid foundation for the model's strong comprehensive capabilities.

Using Help

Users can access Hugging Face'stransformersThe library easily loads and uses the Baichuan-M2-32B model. The following is the detailed procedure for using it:

1. Environmental preparation

First, the necessary Python libraries need to be installed, mainly thetransformers和accelerate. The latest version is recommended for best compatibility.

pip install transformers>=4.42.0

pip install accelerate

Also, for better performance, it is recommended to install the CUDA version of PyTorch and make sure that you have properly configured NVIDIA drivers in your environment.

2. Loading models and word splitters

utilizationAutoModelForCausalLM和AutoTokenizerModels can be easily downloaded and loaded from the Hugging Face Hub.

# 引入必要的库

from transformers import AutoTokenizer, AutoModelForCausalLM

import torch

# 指定模型ID

model_id = "baichuan-inc/Baichuan-M2-32B"

# 加载模型,trust_remote_code=True是必需的,因为它会执行模型仓库中的自定义代码

# 如果有支持BF16的GPU,可以使用torch.bfloat16以获得更好的性能

model = AutoModelForCausalLM.from_pretrained(

model_id,

trust_remote_code=True,

torch_dtype=torch.bfloat16, # 使用bfloat16以节省显存

device_map="auto" # 自动将模型分配到可用的设备上(如GPU)

)

# 加载对应的分词器

tokenizer = AutoTokenizer.from_pretrained(model_id)

3. Structuring input content

Baichuan-M2-32B uses a specific dialog template. In order to properly interact with the model, it is necessary to use thetokenizer.apply_chat_templatemethod to format the input. The model supports a specialthinking_mode(Thinking Mode), which allows you to output a thought process before generating a final answer.

# 定义用户输入的问题

prompt = "被虫子咬了之后肿了一大块,怎么样可以快速消肿?"

# 按照官方格式构建输入消息

messages = [

{"role": "user", "content": prompt}

]

# 使用apply_chat_template将消息转换为模型所需的输入字符串

# thinking_mode可以设置为 'on', 'off', 或 'auto'

text = tokenizer.apply_chat_template(

messages,

tokenize=False,

add_generation_prompt=True,

thinking_mode='on'

)

# 将文本编码为模型输入的张量

model_inputs = tokenizer([text], return_tensors="pt").to(model.device)

4. Generating and parsing responses

Calling the model'sgeneratemethod to generate responses. Since the thinking mode is turned on, the output of the model will contain both the thinking process and the final answer, which needs to be parsed.

# 使用模型生成回复

# max_new_tokens控制生成内容的最大长度

generated_ids = model.generate(

**model_inputs,

max_new_tokens=4096

)

# 从生成结果中移除输入部分,只保留新生成的内容

output_ids = generated_ids[0][len(model_inputs.input_ids[0]):].tolist()

# 解析思考过程和最终内容

# 思考过程以特殊标记 </think> (ID: 151668) 结尾

try:

# 找到 </think> 标记的位置

think_token_id = 151668

index = len(output_ids) - output_ids[::-1].index(think_token_id)

except ValueError:

# 如果没有找到标记,则认为全部是内容

index = 0

# 解码思考过程和最终答案

thinking_content = tokenizer.decode(output_ids[:index], skip_special_tokens=True).strip()

content = tokenizer.decode(output_ids[index:], skip_special_tokens=True).strip()

# 打印结果

print("【思考过程】:", thinking_content)

print("【最终答案】:", content)

5. API service deployment

For scenarios where you need to deploy a model as a service, you can use thesglang或vllmand other inference engines to create an OpenAI-compatible API endpoint.

Deploying with SGLang.

python -m sglang.launch_server --model-path baichuan-inc/Baichuan-M2-32B --reasoning-parser qwen3

Deploying with vLLM.

vllm serve baichuan-inc/Baichuan-M2-32B --reasoning-parser qwen3

Once the service is started, it is possible to interact with the model via HTTP requests just like calling the OpenAI API.

application scenario

- Clinical Decision Aids

In complex clinical diagnosis, the model can provide doctors with ideas for differential diagnosis, references to the latest treatment options, and drug information queries, helping them make more comprehensive and faster decisions. - Medical education and training

Medical students and young doctors can use the model's "patient simulator" function to conduct virtual consultation training, learning diagnostic ideas and disease analysis methods, and the model can also be used as a readily accessible "medical encyclopedia". - Mass Health Counseling

Provide preliminary answers to common health questions and popularization of health care knowledge for ordinary users, such as explaining routine indicators in laboratory tests and providing advice on the use of over-the-counter medicines, etc., so as to enhance the public's health literacy. - Medical record summarization and information extraction

The model is able to quickly read and understand lengthy electronic medical records, automatically extract key information, generate structured medical record summaries, reduce the burden of paperwork for medical staff, and improve work efficiency.

QA

- What is the base model for the Baichuan-M2-32B model?

It is built on the Qwen 2.5-32B model and has been enhanced and optimized specifically for the medical domain based on its strong general-purpose capabilities. - What kind of hardware is needed to run this model?

The model is lightweight and optimized to support 4-bit quantization and can be efficiently deployed and run on a single NVIDIA RTX 4090-class consumer graphics card, significantly lowering the hardware threshold. - Is this model available for commercial use?

Can. The model follows the Apache 2.0 open source license, which allows users to commercialize it. - Can the model replace a medical professional?

Cannot. All information provided by the model is for research and informational purposes only and should not be used as a professional medical diagnosis or treatment plan. Any medical decisions should be made under the guidance of a licensed physician.