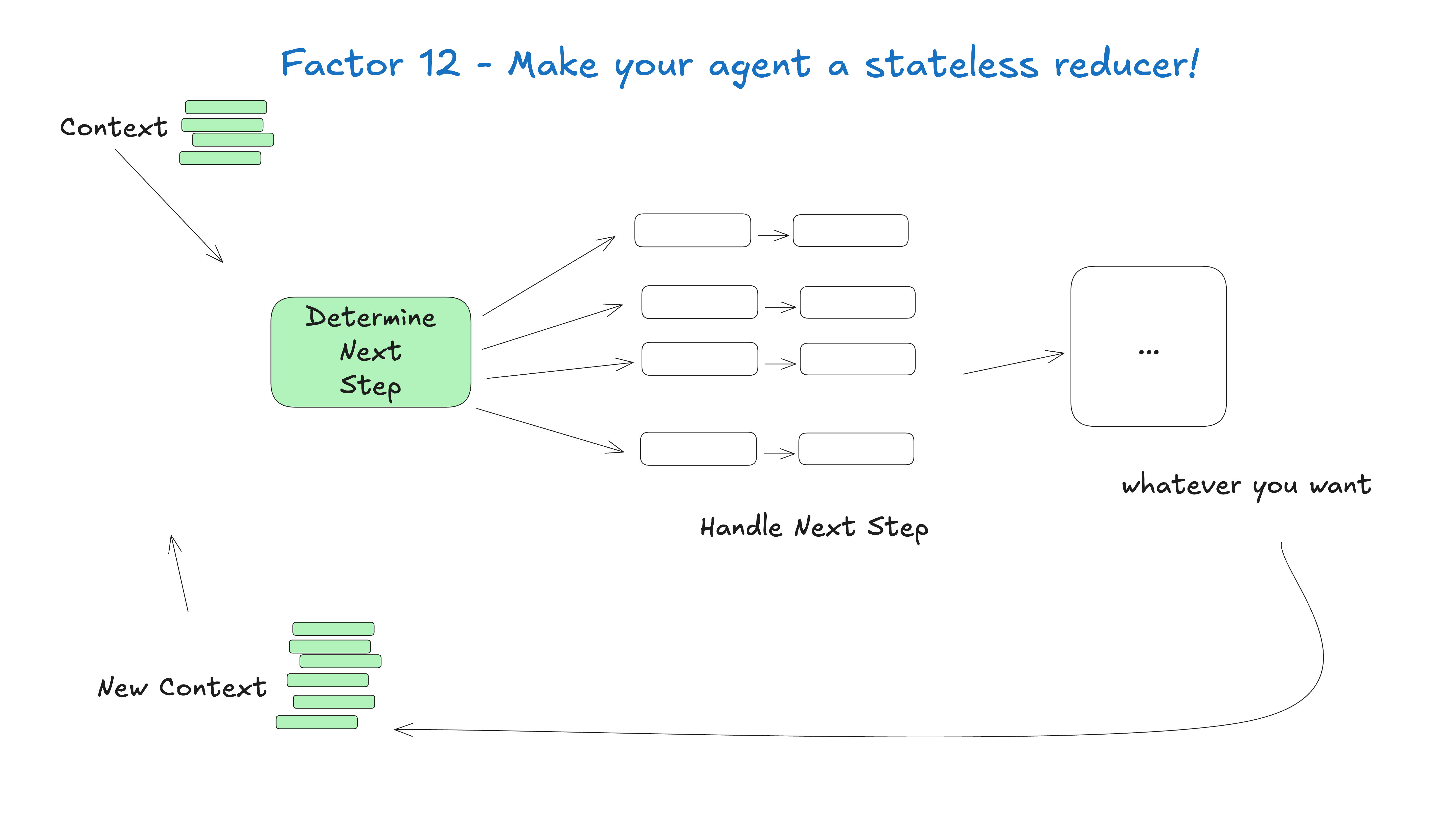

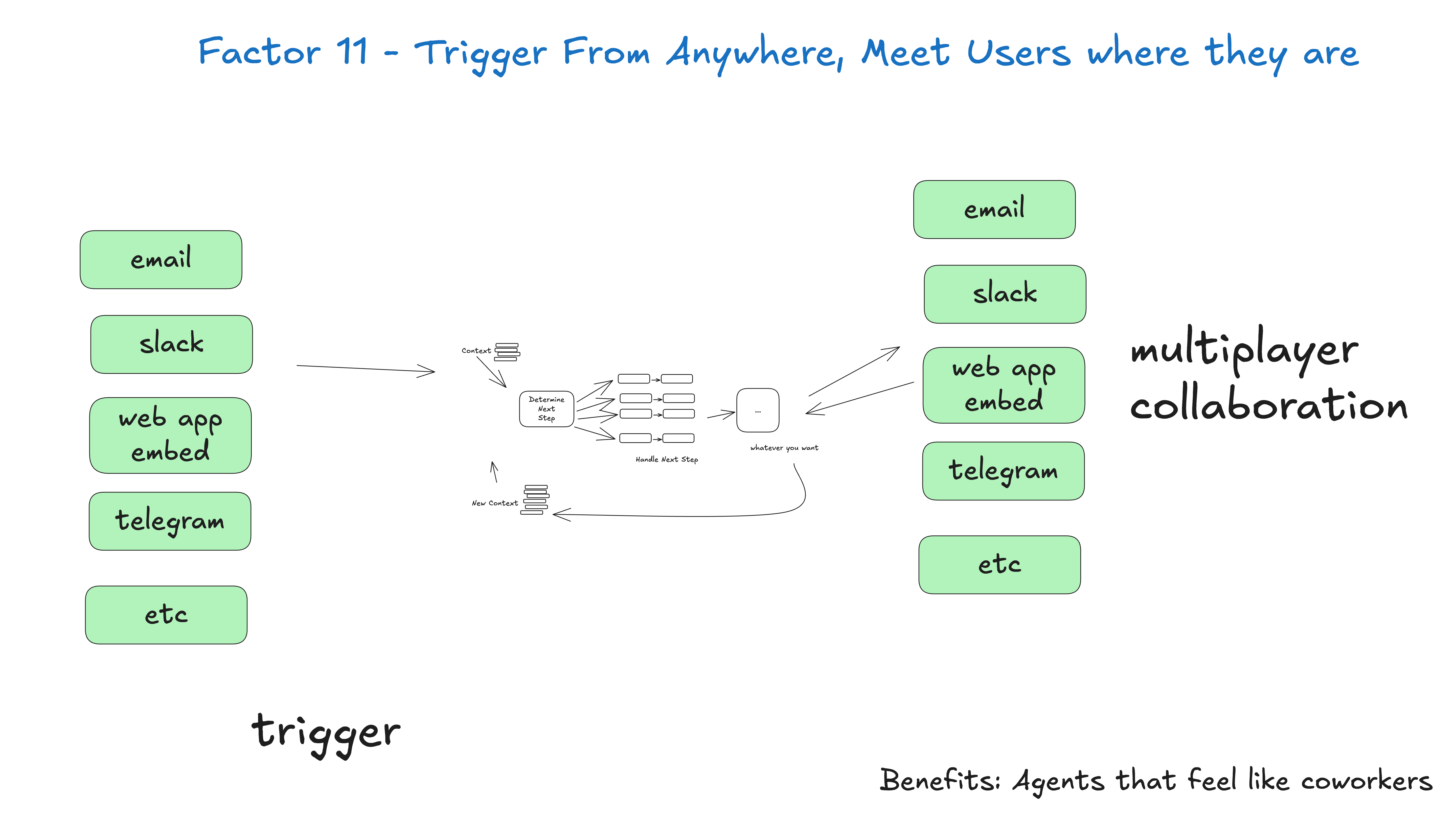

You are not obliged to use a standardized, message-based format to deliver context to a large language model.

At any given point, your input to the large language model in an AI agent amounts to: "Here's everything that's happened so far. What's the next step?"

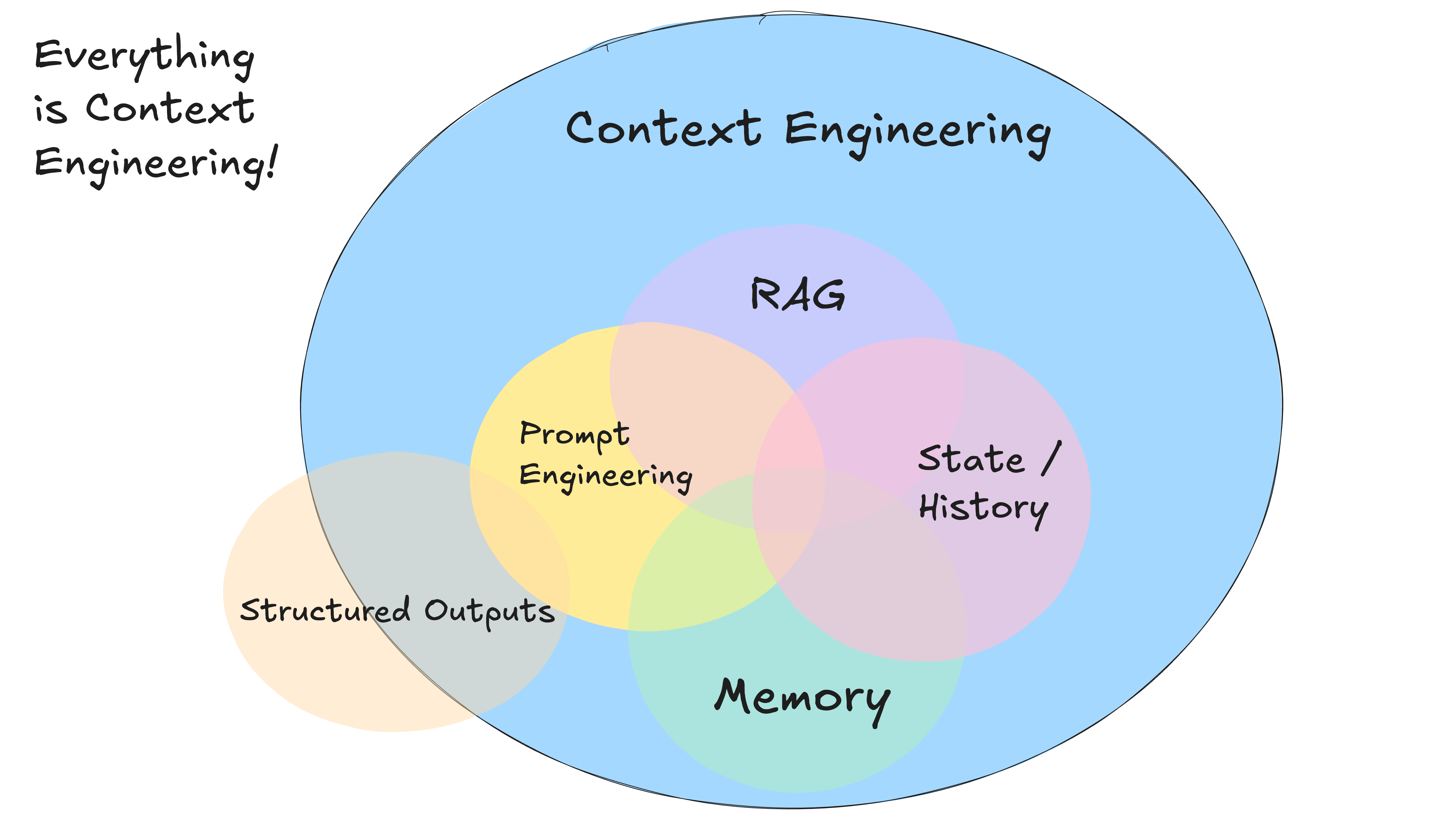

Everything is context engineering. Large language models are stateless functions that turn inputs into outputs. To get the best outputs, you need to give them the best inputs.

Creating high-quality context means paying attention to:

- The prompt and instructions you give the model

- Any documents or external data you retrieve (such as RAG)

- Any past states, tool calls, results or other history

- Any past messages or events from related but separate conversations (memory)

- Instructions on what kind of structured data to output

About context engineering

This guide is about getting the most out of today's models. Notably, it does not cover:

- Changes to model parameters such as temperature, top_p, frequency_penalty, presence_penalty, etc.

- Training your own completion or embedding models

- Fine-tuning of existing models

Again, I don't know what the best way to hand over context to a large language model is, but I do know that you need to have the flexibility to be able to try all the possibilities.

Standard and customized context formats

Most large language model clients use a standard, message-based format like this:

    [
      {
        "role": "system",
        "content": "You are a helpful assistant..."
      },
      {
        "role": "user",
        "content": "Can you deploy the backend?"
      },
      {
        "role": "assistant",
        "content": null,
        "tool_calls": [
          {
            "id": "1",
            "name": "list_git_tags",
            "arguments": "{}"
          }
        ]
      },
      {
        "role": "tool",
        "name": "list_git_tags",
        "content": "{\"tags\": [{\"name\": \"v1.2.3\", \"commit\": \"abc123\", \"date\": \"2024-03-15T10:00:00Z\"}, {\"name\": \"v1.2.2\", \"commit\": \"def456\", \"date\": \"2024-03-14T15:30:00Z\"}, {\"name\": \"v1.2.1\", \"commit\": \"abe033d\", \"date\": \"2024-03-13T09:15:00Z\"}]}",
        "tool_call_id": "1"
      }
    ]

This works well for most use cases, but if you want to get the most out of today's large language models, you need to feed context to the model in the most token-efficient and attention-efficient way you can.

As an alternative to the standard message-based format, you can build a custom context format optimized for your use case. For example, you can use custom objects and pack or spread them across one or more user, system, assistant, or tool messages as needed.

This is an example of putting an entire context window into a single user message:

    [
      {
        "role": "system",
        "content": "You are a helpful assistant..."
      },
      {
        "role": "user",
        "content": |
          Here's everything that has happened so far:

          <slack_message>
            From: @alex
            Channel: #deployments
            Text: Can you deploy the backend?
          </slack_message>

          <list_git_tags>
            intent: "list_git_tags"
          </list_git_tags>

          <list_git_tags_result>
            tags:
              - name: "v1.2.3"
                commit: "abc123"
                date: "2024-03-15T10:00:00Z"
              - name: "v1.2.2"
                commit: "def456"
                date: "2024-03-14T15:30:00Z"
              - name: "v1.2.1"
                commit: "ghi789"
                date: "2024-03-13T09:15:00Z"
          </list_git_tags_result>

          What's the next step?
      }
    ]

The model may infer from the tool schemas you supply that you're asking for the next step, but it never hurts to say so explicitly in your prompt template.
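
As a rough illustration of that advice, the sketch below packs already-rendered event blocks into a single user message and always appends the explicit question. The function name `build_messages` and the constant `NEXT_STEP_QUESTION` are hypothetical, not part of any client library.

```python
from typing import Dict, List

# Assumption: the closing question is fixed text in your prompt template.
NEXT_STEP_QUESTION = "What's the next step?"


def build_messages(system_prompt: str, rendered_events: List[str]) -> List[Dict[str, str]]:
    """Pack pre-rendered event blocks into one user message."""
    body = "\n\n".join(rendered_events)
    user_content = (
        "Here's everything that has happened so far:\n\n"
        f"{body}\n\n"
        f"{NEXT_STEP_QUESTION}"
    )
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_content},
    ]


messages = build_messages(
    "You are a helpful assistant...",
    [
        "<slack_message>\nFrom: @alex\nChannel: #deployments\n"
        "Text: Can you deploy the backend?\n</slack_message>",
        '<list_git_tags>\nintent: "list_git_tags"\n</list_git_tags>',
    ],
)
print(messages[1]["content"])
```

Keeping the question in the template means it is present even when the event list is empty.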

Code example

We can build it with code similar to the following:

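    from __future__ import annotations  # the payload classes named below live elsewhere

    from dataclasses import asdict, dataclass
    from typing import List, Literal

    import yaml  # PyYAML

    @dataclass
    class Event:
        # could just use a string, or be explicit; up to you
        type: Literal["list_git_tags", "deploy_backend", "deploy_frontend", "request_more_information", "done_for_now", "list_git_tags_result", "deploy_backend_result", "deploy_frontend_result", "request_more_information_result", "done_for_now_result", "error"]
        data: (ListGitTags | DeployBackend | DeployFrontend | RequestMoreInformation |
               ListGitTagsResult | DeployBackendResult | DeployFrontendResult |
               RequestMoreInformationResult | str)

    @dataclass
    class Thread:
        events: List[Event]

    def stringify_to_yaml(data) -> str:
        # Assumption: structured payloads are dataclasses or plain dicts
        payload = data if isinstance(data, dict) else asdict(data)
        return yaml.safe_dump(payload, sort_keys=False).rstrip()

    def event_to_prompt(event: Event) -> str:
        # Strings pass through untouched; structured payloads are rendered as YAML
        data = event.data if isinstance(event.data, str) else stringify_to_yaml(event.data)
        return f"<{event.type}>\n{data}\n</{event.type}>"

    def thread_to_prompt(thread: Thread) -> str:
        return "\n\n".join(event_to_prompt(event) for event in thread.events)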

Context Window Example

Using this approach, the context window might look like the following:

Initial Slack request:

    <slack_message>
      From: @alex
      Channel: #deployments
      Text: Can you deploy the latest backend to production?
    </slack_message>

After listing the Git tags:

    <slack_message>
      From: @alex
      Channel: #deployments
      Text: Can you deploy the latest backend to production?
      Thread: []
    </slack_message>

    <list_git_tags>
      intent: "list_git_tags"
    </list_git_tags>

    <list_git_tags_result>
      tags:
        - name: "v1.2.3"
          commit: "abc123"
          date: "2024-03-15T10:00:00Z"
        - name: "v1.2.2"
          commit: "def456"
          date: "2024-03-14T15:30:00Z"
        - name: "v1.2.1"
          commit: "ghi789"
          date: "2024-03-13T09:15:00Z"
    </list_git_tags_result>

After an error and recovery:

    <slack_message>
      From: @alex
      Channel: #deployments
      Text: Can you deploy the latest backend to production?
      Thread: []
    </slack_message>

    <deploy_backend>
      intent: "deploy_backend"
      tag: "v1.2.3"
      environment: "production"
    </deploy_backend>

    <error>
      Error running deploy_backend: failed to connect to the deployment service
    </error>

    <request_more_information>
      intent: "request_more_information_from_human"
      question: "I had trouble connecting to the deployment service. Can you provide more details and/or check the status of the service?"
    </request_more_information>

    <human_response>
      data:
        response: "I'm not sure what's going on. Can you check the status of the latest workflow?"
    </human_response>

From here, your next step may be:

    next_step = await determine_next_step(thread_to_prompt(thread))

    {
      "intent": "get_workflow_status",
      "workflow_name": "tag_push_prod.yaml"
    }
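
The step the model returns still has to be checked and fed back into the thread. Below is a minimal sketch of that loop, assuming the model replies with a JSON object carrying an `intent` field. `KNOWN_INTENTS`, `parse_next_step`, and `next_step_to_event` are hypothetical names used only for illustration.

```python
import json
from typing import Any, Dict, Set

# Hypothetical set of intents; the real list comes from your own tool definitions.
KNOWN_INTENTS: Set[str] = {
    "list_git_tags",
    "deploy_backend",
    "get_workflow_status",
    "request_more_information",
    "done_for_now",
}


def parse_next_step(raw: str) -> Dict[str, Any]:
    """Parse the model's JSON reply and check that it names a known intent."""
    step = json.loads(raw)
    if not isinstance(step, dict):
        raise ValueError("next step must be a JSON object")
    intent = step.get("intent")
    if intent not in KNOWN_INTENTS:
        raise ValueError(f"unknown intent: {intent!r}")
    return step


def next_step_to_event(step: Dict[str, Any]) -> str:
    """Render the chosen step as an event block to append to the thread."""
    intent = step["intent"]
    lines = [f"{key}: {json.dumps(value)}" for key, value in step.items()]
    return f"<{intent}>\n" + "\n".join(lines) + f"\n</{intent}>"


raw_reply = '{"intent": "get_workflow_status", "workflow_name": "tag_push_prod.yaml"}'
step = parse_next_step(raw_reply)
print(next_step_to_event(step))
```

Rejecting unknown intents before acting on them keeps a malformed reply from silently turning into a tool call.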

This XML-like format is just one example. The point is that you can build a custom format that fits your application. If you have the flexibility to experiment with different context structures, and to decide what to store versus what to pass to the large language model, you'll get better results.
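
One simple experiment is to render the same tool result in two formats and compare their sizes. The sketch below is a rough, hypothetical comparison: it uses character counts as a crude stand-in for tokens (real counts depend on the model's tokenizer), and the helper names `as_tool_message` and `as_custom_block` are made up for this example.

```python
import json
from typing import Dict, List

Tag = Dict[str, str]

TAGS: List[Tag] = [
    {"name": "v1.2.3", "commit": "abc123", "date": "2024-03-15T10:00:00Z"},
    {"name": "v1.2.2", "commit": "def456", "date": "2024-03-14T15:30:00Z"},
]


def as_tool_message(tags: List[Tag]) -> str:
    """Standard form: the result is JSON-encoded, then embedded as a string."""
    message = {
        "role": "tool",
        "name": "list_git_tags",
        "content": json.dumps({"tags": tags}),
        "tool_call_id": "1",
    }
    return json.dumps(message)


def as_custom_block(tags: List[Tag]) -> str:
    """Custom form: an XML-like wrapper around YAML-style fields."""
    lines = ["<list_git_tags_result>", "tags:"]
    for tag in tags:
        lines.append("  - name: " + json.dumps(tag["name"]))
        lines.append("    commit: " + json.dumps(tag["commit"]))
        lines.append("    date: " + json.dumps(tag["date"]))
    lines.append("</list_git_tags_result>")
    return "\n".join(lines)


standard = as_tool_message(TAGS)
custom = as_custom_block(TAGS)
print(len(standard), len(custom))
```

Much of the difference comes from the escaped quotes in the doubly encoded JSON string. A proper measurement would run both strings through the tokenizer for the model you actually use.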

The main benefits of owning your context window:

- Information density: structure information in the way that maximizes the model's understanding

- Error handling: include error information in a format that helps the model recover. Once errors and failed calls are resolved, consider hiding them from the context window.

- Safety: control what information is passed to the model, filtering out sensitive data

- Flexibility: adapt the format as you learn what works best for your use case

- Token efficiency: optimize the context format for both token efficiency and model comprehension
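
Two of these points, hiding resolved errors and filtering sensitive data, can be sketched as a small pre-processing step that runs before the thread is rendered. Everything here is an assumption for illustration: the `Event` shape, the rule that a failed call counts as resolved once a later `<type>_result` event exists, and the deliberately simple redaction pattern.

```python
import re
from dataclasses import dataclass
from typing import List


@dataclass
class Event:
    type: str
    data: str


# Illustrative pattern only; real secret detection needs a broader rule set.
SECRET_PATTERN = re.compile(r"(?i)\b(token|password|api_key)\s*[:=]\s*\S+")


def redact(text: str) -> str:
    """Replace the value of anything that looks like a credential."""
    return SECRET_PATTERN.sub(lambda m: f"{m.group(1)}: [REDACTED]", text)


def hide_resolved_errors(events: List[Event]) -> List[Event]:
    """Drop a failed call and its error once the same call later succeeds."""
    kept: List[Event] = []
    for i, event in enumerate(events):
        is_failed_call = i + 1 < len(events) and events[i + 1].type == "error"
        is_error = event.type == "error" and i > 0
        if is_failed_call:
            call_type = event.type
        elif is_error:
            call_type = events[i - 1].type
        else:
            call_type = None
        succeeded_later = call_type is not None and any(
            later.type == f"{call_type}_result" for later in events[i + 1:]
        )
        if succeeded_later:
            continue
        kept.append(event)
    return kept


def render(events: List[Event]) -> str:
    visible = hide_resolved_errors(events)
    return "\n\n".join(f"<{e.type}>\n{redact(e.data)}\n</{e.type}>" for e in visible)


thread = [
    Event("slack_message", "From: @alex\nText: Deploy the backend, token=abc123"),
    Event("deploy_backend", 'tag: "v1.2.3"'),
    Event("error", "failed to connect to the deployment service"),
    Event("deploy_backend", 'tag: "v1.2.3"'),
    Event("deploy_backend_result", "status: success"),
]
print(render(thread))
```

The stored thread stays complete. Only the view passed to the model is trimmed and redacted, which keeps the decision about what to store separate from the decision about what to send.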

Context includes: prompts, instructions, RAG documents, history, tool calls, memory

Remember: the context window is your primary interface to the large language model. Taking control of how you organize and present information can significantly improve your agent's performance.

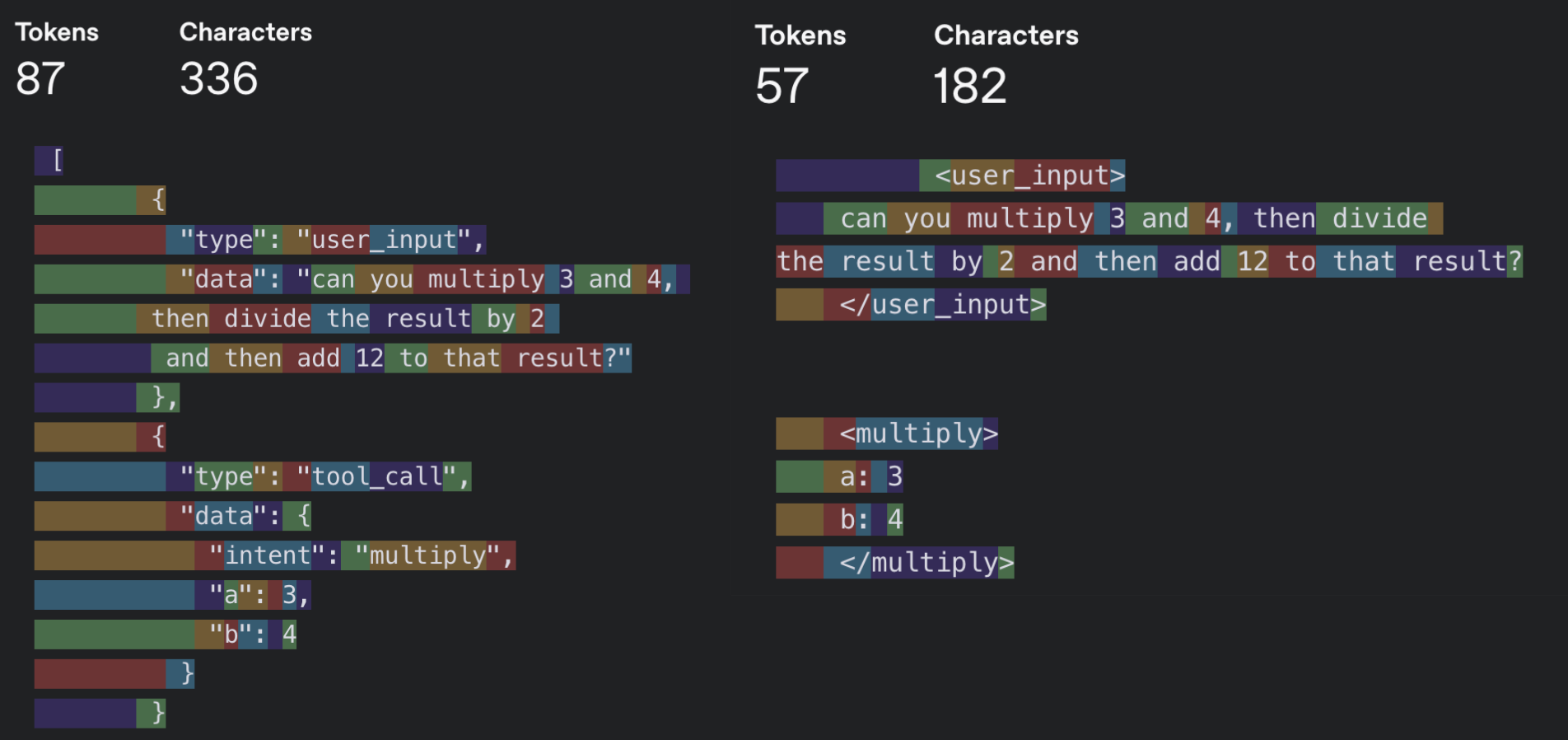

Example of information density: the same message in fewer tokens:

Don't take my word for it.

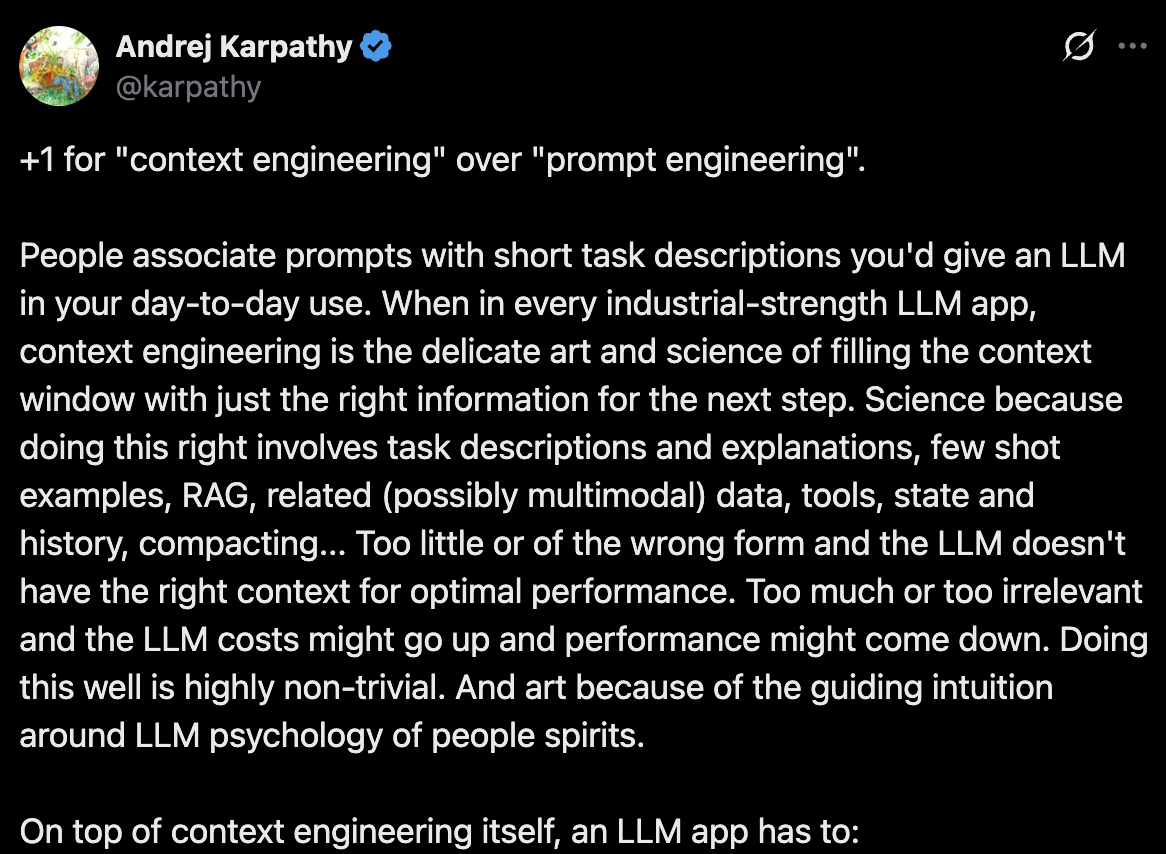

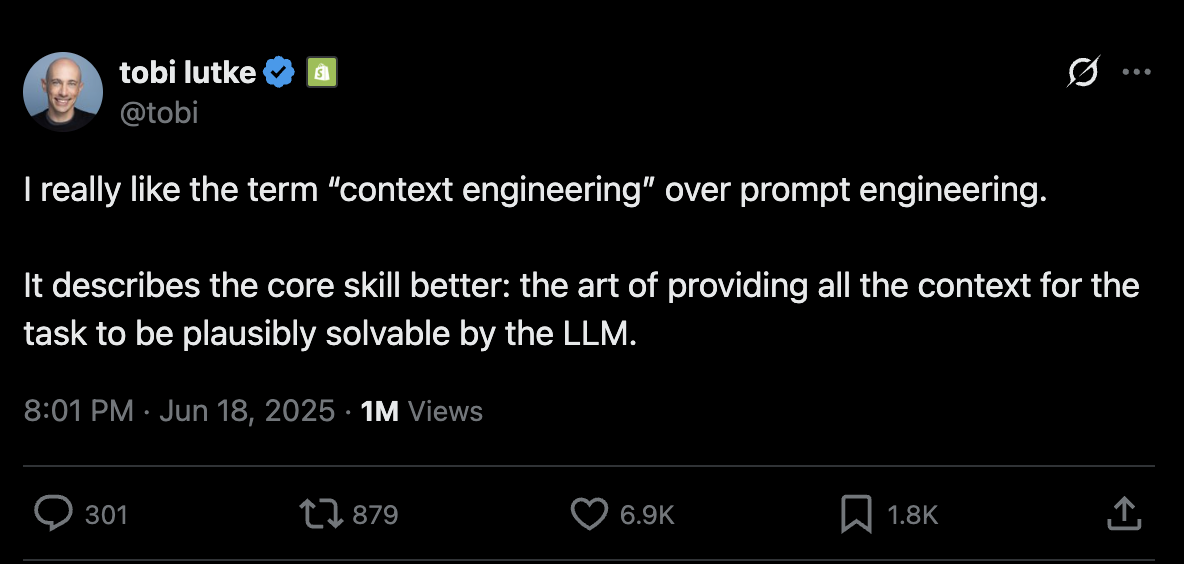

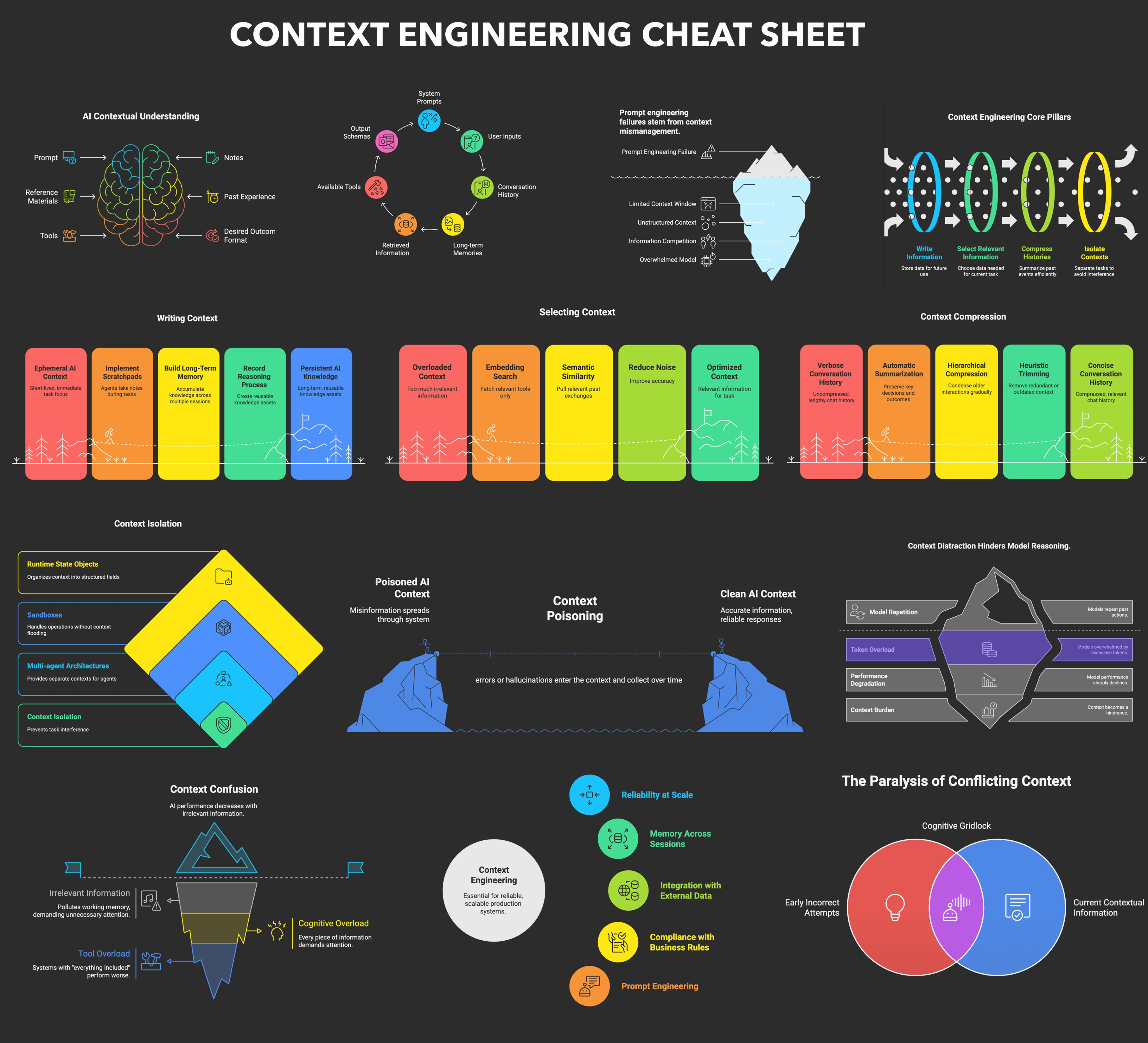

About 2 months after the release of 12-Factor Agents, context engineering started to become a fairly popular term.

In addition, @lenadroid released a pretty good context engineering quick-reference sheet in July 2025.

The recurring theme here is that I don't know what the best approach is, but I do know that you need to have the flexibility to be able to try all the possibilities.