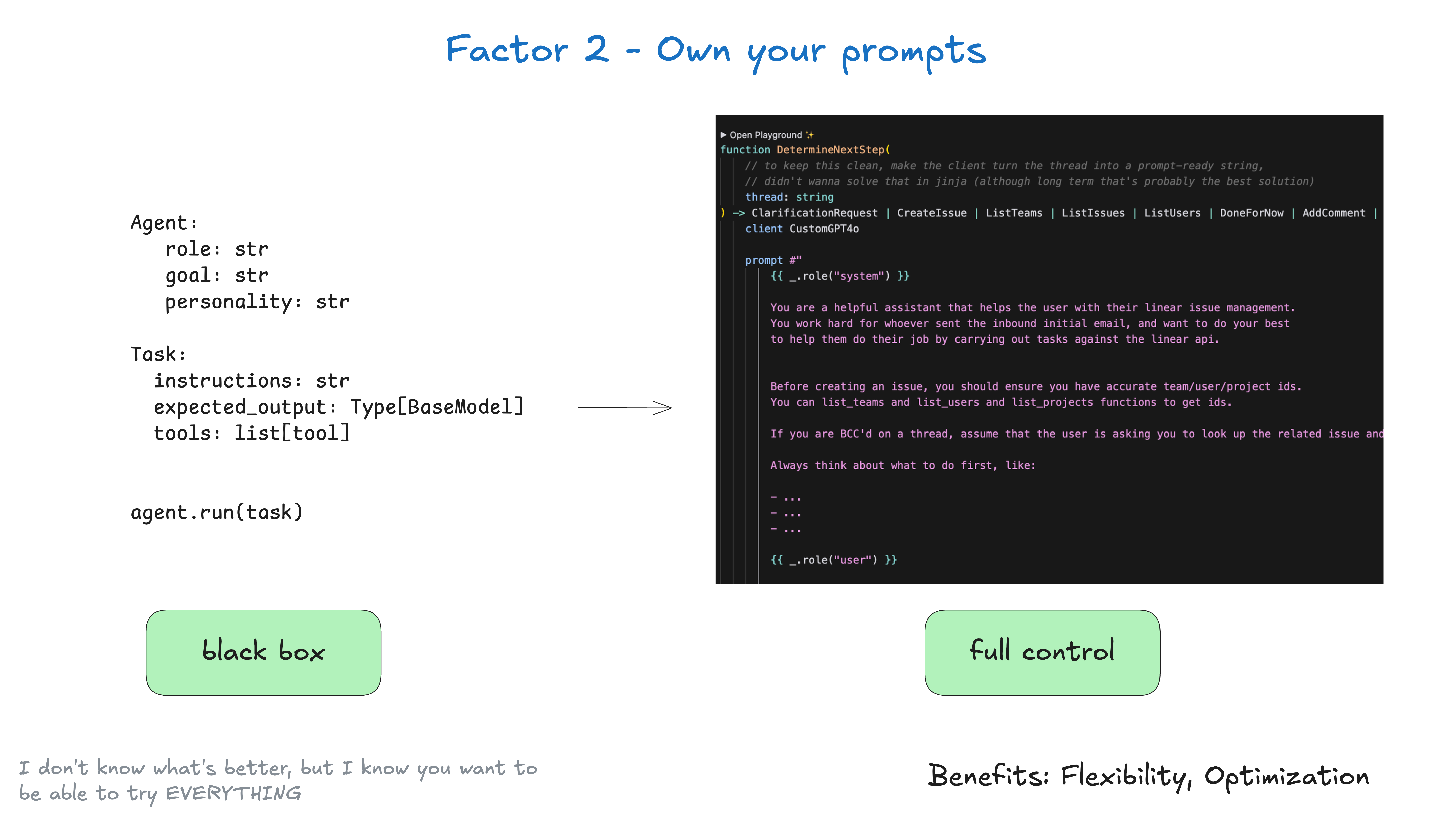

Don't outsource your prompt engineering to a framework.

By the way, this is far from a novel suggestion.

Some frameworks provide a "black box" approach like this:

    agent = Agent(
        role="...",
        goal="...",
        personality="...",
        tools=[tool1, tool2, tool3]
    )

    task = Task(
        instructions="...",
        expected_output=OutputModel
    )

    result = agent.run(task)

This is useful for pulling in some top-notch prompt engineering to get you started, but it is often difficult to tune or reverse-engineer when you need to get exactly the right tokens into your model.

Instead, you should take control of your prompts and treat them as first-class code:

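    function DetermineNextStep(thread: string) -> DoneForNow | ListGitTags | DeployBackend | DeployFrontend | RequestMoreInformation {
      prompt #"
        {{ _.role("system") }}

        You are a helpful assistant that manages deployments for frontend and backend systems.
        You work diligently to ensure safe and successful deployments by following best practices
        and proper deployment procedures.

        Before deploying any system, you should check:
        - The deployment environment (staging vs production)
        - The correct tag/version to deploy
        - The current system state

        You can use tools like deploy_backend, deploy_frontend, and check_deployment_status
        to manage deployments. For sensitive deployments, use request_approval to get
        human verification.

        Always think about what to do first, for example:
        - Check the current deployment status
        - Verify that the deployment tag exists
        - Request approval if needed
        - Deploy to staging before deploying to production
        - Monitor deployment progress

        {{ _.role("user") }}

        {{ thread }}

        What should the next step be?
      "#
    }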

(The above example uses BAML to generate the prompt, but you can do this with any prompt engineering tool you like, or even write the templates by hand.)
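As a rough illustration of the hand-written route, here is a minimal Python sketch. It assumes a chat-style API that takes a list of role/content messages; `SYSTEM_PROMPT`, `USER_TEMPLATE`, and `build_next_step_messages` are hypothetical names, and the system text is a shortened stand-in for the prompt above rather than a recommended wording.

```python
from string import Template

# Hypothetical, shortened stand-in for the system prompt shown above.
SYSTEM_PROMPT = (
    "You are a helpful assistant that manages deployments for frontend "
    "and backend systems.\n"
    "Before deploying any system, check the deployment environment, "
    "the tag/version to deploy, and the current system state."
)

# The thread is the only variable part of the user turn.
USER_TEMPLATE = Template("$thread\n\nWhat should the next step be?")


def build_next_step_messages(thread: str) -> list[dict[str, str]]:
    """Return the exact messages that would be sent to the model."""
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": USER_TEMPLATE.substitute(thread=thread)},
    ]


if __name__ == "__main__":
    # Print the prompt so every token going to the model is visible.
    for message in build_next_step_messages("user: deploy backend v1.2.3 to staging"):
        print(f"[{message['role']}]\n{message['content']}\n")
```

Because the whole prompt lives in one small function, what reaches the model is whatever that function returns, with nothing added by a framework along the way.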

If this function signature looks a bit strange, it is covered in Element 4 - Tools as structured outputs.

    function DetermineNextStep(thread: string) -> DoneForNow | ListGitTags | DeployBackend | DeployFrontend | RequestMoreInformation {

Key benefits of taking control of your input prompts:

- Full control: Write exactly the instructions your agent needs, with no black-box abstractions

- Testing and evaluation: Build tests and evals for your prompts just as you would for any other code

- Iteration: Quickly modify prompts based on real-world performance

- Transparency: Know exactly which instructions your agent is working with

- Role hacking: Take advantage of APIs that support non-standard use of user/assistant roles, for example the now-deprecated non-chat flavor of the OpenAI "completions" API. This includes a number of so-called "model gaslighting" techniques
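Two of these benefits, testing and role hacking, can be sketched together in Python. This is only an illustration under stated assumptions: `build_messages` and its `prefill` parameter are hypothetical, the system text is a placeholder, and the trailing assistant turn is a technique that only some chat APIs accept, so check your provider before relying on it.

```python
from typing import Optional

# Placeholder system prompt for the sketch, not a recommended wording.
SYSTEM_PROMPT = "You manage deployments. Deploy to staging before production."


def build_messages(thread: str, prefill: Optional[str] = None) -> list[dict[str, str]]:
    """Build the message list sent to the model (hypothetical helper)."""
    messages = [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": f"{thread}\n\nWhat should the next step be?"},
    ]
    if prefill is not None:
        # Role hack: end on a partial assistant turn for the model to continue.
        messages.append({"role": "assistant", "content": prefill})
    return messages


def test_thread_is_included_verbatim() -> None:
    messages = build_messages("user: deploy backend v1.2.3")
    assert messages[1]["content"].startswith("user: deploy backend v1.2.3")


def test_system_prompt_keeps_staging_rule() -> None:
    # Guards against an edit that silently drops a safety instruction.
    assert "staging before production" in build_messages("x")[0]["content"]


def test_prefill_becomes_last_assistant_turn() -> None:
    messages = build_messages("x", prefill='{"intent": "')
    assert messages[-1] == {"role": "assistant", "content": '{"intent": "'}


if __name__ == "__main__":
    test_thread_is_included_verbatim()
    test_system_prompt_keeps_staging_rule()
    test_prefill_becomes_last_assistant_turn()
    print("all prompt tests passed")
```

Checks like these only cover the prompt's structure. Evaluating how the model actually responds still needs evals against real outputs.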

Remember: Your input prompts are the primary interface between your application logic and the Large Language Model (LLM).

Having full control over your prompts gives you the flexibility and precision you need for production-grade agents.

I don't know what the best prompt is, but I do know you want the flexibility to try everything.