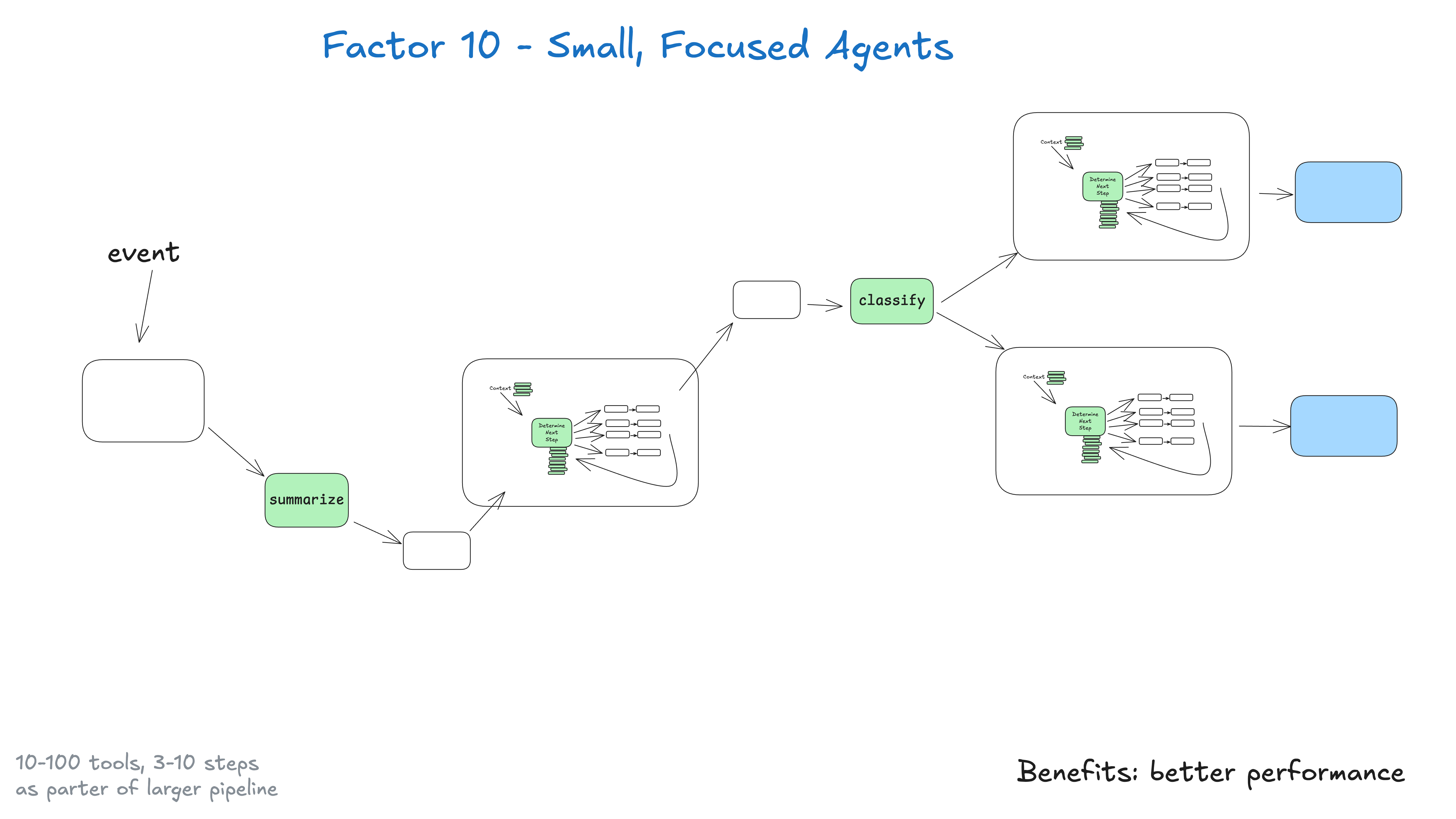

Instead of building monolithic agents that try to do everything, build small, focused agents that each do one thing well. Agents are just one building block in a larger, mostly deterministic system.

The key insight here is a limitation of large language models: the larger and more complex a task, the more steps it takes, and the longer the context window becomes. As the context grows, the model is more likely to get lost or lose focus. Keeping each agent focused on a specific domain, with 3-10 and perhaps at most 20 steps, keeps the context window manageable and the model's performance high.

As context grows, large language models are more likely to get lost or lose focus
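The step budget described above can be enforced in code rather than left to the model. The following is a minimal sketch, not any framework's API: `Step`, `FocusedAgent`, `choose_next_step`, and the default of 10 steps are all hypothetical names and values chosen for illustration, and `choose_next_step` stands in for a real model call.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Step:
    tool: str           # name of the tool to call, or "done" to finish
    argument: str = ""


@dataclass
class FocusedAgent:
    name: str
    choose_next_step: Callable[[list[str]], Step]  # stand-in for an LLM call
    tools: dict[str, Callable[[str], str]]
    max_steps: int = 10  # assumed budget; the text suggests 3-10, at most ~20

    def run(self, task: str) -> list[str]:
        context = [f"task: {task}"]
        for _ in range(self.max_steps):
            step = self.choose_next_step(context)
            if step.tool == "done":
                return context
            result = self.tools[step.tool](step.argument)
            # Each step adds exactly one line, so the context stays bounded.
            context.append(f"{step.tool}({step.argument}) -> {result}")
        # Budget exhausted: stop instead of letting the context keep growing.
        raise RuntimeError(f"{self.name} exceeded {self.max_steps} steps")


def scripted_model(context: list[str]) -> Step:
    # Stub in place of a real model: look up once, then finish.
    return Step("lookup", "order-42") if len(context) == 1 else Step("done")


agent = FocusedAgent("order_status", scripted_model, {"lookup": lambda order_id: "shipped"})
history = agent.run("Where is order-42?")
```

Raising when the budget runs out is one possible design choice; the surrounding deterministic code could instead escalate to a human or hand the task to a different agent.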

The advantages of small, focused agents:

- Controlled context: Smaller context windows mean better performance from large language models

- Clear responsibilities: Each agent has a clearly defined scope and purpose

- Higher reliability: Less likely to get lost in complex workflows

- Easier to test: Specific functionality is simpler to test and validate

- Improved debugging: Easier to identify and fix problems when they occur
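To make the scope and testability points concrete, here is a hedged sketch of a single-purpose agent and a test for it. The ticket-triage task, `ALLOWED_LABELS`, and `triage_agent` are invented for illustration; `model` is any callable that takes a prompt and returns text, so a test can pass a stub instead of calling a real model.

```python
from typing import Callable

ALLOWED_LABELS = {"billing", "shipping", "other"}  # hypothetical, narrow scope


def triage_agent(ticket: str, model: Callable[[str], str]) -> str:
    """Single-purpose agent: map a support ticket to one known label."""
    prompt = f"Classify this ticket as one of {sorted(ALLOWED_LABELS)}:\n{ticket}"
    label = model(prompt).strip().lower()
    # Deterministic guard: anything outside the agent's scope becomes "other".
    return label if label in ALLOWED_LABELS else "other"


def test_triage_agent() -> None:
    # Stubbed model output is normalized to a known label.
    assert triage_agent("I was charged twice", lambda prompt: " Billing\n") == "billing"
    # Out-of-scope output is caught by the guard, not passed downstream.
    assert triage_agent("???", lambda prompt: "refund-policy") == "other"


test_triage_agent()
```

Because the agent has one job and a closed set of outputs, two assertions cover both its normal path and its failure path.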

What if large language models get smarter?

If large language models become smart enough to handle workflows of 100 or more steps, is this approach still needed?

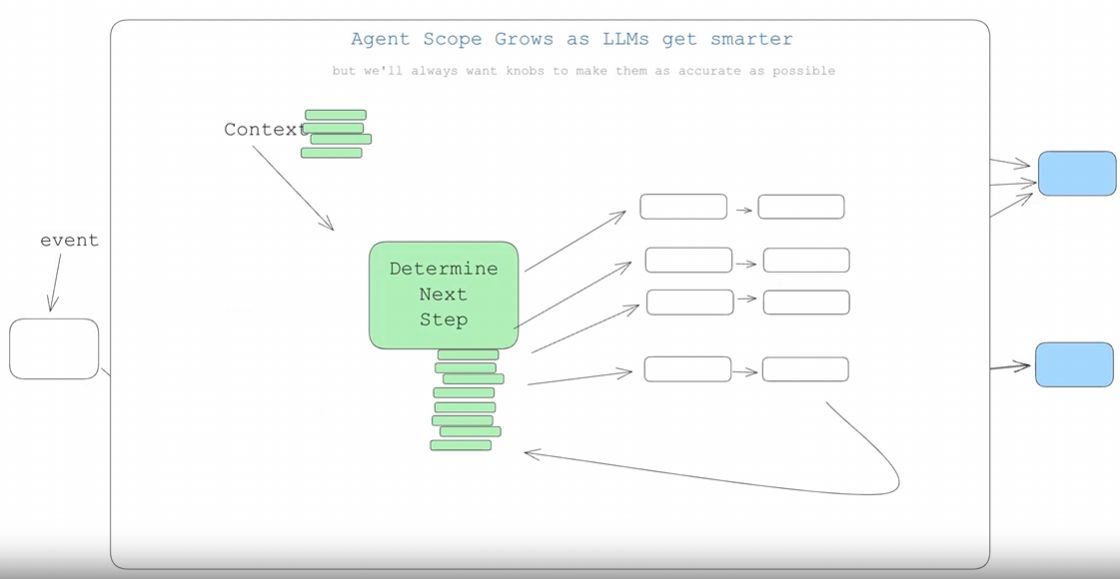

Long story short: yes. As agents and large language models improve, they will probably expand naturally to handle longer context windows, which means handling more of a larger DAG. The small, focused approach gets you results today and prepares you to widen each agent's scope gradually as long-context performance becomes more reliable. (If you've refactored a large deterministic code base before, you're probably nodding your head right now.)
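One way to picture "handling more of a larger DAG" is to keep the workflow graph fixed and change only which nodes an agent owns. The sketch below uses the standard-library `graphlib` module; the workflow, its node names, and the `plan` helper are hypothetical examples, not taken from a real system.

```python
from graphlib import TopologicalSorter

# Hypothetical workflow: each node maps to the set of nodes it depends on.
workflow = {
    "fetch_pr": set(),
    "run_tests": {"fetch_pr"},
    "summarize_changes": {"fetch_pr"},
    "decide_rollout": {"run_tests", "summarize_changes"},
    "deploy": {"decide_rollout"},
}


def plan(graph: dict[str, set[str]], agent_nodes: set[str]) -> list[tuple[str, str]]:
    """Return (node, owner) pairs in dependency order."""
    order = TopologicalSorter(graph).static_order()
    return [(node, "agent" if node in agent_nodes else "code") for node in order]


# Today: the agent owns a single node; everything else is deterministic code.
today = plan(workflow, agent_nodes={"summarize_changes"})

# Later, once a wider scope proves reliable: the agent takes on one more node.
later = plan(workflow, agent_nodes={"summarize_changes", "decide_rollout"})
```

The graph itself does not change between `today` and `later`; only the ownership set grows, which is what makes the expansion gradual and reversible.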

The key is to control the size and scope of each agent deliberately, and to expand only in ways that maintain quality. As the team that built NotebookLM put it:

"I think the most magical moments in building AI always come when I'm very, very, very close to the edge of the model's capabilities."

Wherever that boundary is, if you can find it and get it right consistently, you can build amazing experiences. There are many moats that can be built here, but as always, it requires a certain amount of engineering rigor.