The longer version: how we got here

You don't have to listen to me.

Whether you're new to agents or a grumpy veteran like me, I'm going to try to convince you to throw out most of what you think you know about AI agents, take a step back, and rethink them from first principles. (In case you missed the OpenAI API releases a couple of weeks ago, here's the spoiler: pushing more agent logic behind an API is not the right direction.)

Agents as software, and a brief history of them

Let's talk about how we got here.

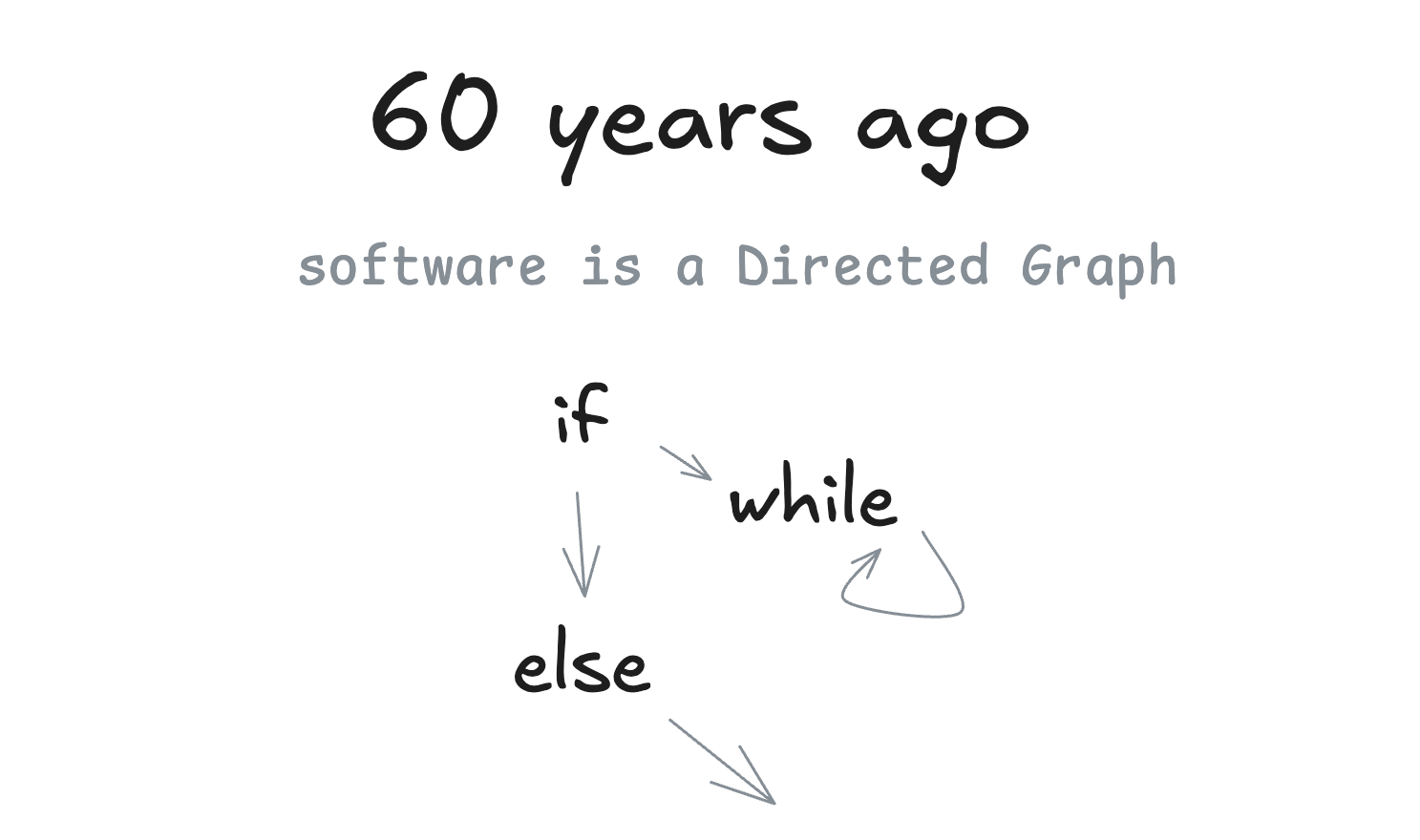

60 years ago

We're going to talk a lot about directed graphs (DGs) and their acyclic friends, directed acyclic graphs (DAGs). The first thing I want to point out is that ... well ... software is a directed graph. There's a reason we used to represent programs as flowcharts.

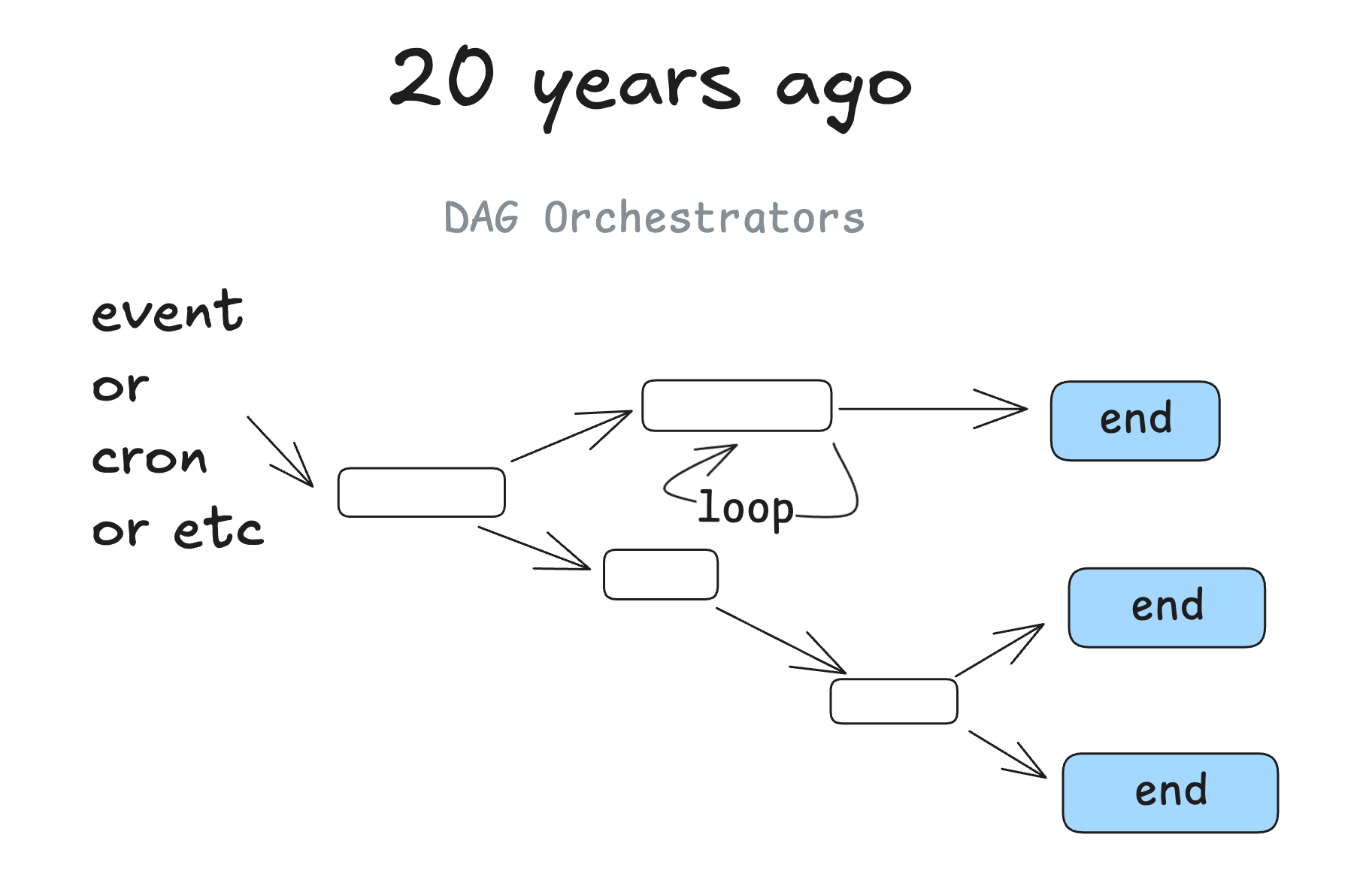

20 years ago

About 20 years ago, we started to see DAG orchestrators become popular. We're talking about classics like Airflow and Prefect, some predecessors, and some newer tools (Dagster, Inngest, Windmill). They follow the same graph pattern, with the added benefits of observability, modularity, retries, and administration.
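To make the graph pattern concrete, here is a minimal sketch of running a DAG in dependency order with Python's standard-library `graphlib`. The task names (`extract`, `transform`, `load`) and the `run_dag` helper are hypothetical, chosen only for illustration; real orchestrators layer scheduling, retries, and observability on top of this basic idea.

```python
from graphlib import TopologicalSorter

def run_dag(tasks, dependencies):
    """Run each task once, after all of its upstream tasks have finished."""
    results = {}
    # static_order() yields nodes so that dependencies come before dependents
    for name in TopologicalSorter(dependencies).static_order():
        upstream = {dep: results[dep] for dep in dependencies.get(name, ())}
        results[name] = tasks[name](upstream)
    return results

# Hypothetical three-step pipeline: extract -> transform -> load
tasks = {
    "extract": lambda up: [1, 2, 3],
    "transform": lambda up: [x * 10 for x in up["extract"]],
    "load": lambda up: f"loaded {len(up['transform'])} rows",
}
# Each key maps a task to the set of tasks it depends on
dependencies = {"extract": set(), "transform": {"extract"}, "load": {"transform"}}

results = run_dag(tasks, dependencies)
print(results["load"])
```

Every node and every edge here is written by hand, which is exactly the property the rest of this section questions.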

10-15 years ago

When machine learning models started to get good enough to be useful, we started to see them sprinkled into DAGs. Think of steps like "summarize the text in this column into a new column" or "classify support issues by severity or sentiment".

But at the end of the day, it's still essentially the same old deterministic software.

The promise of agents

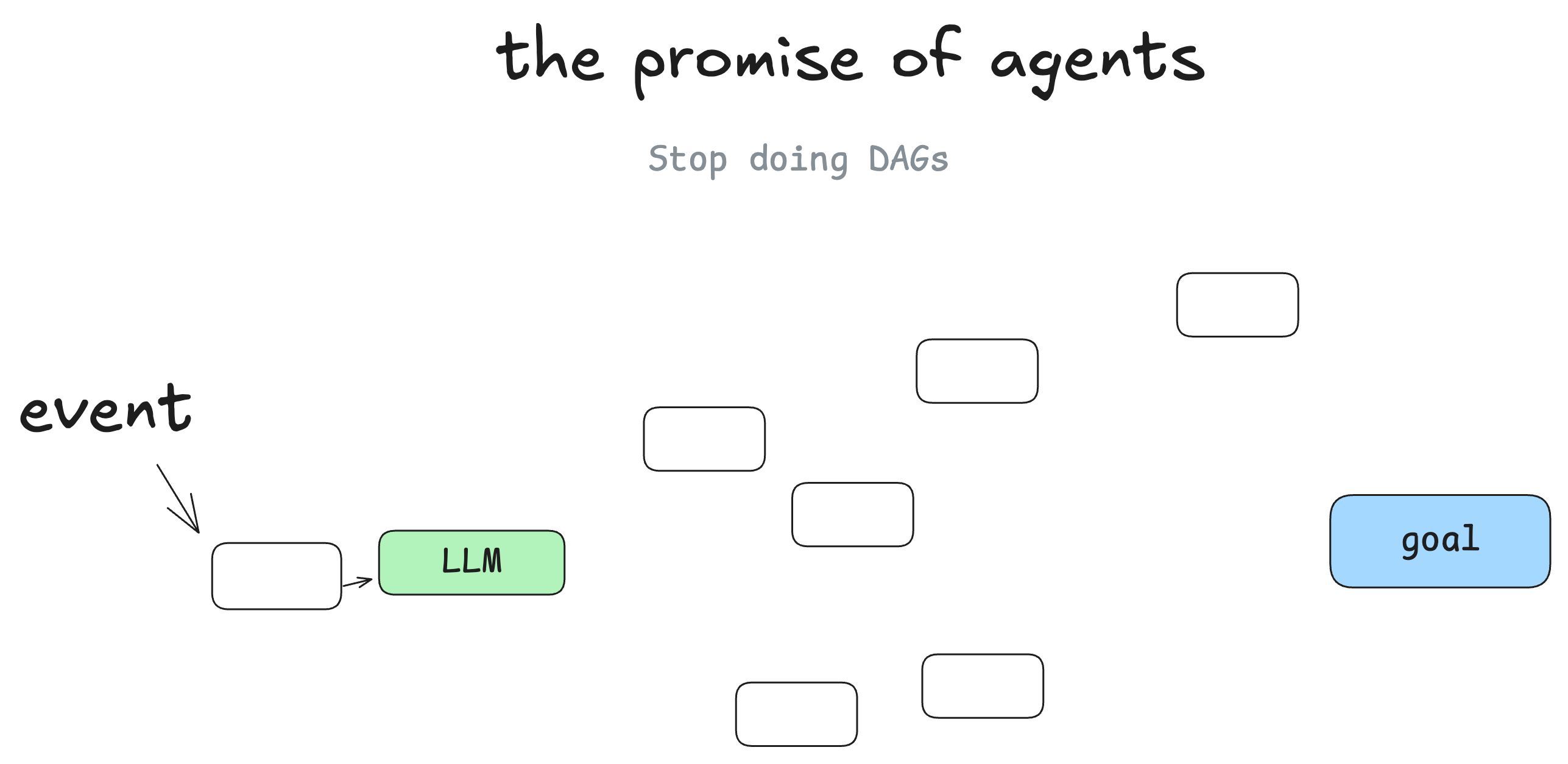

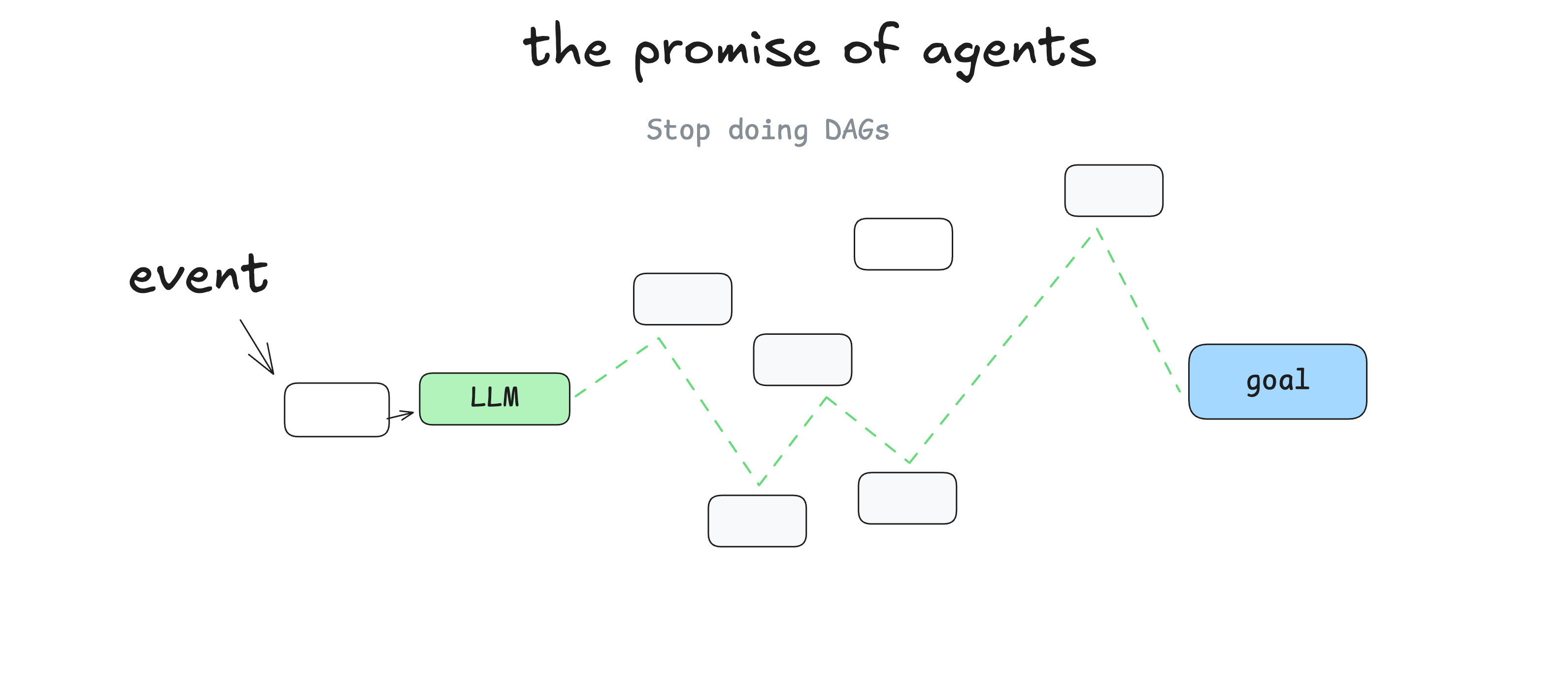

I'm not the first person to say this, but the biggest takeaway I had when I started learning about agents was that you get to throw the DAG away. Instead of software engineers coding every step and edge case, you can give the agent a goal and a set of transitions:

Then let the LLM make decisions in real time to figure out the path.

The promise here is that you write less software: you just give the LLM the "edges" of the graph and let it figure out the "nodes" on its own. You can recover from errors, you can write less code, and you may find that the LLM comes up with novel solutions to problems.

Agents as loops

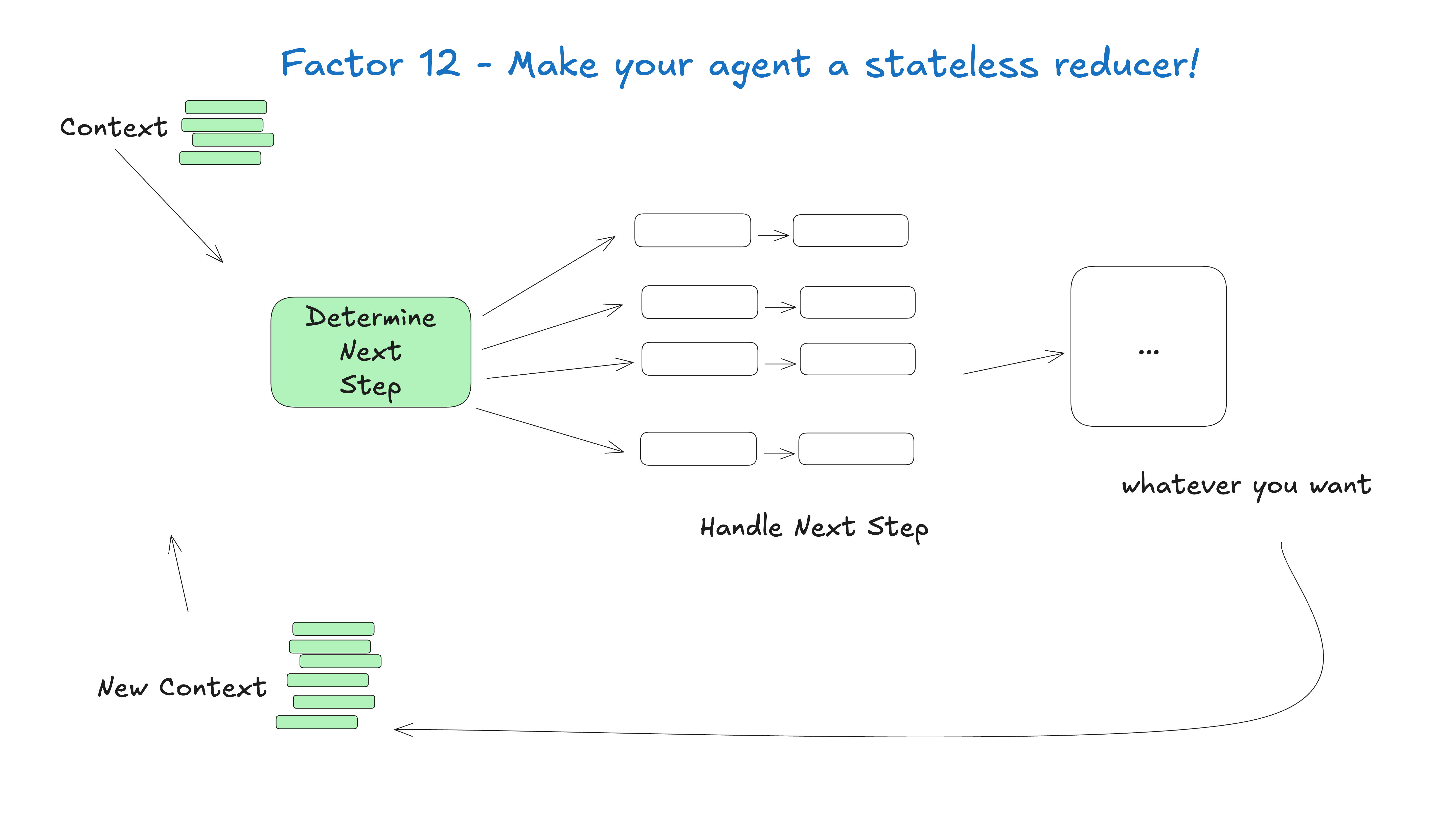

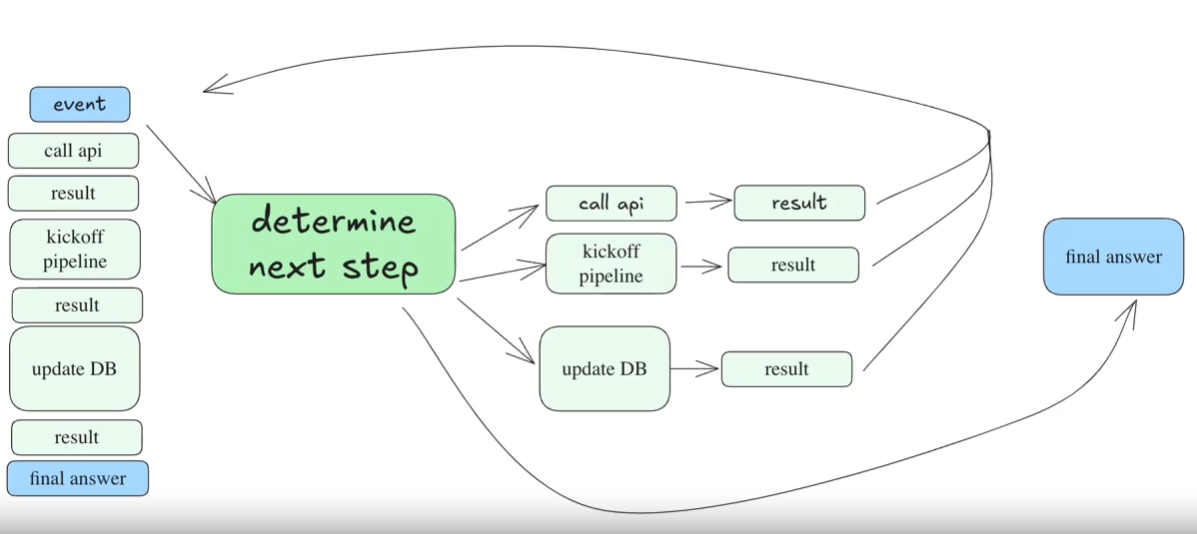

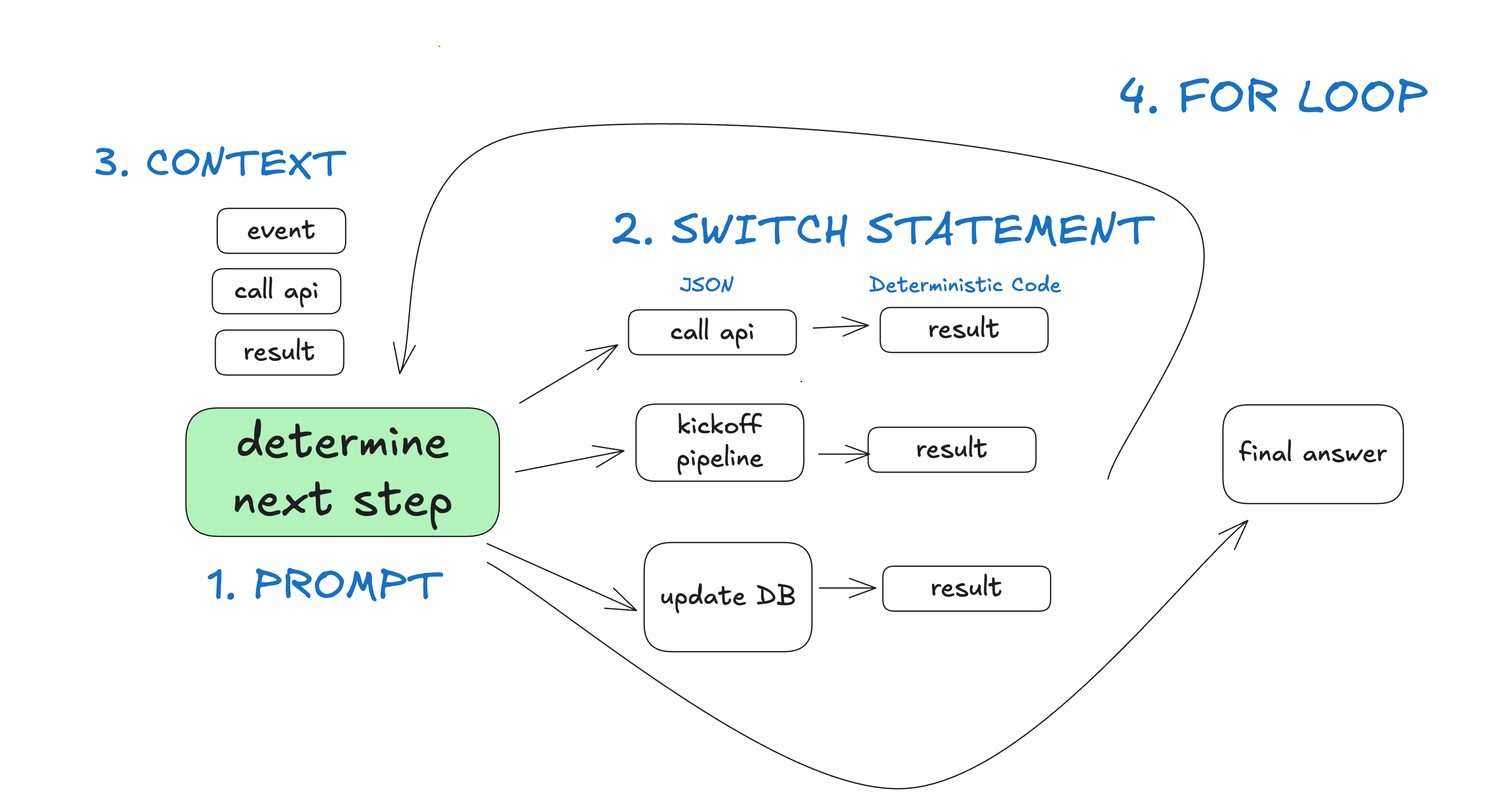

In other words, you have a loop with three steps that repeats until the task is done:

- The LLM determines the next step in the workflow, outputting structured JSON (a "tool call")

- Deterministic code executes the tool call

- The result is appended to the context window

- Repeat until the next step is determined to be "done"

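In code, that loop looks roughly like this:

    initial_event = {"message": "..."}

    # llm and execute_step are placeholders for your model client and tool runner
    async def run_agent(initial_event):
        context = [initial_event]
        while True:
            # Ask the LLM for the next step, given everything so far
            next_step = await llm.determine_next_step(context)
            context.append(next_step)

            if next_step.intent == "done":
                return next_step.final_answer

            # Deterministic code executes the tool call
            result = await execute_step(next_step)
            context.append(result)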
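For readers who want to run the loop end to end, here is a self-contained sketch with the model swapped for a scripted stand-in. `Step`, `ScriptedLLM`, and the `add` tool are hypothetical names invented for this example; a real agent would call an actual LLM inside `determine_next_step`.

```python
import asyncio
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class Step:
    intent: str                                  # a tool name, or "done"
    args: dict = field(default_factory=dict)
    final_answer: Optional[str] = None

class ScriptedLLM:
    """Stand-in for a real model: replays a fixed list of steps."""
    def __init__(self, steps):
        self._steps = iter(steps)

    async def determine_next_step(self, context):
        return next(self._steps)

async def execute_step(step):
    # Deterministic code: the only "tool" in this sketch adds two numbers
    if step.intent == "add":
        return {"result": step.args["a"] + step.args["b"]}
    raise ValueError(f"unknown tool: {step.intent}")

async def run_agent(llm, initial_event):
    context = [initial_event]
    while True:
        next_step = await llm.determine_next_step(context)
        context.append(next_step)
        if next_step.intent == "done":
            return next_step.final_answer, context
        context.append(await execute_step(next_step))

llm = ScriptedLLM([
    Step("add", {"a": 2, "b": 3}),
    Step("done", final_answer="2 + 3 = 5"),
])
answer, context = asyncio.run(run_agent(llm, {"message": "what is 2 + 3?"}))
print(answer)
```

Note that the context grows by two entries per tool call (the call and its result), which is where the long-context trouble discussed later comes from.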

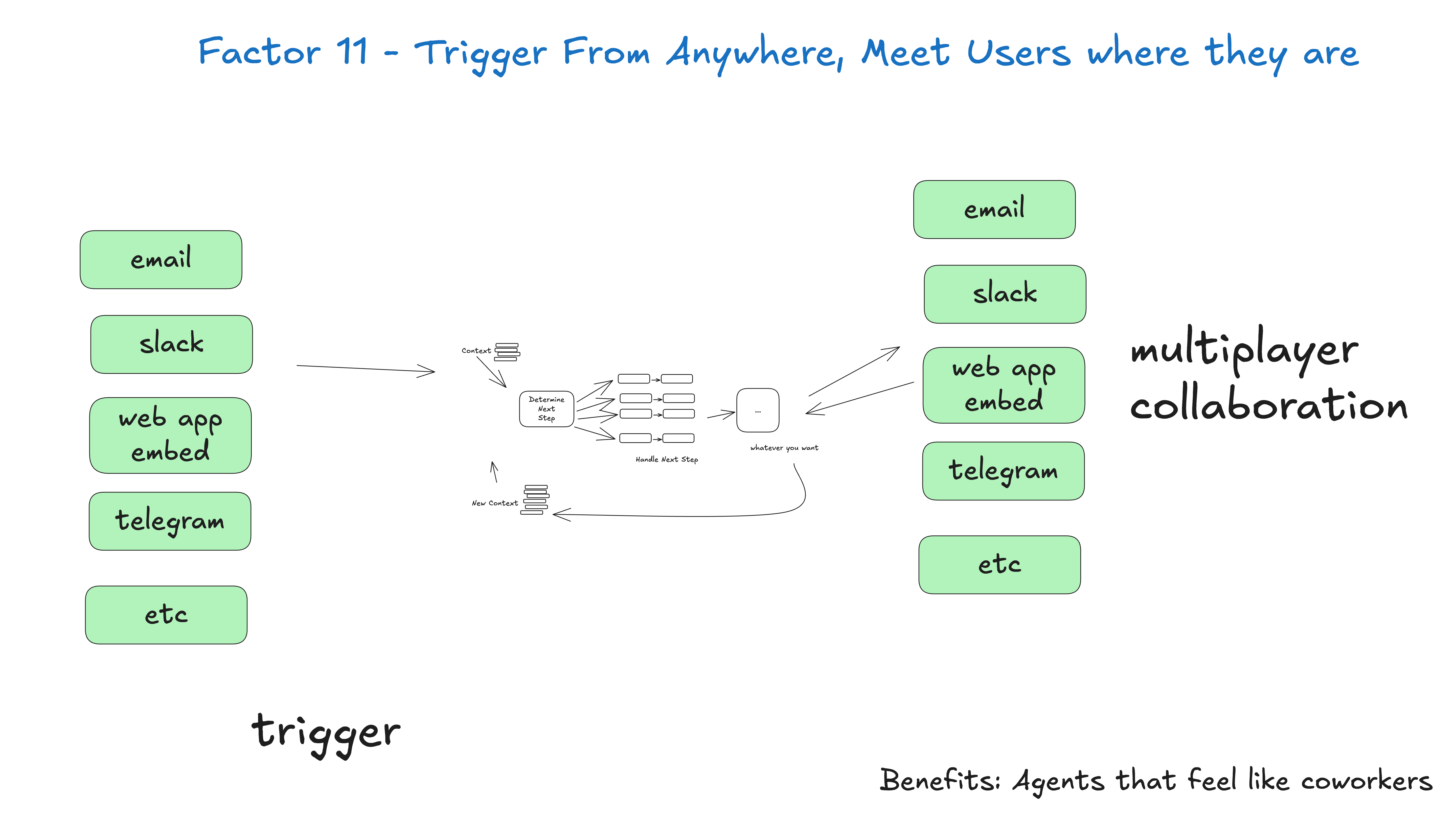

Our initial context is just the starting event (maybe a user message, a cron trigger, a webhook, etc.), and then we ask the LLM to choose the next step (a tool) or to determine that the task is complete.

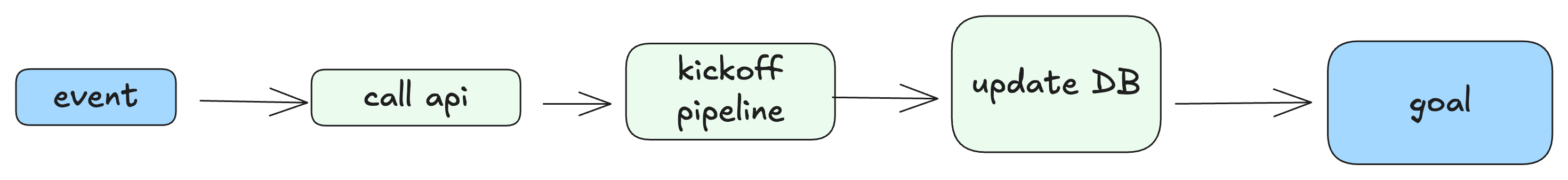

This is a multi-step example:

The resulting "materialized" DAG looks like this:

The problem with this "loop until you solve it" pattern

The biggest problem with this pattern is:

- When the context window gets too long, agents get lost: they keep trying the same broken approach over and over again.

- That's really the only issue, but it's enough to hobble the approach.

Even if you haven't hand-rolled an agent, you've probably seen this long-context problem when using an agentic coding tool. They get lost after a while and you need to start a new chat.

I'd even posit something I've heard quite often, and that you have probably developed your own intuition around:

Even as models support longer and longer context windows, you'll always get better results with a small, focused prompt and context.

Most of the builders I've talked to pushed the "tool calling loop" idea aside when they realized that anything more than 10-20 turns becomes a mess the LLM can't recover from. Even if the agent gets it right 90% of the time, that's still far from "good enough to put in customers' hands". Can you imagine a web app that crashed on 10% of page loads?

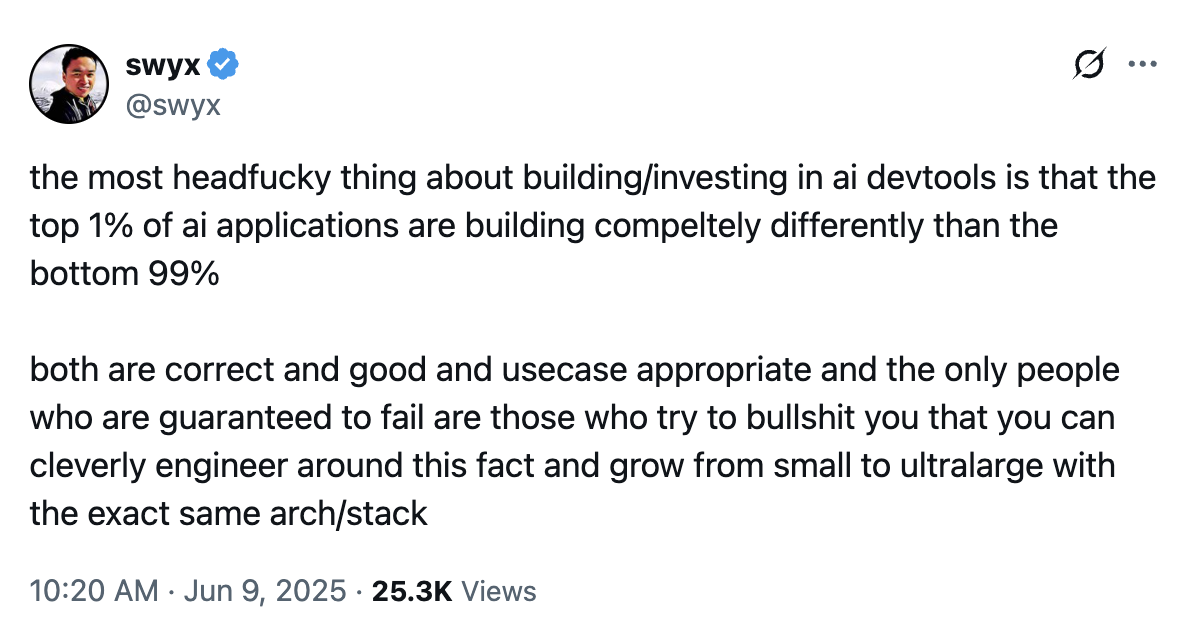

2025-06-09 update: I really like how @swyx put this:

What actually works: micro agents

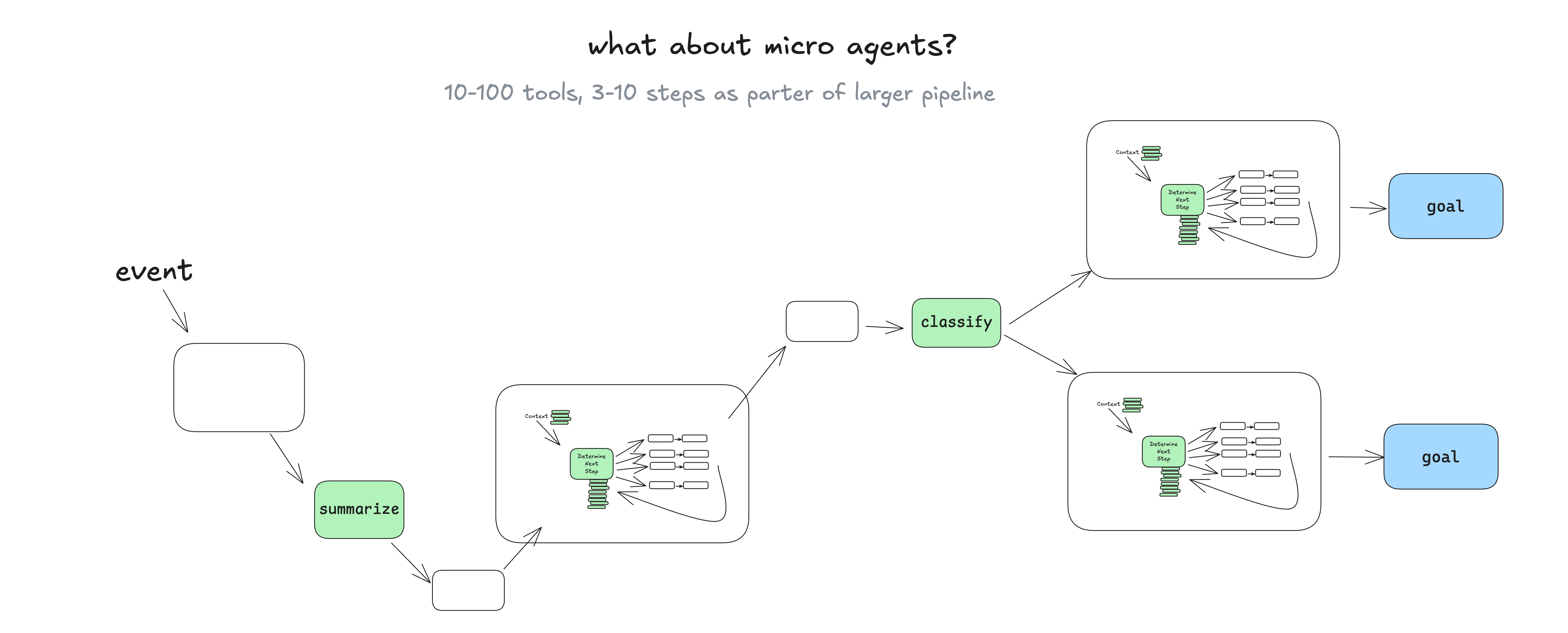

One approach that I do see often in the wild is taking the agent pattern and sprinkling it into a broader, more deterministic DAG.

You might ask, "why use agents at all in this case?" We'll get to that later, but basically, having a language model manage well-scoped sets of tasks makes it easy to incorporate live human feedback and translate it into workflow steps without spinning out into context error loops. (Element 1, Element 3, Element 7)

Having language models manage well-scoped sets of tasks makes it easy to incorporate live human feedback ... without spinning out into context error loops

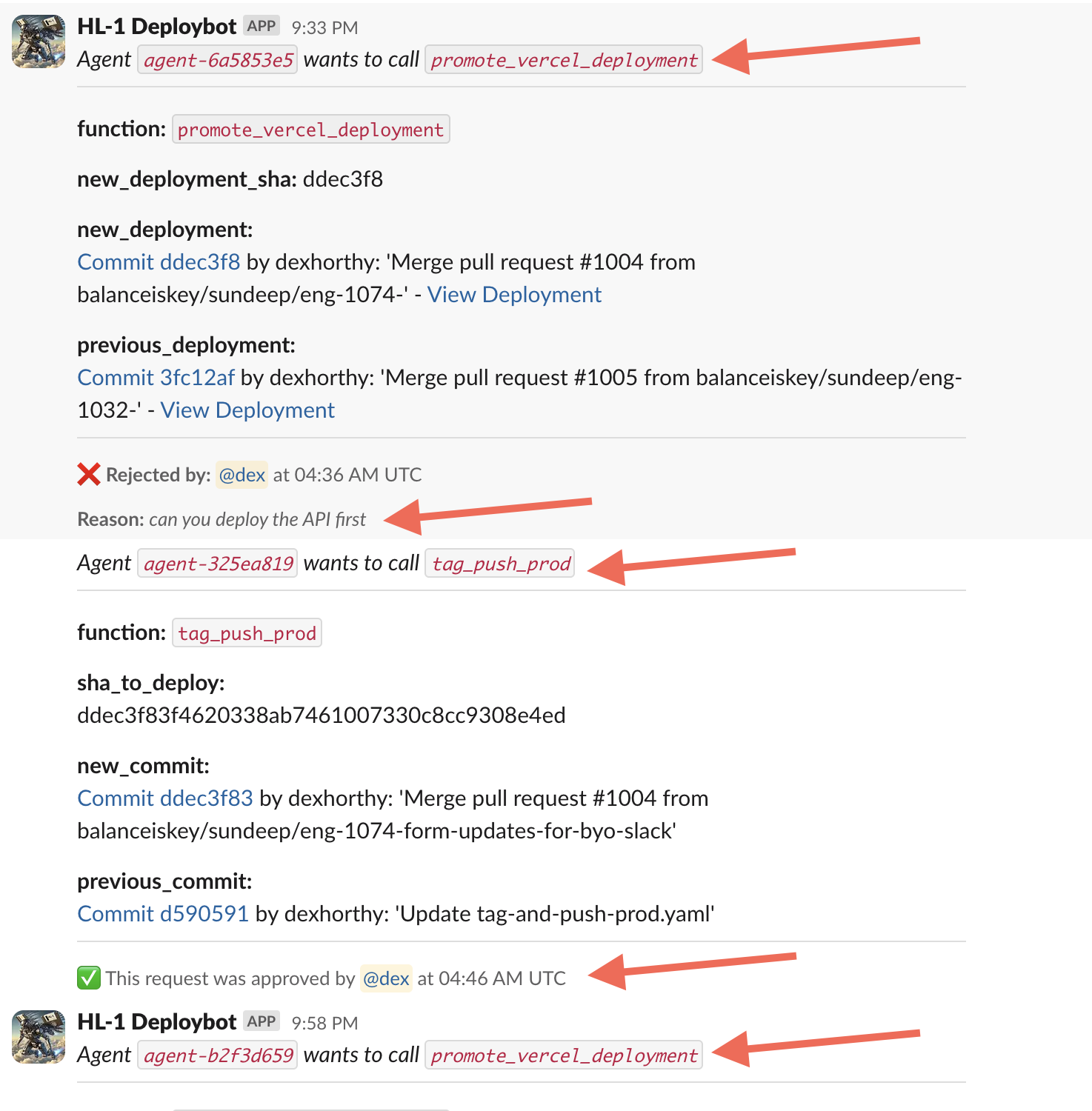

A real-life micro agent

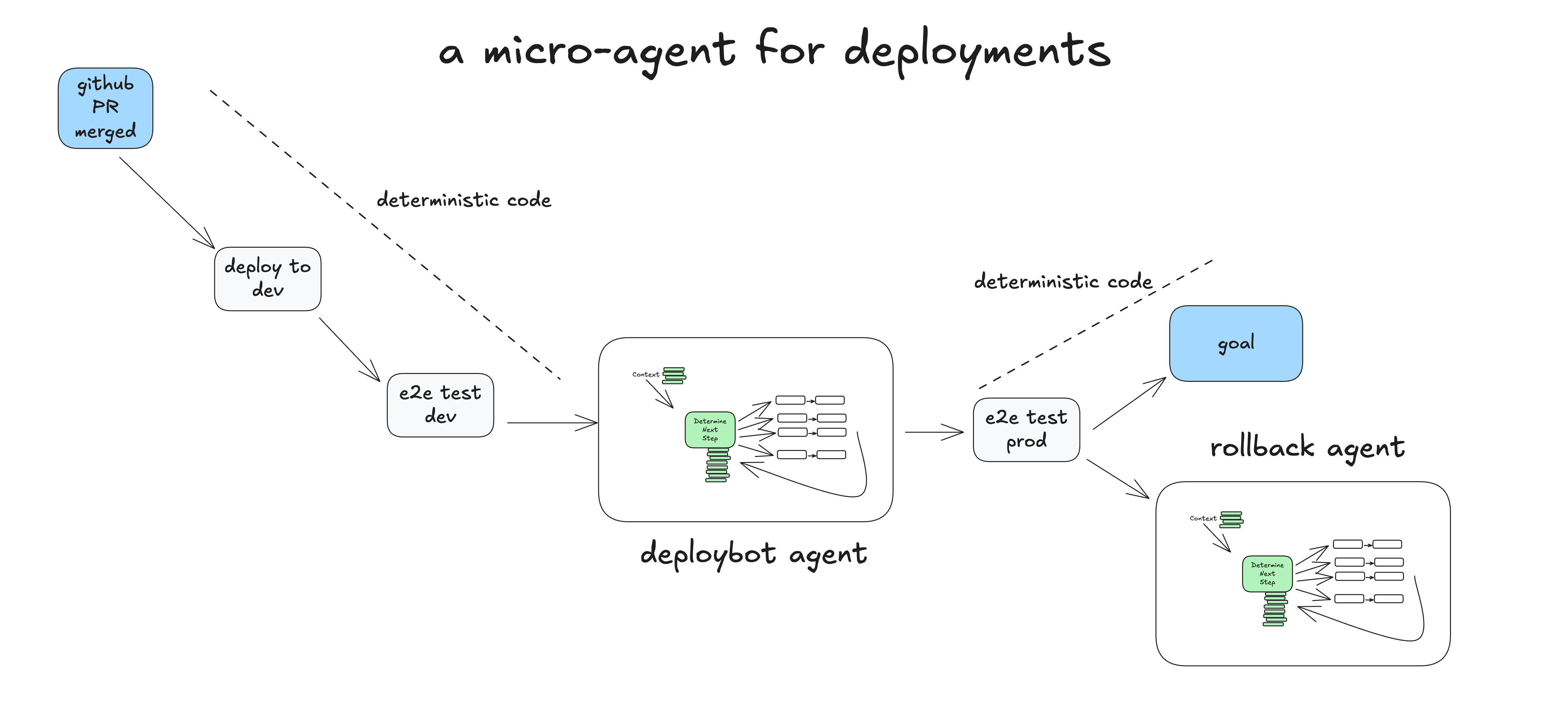

Here's an example of how deterministic code might run one micro agent responsible for the human-in-the-loop steps of a deployment.

- Human: merges a PR to the GitHub main branch

- Deterministic code: deploys to the staging environment

- Deterministic code: runs end-to-end (e2e) tests against staging

- Deterministic code: hands the task to the agent for production deployment, with the initial context "Deploy SHA 4af9ec0 to the production environment"

- Agent: calls deploy_frontend_to_prod(4af9ec0)

- Deterministic code: requests human approval for this action

- Human: rejects the action with the feedback "Can you deploy the backend first?"

- Agent: calls deploy_backend_to_prod(4af9ec0)

- Deterministic code: requests human approval for this action

- Human: approves the action

- Deterministic code: executes the backend deployment

- Agent: calls deploy_frontend_to_prod(4af9ec0)

- Deterministic code: requests human approval for this action

- Human: approves the action

- Deterministic code: executes the frontend deployment

- Agent: determines that the task completed successfully, and we're done!

- Deterministic code: runs end-to-end tests against production

- Deterministic code: marks the task complete, or passes it to the rollback agent to review the failures and possibly roll back

This example is based on a real open-source agent we've shipped to manage our deployments at Humanlayer. Here's a real conversation I had with it last week:

We haven't given this agent a huge pile of tools or tasks. The primary value of the LLM is in parsing plain-text feedback from humans and proposing an updated course of action. We isolate tasks and context as much as possible to keep the LLM focused on a small, 5-10 step workflow.

Here's another, more classic support/chatbot demo:
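The deployment flow above can be sketched in a few lines. This is an illustration under stated assumptions, not the actual Humanlayer agent: `choose_next_step` is a rule-based stand-in for the LLM, the human's replies are scripted as `(approved, feedback)` pairs, and no real deployment runs.

```python
def choose_next_step(context):
    """Rule-based stand-in for the LLM: reads the context, picks the next tool."""
    done = [e["tool"] for e in context if e["type"] == "tool_result"]
    last = context[-1]
    if last["type"] == "human_rejection" and "backend first" in last["feedback"]:
        return "deploy_backend_to_prod"
    if "deploy_frontend_to_prod" in done:
        return "done"
    return "deploy_frontend_to_prod"

def run_deploy_agent(sha, human_replies):
    context = [{"type": "task", "message": f"deploy SHA {sha} to production"}]
    replies = iter(human_replies)
    deployed = []
    while True:
        tool = choose_next_step(context)
        if tool == "done":
            return deployed, context
        # Deterministic code requests human approval before anything runs
        approved, feedback = next(replies)
        if approved:
            deployed.append(tool)  # a real system would run the deployment here
            context.append({"type": "tool_result", "tool": tool, "sha": sha})
        else:
            # The rejection and its feedback become context for the next choice
            context.append({"type": "human_rejection", "tool": tool, "feedback": feedback})

replies = [
    (False, "can you deploy the backend first?"),  # reject the frontend deploy
    (True, None),                                  # approve the backend deploy
    (True, None),                                  # approve the frontend deploy
]
deployed, context = run_deploy_agent("4af9ec0", replies)
print(deployed)
```

The human's rejection is just another entry in the context, so the next decision can take it into account without any special-case workflow code.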

So, what exactly is an agent?

- Prompt - tells the LLM how to behave and what "tools" it has available. The output of the prompt is a JSON object that describes the next step in the workflow (the "tool call" or "function call"). (Element 2)

- Switch statement - based on the JSON the LLM returns, decides what to do with it. (Part of Element 8)

- Accumulated context - stores the list of steps that have happened and their results. (Element 3)

- For loop - until the LLM emits some sort of "terminal" tool call (or plain-text response), adds the result of the switch statement to the context window and asks the LLM to choose the next step. (Element 8)
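The four pieces above fit in one short file. The sketch below is only an illustration: the tool names, the prompt wording, and the `max_steps` cap are assumptions added here, canned JSON strings stand in for a real model call, and a plain `if` plays the role of the switch statement.

```python
import json

TOOLS = {"add": lambda a, b: a + b, "multiply": lambda a, b: a * b}

def build_prompt(context):
    """Prompt: instructions, the available tools, and what has happened so far."""
    return (
        "Reply with one JSON object describing the next step.\n"
        f"Tools: {sorted(TOOLS)} or 'done'.\n"
        f"History: {json.dumps(context)}"
    )

def handle_step(step):
    """Switch statement: route the model's JSON to deterministic code."""
    if step["intent"] in TOOLS:
        return {"type": "tool_result", "value": TOOLS[step["intent"]](**step["args"])}
    return {"type": "error", "message": f"unknown intent {step['intent']}"}

def run(call_llm, task, max_steps=10):
    context = [{"type": "task", "message": task}]    # accumulated context
    for _ in range(max_steps):                        # the loop
        step = json.loads(call_llm(build_prompt(context)))
        context.append({"type": "tool_call", **step})
        if step["intent"] == "done":
            return step["final_answer"], context
        context.append(handle_step(step))
    raise RuntimeError("no 'done' step within max_steps")

# Canned replies in place of a real model call
canned = iter([
    '{"intent": "multiply", "args": {"a": 6, "b": 7}}',
    '{"intent": "done", "final_answer": "42"}',
])
answer, context = run(lambda prompt: next(canned), "what is 6 * 7?")
print(answer)
```

Because each piece is ordinary code, any of them can be replaced or instrumented independently.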

In the "deploybot" example, we gain a couple of benefits from owning the control flow and context accumulation:

- In our switch statement and for loop, we can hijack the control flow to pause for human input or to wait for a long-running task to complete.

- We can trivially serialize the context window for pause and resume.

- In our prompt, we can heavily optimize how we pass instructions and "what's happened so far" to the LLM.
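As a rough sketch of the pause and resume point: because the context is plain data, the loop can return when it needs a human, the context can be saved as JSON, and a later run can pick up from it. The event types and the rule-based `choose_next_step` stand-in below are hypothetical.

```python
import json

def choose_next_step(context):
    """Rule-based stand-in for the LLM."""
    types = [event["type"] for event in context]
    if "human_response" in types:
        return {"type": "done"} if "deploy" in types else {"type": "deploy"}
    return {"type": "request_approval"}

def step_agent(context):
    """Advance until done, or until a step needs a human, then return the state."""
    while True:
        step = choose_next_step(context)
        context.append(step)
        if step["type"] == "done":
            return "done", context
        if step["type"] == "request_approval":
            return "paused", context  # break out of the loop; nothing blocks
        context.append({"type": "tool_result", "value": f"ran {step['type']}"})

# First run: the agent asks for approval and the loop pauses
status, context = step_agent([{"type": "task", "message": "deploy to prod"}])
saved = json.dumps(context)  # could be written to a database or a file

# Later, possibly in another process: restore, add the human's reply, resume
restored = json.loads(saved)
restored.append({"type": "human_response", "approved": True})
final_status, final_context = step_agent(restored)
print(status, final_status)
```

Nothing in the paused state lives in memory, so the wait for a human can last seconds or days.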

Part II will formalize these patterns so they can be applied to add impressive AI features to any software project, without needing to go all in on the conventional implementation or definition of an "AI agent".