General Introduction

"12-Factor Agents" is not a specific software library or framework but a set of design principles for building reliable, scalable, and maintainable LLM (Large Language Model) applications. The project was initiated by developer Dex, who found that many teams building customer-facing functionality with existing AI agent frameworks could easily reach 70-80% of the required quality, but found it exceptionally difficult to get past that bottleneck and into production. The root cause is that many high-level frameworks hide too much of the underlying control, so developers end up reverse engineering the framework or starting from scratch when they need deep customization and optimization. The project therefore borrows from the classic software development methodology "12-Factor App" and proposes 12 core principles. It aims to give software engineers a guide to integrating modular AI functionality into existing products gradually and reliably, rather than through a disruptive rewrite. The core idea is that great AI applications are still essentially great software: most of the code should be deterministic, with the LLM applied precisely where it is most needed.

List of Principles

- Preface: How we got here: a brief history of software

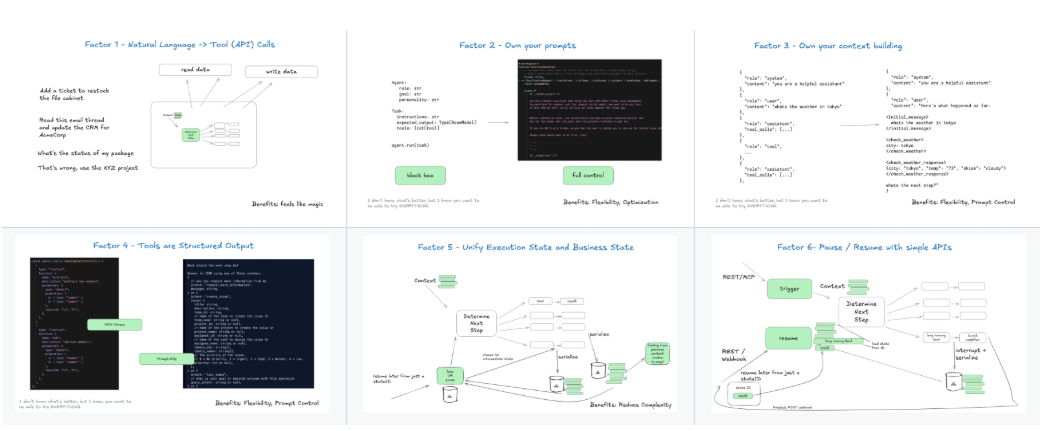

- Principle I: Natural Language to Tool Calls: Reliably map the user's natural language input to one or more specific tool (function) calls.

- Principle II: Own your prompts: Version-control and manage prompts as part of the application's core code, rather than generating them dynamically.

- Principle III: Own your context window: Precisely control what goes into the LLM's context; this is the key to reliability.

- Principle IV: Tools are just structured outputs: Think of tools as a way to force the LLM to produce structured output, not just as a collection of features.

- Principle V: Unify execution state and business state: Combine the agent's execution state with the application's core business state model so the two stay consistent.

- Principle VI: Start/Pause/Resume via simple APIs: Ensure that an agent's long-running tasks can be controlled by external systems through simple interfaces.

- Principle VII: Interact with humans through tool calls: When an agent needs human input or review, it should request it through a standard tool call, not through special handling.

- Principle VIII: Own your control flow: The application's core business logic and the transitions between steps should be driven by deterministic code, not left entirely to the LLM's discretion.

- Principle IX: Compress error messages into the context window: When a tool call fails, summarize its error message and feed it back to the LLM so it can correct course.

- Principle X: Small, Focused Agents: Prefer combining several small, focused agents over building one huge agent that does everything.

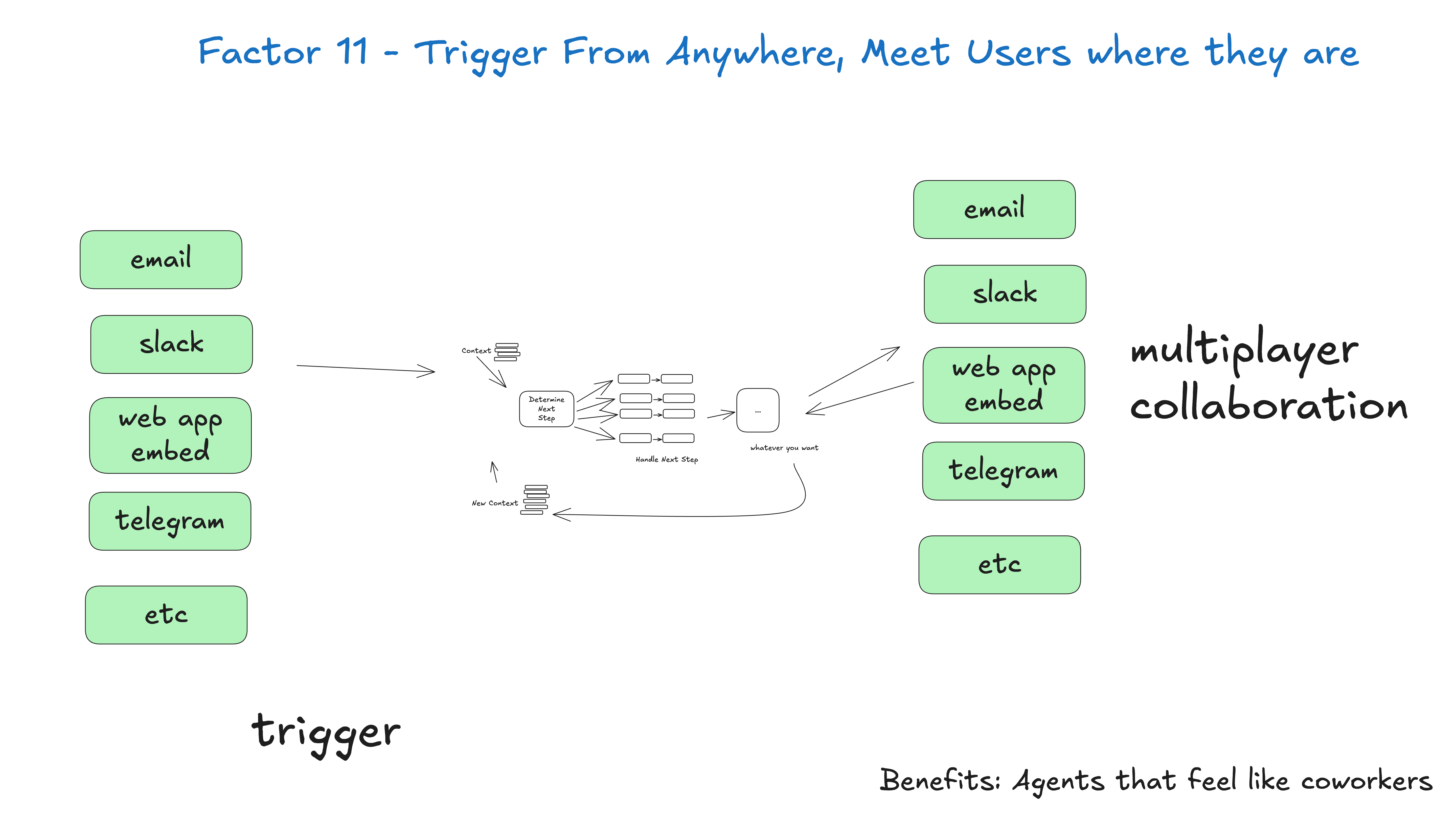

- Principle XI: Trigger anywhere, interact anywhere: Agents should be triggerable from various event sources (e.g., API requests, database changes, scheduled tasks) and should interact with users on the platforms they already use (e.g., Slack, email).

- Principle XII: Make your agent a stateless reducer: Drawing on ideas from Redux, design the agent as a pure function that takes the current state and an event and returns the new state.

Usage Guide

"12-Factor Agents" is a set of ideas and architectural principles, so there is nothing to install. You "use" it by adopting and practicing these principles in your own projects. The following describes how to apply the key principles in your software engineering practice.

Core concept: AI applications are first and foremost software

Before we begin, it is important to understand the core philosophy: the vast majority of the code in a so-called "AI application" or "AI agent" should be traditional, deterministic software engineering code. LLM calls are just one piece of the puzzle, used precisely at the points where natural language understanding, generation, or decision-making is required. Instead of handing control of the entire application to a "request-tool-loop" black box, developers should think of the LLM as a callable function with special capabilities.

How to apply the key principles

Principle 1: Natural Language to Tool Calls

This is the entry point for the agent's interaction with the outside world. When you receive user input (e.g., "check the weather in Beijing yesterday"), you need a reliable mechanism to turn it into a function call, such as `search_weather(date="2025-07-21", city="Beijing")`.

- How to apply: Use the LLM's "function calling" or "tool use" feature. In the request sent to the model, provide not only the user input but also the list of callable tools (functions), with a detailed JSON Schema definition of each tool's parameters. The model returns a JSON object indicating which function to call and which arguments to pass. Your code is responsible for parsing this JSON and executing the corresponding function.
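The parse-and-dispatch half of this loop can be sketched in a few lines of Python. This is a minimal illustration, not any vendor's API: the `{"name": ..., "arguments": ...}` shape of the model output, the `TOOLS` list, the `REGISTRY` dictionary, and the stubbed `search_weather` body are all assumptions made for the example, and the model's reply is hard-coded rather than fetched from a real LLM.

```python
import json

# Hypothetical tool; a real implementation would query a weather service.
def search_weather(date: str, city: str) -> str:
    return f"Weather for {city} on {date}: (stub)"

# JSON Schema description of the tool, sent to the model along with the user input.
TOOLS = [{
    "name": "search_weather",
    "description": "Look up the weather for a city on a given date.",
    "parameters": {
        "type": "object",
        "properties": {
            "date": {"type": "string", "description": "ISO date, YYYY-MM-DD"},
            "city": {"type": "string"},
        },
        "required": ["date", "city"],
    },
}]

# Registry mapping tool names to the Python functions that implement them.
REGISTRY = {"search_weather": search_weather}

def dispatch(tool_call_json: str) -> str:
    """Parse the model's tool call and run the matching function."""
    call = json.loads(tool_call_json)
    name = call["name"]
    if name not in REGISTRY:
        # Never execute a tool the application did not register.
        raise ValueError(f"unknown tool: {name}")
    return REGISTRY[name](**call["arguments"])

# Stand-in for what a model might return for the weather question (assumed shape).
model_output = '{"name": "search_weather", "arguments": {"date": "2025-07-21", "city": "Beijing"}}'
result = dispatch(model_output)
print(result)
```

Keeping the registry explicit means the model can only ever select from functions your code has chosen to expose.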

Principle 2: Own your prompts

Don't dynamically generate complex prompts in your code. Doing so makes debugging and iteration very difficult.

- How to apply: Manage your prompts as static configuration files (e.g., `.txt` or `.md` files). Load these prompt templates in your code and fill in their variables. Put the prompt files under version control such as Git, just as you would `main.py` or `index.js`. That way you can track every change to a prompt and easily test and roll back.
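One possible way to load such a template is with the standard library's `string.Template`, as in the sketch below. The `load_prompt` helper, the `support.md` file name, and the `$product` and `$question` placeholders are hypothetical; the demo writes the template to a temporary directory only so that it is self-contained, whereas in a real project the file would sit in the repository next to the code.

```python
import tempfile
from pathlib import Path
from string import Template

def load_prompt(path: Path, **variables: str) -> str:
    """Read a prompt template from disk and fill in its $placeholders."""
    template = Template(path.read_text(encoding="utf-8"))
    # substitute() raises KeyError if any placeholder is left unfilled,
    # which surfaces template/code mismatches early.
    return template.substitute(**variables)

# Demo only: create the template file in a temp directory.
# In a real project this file would be committed, e.g. as prompts/support.md.
prompt_dir = Path(tempfile.mkdtemp())
prompt_file = prompt_dir / "support.md"
prompt_file.write_text(
    "You are a support assistant for $product.\n"
    "Answer the question: $question\n",
    encoding="utf-8",
)

prompt = load_prompt(
    prompt_file,
    product="Acme CRM",
    question="How do I reset my password?",
)
print(prompt)
```

Because the template is an ordinary file, a change to the prompt shows up as a normal diff in code review.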

Principle 3: Own your context window

The context window is the only "memory" of the LLM. The quality of the input directly determines the quality of the output. Don't indiscriminately stuff all historical messages into it.

- How to apply: Implement a precise context-building strategy. Before each call to the LLM, your code should carefully select and combine messages based on the needs of the task at hand. This might include a system prompt, a few of the most important historical messages, relevant documentation snippets (RAG results), and the user's latest question. The goal is to give the LLM the smallest set of information that is sufficient to solve the problem at hand.
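A context builder along these lines might look like the following sketch. The `build_context` function and the role/content message dictionaries are assumptions for illustration, and "most important" history is approximated here by "most recent", which a real application may well want to replace with its own selection logic.

```python
from typing import Dict, List

Message = Dict[str, str]

def build_context(
    system_prompt: str,
    history: List[Message],
    snippets: List[str],
    question: str,
    max_history: int = 3,
) -> List[Message]:
    """Assemble only the messages the model needs for this call."""
    messages: List[Message] = [{"role": "system", "content": system_prompt}]
    if snippets:
        # Retrieved documentation (e.g. RAG results) goes in as one block.
        docs = "\n\n".join(snippets)
        messages.append({"role": "system", "content": f"Relevant documentation:\n{docs}"})
    # Assumption: recency stands in for importance; keep the last few turns only.
    if max_history > 0:
        messages.extend(history[-max_history:])
    messages.append({"role": "user", "content": question})
    return messages

history = [{"role": "user", "content": f"earlier message {i}"} for i in range(5)]
context = build_context(
    "You are a helpful assistant.",
    history,
    ["Passwords can be reset from the account page."],
    "How do I reset my password?",
    max_history=2,
)
for message in context:
    print(message["role"], "|", message["content"])
```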

Principle 4: Tools are just structured outputs

Although these features are called "tools", from another perspective they are the only reliable way to force the LLM to output the well-formed JSON you want.

- How to apply: Define a "tool" whenever you need the LLM to extract information, classify, or perform any task that requires a deterministic output format. For example, if you need to extract names and companies from a text, you can define a tool `extract_entities(person: str, company: str)`. To "call" this tool, the LLM must generate output in exactly this format.
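As a rough sketch of this idea, the tool below is never "executed" at all; its arguments are the structured result. The schema dictionary, the `Entities` dataclass, and the `parse_entities` helper are hypothetical names, and the JSON string stands in for the arguments a model might return.

```python
import json
from dataclasses import dataclass

# Tool definition whose only purpose is to pin down the output shape.
EXTRACT_ENTITIES_TOOL = {
    "name": "extract_entities",
    "description": "Record the person and company mentioned in the text.",
    "parameters": {
        "type": "object",
        "properties": {
            "person": {"type": "string"},
            "company": {"type": "string"},
        },
        "required": ["person", "company"],
    },
}

@dataclass(frozen=True)
class Entities:
    person: str
    company: str

def parse_entities(arguments_json: str) -> Entities:
    """Validate the model's tool-call arguments and turn them into a typed object."""
    data = json.loads(arguments_json)
    if not isinstance(data, dict):
        raise ValueError("tool arguments must be a JSON object")
    for field_name in EXTRACT_ENTITIES_TOOL["parameters"]["required"]:
        if not isinstance(data.get(field_name), str):
            raise ValueError(f"missing or non-string field: {field_name}")
    return Entities(person=data["person"], company=data["company"])

# Stand-in for the arguments of a model-generated call to extract_entities.
entities = parse_entities('{"person": "Ada Lovelace", "company": "Analytical Engines Ltd"}')
print(entities)
```

Validating the arguments in your own code keeps a malformed model response from leaking into the rest of the application.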

Principle 8: Own your control flow

This point runs counter to many agent frameworks, but it is critical. The application's "what to do next" should not be decided entirely by the LLM in a loop.

- How to apply: Write core business processes in deterministic code (e.g., if/else, switch statements, or state machines). For example, an order-handling flow might be:

  `receive order -> [LLM classifies intent] -> if (query) then call_query_api() else if (return) then call_refund_api()`

  Here the LLM is only responsible for the "classify intent" step, and the whole process is controlled by your code. This makes the system's behavior predictable and debuggable.
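The order flow above could be written in Python roughly as follows. This is a sketch under stated assumptions: the `order_id` parameter, the stub bodies of `call_query_api` and `call_refund_api`, the human-escalation fallback, and the keyword-based `fake_classify_intent` (a stand-in for the single LLM call) are all invented for the example.

```python
from typing import Callable

def call_query_api(order_id: str) -> str:
    # Stub for an existing order-status API.
    return f"status of order {order_id}: shipped"

def call_refund_api(order_id: str) -> str:
    # Stub for an existing returns/refund API.
    return f"refund started for order {order_id}"

def handle_order_message(
    message: str,
    order_id: str,
    classify_intent: Callable[[str], str],
) -> str:
    """Deterministic control flow; the classifier is the only LLM-backed step."""
    intent = classify_intent(message)
    if intent == "query":
        return call_query_api(order_id)
    elif intent == "return":
        return call_refund_api(order_id)
    else:
        # Anything the code does not recognise is handed off, not improvised.
        return "escalated to a human"

def fake_classify_intent(message: str) -> str:
    """Keyword stub standing in for an LLM intent classifier."""
    text = message.lower()
    if "return" in text or "refund" in text:
        return "return"
    if "where" in text or "status" in text:
        return "query"
    return "unknown"

print(handle_order_message("Where is my order?", "A1", fake_classify_intent))
print(handle_order_message("I want to return this item", "A1", fake_classify_intent))
```

Passing the classifier in as a function also makes the flow easy to test without calling a model.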

Principle 10: Small, Focused Agents

Don't try to build a "super agent" that can handle everything.

- How to apply: Decompose complex tasks. For example, a customer support system can be decomposed into an agent for intent recognition, an agent for querying the knowledge base, and an agent for processing orders. Your main control-flow code (Principle 8) is responsible for routing and scheduling between them. Each small agent has its own focused prompt (Principle 2) and toolset (Principle 4).
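One way to picture this decomposition is a small registry of agents, each with its own prompt file and tool list, plus a router in ordinary code. Everything named here (`Agent`, `AGENTS`, `route`, the prompt paths, and the tool names) is hypothetical, and each agent's `run` function is a stub where an LLM call would normally go.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Tuple

@dataclass(frozen=True)
class Agent:
    name: str
    prompt_file: str          # each agent owns one focused prompt
    tools: Tuple[str, ...]    # and a small, specific toolset
    run: Callable[[str], str]

# Stubs stand in for the LLM-backed behaviour of each small agent.
AGENTS: Dict[str, Agent] = {
    "knowledge": Agent(
        name="knowledge",
        prompt_file="prompts/knowledge.md",
        tools=("search_kb",),
        run=lambda message: f"[knowledge] answering: {message}",
    ),
    "orders": Agent(
        name="orders",
        prompt_file="prompts/orders.md",
        tools=("get_order", "cancel_order"),
        run=lambda message: f"[orders] handling: {message}",
    ),
}

def route(intent: str, message: str) -> str:
    """Deterministic routing; the intent would come from the intent-recognition agent."""
    agent = AGENTS.get(intent)
    if agent is None:
        raise KeyError(f"no agent registered for intent: {intent}")
    return agent.run(message)

print(route("orders", "cancel order #42"))
```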

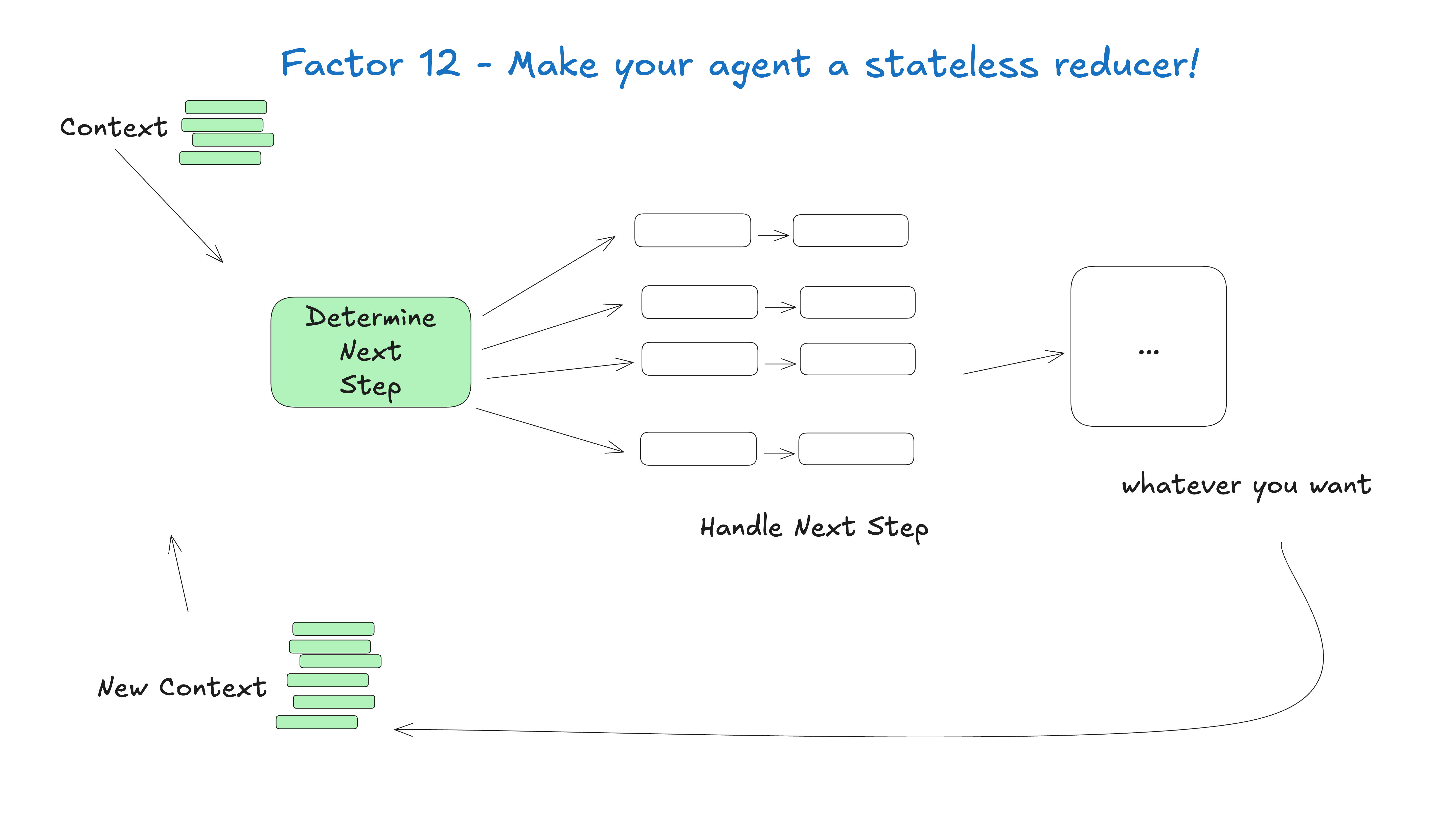

Principle 12: Make your agent a stateless reducer

This draws on the ideas of front-end state management libraries such as Redux, and can greatly improve the testability and predictability of a system.

- How to apply: Implement your agent, or one of its steps, as a pure function with a signature like `(currentState, event) => newState`.
  - `currentState` is the complete current state of the application.
  - `event` is an event that has just occurred (such as a new message from the user or a result returned by an API).
  - The return value, `newState`, is the updated state.

The function itself is stateless (it doesn't depend on any external variables); all it does is compute its output from its input. This makes unit tests very easy to write: you provide different combinations of `currentState` and `event`, then assert that `newState` meets expectations.
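A reducer of this shape can be sketched in Python with frozen dataclasses, so that state can only change by returning a new object. The `State` and `Event` fields, the event kinds, and the `agent_reducer` name are assumptions chosen for the example rather than part of the methodology.

```python
from dataclasses import dataclass, replace
from typing import Tuple

@dataclass(frozen=True)
class State:
    messages: Tuple[str, ...] = ()
    status: str = "idle"

@dataclass(frozen=True)
class Event:
    kind: str      # "user_message" or "api_result" in this sketch
    payload: str

def agent_reducer(state: State, event: Event) -> State:
    """Pure function: the same (state, event) always yields the same new state."""
    if event.kind == "user_message":
        return replace(
            state,
            messages=state.messages + (event.payload,),
            status="awaiting_api",
        )
    if event.kind == "api_result":
        return replace(
            state,
            messages=state.messages + (event.payload,),
            status="idle",
        )
    # Unknown events leave the state unchanged.
    return state

# Unit-test style usage: feed in state and event, assert on the result.
start = State()
after_user = agent_reducer(start, Event("user_message", "Where is my order?"))
after_api = agent_reducer(after_user, Event("api_result", "Order shipped."))
assert start == State()  # the input state was not mutated
assert after_api.status == "idle"
```

Because the state is an immutable value, it can also be serialized and stored between steps, which fits naturally with pausing and resuming an agent.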

By following these principles, you can build LLM applications that are more stable in their behavior, easier to debug, and that work in harmony with existing software systems.

Application Scenarios

- Adding AI capabilities to existing SaaS products

For a mature SaaS product that already has stable business logic (e.g., a CRM or a project management tool), developers want to introduce AI functionality incrementally without rewriting the core code. For example, using Principles 1 and 8, you can translate a user's natural language instruction ("Create a task that is due next week and assign it to Zhang San") into calls to existing APIs while keeping the core task-creation logic stable.

- Developing high-quality AI assistants for end users

When developing an AI assistant that interacts directly with paying customers, reliability and user experience are critical. Using generic frameworks directly may result in unpredictable behavior in complex or edge cases. Applying these principles, specifically Principle 3 (own your context window), Principle 8 (own your control flow), and Principle 9 (compress error messages), helps the assistant handle problems gracefully, or ask for help when needed through Principle 7 (interact with humans through tool calls), rather than giving incorrect answers or crashing.

- Productizing AI prototypes

Many teams use high-level frameworks to quickly build an impressive prototype (demo), but when they are ready to release it as a full-fledged product, they find that the prototype is unstable in complex real-world scenarios (quality does not get past 80%). At this point, 12-Factor Agents provides a set of refactoring guidelines. Teams can revisit and rework their prototype code with more robust software engineering practices that follow these principles, such as refactoring ambiguous control flow (loop-until-done) into explicit state machines (Principle 8) and unifying fragmented prompts and state management (Principles 2 and 5).

FAQ

- Is "12-Factor Agents" an installable software framework?

No. Unlike LangChain or Griptape, it is not a software package you can `pip install`. It is a set of design philosophies and architectural principles that guide developers in organizing their code and their thinking to build reliable LLM applications. It is a document, a methodology.

- Why take control of the control flow yourself and not let the LLM decide? Isn't that the beauty of agents?

This is one of the core ideas of the methodology. The appeal of letting the LLM fully determine the control flow (i.e., "what to do next") lies in its flexibility and its potential for discovering new paths. In a production environment, however, this uncertainty brings significant risk and makes debugging difficult. When something goes wrong, it is hard to tell whether the problem lies in the LLM's decision-making or in the tool's execution. "12-Factor Agents" advocates defining core, high-risk business processes in deterministic code (e.g., state machines) and calling the LLM at specific points in the process to make local decisions (e.g., classification or information extraction). This leverages the capabilities of LLMs while preserving the overall predictability and stability of the system.

- Does this set of principles mean I can't use existing AI frameworks at all?

Not at all. You can use existing frameworks while following these principles. The key is how you use them: treat a framework as a "library" that provides handy tools, rather than as a "framework" that controls the flow of your entire application. For example, you can use a framework's tool-call parsing but write the main control flow yourself, or use its RAG (Retrieval-Augmented Generation) module to populate the context while keeping the exact contents of that context under your own control, in line with Principle 3. The point is to retain control over the key parts of the application rather than outsourcing everything to the framework.

- Is this set of principles easy to follow for traditional software engineers without an AI background?

Yes. One might even say that this set of principles is tailor-made for good software engineers. Much of it (e.g., version control, state management, modularity, deterministic control flow) is established software engineering best practice. It encourages engineers to harness the LLM with the reliable engineering mindset they already have, rather than requiring them to learn an entirely new probabilistic programming paradigm. It "demotes" the LLM to a powerful but carefully managed component, which lets engineers keep working in the domains they know.