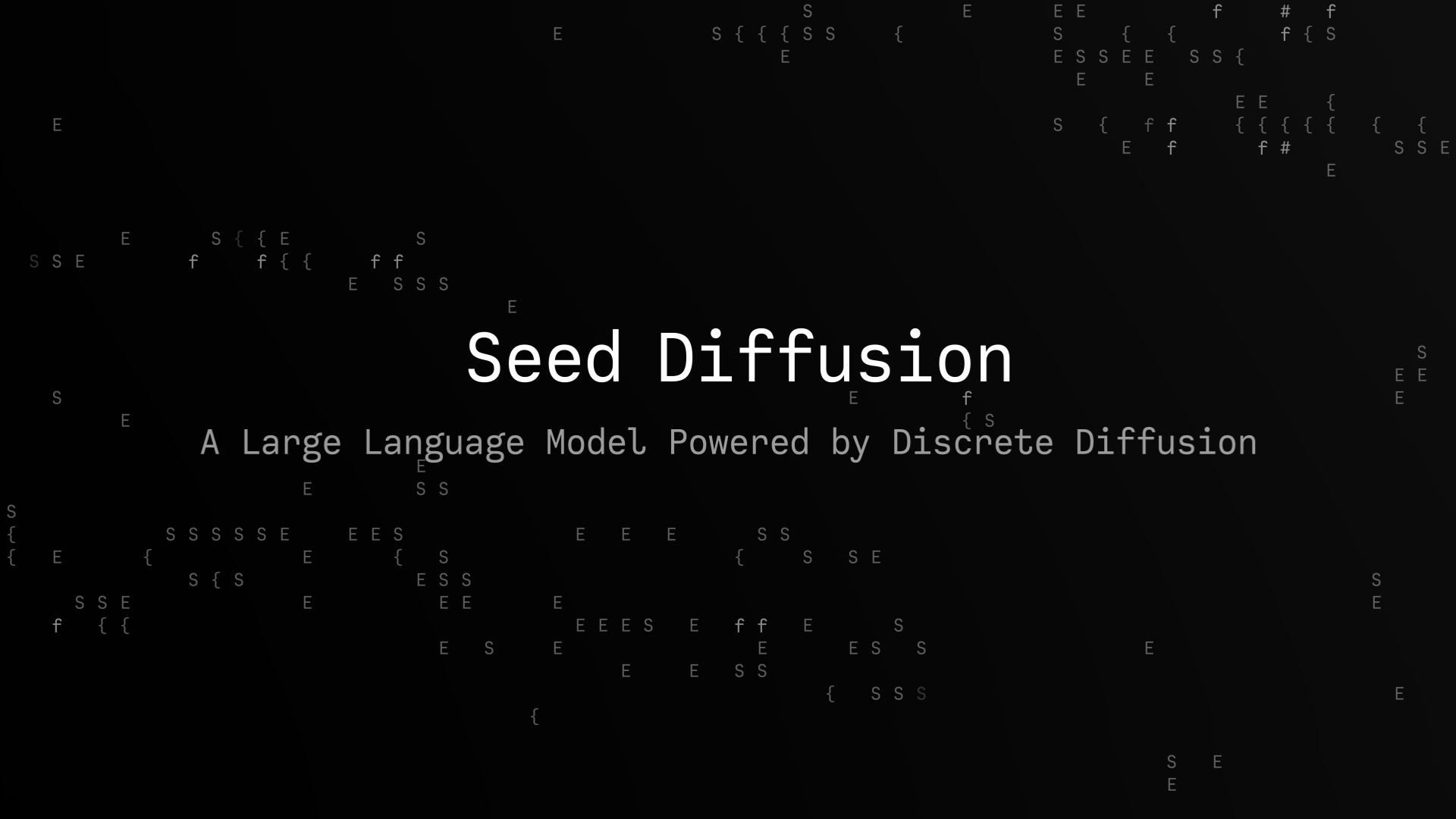

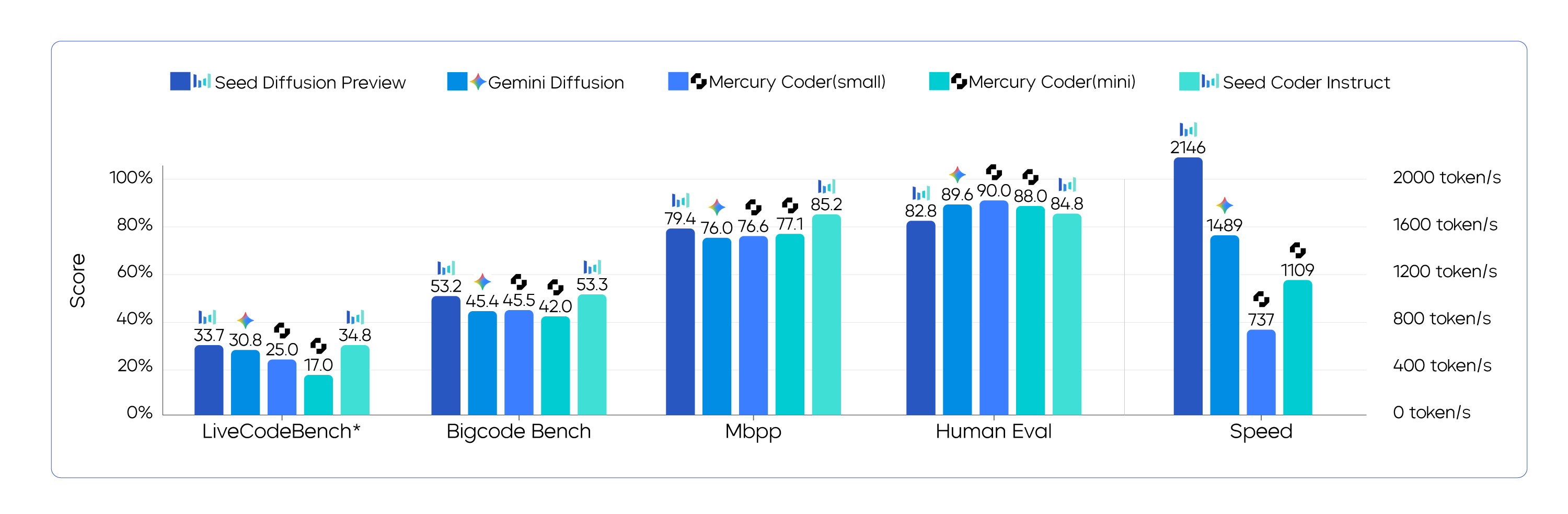

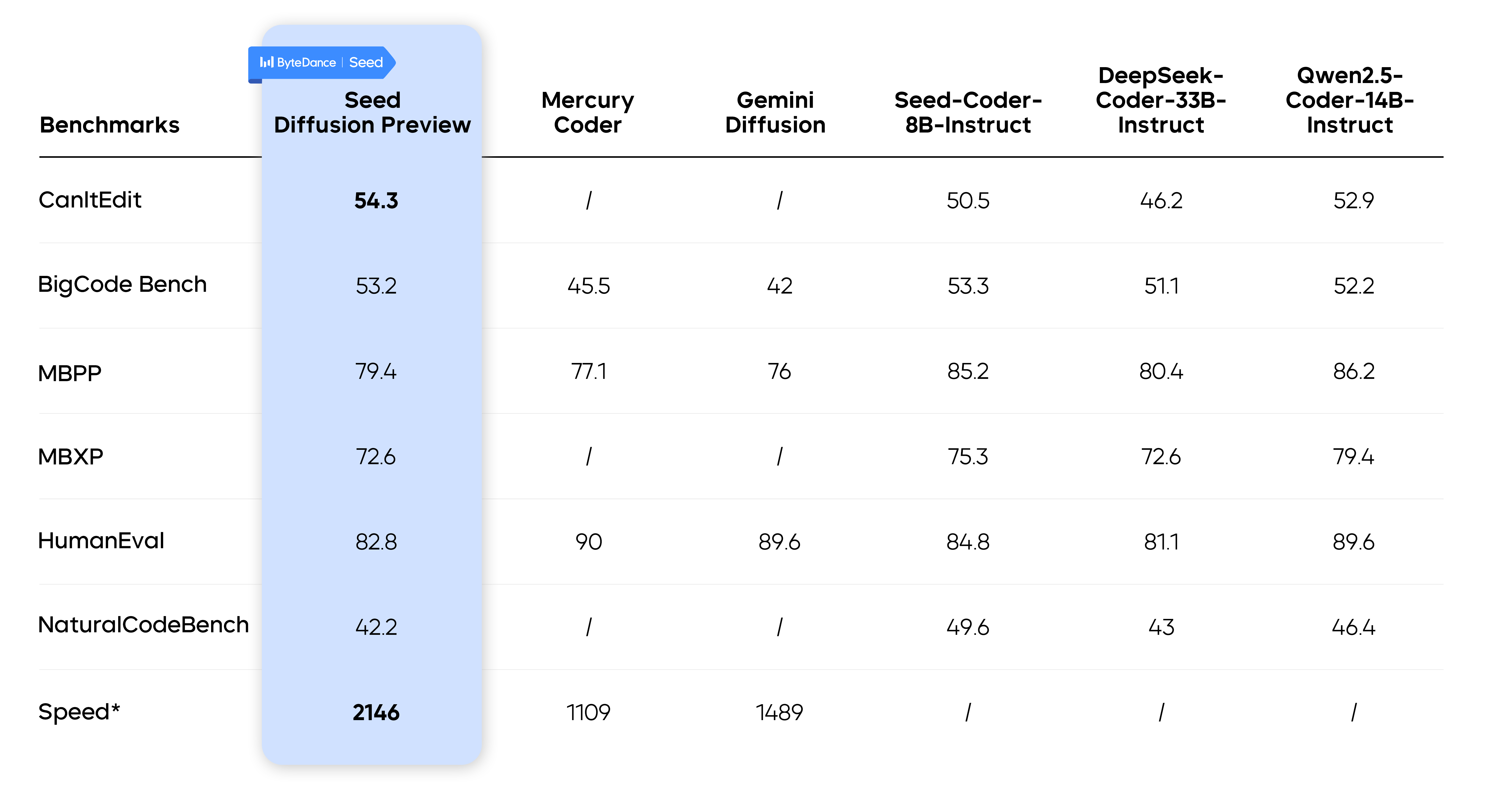

The ByteDance Seed team has released Seed Diffusion Preview, an experimental language model specialized in code generation. The model is based on discrete-state diffusion and achieves an inference speed of 2,146 tokens/s, which is 5.4 times faster than an autoregressive model of the same size, while maintaining comparable performance on several core code benchmarks.

This result sets a new benchmark for the speed-quality Pareto frontier for code modeling and demonstrates the great potential of discrete diffusion methods in the field of code generation.

The "Speed Shackle" of Autoregressive Modeling

Most mainstream code generation models today use an autoregressive architecture: they produce code by predicting the next token, one token at a time, much as a person writes word by word. This mechanism keeps the code coherent, but it also creates a speed bottleneck that is hard to overcome. Because each token depends on the tokens before it, the process cannot be efficiently parallelized, which makes long code sequences slow to generate.
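
The sequential dependency described above can be made concrete with a minimal sketch. The `toy_next_token` function and the `TARGET` sequence are hypothetical stand-ins for a real model, not part of Seed Diffusion; the point is only that every new token costs one model call that must wait for all earlier ones.

```python
from typing import Callable, List, Tuple

def autoregressive_decode(next_token: Callable[[List[str]], str],
                          prompt: List[str],
                          max_new_tokens: int,
                          eos: str = "<eos>") -> Tuple[List[str], int]:
    """Generate tokens one at a time; return (tokens, number of model calls)."""
    tokens = list(prompt)
    calls = 0
    for _ in range(max_new_tokens):
        tok = next_token(tokens)  # needs every previously generated token
        calls += 1
        if tok == eos:
            break
        tokens.append(tok)
    return tokens, calls

# Hypothetical "model": replays a fixed target sequence, then emits <eos>.
TARGET = ["def", "add", "(", "a", ",", "b", ")", ":", "return", "a", "+", "b"]

def toy_next_token(context: List[str]) -> str:
    return TARGET[len(context)] if len(context) < len(TARGET) else "<eos>"

out, calls = autoregressive_decode(toy_next_token, [], max_new_tokens=32)
print(out == TARGET, "model calls:", calls)
```

In this toy setting the number of calls grows linearly with the output length (one per token, plus one for the end marker), and no call can start before the previous one finishes.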

Seed Diffusion's adoption of a diffusion model changes this fundamentally. Diffusion models, which first made their mark in image generation, work more like a painting process that goes from blurry to clear. For code generation, the model starts from a noisy template filled with [MASK] tokens and recovers complete, structurally correct code in parallel through multiple "denoising" steps. In theory, this parallel decoding can greatly increase generation speed.
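
As a rough illustration of the mask-to-code process, the sketch below starts from an all-`[MASK]` template and fills in several positions per step. It is a toy under stated assumptions: `toy_predict` is a hypothetical oracle standing in for the model, and positions are committed at random, whereas a real sampler would decide what to commit from the model's own predictions.

```python
import math
import random
from typing import List, Tuple

MASK = "[MASK]"
TARGET = ["def", "add", "(", "a", ",", "b", ")", ":", "return", "a", "+", "b"]

def toy_predict(seq: List[str]) -> List[str]:
    """Hypothetical model call: proposes a token for every position at once."""
    return list(TARGET)

def parallel_denoise(length: int, num_steps: int, seed: int = 0) -> Tuple[List[str], int]:
    """Unmask roughly length / num_steps positions per step; return (tokens, model calls)."""
    rng = random.Random(seed)
    seq = [MASK] * length
    per_step = math.ceil(length / num_steps)
    calls = 0
    while MASK in seq:
        predictions = toy_predict(seq)  # one call covers all positions in parallel
        calls += 1
        masked = [i for i, tok in enumerate(seq) if tok == MASK]
        for i in rng.sample(masked, min(per_step, len(masked))):
            seq[i] = predictions[i]     # commit a subset, keep the rest masked
    return seq, calls

code, calls = parallel_denoise(len(TARGET), num_steps=4)
print(" ".join(code), "| model calls:", calls)
```

Here 12 tokens are recovered in 4 model calls instead of one call per token, which is the source of the theoretical speedup. Each call is more expensive than a single autoregressive step, so the gain in practice depends on how few steps the sampler can get away with.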

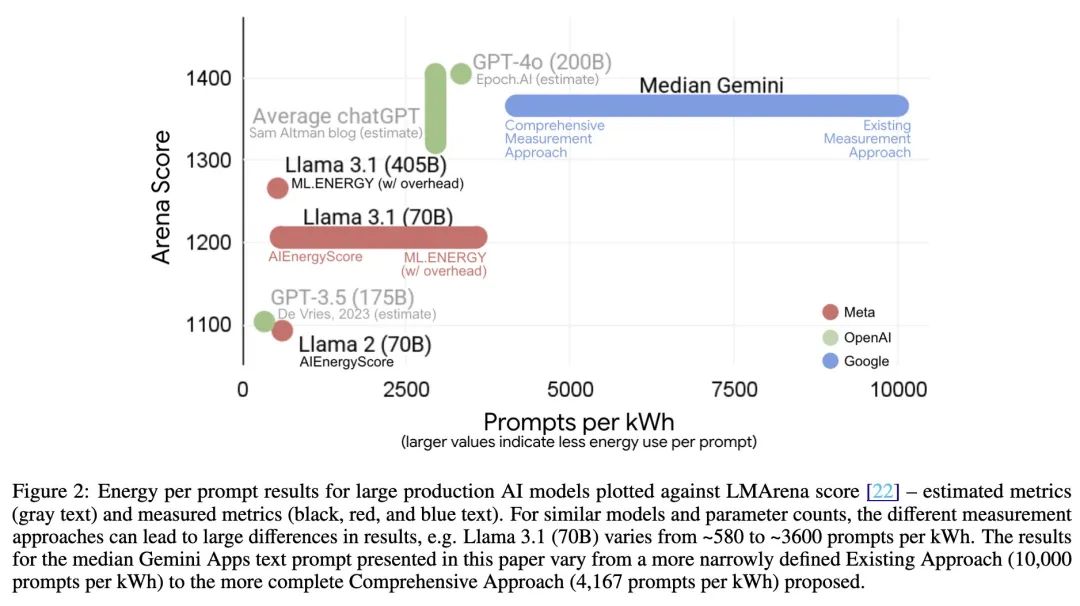

Seed Diffusion's inference speed. Direct comparison with other models is difficult because testing conditions differ. For example, Mercury Coder was evaluated on a proprietary dataset with H100 hardware, and the Gemini Diffusion speed figure comes from a mixed-task benchmark on unknown hardware.

The core methodology behind the speed boost

The Seed team introduced several key techniques to turn the theoretical advantages of diffusion models into practical gains.

Two-stage learning from pattern filling to logical editing

Traditional diffusion model training has a drawback: the model attends only to the positions marked [MASK] and relies too heavily on the assumption that the remaining unmasked code is perfectly correct. This is technically known as "spurious correlation".

To break this limitation, the team designed a two-stage learning strategy:

- Phase 1: Mask-based diffusion training. In this phase, the model learns the local context and fixed patterns of the code through standard "fill-in-the-blank" tasks.

- Phase 2: Edit-based diffusion training. This phase introduces perturbations based on edit distance (insertion and deletion operations) that force the model to re-examine and fix all tokens, including those that were never masked. This significantly improves the model's ability to understand and repair the global logic of the code.
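
The two kinds of corruption can be sketched as follows. This is an illustrative toy, not the team's training code: the function names, the 50/50 split between insertion and deletion, and the tiny vocabulary are all assumptions. The `levenshtein` helper is included only to show that the edit-based perturbation stays within a chosen edit-distance budget.

```python
import random
from typing import List

MASK = "[MASK]"

def mask_corrupt(tokens: List[str], mask_ratio: float, rng: random.Random) -> List[str]:
    """Phase 1 style: hide a fraction of tokens; the visible ones stay correct."""
    return [MASK if rng.random() < mask_ratio else t for t in tokens]

def edit_corrupt(tokens: List[str], num_edits: int, vocab: List[str],
                 rng: random.Random) -> List[str]:
    """Phase 2 style: random insertions and deletions, so any token may be wrong."""
    out = list(tokens)
    for _ in range(num_edits):
        if out and rng.random() < 0.5:
            del out[rng.randrange(len(out))]                            # deletion
        else:
            out.insert(rng.randrange(len(out) + 1), rng.choice(vocab))  # insertion
    return out

def levenshtein(a: List[str], b: List[str]) -> int:
    """Standard dynamic-programming edit distance between two token lists."""
    prev = list(range(len(b) + 1))
    for i, x in enumerate(a, 1):
        cur = [i]
        for j, y in enumerate(b, 1):
            cur.append(min(prev[j] + 1, cur[j - 1] + 1, prev[j - 1] + (x != y)))
        prev = cur
    return prev[-1]

rng = random.Random(0)
clean = ["x", "=", "1", ";", "y", "=", "x", "+", "1"]
masked = mask_corrupt(clean, mask_ratio=0.3, rng=rng)
edited = edit_corrupt(clean, num_edits=2, vocab=["x", "y", "=", "+", "1"], rng=rng)
print(masked)
print(edited, "edit distance:", levenshtein(clean, edited))
```

In the masked version every visible token can be trusted, which is exactly the shortcut the first phase allows. In the edited version there is no marker showing where the damage is, so a model trained on it has to check every position.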

Experimental data show that after the second training stage was introduced, the model's pass@1 score on the CanItEdit benchmark was 4.8% higher than that of the autoregressive model (54.3 vs 50.5).

Constrained-order diffusion that incorporates code-structure priors

Although code is sequential data, it need not be generated strictly from left to right. It does, however, contain strong causal dependencies: for example, variables must be declared before they are used. Completely unordered generation ignores this structural prior, which limits performance.

To address this, the team proposes "constrained-order training". In the post-training phase, the model uses self-distillation to learn and follow correct code dependencies, so that generation respects the intrinsic logic of the code.
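
To illustrate what respecting code dependencies can mean for a generation order, the sketch below filters candidate unmasking trajectories with a simple declare-before-use rule. The article does not describe the team's actual selection criterion, so this rule, the `Trajectory` representation, and the function names are hypothetical stand-ins chosen for illustration.

```python
from typing import List, Sequence, Tuple

# A trajectory lists, for each denoising step, the token positions unmasked in that step.
Trajectory = List[List[int]]

def respects_dependencies(traj: Trajectory, deps: Sequence[Tuple[int, int]]) -> bool:
    """True if every definition is unmasked no later than the token that uses it."""
    step_of = {pos: step for step, positions in enumerate(traj) for pos in positions}
    return all(step_of[d] <= step_of[u] for d, u in deps)

def filter_trajectories(candidates: List[Trajectory],
                        deps: Sequence[Tuple[int, int]]) -> List[Trajectory]:
    """Keep only the candidate trajectories that satisfy the dependency rule."""
    return [t for t in candidates if respects_dependencies(t, deps)]

# Tokens of `x = 1 ; y = x + 1` by position 0..8.
# The use of x at position 6 depends on its definition at position 0.
DEPS = [(0, 6)]
CANDIDATES = [
    [[0, 1, 2], [3, 4, 5], [6, 7, 8]],  # definition first: kept
    [[6, 7, 8], [0, 1, 2], [3, 4, 5]],  # use before definition: dropped
    [[0, 6], [1, 2, 3, 4, 5, 7, 8]],    # same step: kept under this rule
]
kept = filter_trajectories(CANDIDATES, DEPS)
print(len(kept), "of", len(CANDIDATES), "trajectories kept")
```

In a self-distillation setup, trajectories that survive a filter like this would serve as training targets, so the model learns to prefer generation orders consistent with the code's structure.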

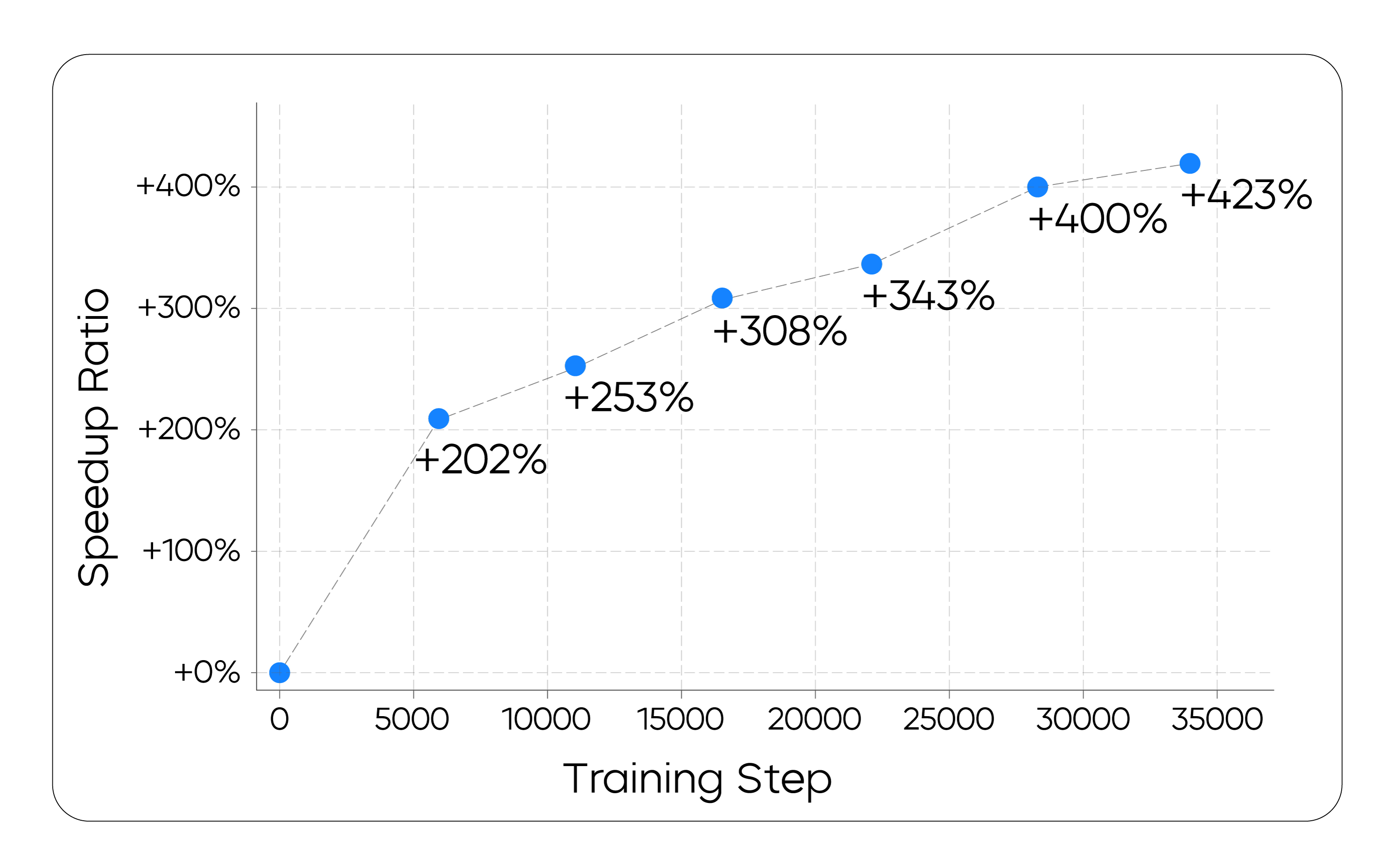

On-Policy Learning and Efficient Parallel Decoding

The theoretical advantages of parallel decoding are hard to realize in practice. A single parallel inference step is computationally expensive, and reducing the total number of steps to offset that cost sacrifices generation quality.

To address this, the team proposed an on-policy learning paradigm. The goal is to train the model to directly optimize its own generation process, minimizing the number of generation steps |τ| while maintaining the quality of the final output. Its objective function is as follows:

$$
\mathcal{L}_\tau = \mathbb{E}_{(x_0,\tau)\sim D}\left[\log V(x_\tau) - \lambda\,|\tau|\right]
$$
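
A minimal numerical sketch of this objective, under stated assumptions: the article does not define V or λ, so here V(x_τ) is treated as a quality score in (0, 1] for the final sample and λ as a positive weight on the step count |τ|. The function names and sample values are illustrative only.

```python
import math
from typing import List, Tuple

def trajectory_objective(verifier_score: float, num_steps: int, lam: float) -> float:
    """log V(x_tau) - lambda * |tau| for a single sampled trajectory."""
    return math.log(verifier_score) - lam * num_steps

def estimate_objective(samples: List[Tuple[float, int]], lam: float) -> float:
    """Monte Carlo estimate of the expectation over sampled (score, steps) pairs."""
    values = [trajectory_objective(v, n, lam) for v, n in samples]
    return sum(values) / len(values)

# Assumed samples: (quality score of the final output, number of denoising steps).
slow = [(0.95, 32), (0.90, 32)]
fast = [(0.93, 8), (0.88, 8)]
lam = 0.01
print("slow:", round(estimate_objective(slow, lam), 4))
print("fast:", round(estimate_objective(fast, lam), 4))
```

With similar quality scores, the trajectories that use fewer steps obtain the higher objective value. The weight λ sets how much quality the objective will trade for each step saved.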

In practice, directly minimizing the number of steps leads to unstable training. Instead, the team used a more stable surrogate loss to encourage the model to converge more efficiently. This process resembles the "mode filtering" techniques known from the non-autoregressive generation literature, in which training implicitly "prunes" inefficient or low-quality generation paths.

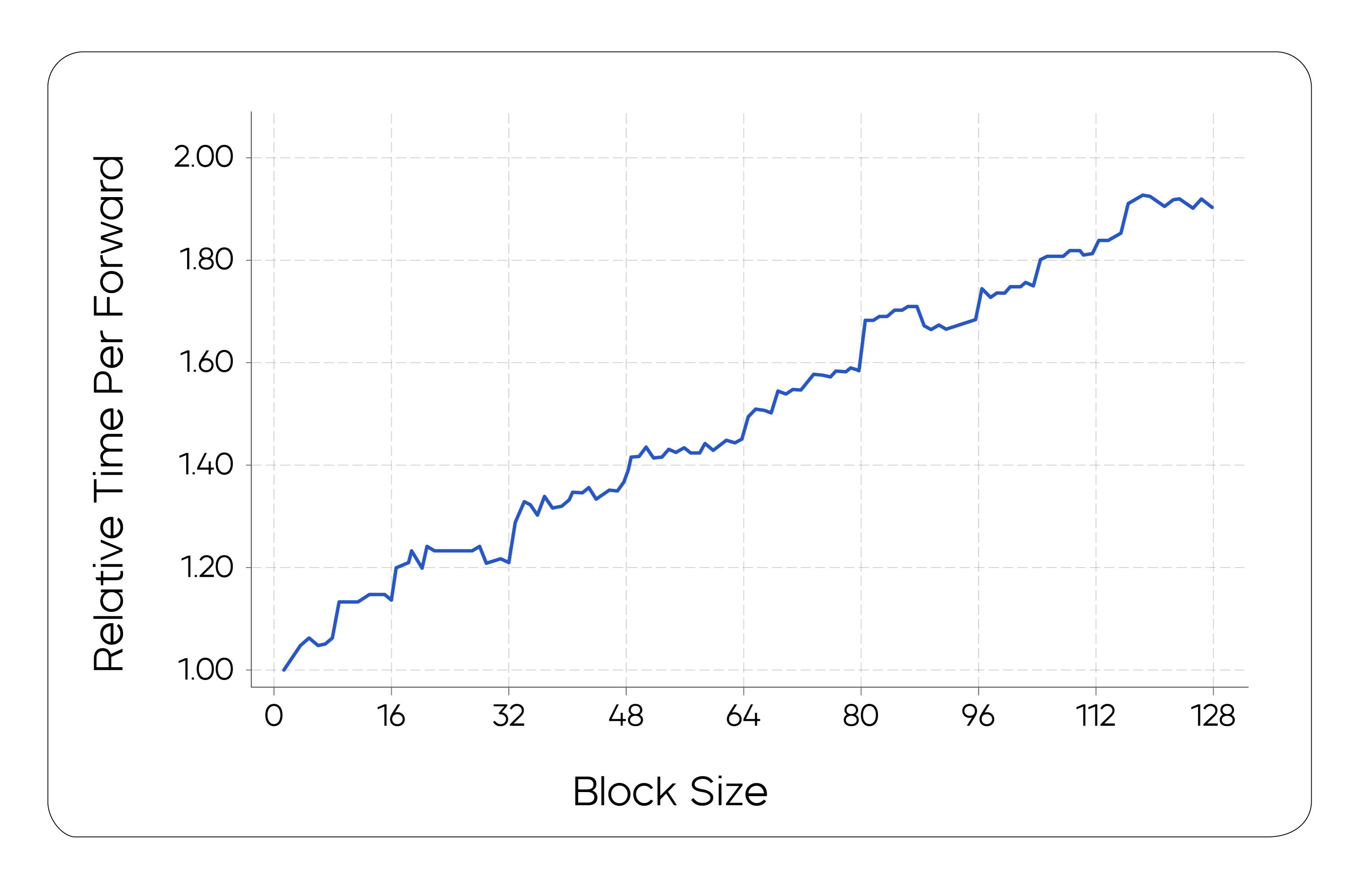

From theory to engineering realization

To balance computation and latency, the Seed team adopted a chunked parallel diffusion sampling scheme. The scheme preserves causal order between chunks while using KV caching to reuse information from chunks that have already been generated. The team also optimized the underlying infrastructure framework specifically to support chunked parallel inference efficiently.
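
The control flow of chunked sampling can be sketched as below. This is a toy under stated assumptions, not the team's implementation: `toy_denoise` is a hypothetical oracle in place of the model, the `cache` list merely stands in for a KV cache of finished chunks, and the chunk size and step count are arbitrary.

```python
from typing import List, Tuple

MASK = "[MASK]"
TARGET = ["def", "add", "(", "a", ",", "b", ")", ":", "return", "a", "+", "b"]

def toy_denoise(prefix: List[str], chunk: List[str]) -> List[str]:
    """Hypothetical model call: predicts every slot of the chunk given the cached prefix."""
    offset = len(prefix)
    return [TARGET[offset + i] for i in range(len(chunk))]

def chunked_parallel_decode(total_len: int, chunk_size: int,
                            steps_per_chunk: int) -> Tuple[List[str], int]:
    """Decode chunk by chunk (causal across chunks, parallel within a chunk)."""
    cache: List[str] = []  # finished chunks; read as context, never regenerated
    calls = 0
    for start in range(0, total_len, chunk_size):
        size = min(chunk_size, total_len - start)
        chunk = [MASK] * size
        per_step = -(-size // steps_per_chunk)  # ceiling division
        while MASK in chunk:
            predictions = toy_denoise(cache, chunk)
            calls += 1
            masked = [i for i, tok in enumerate(chunk) if tok == MASK]
            for i in masked[:per_step]:
                chunk[i] = predictions[i]
        cache.extend(chunk)  # later chunks condition on this one
    return cache, calls

code, calls = chunked_parallel_decode(len(TARGET), chunk_size=4, steps_per_chunk=2)
print(" ".join(code), "| model calls:", calls)
```

In this toy run, 12 tokens take 6 model calls: three chunks with two denoising steps each. Smaller chunks move the behavior closer to autoregressive decoding, while larger chunks allow more parallel work per call at the cost of more computation per step.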

Experimental results and market prospects

In generation-task tests, Seed Diffusion Preview fully unleashes the parallel potential of the diffusion model, running 5.4 times faster than an autoregressive model of the same size.

What's more, this speed did not come at the expense of quality. On multiple industry benchmarks, Seed Diffusion Preview performs comparably to top autoregressive models and even surpasses them on tasks such as code editing.

This result suggests that the discrete diffusion approach represented by Seed Diffusion not only has the potential to become a basic framework for next-generation generative models but also has broad application prospects. For developers, the 5.4x speedup means smoother real-time code completion, faster unit test generation, and more efficient bug fixing, which could substantially improve the experience of collaborative human-computer programming.

As the Seed Diffusion project continues its exploration, its potential in complex reasoning tasks and its scaling laws will be further revealed.