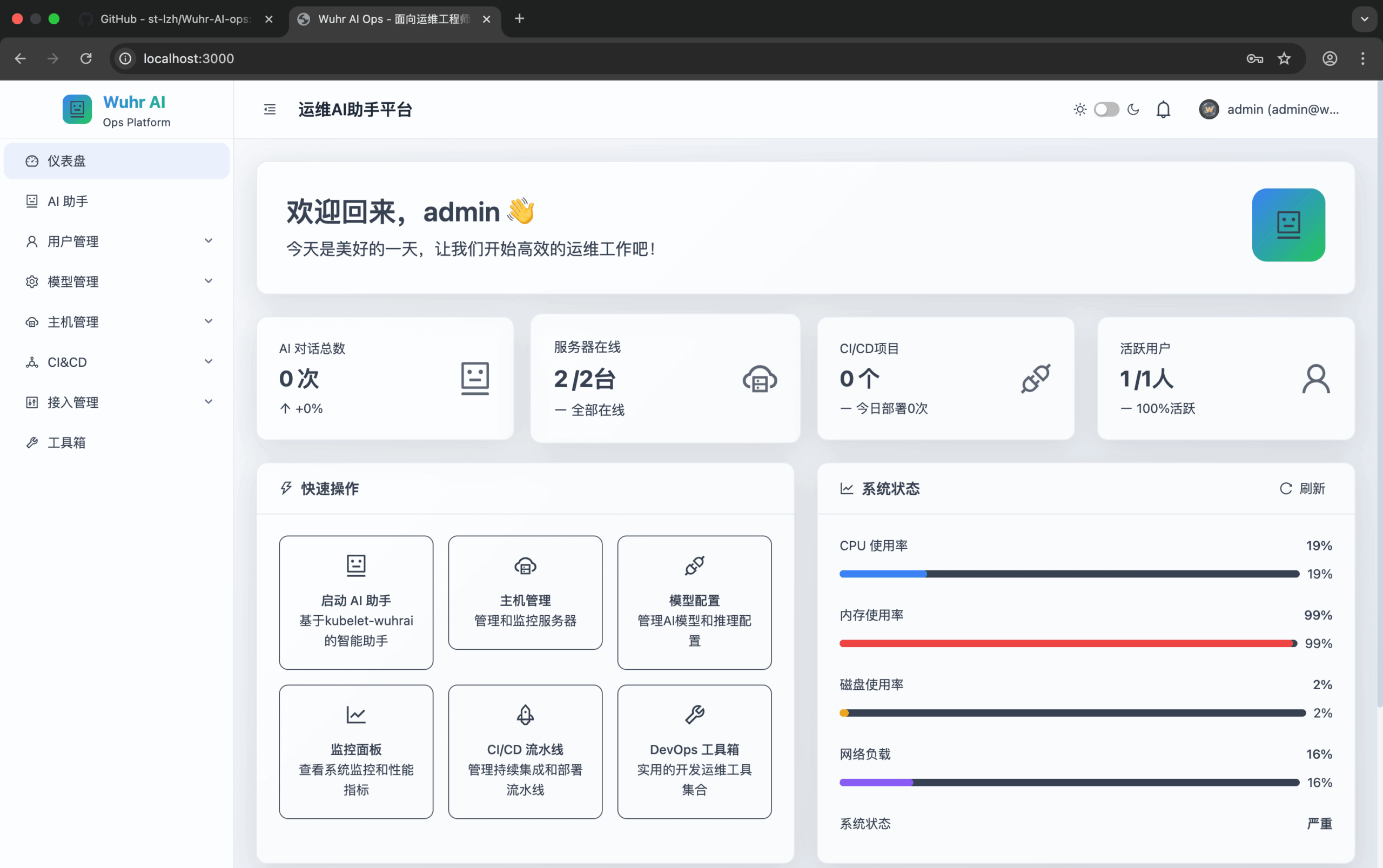

Wuhr-AI-ops is an open-source, AI-driven operations and maintenance (O&M) platform that combines a multimodal AI assistant, real-time monitoring, log analysis and CI/CD management. It uses AI to simplify complex O&M tasks and help operations teams manage IT systems efficiently. The platform accepts natural-language instructions and works with mainstream AI models such as GPT-4o and Gemini to provide a one-stop solution: users can monitor system performance, analyze logs, manage permissions and automate deployments. Wuhr-AI-ops suits enterprises and teams that need to manage local and remote hosts from one place. Its code is hosted on GitHub, so developers can easily contribute to it and customize it.

Feature List

- Intelligent AI Assistant: Supports natural language input, calls models such as GPT-4o and Gemini, and performs O&M tasks.

- Multi-mode command execution: Supports both Kubernetes cluster commands and Linux system commands, switching automatically to the appropriate execution environment.

- Real-time monitoring: Integrates ELK for log analysis and Grafana for performance monitoring, so system status can be viewed in real time.

- CI/CD management: Supports automated deployment pipelines and integrates with Jenkins for an efficient development process.

- Rights Management: Role-based access control with approval workflows that keep the system secure.

- Multi-environment support: Unified management of local and remote hosts simplifies operations across multiple environments.

How to Use

Installation

Wuhr-AI-ops must be installed on a machine with Node.js and Docker (including Docker Compose). The installation steps are as follows:

- Clone the project:

`git clone https://github.com/st-lzh/wuhr-ai-ops.git`

`cd wuhr-ai-ops`

This downloads the project code and switches into the project directory.

- Configure environment variables:

Copy the sample environment file with `cp .env.example .env`, then open `.env` in a text editor and set the database connection string and the API key for the AI model, for example:

`DATABASE_URL=postgresql://user:password@localhost:5432/wuhrai`

`OPENAI_API_KEY=sk-xxx`

Make sure the database address is correct and the API key is valid.
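
Before running the database steps, it can help to confirm that `.env` is complete. The helper below is a standalone Python sketch, not part of the project: it assumes a simple `KEY=VALUE` file and checks only the two keys from the example above, while the real `.env.example` may define more settings.

```python
from urllib.parse import urlparse

# Assumption: only the two keys from the example are checked; the project's
# .env.example may define additional required settings.
REQUIRED_KEYS = ("DATABASE_URL", "OPENAI_API_KEY")


def parse_env(text: str) -> dict[str, str]:
    """Parse simple KEY=VALUE lines, ignoring blank lines and # comments."""
    env: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop surrounding quotes, e.g. KEY="value".
        env[key.strip()] = value.strip().strip("\"'")
    return env


def check_env(env: dict[str, str]) -> list[str]:
    """Return a list of problems found; an empty list means the check passed."""
    problems = [f"{key} is missing or empty" for key in REQUIRED_KEYS if not env.get(key)]
    url = env.get("DATABASE_URL")
    if url:
        parsed = urlparse(url)
        if parsed.scheme not in ("postgresql", "postgres"):
            problems.append("DATABASE_URL should start with postgresql://")
        if not parsed.hostname or not parsed.path.strip("/"):
            problems.append("DATABASE_URL needs a host and a database name")
    return problems
```

For example, `check_env(parse_env(open(".env", encoding="utf-8").read()))` returns an empty list when both keys are set and the database URL has a host and a database name.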

- Set up an npm mirror (for users in mainland China):

To speed up dependency installation, point npm at a mirror registry:

`npm config set registry https://registry.npmmirror.com/`

- Download the kubelet-wuhrai tool:

Download the binary and make it executable:

`wget -O kubelet-wuhrai https://wuhrai-wordpress.oss-cn-hangzhou.aliyuncs.com/kubelet-wuhrai`

`chmod +x kubelet-wuhrai`

- Start the database services:

Start PostgreSQL, Redis and pgAdmin with Docker Compose, then wait 30 seconds so the services can finish starting:

`docker-compose up -d postgres redis pgadmin`

`sleep 30`
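
A fixed `sleep 30` can be too short on a slow machine and longer than needed on a fast one. One alternative is to poll until each port accepts TCP connections. This Python sketch assumes Docker Compose publishes PostgreSQL on localhost port 5432 (the port in the `DATABASE_URL` example) and Redis on its default port 6379; an open port only shows that a service is listening, not that it has finished initializing.

```python
import socket
import time

# Assumption: docker-compose publishes these services on localhost with their
# default ports (5432 matches the DATABASE_URL example; 6379 is Redis' default).
SERVICES = {"postgres": ("localhost", 5432), "redis": ("localhost", 6379)}


def wait_for_port(host: str, port: int, timeout: float = 60.0, interval: float = 1.0) -> bool:
    """Retry a TCP connect until it succeeds (True) or `timeout` seconds pass (False)."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)


def wait_for_services(timeout: float = 60.0) -> dict[str, bool]:
    """Wait for each service in SERVICES in turn and report which ones came up."""
    return {name: wait_for_port(host, port, timeout) for name, (host, port) in SERVICES.items()}
```

Calling `wait_for_services()` after `docker-compose up -d` returns a dictionary such as `{"postgres": True, "redis": True}` once both ports answer, or `False` for any service that did not come up within the timeout.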

- Install dependencies:

Install the project dependencies:

`npm install`

- Initialize the database:

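Create the database schema and data:

`npx prisma migrate reset --force`

`npx prisma generate`

`npx prisma db push`

Note that `prisma migrate reset` drops and recreates the database and `--force` skips the confirmation prompt, so do not point `DATABASE_URL` at a database whose data you need to keep.

- Initialize users and permissions: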

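Run the following scripts to create the administrator account and set up permissions:

`node scripts/ensure-admin-user.js`

`node scripts/init-permissions.js`

`node scripts/init-super-admin.ts`

The last script is TypeScript; if your Node.js version cannot run `.ts` files directly, you may need a TypeScript runner such as `npx tsx` instead of `node`.

- Initialize preset models and ELK templates: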

Load the preset AI model configurations and the ELK log-analysis templates:

`node scripts/init-preset-models.js`

`node scripts/init-elk-templates.js`

- Start the development server:

Start the development environment with `npm run dev`. Once it is running, open the local service in a browser; the port depends on your configuration.

- Run the tests (optional):

Run `npm test` to verify that the project works correctly.

Using the Main Features

Intelligent AI Assistant

The core of Wuhr-AI-ops is its intelligent AI assistant. Users enter O&M requests in natural language, such as "check logs in production" or "deploy latest code to test server"; the assistant parses the request, calls the GPT-4o or Gemini model to generate the corresponding commands, and then executes them. The steps are as follows:

- Log in to the Wuhr-AI-ops web interface.

- Enter natural language commands in the AI Assistant input box.

- The system automatically parses and displays execution results, such as log analysis reports or deployment status.

- To switch AI models, replace the API key in the `.env` file.
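
The feature list mentions switching automatically between Kubernetes and Linux command execution, but this article does not describe how Wuhr-AI-ops makes that choice (the assistant is described as handing parsing to the AI model). The following is therefore only a toy keyword heuristic in Python that illustrates the idea of routing a request to an execution environment; the keyword list and the `Route` type are hypothetical.

```python
import re
from dataclasses import dataclass

# Hypothetical keyword list for illustration only; the platform itself is
# described as relying on the AI model, not on a fixed table like this.
K8S_KEYWORDS = re.compile(
    r"\b(kubernetes|k8s|kubectl|cluster|pods?|deployments?|namespaces?)\b", re.IGNORECASE
)


@dataclass
class Route:
    environment: str  # "kubernetes" or "linux"
    reason: str


def route_request(text: str) -> Route:
    """Pick an execution environment for a natural-language request."""
    match = K8S_KEYWORDS.search(text)
    if match:
        return Route("kubernetes", f"mentions '{match.group(0)}'")
    # Otherwise fall back to plain Linux shell commands on the target host.
    return Route("linux", "no Kubernetes keywords found")
```

A model-based router would handle phrasing that no keyword list anticipates; the point here is only that the environment decision happens before any command is generated or run.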

Real-Time Monitoring

The platform integrates ELK and Grafana to monitor system performance and logs in real time:

- ELK Log Analysis: Select the "Log Analysis" module in the web interface to view system logs. Users can filter by time range or keyword to locate error logs quickly. For example, entering "error" displays all error logs.

- Grafana Performance Monitoring: Open the Grafana dashboard to view metrics such as CPU, memory and network usage. Users can customize the dashboard with panels for specific items, such as Kubernetes Pod status.
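
A keyword-plus-time-range filter like the one described for ELK log analysis maps naturally onto an Elasticsearch `bool` query. The Python sketch below builds such a query body; the field names `message` and `@timestamp` are common Logstash/Filebeat defaults and are assumptions, since the platform's actual index mapping is not documented here.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def build_log_query(keyword: str, start: datetime, end: datetime,
                    text_field: str = "message", time_field: str = "@timestamp",
                    size: int = 100) -> dict:
    """Build an Elasticsearch query body: keyword match within a time range."""
    return {
        "size": size,
        "sort": [{time_field: {"order": "desc"}}],  # newest entries first
        "query": {
            "bool": {
                # Scored full-text match on the log message (assumed field name).
                "must": [{"match": {text_field: keyword}}],
                # Non-scoring filter restricting results to the time window.
                "filter": [{"range": {time_field: {"gte": start.isoformat(),
                                                   "lte": end.isoformat()}}}],
            }
        },
    }


def last_hour_errors(now: Optional[datetime] = None) -> dict:
    """Example: entries matching "error" from the hour before `now` (UTC by default)."""
    now = now or datetime.now(timezone.utc)
    return build_log_query("error", now - timedelta(hours=1), now)
```

The resulting dictionary would be sent as the JSON body of a `_search` request, for example `POST /<index>/_search`, where `<index>` stands for whatever index pattern a given deployment uses.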

CI/CD Management

Wuhr-AI-ops supports automated deployment pipelines with Jenkins integration:

- Select the "CI/CD Management" module in the web interface.

- Configure Jenkins jobs, for example by setting the code repository address and the deployment target.

- Deployments are triggered by natural language commands, such as "deploy main branch to production".

- The system automatically executes the Jenkins pipeline and returns deployment results.
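
Jenkins offers a remote-access endpoint, `POST /job/<job-name>/buildWithParameters`, that starts a parameterized build. The Python sketch below shows one way a command like "deploy main branch to production" could be turned into such a request URL; the regular expression, the job name and the parameter names `BRANCH` and `TARGET_ENV` are hypothetical and do not come from the Wuhr-AI-ops code.

```python
import re
from typing import Optional
from urllib.parse import quote, urlencode

# Hypothetical phrasing rule for commands like "deploy main branch to production".
DEPLOY_PATTERN = re.compile(
    r"\bdeploy\s+(?P<branch>[\w./-]+)\s+branch\s+to\s+(?P<env>[\w-]+)", re.IGNORECASE
)


def parse_deploy_command(text: str) -> Optional[dict[str, str]]:
    """Extract build parameters (BRANCH and TARGET_ENV are hypothetical names)."""
    match = DEPLOY_PATTERN.search(text)
    if match is None:
        return None
    return {"BRANCH": match.group("branch"), "TARGET_ENV": match.group("env").lower()}


def build_trigger_url(jenkins_url: str, job: str, params: dict[str, str]) -> str:
    """URL for the Jenkins endpoint that starts a parameterized build."""
    base = jenkins_url.rstrip("/")
    return f"{base}/job/{quote(job, safe='')}/buildWithParameters?{urlencode(params)}"
```

Actually triggering the build would mean sending an HTTP POST to that URL, authenticated with a Jenkins user and API token, and the job would need to define parameters with matching names.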

Rights Management

The platform supports role-based access control:

- Administrators can add users and roles through the Rights Management module.

- Set up approval processes, such as requiring senior administrators to approve high-risk operations.

- After logging in, users can access only the features their role permits, which keeps the system secure.
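
Role-based access control with an approval step comes down to two checks: does the role grant the permission, and, if the operation is high-risk, has a senior administrator approved it? The Python sketch below encodes exactly those two checks; the role names, permission strings and the set of high-risk operations are illustrative assumptions, not the platform's actual configuration.

```python
from dataclasses import dataclass
from typing import Optional

# Illustrative role table; names and permission strings are assumptions.
ROLE_PERMISSIONS = {
    "viewer": {"logs:read", "monitoring:read"},
    "operator": {"logs:read", "monitoring:read", "deploy:test"},
    "admin": {"logs:read", "monitoring:read", "deploy:test", "deploy:production", "users:manage"},
    "senior_admin": {"logs:read", "monitoring:read", "deploy:test", "deploy:production", "users:manage"},
}
# Operations that additionally need a senior administrator's approval.
HIGH_RISK = {"deploy:production", "users:manage"}


@dataclass
class Decision:
    allowed: bool
    needs_approval: bool
    reason: str


def authorize(role: str, permission: str, approver_role: Optional[str] = None) -> Decision:
    """Apply the role check first, then the approval rule for high-risk operations."""
    if permission not in ROLE_PERMISSIONS.get(role, set()):
        return Decision(False, False, f"role '{role}' lacks '{permission}'")
    if permission in HIGH_RISK and approver_role != "senior_admin":
        return Decision(False, True, "waiting for senior administrator approval")
    return Decision(True, False, "allowed")
```

Checking the role before the approval rule means an unauthorized user never reaches the approval queue, which keeps approvers from seeing requests that would be rejected anyway.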

Notes

- Ensure that the Docker and Node.js versions are compatible with the project requirements (refer to the GitHub documentation).

- Users in mainland China are advised to use the Chinese installation script `./install-zh.sh`; other users can use `./install-en.sh`.

- Check the GitHub repository regularly for new features and fixes.

Application Scenarios

- Enterprise IT Operations

Wuhr-AI-ops is well suited to managing complex enterprise IT systems. Operations teams can troubleshoot problems quickly in natural language, for example by analyzing server logs or monitoring Kubernetes cluster performance, which reduces time spent on manual tasks.

- Development Team Collaboration

Development teams can use the CI/CD features to automate code deployment to test or production environments. Combined with rights management, this ensures that only authorized personnel can perform sensitive operations.

- Multi-Environment Management

For organizations that need to manage both local and cloud hosts, the platform provides a unified interface that simplifies cross-environment operations tasks, such as synchronizing configuration or monitoring remote servers.

Q&A

- What AI models does Wuhr-AI-ops support?

The platform supports mainstream multimodal AI models such as GPT-4o and Gemini. To switch models, configure the corresponding API key in the `.env` file.

- How do I secure my system?

The platform enforces security through role-based access control and approval workflows. Sensitive operations require administrator approval, and logs and permission changes are traceable.

- Is it suitable for small teams?

Yes. Wuhr-AI-ops is open source and easy for small teams to deploy, and its natural-language interface lowers the technical barrier.

- How do I handle dependency errors during installation?

Check that your Node.js and npm versions meet the project's requirements and, if you are in mainland China, use the npmmirror registry to speed up downloads. If the problem persists, search the GitHub Issues page or open a new issue.