WhiteLightning is an open source command line tool designed to help developers quickly generate lightweight text classification models with a single line of command. The tool generates synthetic data using a large language model, trains ONNX models smaller than 1MB through faculty-student distillation techniques, and supports fully offline operation for edge devices such as Raspberry Pi, cell phones, or low-power devices.Developed by the Ukrainian company Inoxoft over the course of a year, WhiteLightning supports multi-language programming with an emphasis on privacy protection and low-cost deployment. Users can generate models without preparing real data, which is suitable for scenarios such as sentiment analysis and spam filtering. The project is licensed under the GPL-3.0 license, the model is licensed under the MIT license, and community support is available through GitHub and Discord.

Function List

- Generate ONNX text classification model less than 1MB with one click, support multi-class classification, such as sentiment analysis, spam detection.

- Generate synthetic training data using large language models (e.g., Grok-3-beta, GPT-4o-mini) without real data.

- Supports complete offline operation, no cloud API required after model deployment to protect data privacy.

- Compatible with Python, Rust, Swift, Node.js, Dart and other programming languages, adapted to a variety of development environments.

- Provides Docker containers with macOS, Linux, and Windows support to simplify installation and operation.

- Integration with GitHub Actions to support cloud-based model training and CI/CD automation processes.

- Provides an interactive command generator to simplify complex parameter configuration.

- Edge case generation is supported to ensure stable model performance in complex input scenarios.

- The model training process is transparent, with logs showing progress, accuracy and loss values.

Using Help

Installation process

WhiteLightning is distributed via Docker containers to ensure cross-platform consistency and simplicity. Here are the detailed installation steps:

- Installing Docker

Ensure that Docker is installed on the device. macOS and Linux users can download the installation package from the Docker website. Windows users are advised to run the command using PowerShell. Verify Docker installation:docker --versionIf not installed, please refer to the official guide to complete the installation.

- Pulling WhiteLightning mirrors

Pull the latest image from Docker Hub:docker pull ghcr.io/inoxoft/whitelightning:latestThe image has all dependencies built-in, no additional configuration of Python or other environments is required.

- Setting the API key

WhiteLightning needs to call large language models (such as those provided by OpenRouter) to generate synthetic data during the training phase, so API keys need to be configured. For example:export OPEN_ROUTER_API_KEY="sk-..."将

"sk-..."Replace it with the actual key obtained from the OpenRouter website. The key is used for training only, and the generated model runs without an internet connection. - Running containers

Run WhiteLightning using Docker to generate a classification model. For example, categorize customer reviews as positive, neutral, or negative:docker run --rm -e OPEN_ROUTER_API_KEY="sk-..." -v "$(pwd)":/app/models ghcr.io/inoxoft/whitelightning:latest python -m text_classifier.agent -p "Categorize customer reviews as positive, neutral, or negative"Command Description:

--rmAutomatically delete containers after running to save space.-eSet the API key environment variable.-v "$(pwd)":/app/modelsMount the current directory to the container and save the generated model.-pAssigning a categorized task requires a succinct description of the task objectives.

Windows users will$(pwd)Replace with${PWD}。

- Verify Installation

After running the command, the tool generates the ONNX model in about 10 minutes, saves it to the mount directory (e.g.models_multiclass/customer_review_classifier/customer_review_classifier.onnx). Check the log to confirm that training is complete, example log:✅ - INFO - Test set evaluation - Loss: 0.0006, Accuracy: 1.0000Make sure the output directory contains the model files.

Using the main functions

The core function of WhiteLightning is to generate and deploy lightweight text classification models with one click. Below is the detailed operation flow:

Generate a text categorization model

Users are provided with-pThe parameters specify the classification task, and the tool automatically completes synthetic data generation and model training. For example, a spam classifier is generated:

docker run --rm -e OPEN_ROUTER_API_KEY="sk-..." -v "$(pwd)":/app/models ghcr.io/inoxoft/whitelightning:latest python -m text_classifier.agent -p "Classify emails as spam or not spam"

- workflows:

- Tools invoke large language models (such as

x-ai/grok-3-beta或openai/gpt-4o-mini) Generate synthetic data, default 50 entries per category, including edge cases. - Training lightweight ONNX models based on synthetic data using faculty-student distillation.

- After training is complete, the model is exported to a specified directory and the log shows the training progress (e.g.

Epoch 20/20 - accuracy: 1.0000)。

- Tools invoke large language models (such as

- exports: Model files (e.g.

customer_review_classifier.onnx) and logs that record data generation and training details.

Deployment and operational models

The generated ONNX model supports running in multiple languages, has a small footprint, and is suitable for edge devices. The following is an example in Python:

- Install the ONNX runtime:

pip install onnxruntime - Load and run the model:

import onnxruntime as ort import numpy as np session = ort.InferenceSession("models_multiclass/customer_review_classifier/customer_review_classifier.onnx") input_data = np.array(["This product is amazing!"], dtype=np.object_) outputs = session.run(None, {"input": input_data}) print(outputs) # 输出结果,如 ['positive'] - For other languages (e.g. Rust, Swift) refer to the runtime documentation on the official ONNX website.

Advanced Configuration

WhiteLightning offers flexible parameter configuration to optimize model performance:

- Cue Optimization Loop: By

-rThe parameter sets the number of optimizations, default 1. Example:-r 3Adding cycles improves data quality but extends training time.

- Edge Case Generation: By

--generate-edge-casesEnable, on by default, to generate 50 edge cases per category:--generate-edge-cases TrueEnsure that the model behaves consistently when processing complex inputs.

- Model Selection: Supports a variety of large language models that can be specified in the configuration file (e.g.

x-ai/grok-3-beta)。 - Data volume adjustment: By

--target-volume-per-classSet the amount of data per category, default 50 entries. Example:--target-volume-per-class 100

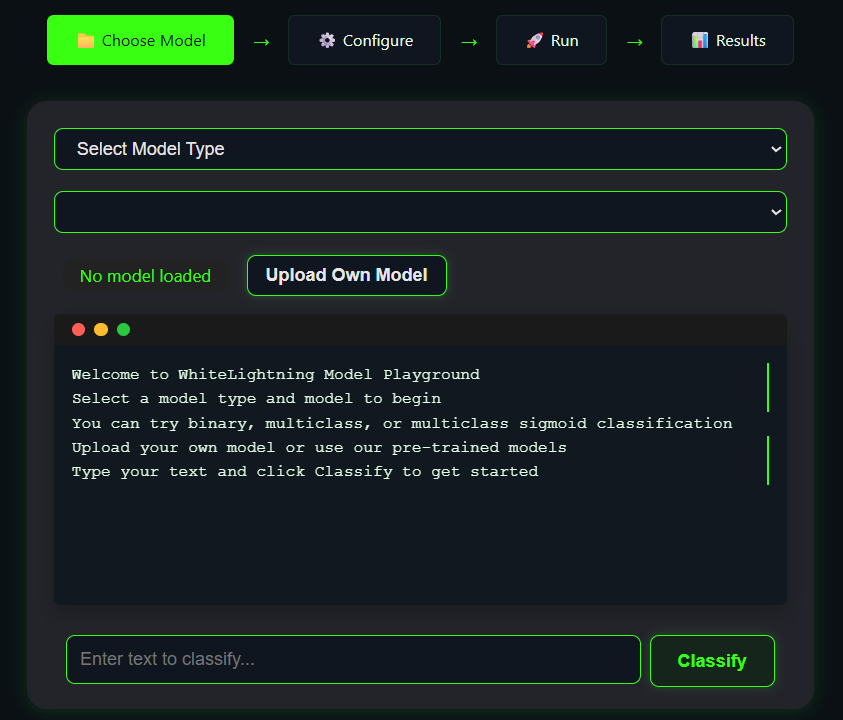

Online Playground

WhiteLightning provides an online Playground that allows users to test model generation without installation. Visit the Playground, enter a task description (e.g., "Classify tweets as happy or sad"), click Generate, and view the model output.The Playground is ideal for quick validation of a task.

Community Support and Commissioning

- GitHub ActionsTo support cloud training, visit the "Actions" page of the GitHub repository, select "Test Model Training", configure the parameters and run it.

- adjust components during testing:

- If the Docker command fails, check if the Docker service is running and confirm the permissions.

- If the API key is invalid, re-obtain it from OpenRouter.

- The log file is located in the output directory and records detailed error messages.

- Community Support: Join the Discord server to communicate with developers, or submit issues to GitHub.

caveat

- The training phase involves stabilizing the network connection in order to call the API to generate data.

- The model runs completely offline and is suitable for privacy-sensitive scenarios.

- Ensure that the output directory has write permissions, and that Windows users pay attention to the path format.

- Model training time varies depending on the complexity of the task and the amount of data, usually 10-15 minutes.

application scenario

- Mobile Application Development

WhiteLightning generates lightweight models that are suitable for integration into mobile applications such as sentiment analysis functions in chat software. The models have a small footprint, run efficiently, and are suitable for low-power devices. - Edge device deployment

Run text categorization models on a Raspberry Pi or industrial controller to process real-time logs or user input. Run offline to avoid data leakage, suitable for industrial scenarios. - Privacy Sensitive Scenarios

When processing sensitive text data in the medical and financial fields, WhiteLightning's offline model ensures that the data is not uploaded to the cloud, protecting privacy. - Rapid Prototyping

Developers can quickly generate classification models, test the effect of different tasks, and shorten the development cycle, suitable for startups or research teams.

QA

- Does WhiteLightning require constant networking?

Only the training phase requires networking to call the API to generate synthetic data, and the generated model runs completely offline. - What is the size of the model?

Models are typically less than 1MB, depending on task complexity and data volume, and much smaller than traditional large-scale models. - What programming languages are supported?

The ONNX model supports Python, Rust, Swift, Node.js, Dart, C++, etc. Refer to the ONNX runtime documentation. - How do you handle training failures?

Check API keys, network connections, or Docker permissions. Log files document detailed errors, and community help can be sought on GitHub or Discord. - Is it necessary to prepare real data?

No. WhiteLightning uses large language models to generate synthetic data and supports zero-data scene modeling.