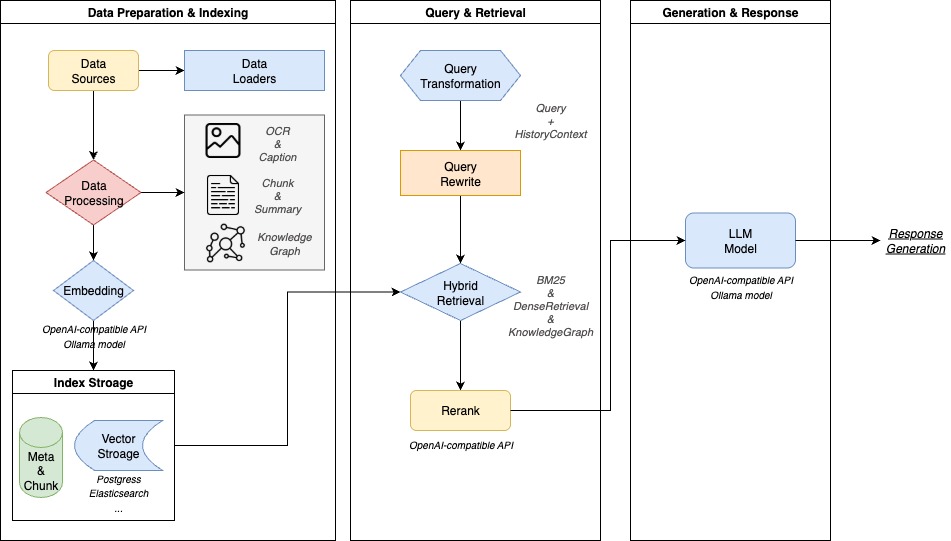

WeKnora is an enterprise-grade document understanding and retrieval Q&A framework open-sourced by Tencent. It is designed for documents with complex structure and diverse content. At its core is retrieval-augmented generation (RAG). The framework retrieves relevant context snippets from documents and combines them with a large language model, so the answers it generates are more accurate and better grounded in the facts. The framework uses a modular design that decouples document parsing, content retrieval, and model inference, forming a complete and efficient document Q&A pipeline. Users can manage documents and ask questions through an intuitive web interface, or use the API for secondary development and integration. The framework supports private deployment, which keeps enterprise data secure and under the organization's control.
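To make the retrieve-then-generate idea concrete, here is a minimal Python sketch of the RAG pattern. It is not WeKnora's code: the `Chunk` type, the `retrieve` and `build_prompt` helpers, the prompt wording, and the three-dimensional toy embeddings are all hypothetical. In a real system the vectors come from an embedding model, and the final prompt is sent to a large language model.

```python
import math
from dataclasses import dataclass


@dataclass
class Chunk:
    source: str             # name of the document the snippet came from
    text: str               # the snippet itself
    embedding: list[float]  # vector produced by an embedding model


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity of two vectors; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query_embedding: list[float], chunks: list[Chunk], k: int = 2) -> list[Chunk]:
    """Return the k chunks whose embeddings are most similar to the query."""
    ranked = sorted(chunks, key=lambda c: cosine(query_embedding, c.embedding), reverse=True)
    return ranked[:k]


def build_prompt(question: str, hits: list[Chunk]) -> str:
    """Combine retrieved snippets and the question into one prompt with numbered citations."""
    context = "\n".join(f"[{i}] ({c.source}) {c.text}" for i, c in enumerate(hits, start=1))
    return (
        "Answer using only the context below and cite sources as [n].\n\n"
        f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"
    )


if __name__ == "__main__":
    # Made-up snippets with toy 3-dimensional "embeddings".
    chunks = [
        Chunk("reimbursement.pdf", "Submit receipts within 30 days.", [0.9, 0.1, 0.0]),
        Chunk("handbook.docx", "Office hours are 9:00 to 18:00.", [0.1, 0.9, 0.0]),
        Chunk("faq.md", "Reimbursements are approved by your manager.", [0.8, 0.0, 0.2]),
    ]
    hits = retrieve([1.0, 0.0, 0.0], chunks, k=2)
    print(build_prompt("What is the reimbursement process?", hits))
```

With the query vector `[1.0, 0.0, 0.0]`, `retrieve` ranks `reimbursement.pdf` and `faq.md` highest, so only those two snippets reach the prompt. The numbered `[n]` labels are what let an answer cite its sources.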

Features

- Multimodal Document Analysis: Accurately parses PDF files, Word documents, images, and other documents that mix text and graphics, extracting text, tables, and image semantics.

- Modular RAG Pipeline: Retrieval strategies, large language models, and vector databases can be assembled freely. It integrates with platforms such as Ollama and switches flexibly between mainstream models such as Qwen and DeepSeek.

- Intelligent Reasoning Q&A: Uses large language models to understand user intent and document context, supporting accurate Q&A and multi-turn conversations.

- Flexible Retrieval Strategies: Combines keyword search, vector search, and knowledge graph search, and lets you mix them freely to improve the accuracy of retrieved results.

- Out-of-the-Box Experience: Provides one-click startup scripts and an intuitive web UI, so non-technical users can deploy and start using it quickly.

- Secure, Controlled Deployment: Supports on-premises and private cloud deployment, with built-in monitoring and logging for end-to-end observability, so users keep full control of their data.

- Knowledge Graph Construction: Transforms document content into a knowledge graph that visualizes how pieces of information relate, helping users understand documents and retrieve information in depth.

- Rich Model Support: Compatible with a variety of embedding models (e.g., BGE, GTE), vector databases (e.g., PostgreSQL, Elasticsearch), and mainstream large language models.
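The feature list says WeKnora mixes keyword, vector, and knowledge graph search, but not how the separate result lists are merged. One widely used technique for merging ranked lists is reciprocal rank fusion (RRF). The sketch below illustrates that technique only. The function name, the constant `k = 60` (a value commonly used with RRF), and the document IDs are assumptions, not WeKnora's API.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[tuple[str, float]]:
    """Fuse several best-first rankings of document IDs into a single ranking.

    A document at 1-based position r in one ranking adds 1 / (k + r) to its
    fused score, so documents that rank well in several lists rise to the top.
    """
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest score first; ties broken by document ID so the order is deterministic.
    return sorted(scores.items(), key=lambda item: (-item[1], item[0]))


if __name__ == "__main__":
    keyword_hits = ["d1", "d2", "d3"]  # e.g. from a keyword (BM25-style) index
    vector_hits = ["d3", "d1", "d4"]   # e.g. from embedding similarity
    graph_hits = ["d1", "d4"]          # e.g. from knowledge graph lookups
    for doc_id, score in reciprocal_rank_fusion([keyword_hits, vector_hits, graph_hits]):
        print(f"{doc_id}: {score:.4f}")
```

Here `d1` comes first because it appears near the top of all three lists, followed by `d3`, `d4`, and `d2`. RRF uses only rank positions, which avoids having to calibrate keyword scores against cosine similarities.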

Usage Guide

WeKnora provides a complete toolchain and uses Docker for one-click deployment and startup. You can run the entire system locally without complex configuration, and the same setup is ready for production out of the box.

Environment Requirements

Before installing, make sure the following three tools are installed on your computer:

- Docker: manages and runs application containers.
- Docker Compose: defines and runs multi-container Docker applications.
- Git: clones the code repository from GitHub.

Installation and Startup

- Clone the Code Repository

First, open a terminal (command-line tool) and use the `git` command to clone the official WeKnora repository to your computer:

`git clone https://github.com/Tencent/WeKnora.git`

- Go to the Project Directory

Once cloning completes, change into the project folder:

`cd WeKnora`

- Configure Environment Variables

WeKnora manages all of the project's environment variables through a `.env` file. Create your own configuration file by copying the example file that ships with the project:

`cp .env.example .env`

After copying, open `.env` in a text editor and fill in your own settings according to the comments in the file. First-time users can skip this step for now, start the services directly, and configure everything later through the web interface.

- One-Click Service Startup

The project provides a convenient startup script that launches all required services at once, including Ollama (for managing local large models) and WeKnora's backend services:

`./scripts/start_all.sh`

Alternatively, the `make` command achieves the same result:

`make start-all`

Once the terminal reports that the services have started successfully, you can begin using WeKnora.

- Accessing the Web Interface

After a successful launch, open the following addresses in your browser:

  - Web UI: http://localhost
  - Backend API documentation: http://localhost:8080
  - Distributed tracing (Jaeger): http://localhost:16686 (used by developers to observe internal system calls)
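To check from a script that the three services listed above are responding, a small Python helper like the following can be useful. It is hypothetical and not part of WeKnora. It assumes the default addresses shown above and treats any HTTP response, including an error status such as 404, as evidence that the service is listening.

```python
import urllib.error
import urllib.request

# Default addresses from the list above; adjust if you changed ports.
SERVICES = {
    "Web UI": "http://localhost",
    "Backend API": "http://localhost:8080",
    "Jaeger UI": "http://localhost:16686",
}

# Ignore any HTTP(S)_PROXY settings: these services run on the local machine.
_OPENER = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def is_up(url: str, timeout: float = 3.0) -> bool:
    """Return True if something answers HTTP at `url`, even with an error status."""
    try:
        with _OPENER.open(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # The server replied (e.g. 404 on a bare API root), so it is running.
        return True
    except OSError:
        # URLError (connection refused, DNS failure) and timeouts are all OSErrors.
        return False


if __name__ == "__main__":
    for name, url in SERVICES.items():
        print(f"{name:12} {url:26} {'up' if is_up(url) else 'down'}")
```

Catching `HTTPError` before `OSError` matters. `HTTPError` is itself a subclass of `OSError`, so the more general clause would otherwise report a responding server as down.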


Initialize Model Configuration

If this is your first time using WeKnora, visiting http://localhost automatically redirects you to the initial configuration page. This page lets you configure key components such as the large language model and the embedding model through a graphical interface, so you do not have to edit configuration files by hand. Follow the prompts on the page to enter the correct model API address, API key, and other details. The system then saves the settings automatically and takes you to the knowledge base home page.
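If you plan to use local models served by Ollama, it helps to confirm which model names Ollama actually has before you enter them on the initialization page. The sketch below queries Ollama's `/api/tags` endpoint, which lists locally pulled models. It assumes Ollama is reachable at its default address, `http://localhost:11434`. Whether WeKnora's startup script exposes Ollama on that port in your setup is an assumption to verify, and the helper names are hypothetical.

```python
import json
import urllib.request

# Ollama's default listen address; an assumption about your deployment.
OLLAMA_URL = "http://localhost:11434"


def parse_model_names(payload: dict) -> list[str]:
    """Extract model names from an /api/tags response body."""
    return [model["name"] for model in payload.get("models", [])]


def list_local_models(base_url: str = OLLAMA_URL, timeout: float = 5.0) -> list[str]:
    """Ask Ollama which models have been pulled locally."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
        return parse_model_names(json.load(resp))


if __name__ == "__main__":
    try:
        names = list_local_models()
    except OSError as exc:  # URLError (refused connection, timeout) is an OSError
        print(f"Could not reach Ollama at {OLLAMA_URL}: {exc}")
    else:
        print("Models available locally:", ", ".join(names) or "(none pulled yet)")
```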

Basic Workflow

- Upload Knowledge Files

On the Knowledge Base Management page of the web interface, upload your documents (e.g., product manuals, company policies, research reports) by dragging and dropping the files or clicking the upload button. The system automatically parses each document, extracts its content, and builds a vector index for it. Processing progress and status are shown clearly in the interface.

- Conducting Intelligent Q&A

Once a document has been processed, go to the Q&A screen and type your question into the input box, for example "What is the company's reimbursement process?" or "How does this product handle failures?" The system performs a semantic search over the uploaded knowledge base to find the most relevant document fragments, then uses a large language model to generate a fluent, accurate answer. Each answer includes citations, so you can trace the information back to its source.

- Using the Knowledge Graph

For documents with a complex structure, you can enable the Knowledge Graph feature. The system analyzes the relationships between paragraphs and entities in the document and displays them visually as a graph. This helps you understand the document's internal logic more deeply, and it also gives the system a more structured basis for retrieval, which improves the relevance of answers.

- Stopping the Services

When you are done, run the following command to stop all services:

`./scripts/start_all.sh --stop`

Or use the `make` command:

`make stop-all`
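The upload step earlier in this workflow parses each document and builds a vector index over its content. RAG systems commonly split the extracted text into overlapping chunks before embedding it. WeKnora's actual parsing and chunking rules are not described here, so the following is only a minimal character-based sketch. The function name and default sizes are hypothetical.

```python
def split_into_chunks(text: str, chunk_size: int = 500, overlap: int = 50) -> list[str]:
    """Split text into windows of at most chunk_size characters.

    Consecutive windows share `overlap` characters, so text near a boundary
    appears in both neighbouring chunks instead of being cut off in one.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must satisfy 0 <= overlap < chunk_size")
    step = chunk_size - overlap
    chunks: list[str] = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reaches the end of the text
    return chunks


if __name__ == "__main__":
    sample = "Retrieval-augmented generation answers questions from indexed document chunks."
    for i, chunk in enumerate(split_into_chunks(sample, chunk_size=30, overlap=10)):
        print(i, repr(chunk))
```

For example, `split_into_chunks("abcdefghij", 4, 1)` returns `['abcd', 'defg', 'ghij']`. Each chunk starts three characters after the previous one and repeats its last character. Production systems usually split on sentence or paragraph boundaries and measure size in tokens rather than characters.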

Application Scenarios

- Enterprise Knowledge Management

Bring together knowledge scattered across the enterprise, such as internal documents, rules and regulations, and operation manuals, to build an intelligent Q&A portal. This helps employees find information faster and reduces internal training costs.

- Scientific Literature Analysis

Researchers can use it to quickly sift through and make sense of large volumes of academic papers, research reports, and scholarly materials. This speeds up preliminary literature review and supports research decisions.

- Product Technical Support

By loading a product's technical manuals and FAQs into the system, you can build an intelligent customer service assistant that is available 24/7. It quickly answers users' questions about product features, troubleshooting, and more, improving the quality of customer service.

- Legal Compliance Review

In the legal field, it can help attorneys and legal staff quickly locate key information across large numbers of contract clauses, regulations, policies, and cases, making compliance review more efficient.

- Financial Investment Research

Financial analysts can use it to process large volumes of company earnings reports, industry research reports, and market announcements, quickly extracting key data and opinions to support investment decisions.

FAQ

- What types of large language models does WeKnora support?

WeKnora supports a variety of mainstream large language models, such as Qwen (Tongyi Qianwen) and DeepSeek. Models can be managed through platforms such as Ollama, or you can connect to external API services. This makes it highly flexible, and you can switch models in the configuration file or on the web initialization page.

- Is there a fee to deploy WeKnora?

The WeKnora project is open source under the MIT license, so you are free to use, modify, and distribute it, including for commercial purposes. However, if your configuration uses a paid third-party large language model API, the model provider will charge you for that usage.

- Can non-technical people use this framework?

Yes. WeKnora provides an out-of-the-box web interface and a one-click startup script. Users can upload knowledge and ask questions by dragging and dropping files and clicking buttons, without writing any code, so the barrier to entry is low.

- Is the uploaded document data secure?

Very secure. WeKnora supports on-premises and private cloud deployment, which means the entire Q&A system can run on your own server or local computer. When it runs with locally hosted models, all uploaded documents and data stay under your control and are not sent to any external server, which keeps data secure even in highly sensitive scenarios. If you configure a third-party model API instead, the text passed to that model leaves your environment.