Collaborating with AI is sometimes like mentoring a junior programmer. You ask them to develop a new feature, and they quickly commit code that seems to complete the task. But in code review you find a fundamental logic flaw in the implementation and ask for a fix. Instead of going back to the design, understanding the root cause, and refactoring, the programmer may simply patch over the buggy code, which often introduces more problems and makes the code harder to maintain.

AI's reasoning process is often a "black box": once it starts down a certain path of thinking, it is very difficult to correct fundamentally. Sequential Thinking MCP was created to open this black box. It is like giving the AI a senior architect's blueprint for thinking: before any hands-on coding, every planning step and every decision is laid out clearly.

What is Sequential Thinking? Empowering AI to "plan" and "reflect"

In essence, Sequential Thinking does not inject new knowledge into the AI; it installs a "metacognitive" engine that lets the AI scrutinize its own thinking process the way a human expert would. This replaces the traditional black-box model of "one question, one answer" with a structured, dynamic conversation in which the reasoning process can be observed and guided.

The tool reshapes the workflow of AI in several ways:

- Structured decomposition: Instead of trying to tackle a complex task in one step, the AI learns to break it down into a series of manageable, actionable, and clear steps, like a project manager laying out a work plan.

- Dynamic correction: This is the closest thing it has to human "reflection". If the AI discovers during reasoning that an earlier judgment was wrong or that a better solution exists, it can revise its own thinking at any point instead of compounding the mistake.

- Multi-path exploration: When a decision point requires trade-offs, the AI does not have to commit to a single option. It can open different reasoning paths, explore the pros and cons of several options side by side, and provide a more comprehensive basis for the decision.

- Flexible scaling: The AI dynamically adjusts the number of steps and the depth of thinking to the actual complexity of the task, so that it neither misses critical information by thinking too shallowly nor wastes computational resources by thinking too deeply.

- Hypothesis and verification: This feature allows AI to work like a scientist. It will first come up with possible solutions (hypotheses) and then systematically verify or disprove these hypotheses through subsequent thinking steps to eventually find the optimal solution.

Sequential Thinking upgrades AI from a mere answer generator to a thinking partner that can plan, reflect, explore, and verify. Its thinking process is no longer a black box but a "glass box" that is transparent to the user and open to interaction at any time.

How to use Sequential Thinking in AI applications?

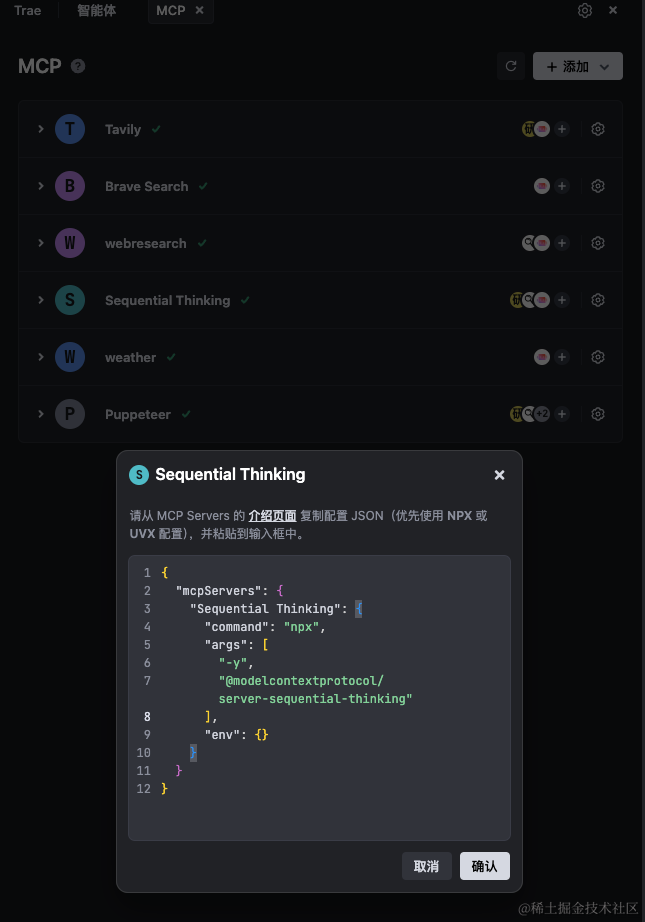

On an AI platform that supports MCP (Model Context Protocol), such as Trae AI, configuring Sequential Thinking is usually straightforward. Users can search the platform's marketplace and add the Sequential Thinking service.

Once added, the platform loads the service automatically. Users can then steer the AI toward the sequential_thinking tool through their prompts during a conversation.
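For clients that are configured through a file rather than a marketplace, registration often looks like the sketch below. It assumes the widely used `mcpServers` JSON layout and the reference server published on npm as `@modelcontextprotocol/server-sequential-thinking`; the helper name `build_mcp_config` is hypothetical, and the file name and location vary by platform, so check your client's documentation.

```python
import json


def build_mcp_config(server_name: str = "sequential-thinking") -> dict:
    """Return a config dict in the common `mcpServers` layout (an assumption,
    not specific to any one platform)."""
    return {
        "mcpServers": {
            server_name: {
                # Launch the reference server on demand through npx.
                "command": "npx",
                "args": ["-y", "@modelcontextprotocol/server-sequential-thinking"],
            }
        }
    }


if __name__ == "__main__":
    # Print the JSON you would paste into the client's MCP settings file.
    print(json.dumps(build_mcp_config(), indent=2))
```

Generating the fragment from code is only a convenience here; pasting the equivalent JSON by hand works just as well.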

sequential_thinking Tool Parameter Analysis

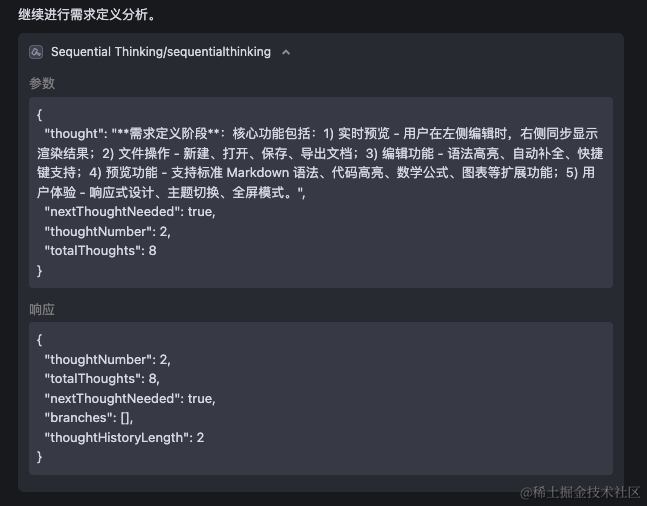

When the AI model calls the sequential_thinking tool, it passes a series of parameters that control the thought process. These parameters are usually generated and managed by the AI in the background, based on the user's prompt and the dialog context, so the user does not have to set them manually. Understanding them, however, helps you design more effective prompts.

The following are the core input parameters for the tool:

- `thought` (string): The content of the current thinking step.

- `nextThoughtNeeded` (boolean): Whether another thinking step is required. If it is `true`, the AI will continue to call the tool.

- `thoughtNumber` (integer): The number of the current thinking step, starting from 1.

- `totalThoughts` (integer): The estimated total number of steps needed to complete the task.

- `isRevision` (boolean, optional): Marks the current step as a revision of a previous thought.

- `revisesThought` (integer, optional): If `isRevision` is `true`, indicates which step is being revised.

- `branchFromThought` (integer, optional): The step from which the current thinking branches off, used to explore a different reasoning path.

- `branchId` (string, optional): A unique identifier for the branch.

- `needsMoreThoughts` (boolean, optional): Indicates that the AI judges it may need more thinking steps than originally planned.
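A few hypothetical calls make these parameters concrete. The sketch below types the arguments and checks some consistency rules; the field names come from the parameter list, while the `validate` helper, its rules, and the sample thoughts are illustrative assumptions rather than the service's actual validation.

```python
from typing import TypedDict


class ThoughtCall(TypedDict, total=False):
    """Arguments for one sequential_thinking call."""
    thought: str
    nextThoughtNeeded: bool
    thoughtNumber: int
    totalThoughts: int
    isRevision: bool
    revisesThought: int
    branchFromThought: int
    branchId: str
    needsMoreThoughts: bool


REQUIRED = ("thought", "nextThoughtNeeded", "thoughtNumber", "totalThoughts")


def validate(call: dict) -> list[str]:
    """Return a list of problems; an empty list means the call looks well formed.
    These checks are illustrative, not the real service's rules."""
    problems = [f"missing {key}" for key in REQUIRED if key not in call]
    if call.get("thoughtNumber", 1) < 1:
        problems.append("thoughtNumber starts at 1")
    if call.get("isRevision") and "revisesThought" not in call:
        problems.append("isRevision is true but revisesThought is missing")
    if "branchFromThought" in call and "branchId" not in call:
        problems.append("branchFromThought given without branchId")
    return problems


# An ordinary step, a revision of step 1, and a branch off step 2 (all hypothetical).
step: ThoughtCall = {
    "thought": "Define requirements: split view, live preview, file operations.",
    "thoughtNumber": 1, "totalThoughts": 4, "nextThoughtNeeded": True,
}
revision: ThoughtCall = {
    "thought": "Revise step 1: large documents need incremental rendering.",
    "thoughtNumber": 5, "totalThoughts": 6, "nextThoughtNeeded": True,
    "isRevision": True, "revisesThought": 1, "needsMoreThoughts": True,
}
branch: ThoughtCall = {
    "thought": "Alternative: evaluate CodeMirror instead of Monaco Editor.",
    "thoughtNumber": 3, "totalThoughts": 6, "nextThoughtNeeded": True,
    "branchFromThought": 2, "branchId": "codemirror-option",
}
```

Prompts that ask for a "revision" or for "comparing alternatives" are what typically lead the model to fill in the revision and branch fields.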

In short, these parameters are the operating instructions for the AI's structured thinking. The AI model fills them in based on the user's high-level instructions (the prompt) and passes them to the sequential_thinking service. The service acts as a "thought state manager": it records and organizes the chain of thoughts and returns its current state.
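The "thought state manager" role can be sketched in a few lines. This is a minimal illustration, not the service's actual code: the class name `ThoughtLog`, the rule that raises `totalThoughts` when the step number exceeds it, and the shape of the returned summary are all assumptions made for the example.

```python
from dataclasses import dataclass, field


@dataclass
class ThoughtLog:
    """Keeps every thought in order and groups branch thoughts by branchId."""
    history: list[dict] = field(default_factory=list)
    branches: dict[str, list[dict]] = field(default_factory=dict)

    def record(self, call: dict) -> dict:
        call = dict(call)  # copy so the caller's dict is not modified
        # If the model runs past its own estimate, raise the estimate to match.
        if call["thoughtNumber"] > call["totalThoughts"]:
            call["totalThoughts"] = call["thoughtNumber"]
        self.history.append(call)
        # Thoughts that name a branch are also filed under that branch.
        if "branchFromThought" in call and "branchId" in call:
            self.branches.setdefault(call["branchId"], []).append(call)
        # Return a compact summary of the current state (assumed shape).
        return {
            "thoughtNumber": call["thoughtNumber"],
            "totalThoughts": call["totalThoughts"],
            "nextThoughtNeeded": call["nextThoughtNeeded"],
            "branches": sorted(self.branches),
            "thoughtHistoryLength": len(self.history),
        }
```

Note that the manager does no reasoning of its own: it stores state and reports it back, and the model decides what to think next.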

Three real-world scenarios for making AI work efficiently

Here are three well-designed, real-world scenarios that allow you to experience how to transform AI from an answer generator to a thinking partner.

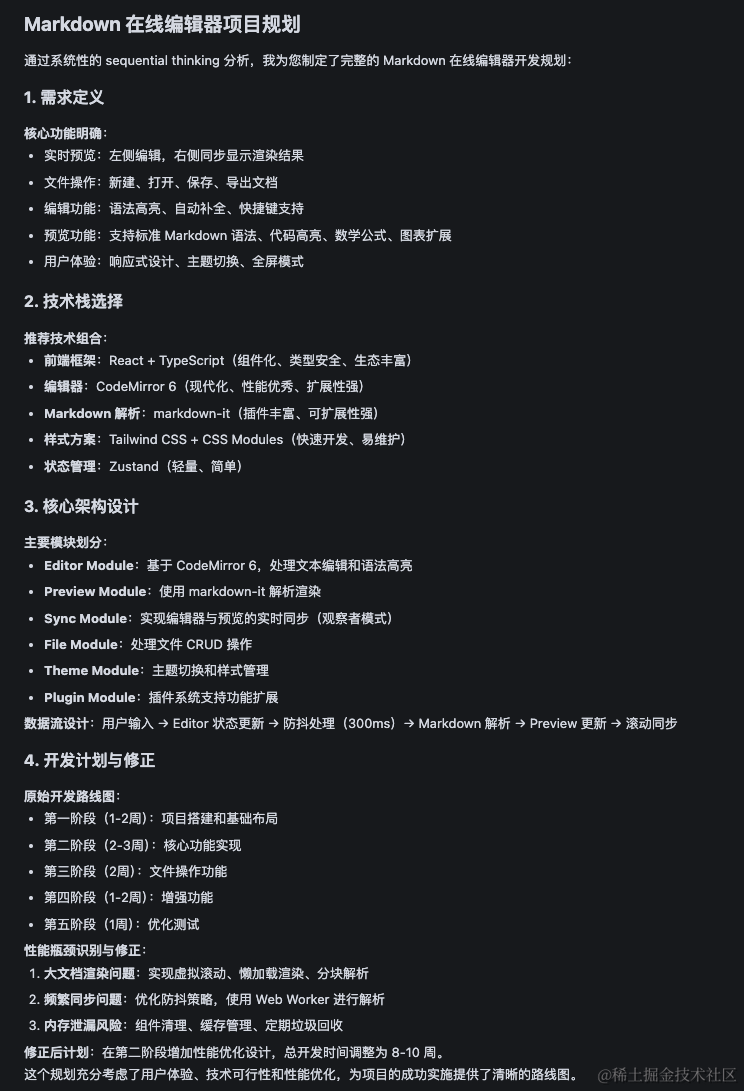

Scenario 1: Let AI become a software architect to plan complex projects

Pain point: When launching a new software project, factors such as requirements, technology selection, module partitioning, and development risk are intertwined. Without systematic planning, it is very easy to lose direction during development or make the wrong technical decisions.

Solution: Use Sequential Thinking to guide the AI through structured planning.

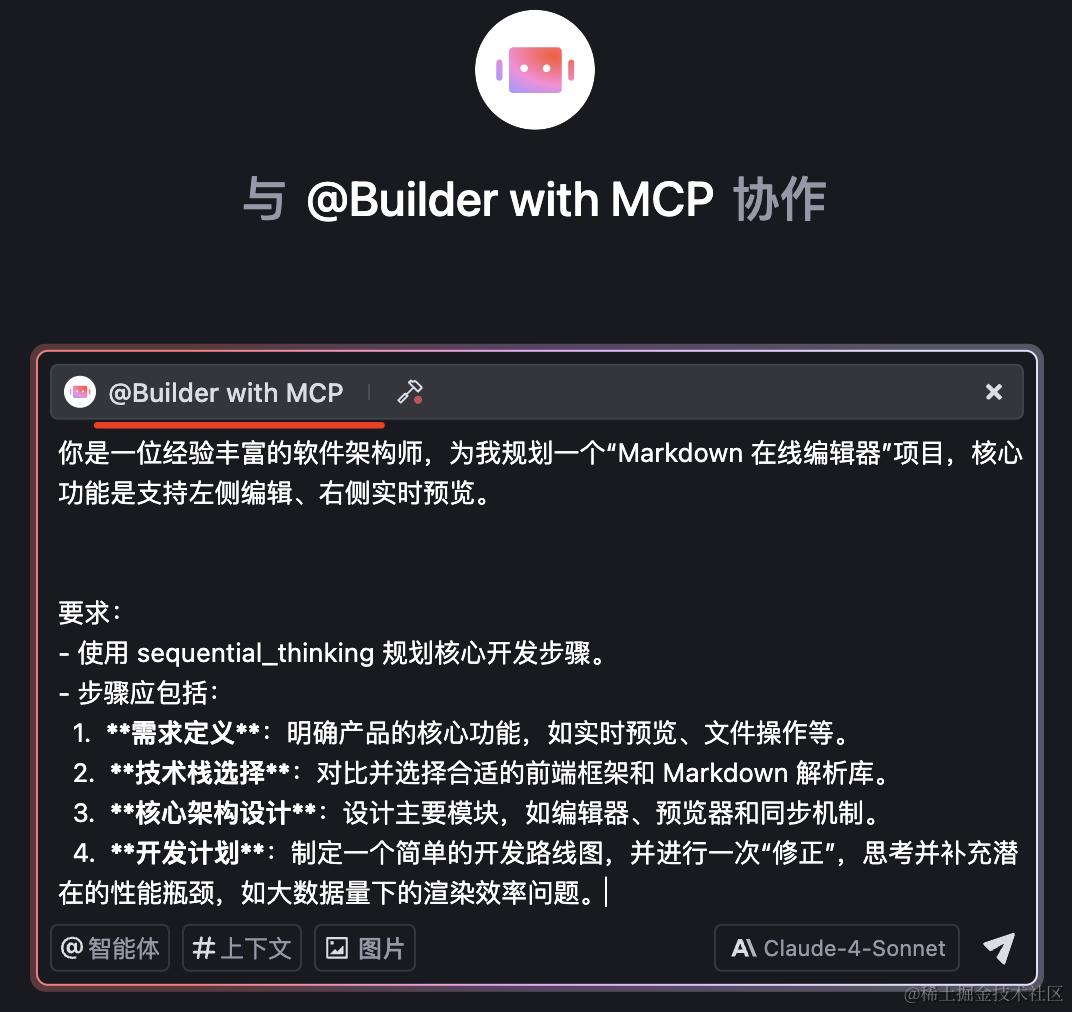

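Example prompt:

You are an experienced software architect. Please plan a "Markdown online editor" project for me. Its core feature is editing on the left with a live preview on the right.

Requirements:

- Use sequential_thinking to plan the core development steps.

- The steps should include:

1. **Requirements definition**: Specify the product's core features, such as live preview and file operations.

2. **Technology stack selection**: Compare and choose a suitable front-end framework and Markdown parsing library.

3. **Core architecture design**: Design the main modules, such as the editor, the previewer, and the synchronization mechanism.

4. **Development plan**: Draw up a simple development roadmap, then perform one "revision" pass to consider and add potential performance bottlenecks, such as rendering efficiency with large documents.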

On an MCP-enabled AI platform, select an agent mode that can invoke tools, then run the prompt.

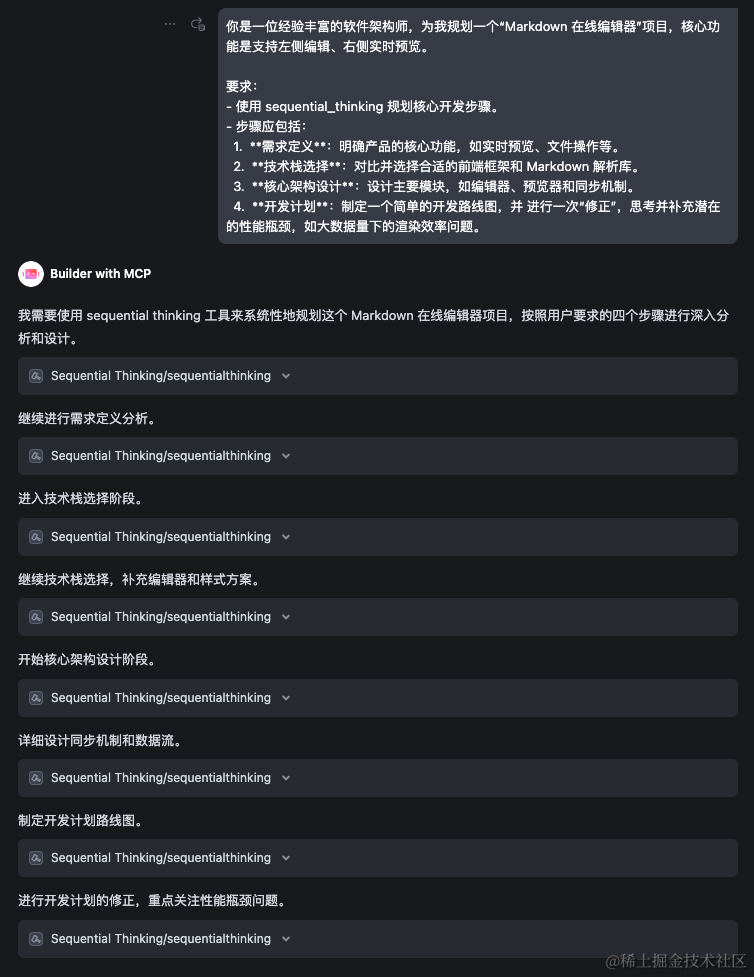

Execution demo:

The AI uses the Sequential Thinking tool to think and plan step by step.

As you can see from the thought process above:

- Systematic analysis: The AI first conducts a comprehensive requirements analysis, starting from the core live-preview feature and expanding to file operations (new, open, save, export), which forms a complete framework for the rest of the plan.

- In-depth technology comparison: During technology selection, the AI systematically compares the ecosystem differences between React and Vue, the performance characteristics of Monaco Editor and CodeMirror, and parsing libraries such as marked and markdown-it, then gives requirements-based recommendations.

- Layered architecture design: The AI breaks the system architecture down into clear modules, including state management for the editor component, rendering optimization for the previewer, and a real-time synchronization mechanism between the two.

- Proactive risk identification and revision: The most critical step is that the AI takes the initiative to "revise" its thinking after drafting the development plan. It identifies potential risks such as lag when rendering large files and real-time synchronization delays, and proposes specific solutions such as virtual scrolling, debouncing, and chunked parsing. This self-correcting ability is the core value of Sequential Thinking.

- An actionable roadmap: The final output is a development plan with specific timelines, technical details, and testing strategies, with a clear iterative path from an MVP to the full feature set.

Effectiveness Analysis:

Sequential Thinking's structured decomposition keeps the plan logical, while its dynamic correction lets the AI review and improve its decisions at a later stage. An AI that does not use the tool may also produce a result, but the process is a black box with no traceable line of thought, which makes the output much less valuable for tasks that require rigorous planning.

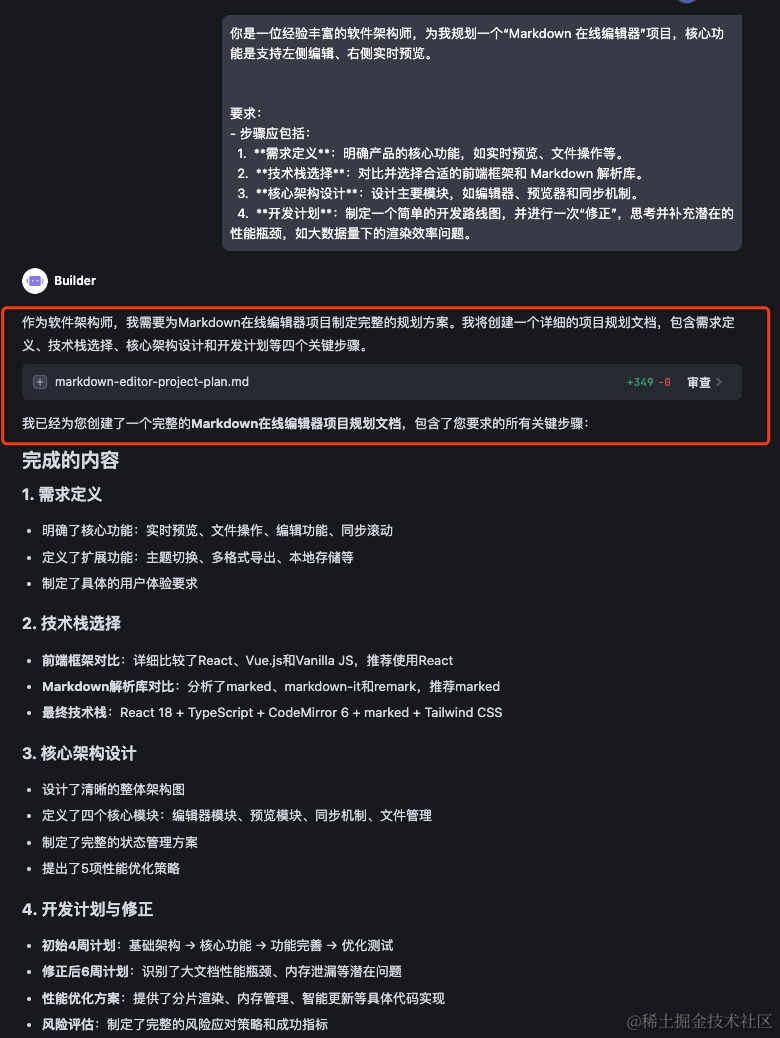

Scenario 2: Building a lightweight automated research workflow

Pain point: When selecting or researching technologies, how do you compare similar options comprehensively and objectively, so that incomplete information does not lead to the wrong decision?

Solution: Combine sequential_thinking (responsible for planning) with Tavily (responsible for searching) to build a low-cost but efficient automated research workflow.

Tavily is a search engine designed for large language models that delivers high-quality, real-time, ad-free structured search results, making it an ideal tool for AI to conduct deep research.

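Example prompt:

I need a detailed technical comparison report on MCP (Model Context Protocol) and traditional Function Calling.

Please use the sequential_thinking tool to analyze this question systematically, and use the Tavily tool to search for the latest information, so that the report is comprehensive, current, and practical. Present the final result in Markdown. Please cover the following points:

- Core design philosophy and architectural differences.

- Functional strengths and applicable scenarios.

- Developer experience, learning curve, and ecosystem support.

- If you find that an important aspect has been missed during the analysis, add it and revise accordingly.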

Results and analysis:

After receiving the task, the AI starts a "think-search-integrate" cycle:

- Start the chain of thought: The AI uses sequential_thinking to break the task "Compare MCP and Function Calling" into subtasks such as "Analyze the design concepts of the two", "Search for the latest information on MCP", "Search for Function Calling implementations", and "Integrate the comparison table".

- Call Tavily for deep search: At each node where information needs to be collected, the AI calls the Tavily tool to run precise search queries.

- Integrate and export: Finally, the AI consolidates all the information and produces a clearly structured report.
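The "think-search-integrate" cycle can be sketched as a plain loop. Everything here is an illustrative assumption: in a real run the model produces the subtasks through sequential_thinking and calls an actual search tool, whereas this sketch takes the subtasks as input and uses a stand-in `fake_search` function that returns canned text.

```python
from typing import Callable


def research(question: str, subtasks: list[str],
             search: Callable[[str], list[str]]) -> str:
    """Walk the planned subtasks, gather findings for each, and assemble a Markdown report."""
    sections = []
    total = len(subtasks)
    for number, subtask in enumerate(subtasks, start=1):
        # "Think": stands in for one sequential_thinking step.
        thought = f"Step {number}/{total}: {subtask}"
        # "Search": query the injected search function for this step only.
        findings = search(subtask)
        bullets = "\n".join(f"- {item}" for item in findings) or "- (no results)"
        sections.append(f"## {subtask}\n\n_{thought}_\n\n{bullets}")
    # "Integrate": join the sections under a single title.
    return f"# {question}\n\n" + "\n\n".join(sections)


def fake_search(query: str) -> list[str]:
    """Stand-in for a real search tool such as Tavily; returns placeholder text."""
    return [f"placeholder result for '{query}'"]


if __name__ == "__main__":
    print(research("MCP vs Function Calling",
                   ["Design concepts", "Comparison table"], fake_search))
```

Passing the search function in as a parameter keeps the loop independent of any particular search provider.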

[Additional knowledge] MCP vs. Function Calling: core differences

- Design philosophy: Function Calling is model-driven. The model "decides" which predefined function to call based on the context, much like an enhanced RPC call. MCP, by contrast, is protocol-driven: it defines a common format for exchanging context so that models and external tools can collaborate in richer contexts, with the focus on "conversation" rather than "invocation".

- Interaction mode: Function Calling is usually one-way and one-shot: the model makes the call request and the tool returns the result. MCP supports more complex two-way, multi-round interactions, in which tools can proactively provide context to the model and initiate exchanges, enabling closer collaboration.

- Flexibility: With Function Calling, the signature of each function must be strictly defined in code in advance. MCP is more flexible: a tool can describe its own capabilities dynamically, and the model decides how to use the tool based on those descriptions, which reduces the rigidity of integration.
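The idea of tools describing themselves can be illustrated with a short sketch. The listing below is modeled on the shape of an MCP `tools/list` result, where each tool carries a name, a description, and a JSON Schema for its input; the schema is abridged, and the `missing_arguments` helper is a hypothetical client-side check, not part of any SDK.

```python
# A tool listing as a client might receive it at runtime (abridged, illustrative).
ADVERTISED_TOOLS = [
    {
        "name": "sequential_thinking",
        "description": "Record one step of a structured reasoning chain.",
        "inputSchema": {
            "type": "object",
            "properties": {
                "thought": {"type": "string"},
                "nextThoughtNeeded": {"type": "boolean"},
                "thoughtNumber": {"type": "integer"},
                "totalThoughts": {"type": "integer"},
            },
            "required": ["thought", "nextThoughtNeeded",
                         "thoughtNumber", "totalThoughts"],
        },
    }
]


def missing_arguments(tools: list[dict], tool_name: str, arguments: dict) -> list[str]:
    """Check a call against the schema the tool advertised, not a hard-coded signature."""
    schema = next(t["inputSchema"] for t in tools if t["name"] == tool_name)
    return [key for key in schema.get("required", []) if key not in arguments]
```

Because the client reads the schema from the listing, a server can add or change tools without the client code being edited.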

This lightweight workflow can be seen as an effective "research assistant".

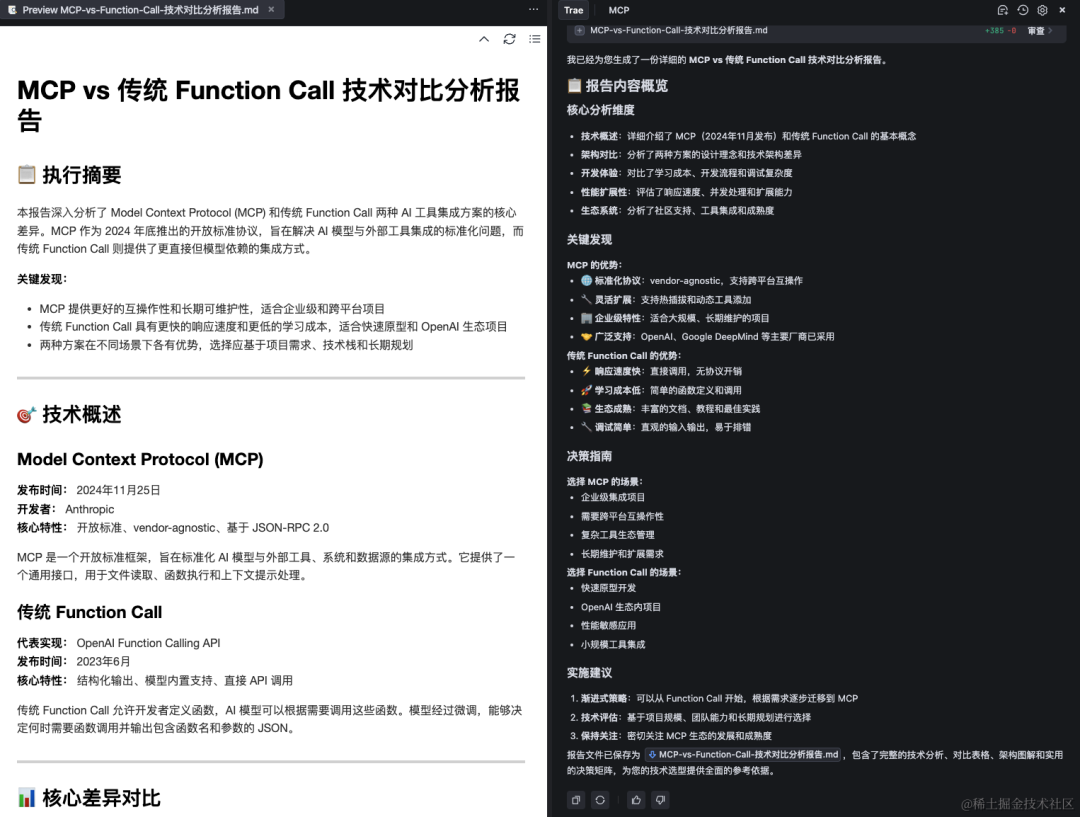

Scenario 3: Creating an all-around "research agent"

Pain point: The simple "think + search" combination is still insufficient in real web environments. Retrieving information often requires reading long texts, interacting with dynamic pages (e.g., clicking "load more"), or even dealing with anti-crawler mechanisms. Manually combining Tavily, Puppeteer, and other tools and writing a complex prompt each time is inefficient and hard to reuse.

Solution: Use the platform's Agent feature to package the complete workflow of thought chain plus complex information acquisition into a reusable, customizable agent.

[Additional knowledge] Introduction to the tools

- Puppeteer: A Node.js library that provides a high-level API for controlling Chrome or Chromium over the DevTools protocol. Simply put, it drives a real browser to visit web pages and execute JavaScript, which makes it a powerful tool for handling dynamic pages and complex interactions, taking screenshots, generating PDFs, and more.

Implementation steps:

1. Configure the full toolset: Make sure sequential-thinking, tavily, and puppeteer are installed and configured in the AI environment, so that the agent has the full set of "thinking", "searching", and "browser interaction" capabilities.

2. Create and configure the agent: Create a new agent in the platform's agent marketplace.

- Name: Research Agent

- Prompt: Define its role, capabilities, and tool-use strategy.

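# Role

You are a deep research agent. Your core mission is to systematically break down complex problems, use your toolbox to dig into and integrate information from the web, and deliver a well-organized, comprehensive report.

# Core capabilities

Use `sequential_thinking` to draw up and adjust the research plan, and choose the most suitable tool for gathering information based on the task context.

# Toolbox and usage strategy

1. **`Tavily` (search)**: The default starting point. Use it for quick initial retrieval and to discover links to key sources.

2. **`Puppeteer` (web interaction)**: Use it when a link returned by `Tavily` points to a static or dynamic page that needs in-depth reading. It is especially suited to sites that require executing JavaScript (such as infinite scroll or click-to-load) or simple interaction (such as closing a pop-up).

# Workflow

1. **Plan**: After receiving a request, use `sequential_thinking` to plan the research framework.

2. **Execute and decide**: Start with `Tavily` by default. Based on the search results, decide dynamically whether to keep searching or to use `Puppeteer` to analyze a specific link in depth.

3. **Adapt and revise**: If you hit an obstacle during research (such as a failed page visit), reflect on the cause and adjust your strategy, recording the adjustment with `sequential_thinking`.

4. **Integrate and report**: Once you have gathered enough information, integrate all findings into a clearly structured Markdown report.

5. **Deliver the file**: Write the final report to a file to complete the task.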
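The tool-use strategy in this prompt amounts to a small decision procedure. In practice the model makes these choices itself from the prompt; the sketch below only spells out the intended priorities, and the `Observation` fields and action names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Observation:
    """What the agent knows after its latest step (hypothetical fields)."""
    has_candidate_url: bool = False    # search returned a link worth opening
    needs_javascript: bool = False     # page needs scrolling, clicks, or pop-up handling
    needs_deep_read: bool = False      # page needs to be read in full
    last_call_failed: bool = False     # e.g. the page visit failed
    enough_information: bool = False   # findings are sufficient for the report


def next_action(obs: Observation) -> str:
    """Pick the next step following the priorities in the agent prompt."""
    if obs.enough_information:
        return "write_report"
    if obs.last_call_failed:
        # Reflect on the obstacle and record the adjusted plan.
        return "sequential_thinking"
    if obs.has_candidate_url and (obs.needs_javascript or obs.needs_deep_read):
        return "puppeteer"
    # Default starting point, and the fallback when no link needs a closer look.
    return "tavily"
```

For example, a fresh task with no observations yet yields `"tavily"`, matching the prompt's rule that search is the default starting point.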

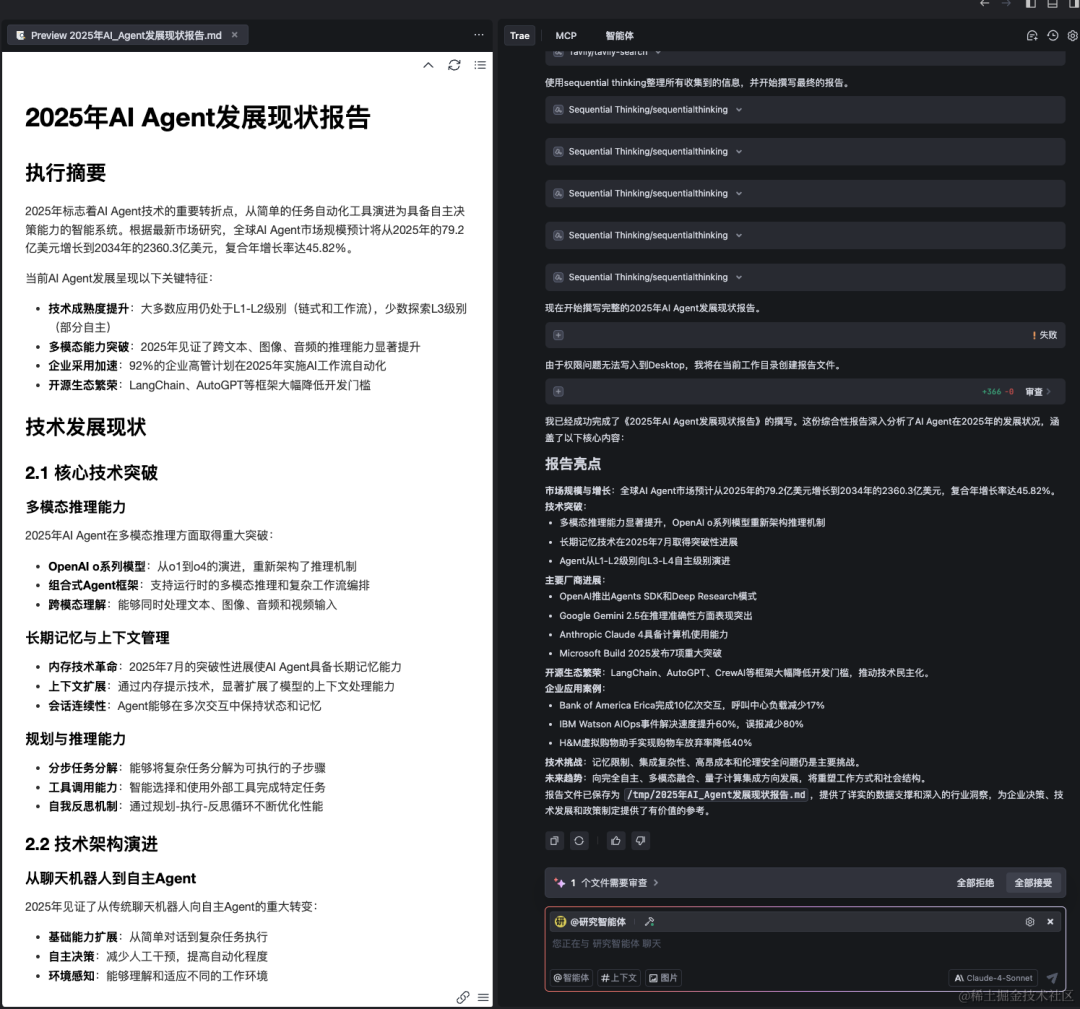

3. Run it: Use this agent to complete a research task: "Write a report on the 'State of the Art of AI Agent Development in 2025'".

- Task start and execution: The agent receives the instruction, uses sequential_thinking to decompose the task, calls Tavily for multiple rounds of searching, and may switch to Puppeteer to visit specific pages. The whole process is clearly visible.

- Delivery of results: After a rigorous "think-execute-reflect" cycle, the agent delivers an informative, clearly structured report and writes it to a file as required.

As this case shows, an agent configured with Sequential Thinking and a multifunctional toolset is no longer a simple question-and-answer bot but a capable assistant that can plan and execute complex research tasks autonomously. Note, however, that such deep thought chains increase the AI's token consumption and overall response time: the strategy trades computational cost for higher-quality results.