Wan is an AI-powered visual content generation website built around the open source Wan 2.2 model. Users can use it to quickly convert text, images, or audio into high-quality video. The website supports multiple generation methods, including "Text to Video", "Image to Video", and the distinctive "Speech to Video" feature, which uses an audio file to drive the character in a picture and generates digital human videos with natural expressions and movements. The Wan 2.2 model uses an MoE (Mixture-of-Experts) architecture that improves the quality and efficiency of video generation, and it can even run on consumer graphics cards. The site aims to provide a powerful, easy-to-use video creation platform for content creators, developers, and academic researchers, whether they are creating short films with a cinematic feel or generating realistic digital human footage.
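For readers unfamiliar with the term, the general idea behind a Mixture-of-Experts design can be sketched in a few lines: a small gate scores the input and only the selected expert runs, so not every parameter is used on every step. This is a generic toy illustration with made-up experts and gate weights. It is not Wan 2.2's actual architecture, and how Wan 2.2 chooses between its experts is not described in this article.

```python
import math

def softmax(scores):
    """Convert raw gate scores into probabilities."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Two hypothetical "experts"; real experts would be neural sub-networks.
def expert_halve(x):
    return [v * 0.5 for v in x]

def expert_double(x):
    return [v * 2.0 for v in x]

EXPERTS = [expert_halve, expert_double]

def route_top1(x, gate_weights):
    """Score each expert with a linear gate, then run only the best one."""
    scores = [sum(w * v for w, v in zip(row, x)) for row in gate_weights]
    probs = softmax(scores)
    chosen = max(range(len(probs)), key=probs.__getitem__)
    return chosen, EXPERTS[chosen](x)

# Illustrative gate: expert 0 responds to the first feature, expert 1 to the second.
gate = [[1.0, 0.0], [0.0, 1.0]]
print(route_top1([3.0, 1.0], gate))  # (0, [1.5, 0.5])
print(route_top1([1.0, 3.0], gate))  # (1, [2.0, 6.0])
```

Because only one expert runs per input, compute per step stays close to that of a single expert even though the total parameter count is larger.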

Function List

- Text to Video. Enter a descriptive text and the AI converts it into a moving video with precise control over the cinematic style of the video.

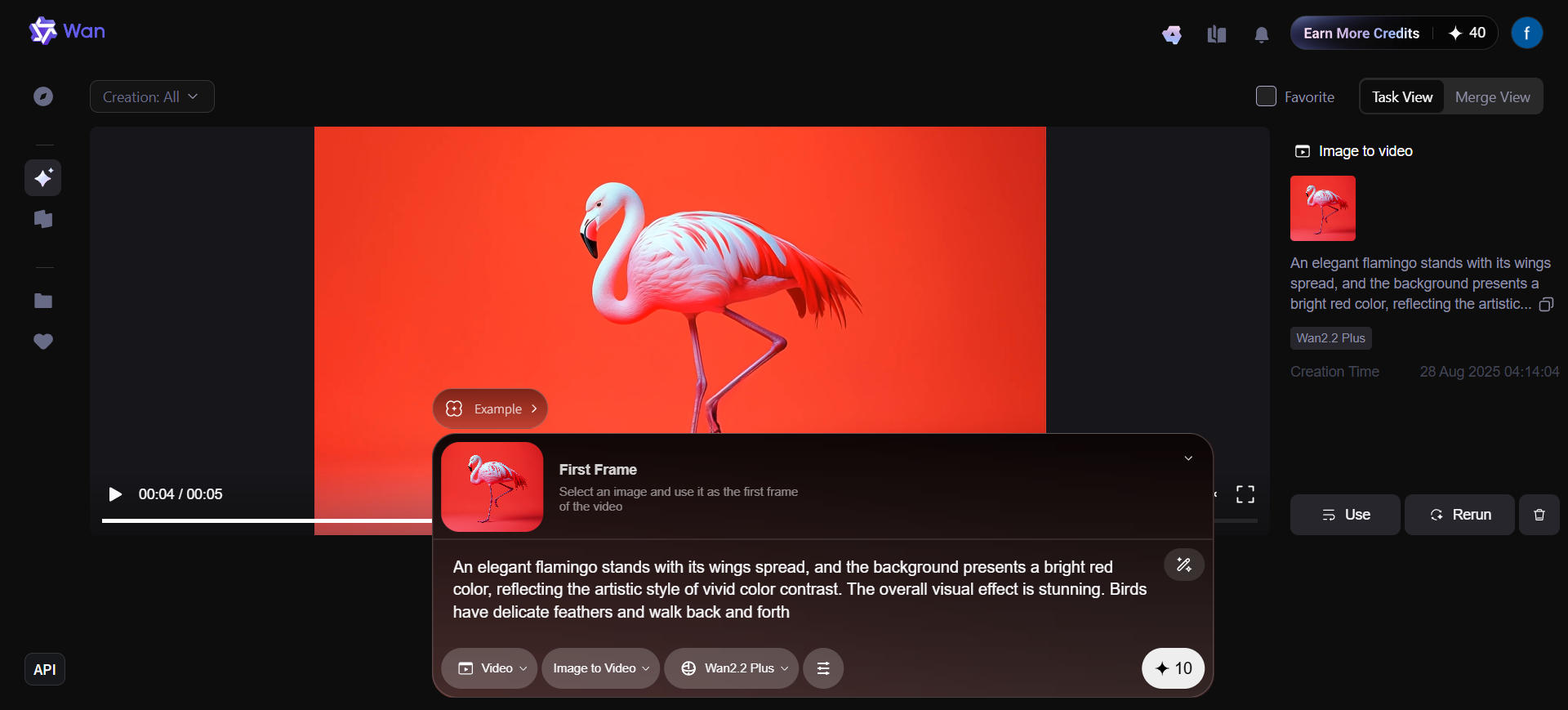

- Image to Video. Upload a static image and AI can make it move to generate a video. The model maintains the subject and style consistency of the original image very well.

- Speech to Video. This is a special feature of the website. Upload a picture (such as a cartoon image, animal or character photo) and an audio clip, and AI can drive the character in the picture to generate a video with rich facial expressions and body movements based on the audio content.

- Text to Image. Enter text prompts to generate high-quality images with photo-realism or multiple artistic styles.

- Open Source Models. The core model Wan 2.2 is open source and developers can use it for their own projects or for secondary development.

- High Definition. Supports generating video at 720p resolution and 24 fps, and is optimized to run efficiently on consumer graphics cards such as the NVIDIA 4090.
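To make the 720p and 24 fps figures concrete, the short sketch below works out how many frames and pixels a clip involves. The 1280×720 frame size is the standard definition of 720p; the 5-second duration and the function name are illustrative assumptions, not values taken from the Wan site.

```python
def clip_stats(duration_s: float, fps: int = 24,
               width: int = 1280, height: int = 720) -> dict:
    """Return the frame count and pixel totals for a clip of the given length."""
    frames = round(duration_s * fps)
    pixels_per_frame = width * height
    return {
        "frames": frames,
        "pixels_per_frame": pixels_per_frame,
        "total_pixels": frames * pixels_per_frame,
    }

stats = clip_stats(5)  # hypothetical 5-second clip
print(stats["frames"])            # 120
print(stats["pixels_per_frame"])  # 921600
```

Even a short clip means the model has to produce over a hundred full frames, which is part of why generation can take minutes rather than seconds.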

Using Help

The Wan website offers a range of powerful AI tools that make video and image creation quick and easy. Even if you don't have any professional background, you can easily get started with the following steps.

1. Accessing and understanding the main interface

First, visit Wan's official website through your browser. The homepage of the website clearly shows its core functional modules:

- Speech to Video

- Image to Video

- Text to Video

- Text to Image

Each function has a "Try Now" button, which you can click to enter the corresponding function interface. At the bottom of the homepage, you can see a detailed description of the core technology, the Wan 2.2 model, including its MoE architecture, data scale, and technical advantages, which will help you understand what the tool can do.

2. Using the core functions

How to use the "Text to Video" function

This is one of the most commonly used features for quickly visualizing an idea or story scene.

- Step 1: Enter the function screen. Find the "Text to Video" module on the home page of the website and click the "Try Now" button.

- Step 2: Enter the description text. You will see a text input box where you enter a detailed description of the video footage you want to generate. The more specific the description, the closer the result will be to what you expect. For example, you can enter: "An astronaut riding a horse on Mars with a gorgeous starry sky in the background, movie-like footage".

- Step 3: Adjust the parameters (if available). The interface may provide some advanced options, such as video duration, resolution (480p and 720p are supported), and visual style (such as "movie style" or "anime style"). Choose according to your needs.

- Step 4: Generate and Download. Click the "Generate" button and the AI will start processing your request, which may take a few minutes. Once the processing is complete, the video will appear on the interface and you can preview the result, and if you are satisfied, you can download and save it to your computer.
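The advice in Step 2 about being specific can be turned into a small habit: write the subject, the setting, and the style separately, then join them. The helper below is a hypothetical sketch for drafting prompts offline; `build_prompt` and its parameters are illustrative names and are not part of the Wan website or any Wan API.

```python
def build_prompt(subject: str, setting: str = "", style: str = "") -> str:
    """Join the non-empty parts of a description into one comma-separated prompt."""
    parts = [p.strip() for p in (subject, setting, style) if p and p.strip()]
    return ", ".join(parts)

prompt = build_prompt(
    subject="An astronaut riding a horse on Mars",
    setting="with a gorgeous starry sky in the background",
    style="movie-like footage",
)
print(prompt)
# An astronaut riding a horse on Mars, with a gorgeous starry sky in the background, movie-like footage
```

Keeping the parts separate makes it easy to change only the style or only the setting between attempts and compare the results.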

How to use the "Image to Video" function

This feature can bring a static picture to life.

- Step 1: Enter the function screen. Find the "Image to Video" module on the homepage and click "Try Now".

- Step 2: Upload your image. Click on the Upload button and select an image that you want to make into a video. For best results, it is recommended that you choose an image with a clear, high-quality subject.

- Step 3: Set up dynamic effects. You can enter a simple text prompt to guide how the AI animates the picture, such as "the breeze blows, the leaves sway gently, the character's hair flutters with the wind".

- Step 4: Generate the video. Click the Generate button and wait for AI to process it. When it's done, you'll be able to see what was a still image turn into a moving video.

How to use the "Speech to Video" function (featured function)

This is one of the highlights of the Wan website and can be used to create digital human podcasts, storytelling videos, and more.

- Step 1: Enter the function screen. Find the "Speech to Video" module at the top of the homepage.

- Step 2: Upload a picture of your character. Upload a picture as the main character of the video. This image can be a photo of a real person, a cartoon image, or even a picture of an animal.

- Step 3: Upload the audio file. Upload a piece of audio that will be used as a source to drive the character to speak and make expressions. It can be a recording of you or a voiceover file.

- Step 4: Start generating. Click the Generate button. The AI analyzes the voice and emotion in the audio and translates them into realistic facial expressions, mouth shapes, and head movements for the character.

- Step 5: Preview and Export. When it's done, you can see the character in the picture "talking" according to your audio, and the effect is very vivid. You can export it as a video file and use it in your projects.
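Before uploading audio in Step 3, it can help to know how long the recording is. The sketch below reads a WAV file's duration with Python's standard `wave` module and estimates a frame count. It assumes the generated video is as long as the audio and runs at the 24 fps quoted for Wan 2.2; neither is confirmed for the Speech to Video tool, and the helper names are hypothetical.

```python
import io
import wave

def wav_duration_seconds(source) -> float:
    """Return the duration of a WAV file given a path or a binary file object."""
    with wave.open(source, "rb") as wav:
        return wav.getnframes() / wav.getframerate()

def expected_video_frames(duration_s: float, fps: int = 24) -> int:
    """Estimate the frame count, assuming the video matches the audio length."""
    return round(duration_s * fps)

def make_silent_wav(seconds: float, sample_rate: int = 16000) -> io.BytesIO:
    """Build a silent mono 16-bit WAV in memory, used here only as demo input."""
    buffer = io.BytesIO()
    with wave.open(buffer, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)  # 2 bytes per sample = 16-bit audio
        wav.setframerate(sample_rate)
        wav.writeframes(b"\x00\x00" * int(seconds * sample_rate))
    buffer.seek(0)
    return buffer

duration = wav_duration_seconds(make_silent_wav(2))
print(duration)                         # 2.0
print(expected_video_frames(duration))  # 48
```

For a real recording, pass the file path instead, for example `wav_duration_seconds("voiceover.wav")`, where the filename is a placeholder.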

3. Using the open source model

For developers and tech enthusiasts, the Wan website also offers an open source version of its core model, Wan 2.2.

- Visit GitHub: There is usually a "GitHub" link underneath the corresponding functional module on the website. Clicking the link will take you to the open source project page.

- Download and deploy: On the GitHub page, you can find the model's source code, pretrained weights, and detailed deployment documentation. Following the documentation, you can deploy the model on your own server or on a local computer (which needs to be equipped with a consumer-grade graphics card such as the NVIDIA 4090).

- Secondary development: Open source models provide you with great flexibility to fine-tune the model to your needs or integrate it into your own applications for more customized functionality.
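Before attempting a local deployment, you may want to check what GPU the machine actually has. The sketch below asks `nvidia-smi` for each GPU's name and total memory and compares it against a threshold. The 24,000 MiB threshold is an assumption chosen to match a 24 GB card such as the 4090 mentioned above; it is not an official requirement, so check the project's deployment documentation for the real figures. The sample output line is illustrative.

```python
import subprocess

# Assumption: roughly a 24 GB card, mirroring the 4090 example in the text.
ASSUMED_MIN_VRAM_MIB = 24_000

def parse_gpu_list(smi_output: str) -> list:
    """Parse 'name, memory_mib' CSV lines into (name, memory_mib) tuples."""
    gpus = []
    for line in smi_output.strip().splitlines():
        name, memory = line.rsplit(",", 1)
        gpus.append((name.strip(), int(memory.strip())))
    return gpus

def query_gpus() -> list:
    """Run nvidia-smi (requires an NVIDIA driver) and return the parsed GPU list."""
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,memory.total",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    )
    return parse_gpu_list(result.stdout)

def meets_assumed_requirement(gpus, min_mib: int = ASSUMED_MIN_VRAM_MIB) -> bool:
    """Return True if any GPU has at least min_mib of total memory."""
    return any(memory >= min_mib for _, memory in gpus)

# Illustrative sample so the parsing logic can be tried without a GPU.
sample_output = "NVIDIA GeForce RTX 4090, 24564\n"
sample_gpus = parse_gpu_list(sample_output)
print(meets_assumed_requirement(sample_gpus))  # True
```

On a machine with an NVIDIA driver installed, `meets_assumed_requirement(query_gpus())` performs the same check against the real hardware.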

Application Scenarios

- Content Creators and Social Media

For video bloggers and social media operators, the "Text to Video" function can quickly turn copy into engaging short videos. With the "Speech to Video" function, you can create a distinctive virtual digital human persona for broadcasting news, explaining concepts, or interacting with fans, greatly lowering the barrier and cost of appearing on camera.

- Advertising & Marketing

Marketing teams can quickly create demos of product promotional videos. With the "Image to Video" function, static product images or posters can be transformed into dynamic advertisements to make promotional materials more appealing. The "Text to Video" function can also quickly generate multiple versions of a video ad from marketing copy for A/B testing.

- Education and Training

Teachers or trainers can use the "Speech to Video" function to upload a cartoon image and the audio of a course explanation, generating engaging animated teaching videos that hold students' interest. Complex concepts can also be visualized through "Text to Video" to help students understand them better.

- Developers and Academic Research

Since the Wan 2.2 model is open source, developers can integrate it into their applications to add AI video generation capabilities to their products. Academic researchers can build on the model to explore the frontiers of video generation technology and advance research in related fields.

FAQ

- Is the Wan website free?

The website offers a "Try now" experience but does not publish a detailed pricing policy. Typically, such services offer a limited free trial and may charge for heavy or commercial use.

- What is the quality of the generated video?

According to the website, the Wan 2.2 model excels in video generation quality, supporting up to 720p resolution and a 24 fps frame rate. The site claims that its MoE architecture and large-scale training data put the generated videos among the industry leaders in motion, semantics, and aesthetics, and that the model outperforms some closed-source commercial models in certain respects.

- What kind of computer do I need to use it?

To use the tools on the site online, all you need is a regular computer with Internet access, because all computation is done on cloud servers. If you are a developer and want to deploy the open source model (e.g. TI2V-5B) locally, you will need a computer with a high-end consumer graphics card (e.g. NVIDIA 4090).

- Who owns the copyright to the generated video?

The website does not explicitly state who owns the copyright of generated content. Normally, the copyright of content generated from original material (text, images, audio) provided by the user belongs to the user, but you should read the website's user agreement and copyright policy carefully before use.