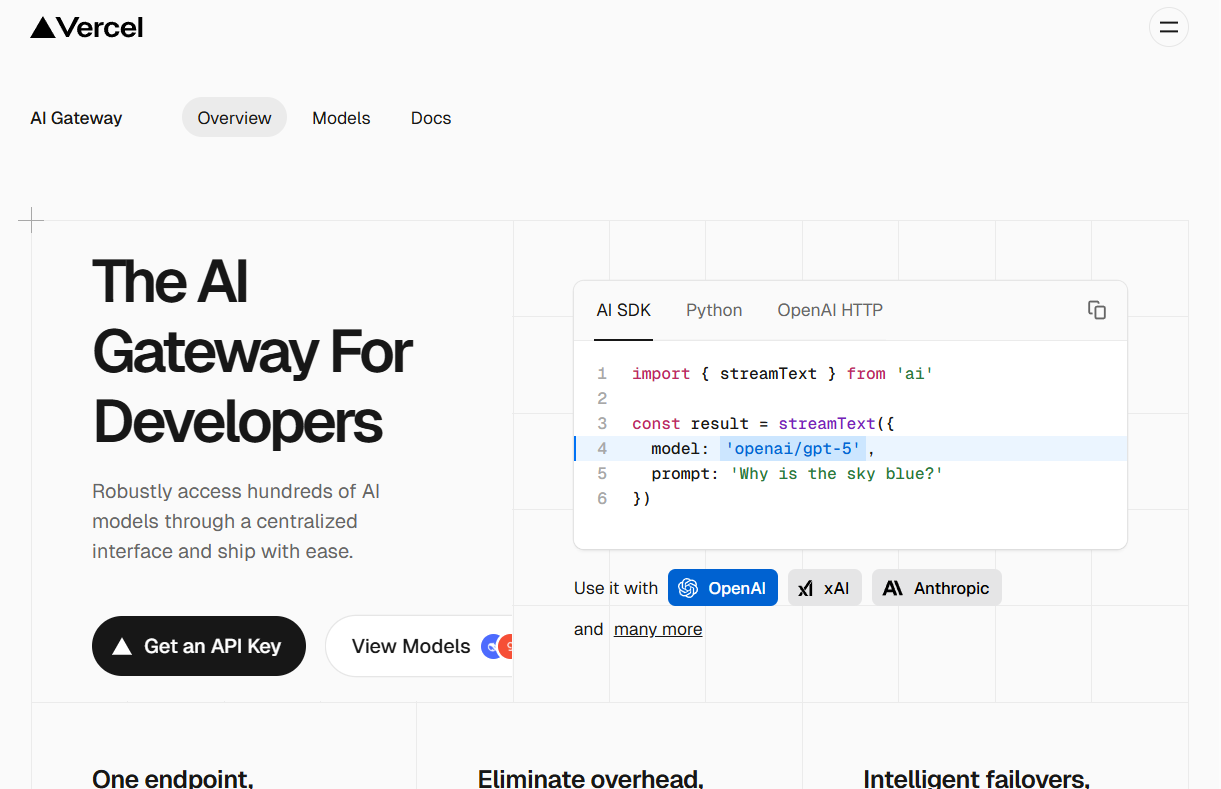

Vercel AI Gateway is a proxy service for developers. It exposes a unified API, so one codebase can call AI models from many different vendors. Its main purpose is to simplify building and operating AI applications: developers no longer need to manage a separate API key for each provider, reconcile multiple bills, or handle each provider's rate limits individually. It also includes automatic failover: when a model's service fails, the gateway can switch to a backup model, which improves application stability and reliability. In addition, AI Gateway provides logging and analytics so developers can monitor spend and performance, control costs, and tune their service.

Feature List

- Unified API: Provides a single API endpoint for hundreds of AI models from vendors such as OpenAI, Anthropic, and xAI. Switching models takes a one-line code change.

- Automatic failover: When an AI model provider's service is interrupted, the gateway can automatically route the request to an alternate model or provider, keeping the application available.

- Performance monitoring and logging: Centrally logs all AI requests that pass through the gateway, making it easy for developers to track, analyze, and debug performance issues.

- Cost control and budget management: Lets developers set budgets and monitor the cost of AI model calls in real time to avoid overruns.

- Simplified key management: Developers do not need to manage API keys from multiple vendors in their own applications. All keys are handled centrally by Vercel's backend.

- Seamless integration: Works with the Vercel AI SDK, the OpenAI SDK, and other mainstream frameworks, so developers can keep using familiar tools.

- No gateway rate limits: Vercel itself does not set rate limits on API requests, so developers can use the full throughput offered by upstream providers.

Usage Guide

Vercel AI Gateway is designed to simplify the process of integrating and managing Large Language Models (LLMs) in applications. It acts as a proxy layer that allows developers to invoke many of the major AI models on the market by simply interacting with Vercel's unified interface.

Step 1: Get the API key

- Visit the official Vercel AI Gateway website.

- Click "Sign Up" to create a Vercel account. New users get a free $5 credit per month to try any model on the platform.

- After logging in, create a new project in the AI Gateway control panel and generate an API key for it. This key authenticates all subsequent API requests, so keep it safe.

Step 2: Configure the development environment

AI Gateway can be integrated with multiple programming languages and frameworks. The following example uses Python with the official OpenAI SDK.

First, make sure the OpenAI Python library is installed in your development environment. If it is not, install it with `pip`:

    pip install openai

Once the installation is complete, you need to configure the API key you got from Vercel as an environment variable. Doing so is more secure than hardcoding the key in your code.

Set the environment variable in your terminal:

    export AI_GATEWAY_API_KEY='your-vercel-ai-gateway-api-key'

Replace `your-vercel-ai-gateway-api-key` with the real key you obtained in Step 1.
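Because every request depends on this variable, it can help to check for it at startup instead of failing later with an authentication error. The following is a minimal sketch of that check; the helper name `load_gateway_key` is hypothetical and not part of any SDK.

```python
import os


def load_gateway_key(env_var: str = "AI_GATEWAY_API_KEY") -> str:
    """Return the gateway key from the environment, or raise a clear error.

    The function name is illustrative only; it is not part of the OpenAI SDK
    or of Vercel's tooling.
    """
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(
            f"{env_var} is not set; export it before running the application."
        )
    return key
```

The returned value can then be passed as `api_key` when constructing the client.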

Step 3: Send an API request

Once configuration is complete, you can call an AI model from code. The core step is to initialize the OpenAI client with the `base_url` parameter set to the Vercel AI Gateway's unified endpoint.

Below is a complete Python example that calls xAI's `grok-4` model through AI Gateway:

    import os

    from openai import OpenAI

    # Initialize the OpenAI client.
    # The key is read from the AI_GATEWAY_API_KEY environment variable and passed in explicitly;
    # base_url must point to the Vercel gateway address.
    client = OpenAI(
        api_key=os.getenv('AI_GATEWAY_API_KEY'),
        base_url='https://ai-gateway.vercel.sh/v1'
    )

    # Send a chat completion request
    try:
        response = client.chat.completions.create(
            # The 'model' parameter selects the model to call,
            # in the format 'provider/model-name'
            model='xai/grok-4',
            messages=[
                {
                    'role': 'user',
                    'content': 'Why is the sky blue?'
                }
            ]
        )
        # Print the model's answer
        print(response.choices[0].message.content)
    except Exception as e:
        print(f"Request failed: {e}")

In this code, `base_url='https://ai-gateway.vercel.sh/v1'` is the key setting. It redirects requests that would otherwise go to OpenAI to the Vercel AI Gateway. The gateway receives each request and, based on `model='xai/grok-4'`, forwards it to the matching AI provider (xAI in this case) and returns the result to you.
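The model identifiers used in this article follow a `provider/model-name` pattern. The sketch below only illustrates that naming convention on the client side, for example to validate an identifier before sending a request. It is not the gateway's routing code, and `split_model_id` is a hypothetical helper.

```python
def split_model_id(model_id: str) -> tuple[str, str]:
    """Split a 'provider/model-name' identifier into its two parts.

    Illustrative helper only: it mirrors the naming convention used in this
    article and says nothing about how the gateway routes requests internally.
    """
    provider, separator, model = model_id.partition("/")
    if not separator or not provider or not model:
        raise ValueError(
            f"expected 'provider/model-name', got {model_id!r}"
        )
    return provider, model
```

For instance, `split_model_id('xai/grok-4')` yields the provider `'xai'` and the model name `'grok-4'`, while an identifier without a slash raises `ValueError`.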

Switching Models

One of the biggest conveniences of AI Gateway is how easily you can switch models. To switch to Anthropic's `claude-sonnet-4` model, change only the `model` parameter; the rest of the code stays the same:

    response = client.chat.completions.create(
        model='anthropic/claude-sonnet-4',
        messages=[
            {
                'role': 'user',
                'content': 'Please write me a short story about space travel.'
            }
        ]
    )

    print(response.choices[0].message.content)

This design gives developers the flexibility to test and switch between models without having to modify any underlying code related to authentication and network requests.
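Since only the `model` value changes between providers, comparing several models can be reduced to a loop. The following is a minimal sketch under that assumption; `ask_each_model` is a hypothetical helper, and it expects a client object shaped like the `client` created in Step 3.

```python
from typing import Any, Dict, Iterable


def ask_each_model(client: Any, models: Iterable[str], prompt: str) -> Dict[str, str]:
    """Send the same prompt to every model and collect the replies by model id.

    `client` is assumed to expose `chat.completions.create(...)` in the same
    way as the OpenAI client configured earlier in this article.
    """
    replies: Dict[str, str] = {}
    for model in models:
        response = client.chat.completions.create(
            model=model,
            messages=[{"role": "user", "content": prompt}],
        )
        replies[model] = response.choices[0].message.content
    return replies
```

A call such as `ask_each_model(client, ['xai/grok-4', 'anthropic/claude-sonnet-4'], 'Why is the sky blue?')` would return one answer per model, which makes side-by-side comparison straightforward.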

Application Scenarios

- Rapid prototyping

In the early stages of developing an AI application, developers need to try many different models to find the one that best fits their use case. With AI Gateway, they can quickly test and compare the performance and cost of different models by changing only the model identifier, without building a full integration for each model.

- Improving application reliability

For user-facing applications already running in production, service stability is critical. AI model providers occasionally experience service interruptions. With AI Gateway's failover configured, the system can automatically switch to a standby model when the primary model is unavailable, so the application's core functions keep working and the user experience is protected.

- Cost control for startups

Startups and small development teams have limited resources and must keep a tight rein on operating costs. AI Gateway's monitoring and budget management features track token consumption and spend in real time, helping teams understand where costs come from. Setting a budget cap helps prevent unexpectedly high bills caused by code errors or traffic spikes.

- Unified management of multi-model applications

Some complex AI applications use different models for different tasks, for example one model for text generation and another for content review. AI Gateway reduces this operational complexity by providing a single place where developers can monitor and manage API calls, logs, and spend for all models.
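The reliability scenario above relies on failover that the gateway performs on its side; this article does not show how that feature is configured. Purely to illustrate the idea, here is a client-side sketch that tries a list of models in order. `complete_with_fallback` is a hypothetical helper, not a gateway or SDK feature.

```python
from typing import Any, Sequence


def complete_with_fallback(client: Any, models: Sequence[str], messages: list) -> Any:
    """Try each model in order and return the first successful response.

    Client-side illustration only: the gateway's own failover happens on the
    server and may behave differently. `client` is assumed to expose
    `chat.completions.create(...)` like the OpenAI client.
    """
    if not models:
        raise ValueError("models must contain at least one model id")
    last_error = None
    for model in models:
        try:
            return client.chat.completions.create(model=model, messages=messages)
        except Exception as exc:  # deliberately broad for this sketch
            last_error = exc
    # Every candidate failed; surface the last error as the cause.
    raise RuntimeError(f"all models failed: {list(models)}") from last_error
```

In real code it is usually better to catch only the error types that indicate an outage, so that programming mistakes are not silently retried against another model.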

FAQ

- Do I have to pay to use Vercel AI Gateway?

Vercel offers new registrations a free $5 credit per month, which can be used to try any of the AI models on the platform. Once the free credit is used up or the user makes a first payment, subsequent usage is billed on a pay-as-you-go basis. Vercel itself does not mark up token prices; the fee matches what the upstream provider charges directly.

- What AI models does AI Gateway support?

AI Gateway supports hundreds of models from OpenAI, Anthropic, Google, xAI, Groq, and many other major vendors. The full list is available in Vercel's official documentation and in the Model Marketplace.

- Does Vercel AI Gateway limit the speed of API requests?

Vercel itself does not set rate limits on API requests through the gateway. The request rate and throughput available to developers depend largely on the upstream AI model provider's own limits.

- If I'm already using OpenAI's SDK, will it be complicated to migrate to AI Gateway?

The migration is very simple. When initializing the OpenAI client, change the `base_url` parameter to the gateway address provided by Vercel and replace the `api_key` with the key obtained from Vercel. The core business code needs little or no change.
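On the rate-limit question above: because upstream provider limits still apply, applications may want to retry throttled requests. The following is a generic sketch of retrying with exponential backoff; `call_with_backoff` and its parameters are hypothetical, and the exception types to retry on are left to the caller.

```python
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def call_with_backoff(
    send: Callable[[], T],
    retry_on: Tuple[Type[BaseException], ...],
    max_attempts: int = 4,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `send()` and retry with exponential backoff on the given errors.

    The delay doubles after each failed attempt (base_delay, 2x, 4x, ...).
    The last failure is re-raised once `max_attempts` is reached.
    """
    for attempt in range(max_attempts):
        try:
            return send()
        except retry_on:
            if attempt == max_attempts - 1:
                raise
            sleep(base_delay * 2 ** attempt)
    raise ValueError("max_attempts must be at least 1")
```

With the OpenAI SDK, one plausible choice is `retry_on=(openai.RateLimitError,)` and a `send` function that wraps `client.chat.completions.create(...)`. Whether the gateway passes upstream throttling through as that error is an assumption worth checking against Vercel's documentation.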