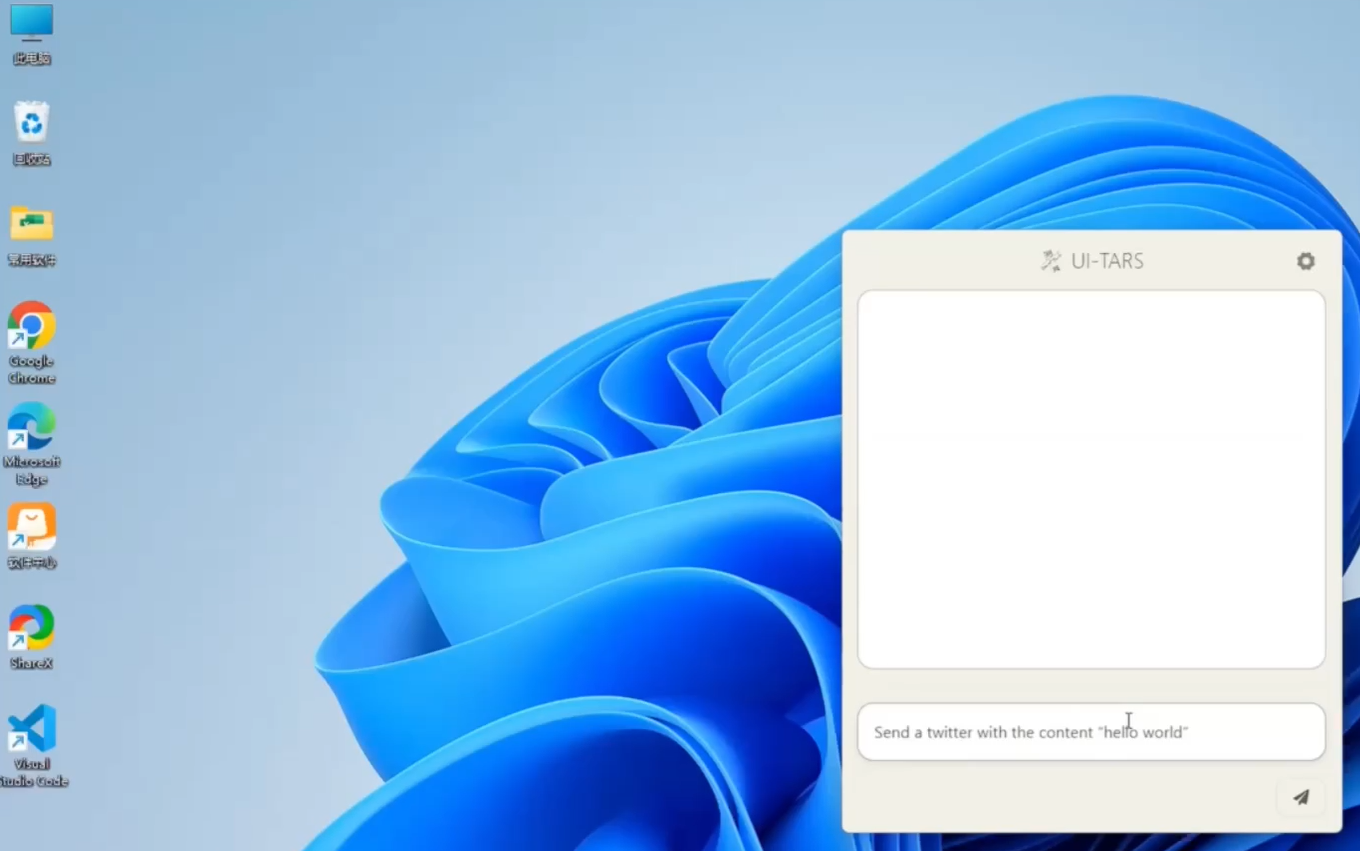

UI-TARS-desktop is a desktop application open-sourced by ByteDance. It is essentially a multimodal AI agent that lets users operate a local or remote computer by typing simple natural language commands. It is powered by UI-TARS and the Seed-1.5-VL/1.6 family of vision-language models, which enable it to understand what is on the screen and act accordingly. Its core capability is understanding the graphical user interface (GUI) from screenshots and then simulating precise mouse and keyboard actions to accomplish tasks. It supports both Windows and macOS. The tool can operate regular desktop applications, control browsers, and automate complex workflows that span several applications. Because it processes information entirely locally, it also helps protect the privacy and security of user data.

Function List

- Natural language control: Operate the computer with everyday language, without writing code.

- Visual recognition and understanding: The app can "see" and understand on-screen interface elements, which enables precise operations.

- Precise mouse and keyboard control: Simulates human actions such as clicking, dragging, scrolling, and keyboard input.

- Cross-platform support: Runs on Windows and macOS.

- Remote operation: Besides controlling your own computer, it can remotely control other computers or browsers without additional configuration.

- Real-time feedback: The current status and each operation step are displayed in real time while a task runs.

- Local processing: All recognition and operations are done locally, helping protect the privacy and security of user data.

Usage Guide

UI-TARS-desktop is an out-of-the-box AI agent designed to let users perform computer operations through intuitive natural language commands. Installation and usage are described in detail below.

Installation process

The project provides directly downloadable installers on GitHub. Choose the one that matches your operating system.

- Visit the project release page: Go to the GitHub repository for UI-TARS-desktop and find the "Releases" section in the right sidebar.

- Download the corresponding installation package: Depending on your operating system (Windows or macOS), download the latest version of the installation file. For example, download the `.exe` or `.msi` file for Windows, or the `.dmg` file for macOS.

- Perform the installation:

  - Windows users: Double-click the downloaded installer and follow the standard installation wizard prompts to complete the installation.

  - macOS users: Double-click to open the `.dmg` file and drag the application icon to the Applications folder.

Core Functions Operation Guide

After installation, start UI-TARS-desktop and you will see a simple interface. The core logic can be summarized in three steps: give an instruction -> the model understands and plans -> automated execution.
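The three-step logic can be illustrated with a minimal Python sketch. This is not UI-TARS-desktop's actual code or API: `FakeDesktop`, `plan_next_action`, `run_agent`, and the action kinds are all hypothetical names, and the planner is a rule-based stub standing in for the vision-language model. It only shows the general shape of an observe, plan, and act loop.

```python
from dataclasses import dataclass, field


@dataclass
class Action:
    """One GUI step. The kinds used here are illustrative, not the tool's real schema."""
    kind: str  # "click", "type", or "done"
    x: int = 0
    y: int = 0
    text: str = ""


@dataclass
class FakeDesktop:
    """Hypothetical stand-in for a real screen plus mouse and keyboard."""
    focused: bool = False
    typed: str = ""
    log: list = field(default_factory=list)

    def screenshot(self) -> dict:
        # A real agent would capture pixels; this returns a tiny state summary.
        return {"focused": self.focused, "typed": self.typed}

    def perform(self, action: Action) -> None:
        self.log.append(action.kind)
        if action.kind == "click":
            self.focused = True
        elif action.kind == "type" and self.focused:
            self.typed += action.text


def plan_next_action(instruction: str, screen: dict) -> Action:
    """Placeholder for the model call: a rule-based stub, not a real planner."""
    if not screen["focused"]:
        return Action("click", x=400, y=300)
    if screen["typed"] != instruction:
        return Action("type", text=instruction)
    return Action("done")


def run_agent(instruction: str, desktop: FakeDesktop, max_steps: int = 10) -> bool:
    """Observe the screen, ask the planner for one action, execute it, repeat."""
    for _ in range(max_steps):
        action = plan_next_action(instruction, desktop.screenshot())
        if action.kind == "done":
            return True
        desktop.perform(action)
    return False  # step budget exhausted before the planner reported completion


if __name__ == "__main__":
    desktop = FakeDesktop()
    finished = run_agent("hello", desktop)
    print(finished, desktop.log)
```

The step budget (`max_steps`) is a design choice of this sketch: a loop driven by model output needs some bound so that a confused planner cannot run indefinitely.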

1. Local Operator

This is the most basic, core feature: it lets the AI agent directly operate the computer you are currently using.

Workflow:

- Launch the application: Open UI-TARS-desktop.

- Confirm the operating mode: On the main screen, select or confirm that you are in "local operation" mode.

- Enter an instruction: In the text input box, describe the task you want to accomplish in clear natural language. The more specific the instruction, the better the result.

  - Example 1 (Setting up VS Code): "Please help me turn on the autosave feature in VS Code and set the autosave delay to 500 milliseconds."

  - Example 2 (Operating GitHub): "Help me check the latest open issue for the UI-TARS-Desktop project on GitHub."

- Start execution: Press Enter or click the "Execute" button.

- Observe the execution: You should see the mouse pointer start moving, clicking, and typing on its own, as if an invisible person were operating the computer for you. The application interface gives real-time feedback on the step currently being performed.

- Task complete: The agent stops after completing all the steps and waits for your next command.

2. Remote computer/browser operation (Remote Operator)

This is a standout feature of UI-TARS-desktop: it lets you operate another device from your own computer, and the process does not require complex configuration.

Workflow:

- Switch mode: On the main screen of the application, switch to "Remote PC Operation" or "Remote Browser Operation" mode.

- Connect to the remote device: The app may ask you to enter the IP address of the target device or to connect with a pairing code (refer to the in-app prompts for the specific connection method).

- Issue an instruction: After a successful connection, operation is exactly the same as in local mode. Type your command in the input box.

  - Example (remote browser): "Open booking.com on a remote browser and help me search for the highest rated Ritz-Carlton hotels near LAX from Sept. 1 to Sept. 6."

- Monitor remote execution: You can see a live view of the remote device's screen on your local screen and watch every step the agent performs.

Tips & Best Practices

- Make instructions clear and unambiguous: Avoid vague references. For example, instead of saying "open that file", say "open the file ProjectReport.docx on the desktop".

- Decompose complex tasks: For a very complex multi-step task, break it down into several simple subtasks and give the instructions one step at a time. This helps increase the success rate.

- Provide context: If the task involves a specific application, make sure the program is open and in the foreground first, or include the step that opens it in the instruction, e.g., "Open Excel and create a new blank workbook."
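The "decompose complex tasks" tip can be sketched as a small Python helper. UI-TARS-desktop is operated through its input box, so everything here is an assumption made for illustration: `run_subtasks` is a hypothetical helper, and `execute` stands for whatever submits one instruction and reports whether it succeeded. The point is only that short instructions run in order, and a failure leaves a clear place to resume.

```python
from typing import Callable, List, Tuple


def run_subtasks(
    subtasks: List[str], execute: Callable[[str], bool]
) -> Tuple[List[str], List[str]]:
    """Run instructions in order and stop at the first failure.

    Returns (completed, remaining) so the failed step can be rephrased
    and retried without repeating the steps that already succeeded.
    """
    completed: List[str] = []
    for index, instruction in enumerate(subtasks):
        if not execute(instruction):
            return completed, subtasks[index:]
        completed.append(instruction)
    return completed, []


if __name__ == "__main__":
    steps = [
        "Open Excel and create a new blank workbook.",
        "Type 'Q3 Budget' into cell A1.",
        "Save the workbook to the desktop.",
    ]

    # Hypothetical executor that pretends the save step fails.
    def fake_execute(instruction: str) -> bool:
        return not instruction.startswith("Save")

    done, remaining = run_subtasks(steps, fake_execute)
    print("completed:", done)
    print("remaining:", remaining)
```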

With the steps above, you can use UI-TARS-desktop as a personal AI assistant that handles everyday, repetitive desktop tasks.

Application Scenarios

- Daily office automation: Users can reduce manual work by giving natural language commands that let the AI agent automate repetitive operations in office software (e.g. Word, Excel), such as formatting documents, filling out forms, and organizing data.

- Software testing and demos: Developers or testers can instruct the AI agent to execute a series of test cases on the GUI to check whether the software works properly. It can also perform all the operational steps automatically while a video demonstration of the product is recorded.

- Information collection and organization: When information needs to be collected from multiple web pages or applications, UI-TARS-desktop can be instructed to open the relevant pages, copy the required content, and paste it into a specified document to form a summary report.

- Remote technical support: With the user's authorization, support staff can use the remote operation function to perform repair steps directly on the user's computer, avoiding the inefficiency of giving guidance by voice or text alone.

FAQ

- Which operating systems does UI-TARS-desktop support?

  Windows and macOS desktop operating systems are currently supported.

- Is programming knowledge required to use this tool?

  Not at all. Its core design concept is interaction through natural language, so users without any programming background can automate their computer operations.

- Is my data safe?

  When the tool operates the local computer, all screenshot recognition and model processing are carried out locally, and your screen data is not uploaded to the cloud, which helps protect personal privacy and data security.

- How does it differ from other automation tools like Selenium?

  Traditional tools such as Selenium automate based on code and the DOM structure of web pages. They require scripting and cannot operate desktop applications. UI-TARS-desktop, by contrast, is based on visual understanding: it "sees" the screen like a human being, can control both browsers and any desktop software, and is driven by natural language, so no code is required.