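CogVLM2 is an open-source multimodal model developed by Tsinghua University's data mining research group (THUDM). It is built on Llama3-8B and aims to match or exceed the performance of GPT-4V. It supports image understanding, multi-turn dialogue, and video understanding. It handles a context length of up to 8K tokens and image resolutions up to 1344×1344. The CogVLM2 series includes several variants, each optimized for a different task such as text QA, document QA, and video QA. The model supports both Chinese and English and can be tried online or deployed in several ways, which makes it easy to test and apply.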


Features

- Image understanding: Understands and processes high-resolution images.

- Multi-turn dialogue: Handles multi-turn conversations, which suits complex interactive scenarios.

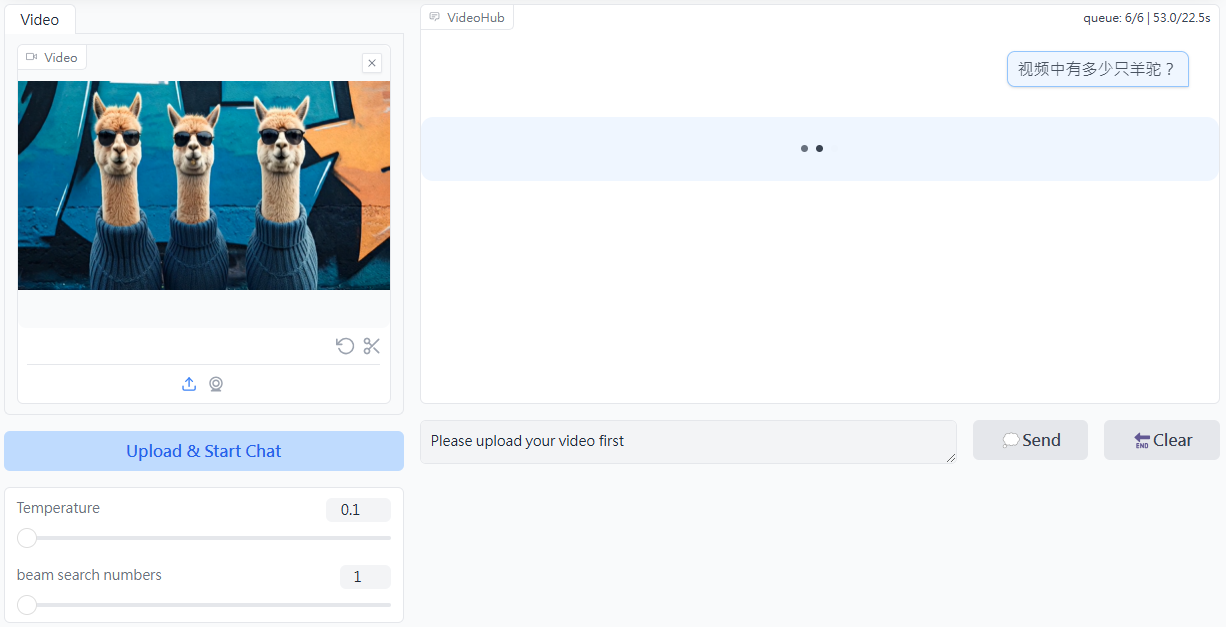

- Video understanding: Understands video content up to 1 minute long by extracting keyframes.

- Bilingual support: Supports both Chinese and English.

- Open source: The full source code and model weights are available, which makes secondary development easier.

- Online experience: An online demo platform lets users try the model's features directly.

- Multiple deployment options: Supports Hugging Face, ModelScope, and other platforms.

Usage Guide

Installation and Deployment

- Clone the repository:

```shell
git clone https://github.com/THUDM/CogVLM2.git
cd CogVLM2
```

- Install dependencies:

```shell
pip install -r requirements.txt
```

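- Download model weights: Download the weights for the model variant you need, for example from Hugging Face or ModelScope. Save them to a local directory; that path is what `path_to_model_weights` refers to in the examples below.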
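Before loading anything, it can help to confirm that the weights directory looks like a complete download. The sketch below assumes the usual Hugging Face checkpoint layout: a `config.json` plus one or more `*.safetensors` or `*.bin` weight files. The helper name `looks_like_checkpoint` is hypothetical. The check is only a sanity test and does not verify that the weights are intact.

```python
from pathlib import Path


def looks_like_checkpoint(model_dir: str) -> bool:
    """Return True if model_dir contains config.json and at least one weight file.

    Hypothetical helper assuming a Hugging Face-style layout
    (config.json + *.safetensors or *.bin); it does not check shard integrity.
    """
    root = Path(model_dir)
    if not root.is_dir() or not (root / "config.json").is_file():
        return False
    # Weights may be stored as safetensors shards or legacy PyTorch .bin files.
    shards = list(root.glob("*.safetensors")) + list(root.glob("*.bin"))
    return len(shards) > 0
```

For example, call `looks_like_checkpoint('path_to_model_weights')` before loading. A `False` result usually means the download was interrupted or points at the wrong folder.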

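Usage Examples

The snippets below are schematic. `CogVLM2`, `load_image`, `load_video`, `start_conversation`, and `predict` are illustrative names, not a published Python API. The official demos in the repository load the model through Hugging Face `transformers` with `AutoModelForCausalLM.from_pretrained(..., trust_remote_code=True)`.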

Image understanding

- Load the model:

```python
# Schematic: `cogvlm2.CogVLM2` is an illustrative wrapper, not an installable package
from cogvlm2 import CogVLM2

model = CogVLM2.load('path_to_model_weights')
```

- Process an image:

```python
# Schematic: load_image and predict are illustrative names
image = load_image('path_to_image')
result = model.predict(image)
print(result)
```
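The overview gives 1344×1344 as the maximum image resolution. If you want to shrink very large images yourself before sending them, you only need one scale factor that preserves the aspect ratio. `fit_within` below is a hypothetical client-side helper, not part of CogVLM2; the model's own preprocessing still decides how images are resized internally.

```python
def fit_within(width: int, height: int, max_side: int = 1344) -> tuple[int, int]:
    """Scale (width, height) down so neither side exceeds max_side.

    Hypothetical helper: keeps the aspect ratio and never upscales.
    max_side=1344 mirrors the stated maximum resolution.
    """
    if width <= 0 or height <= 0:
        raise ValueError("image dimensions must be positive")
    # Largest factor <= 1 that brings the longer side down to max_side.
    scale = min(1.0, max_side / max(width, height))
    # round() gives integer pixel sizes; max(1, ...) avoids zero-width results.
    return max(1, round(width * scale)), max(1, round(height * scale))
```

For example, a 4032×3024 photo maps to 1344×1008, while an 800×600 image is returned unchanged.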

Multi-turn dialogue

- Start a conversation:

```python
# Schematic: start_conversation is an illustrative method name
conversation = model.start_conversation()
```

- Ask questions (each call continues the same conversation):

```python
response = conversation.ask('Your question')
print(response)
```
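Multi-turn dialogue means sending earlier turns along with each new question, and the 8K context length limits how much history fits. The sketch below keeps history as a list of (question, answer) pairs and drops the oldest turns when a token budget is exceeded. Several parts are assumptions. `Conversation` is a hypothetical class, and `generate` stands in for whatever call actually runs the model. `count_tokens` defaults to a crude whitespace count instead of the real tokenizer. The `USER: ... ASSISTANT:` prompt layout is only illustrative.

```python
from typing import Callable, List, Tuple

Turn = Tuple[str, str]  # (question, answer)


def whitespace_tokens(text: str) -> int:
    """Crude stand-in for a real tokenizer: counts whitespace-separated words."""
    return len(text.split())


class Conversation:
    """Hypothetical multi-turn wrapper that keeps history within a token budget."""

    def __init__(
        self,
        generate: Callable[[str], str],
        max_tokens: int = 8192,
        count_tokens: Callable[[str], int] = whitespace_tokens,
    ) -> None:
        self.generate = generate        # assumed: prompt string in, reply string out
        self.max_tokens = max_tokens    # 8K context, per the model description
        self.count_tokens = count_tokens
        self.history: List[Turn] = []

    def _build_prompt(self, question: str) -> str:
        # Illustrative layout; the model's real chat template may differ.
        lines = [f"USER: {q} ASSISTANT: {a}" for q, a in self.history]
        lines.append(f"USER: {question} ASSISTANT:")
        return "\n".join(lines)

    def ask(self, question: str) -> str:
        prompt = self._build_prompt(question)
        # Drop the oldest turns until the prompt fits or no history is left.
        while self.history and self.count_tokens(prompt) > self.max_tokens:
            self.history.pop(0)
            prompt = self._build_prompt(question)
        answer = self.generate(prompt)
        self.history.append((question, answer))
        return answer
```

Trimming whole turns from the front keeps the most recent context intact. A real implementation would count tokens with the model's tokenizer and would also reserve part of the budget for the reply, which this sketch ignores.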

Video understanding

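- Load and analyze a video (video question answering is handled by the series' video-oriented variant):

```python
# Schematic: load_video and predict are illustrative names
video = load_video('path_to_video')
result = model.predict(video)
print(result)
```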
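The feature list says video understanding works on clips up to about one minute by extracting keyframes. A simple way to choose frames is uniform sampling: split the clip into equal segments and take the middle frame of each. The sketch below is a generic version of that idea, not CogVLM2's own sampler. The function name, the default of 24 frames, and the 60-second cap are assumptions.

```python
def sample_frame_indices(
    total_frames: int,
    fps: float,
    num_frames: int = 24,
    max_seconds: float = 60.0,
) -> list[int]:
    """Pick evenly spaced frame indices from the first max_seconds of a video.

    Hypothetical helper: each index is the middle frame of one of num_frames
    equal segments. Returns fewer indices if the clip has fewer frames.
    """
    if total_frames <= 0 or fps <= 0 or num_frames <= 0:
        raise ValueError("total_frames, fps and num_frames must be positive")
    # Only frames inside the supported duration window are candidates.
    usable = max(1, min(total_frames, int(max_seconds * fps)))
    count = min(num_frames, usable)
    segment = usable / count
    return [int(segment * i + segment / 2) for i in range(count)]
```

For a 10-second clip at 24 fps (240 frames) with `num_frames=4`, this picks frames 30, 90, 150 and 210. Decoding those frames with a video library is left out so the sketch has no dependencies.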

Online Experience

Users can access the CogVLM2 online demo platform to experience the model's functionality online without local deployment.