Sim Studio (Sim) is an open source platform for building AI agent workflows, designed to help users quickly create and deploy Large Language Model (LLM) agents that connect to various tools. It offers an intuitive interface for developers, technology enthusiasts, and enterprise users. Sim Studio supports both cloud and local deployment and runs in a wide range of environments. Users can start it quickly with a single NPM command or Docker Compose, or run it in a development container or through manual configuration. The platform supports loading local models on NVIDIA GPU or CPU environments. Sim Studio emphasizes a lightweight design and a user-friendly experience for rapid development of AI-driven workflows.

Feature List

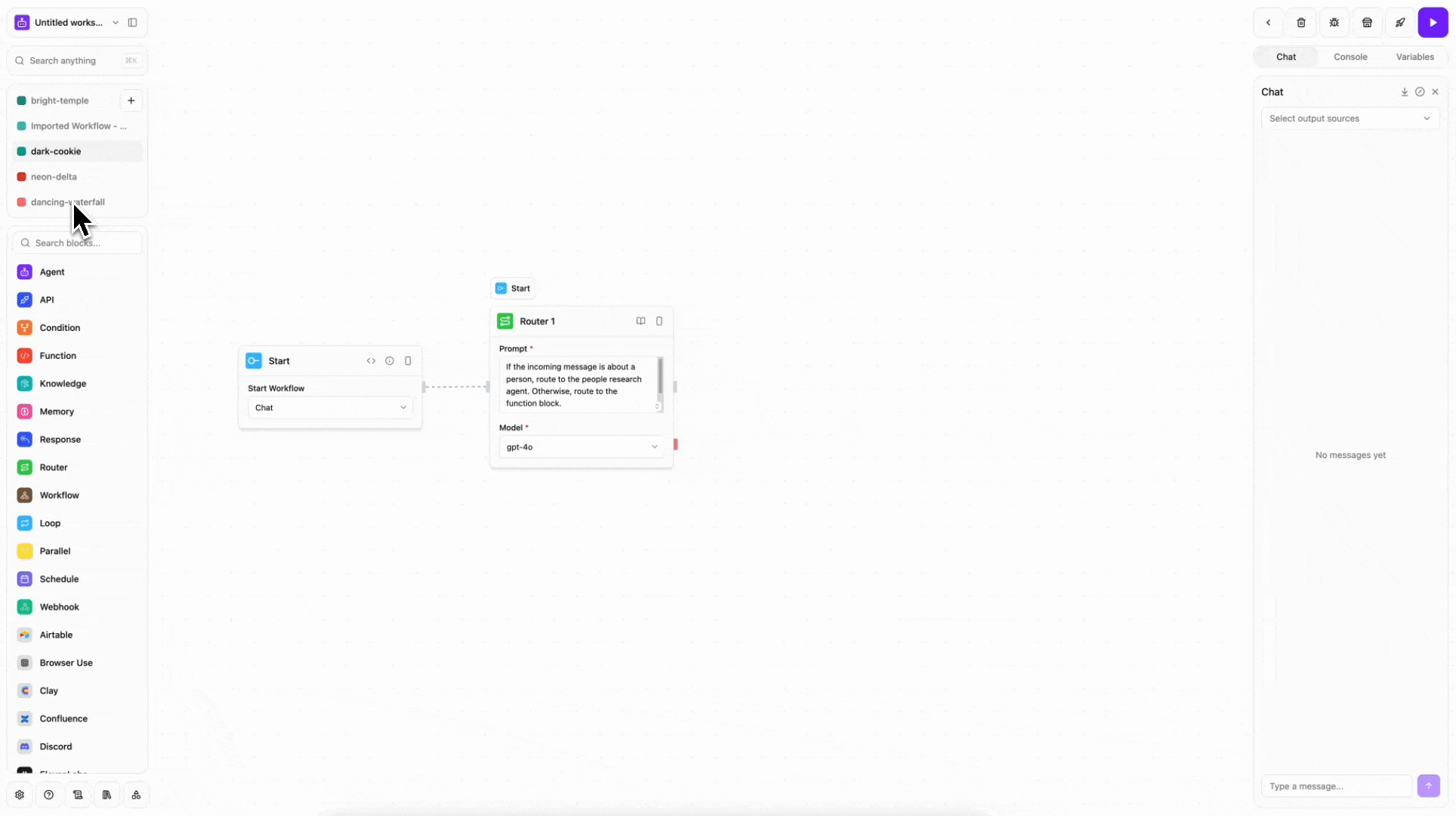

- Workflow construction: Rapidly design AI agent workflows and connect to external tools and data sources through an intuitive interface.

- Multiple Deployment Options: Support for cloud hosting, local running (NPM, Docker Compose, development containers, manual configuration).

- Local Model Support: Integrate local large language models, pulling them with a script and running them on GPU or CPU environments.

- Real-Time Server: Provide real-time communication capabilities to support dynamic workflow adjustments and data interactions.

- Database Integration: Supports configuration of databases through Drizzle ORM to simplify data management.

- Open Source and Community Contributions: Based on the Apache-2.0 license, community involvement in development and optimization is encouraged.

Usage Guide

Installation and Deployment

Sim Studio offers a variety of installation and deployment options to meet different user needs. Below are the detailed steps:

Method 1: Use NPM packages (easiest)

This is the fastest way to deploy locally; the only prerequisite is Docker.

- Make sure Docker is installed and running.

- Run the following command in the terminal:

  npx simstudio

- Open your browser and visit http://localhost:3000/.

- Optional parameters:

  - -p, --port <port>: Specifies the port to run on (default 3000).

  - --no-pull: Skip pulling the latest Docker image.
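As a rough illustration of how the optional parameters above combine, the sketch below assembles the launch command from a port number and a pull preference. The helper name `build_sim_cmd` and its two arguments are hypothetical; only `npx simstudio`, `--port`, and `--no-pull` come from the steps above.

```shell
#!/bin/sh
# Hypothetical helper: prints the launch command instead of running it,
# so the result can be reviewed before anything is started.
#   $1 = port (optional; 3000 is the documented default)
#   $2 = "no-pull" to skip pulling the latest Docker image (optional)
build_sim_cmd() {
    cmd="npx simstudio"
    # Only add --port when a non-default port was requested.
    if [ -n "$1" ] && [ "$1" != "3000" ]; then
        cmd="$cmd --port $1"
    fi
    if [ "$2" = "no-pull" ]; then
        cmd="$cmd --no-pull"
    fi
    printf '%s\n' "$cmd"
}

# Example: prints "npx simstudio --port 8080 --no-pull"
build_sim_cmd 8080 no-pull
```

Printing the command rather than executing it keeps the sketch safe to try on a machine where Docker is not yet running.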

Method 2: Using Docker Compose

Ideal for users who need more control.

- Clone the repository:

  git clone https://github.com/simstudioai/sim.git

  cd sim

- Run Docker Compose:

  docker compose -f docker-compose.prod.yml up -d

- Visit http://localhost:3000/.

- If a local model is used:

  - Pull the model:

    ./apps/sim/scripts/ollama_docker.sh pull <model_name>

  - Start an environment that supports local models:

    - GPU environment:

      docker compose --profile local-gpu -f docker-compose.ollama.yml up -d

    - CPU environment:

      docker compose --profile local-cpu -f docker-compose.ollama.yml up -d

  - Server deployment: edit docker-compose.prod.yml, set OLLAMA_URL to the server's public IP (e.g. http://1.1.1.1:11434), and then re-run it.
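Choosing between the GPU and CPU profiles can be scripted. The sketch below is one possible approach, not part of Sim Studio: the helper name `pick_ollama_profile` is hypothetical, and treating the presence of `nvidia-smi` on the PATH as a sign of a usable NVIDIA GPU is an assumption that may not hold on every host.

```shell
#!/bin/sh
# Hypothetical helper: choose a Compose profile by checking whether a GPU
# tool is on PATH. Using nvidia-smi as the signal is an assumption; its
# presence does not guarantee that Docker itself can reach the GPU.
#   $1 = command to probe for (defaults to nvidia-smi)
pick_ollama_profile() {
    probe="${1:-nvidia-smi}"
    if command -v "$probe" >/dev/null 2>&1; then
        echo "local-gpu"
    else
        echo "local-cpu"
    fi
}

# Print the Compose command for this machine rather than running it.
echo "docker compose --profile $(pick_ollama_profile) -f docker-compose.ollama.yml up -d"
```

If the GPU profile fails to start, falling back to the CPU command listed above is the simplest recovery.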

Method 3: Using Development Containers

For developers using VS Code.

- Install VS Code and the Remote - Containers extension.

- When you open the cloned Sim Studio project, VS Code will prompt "Reopen in Container".

- Click the prompt and the project automatically runs in the container.

- Run in the terminal:

  bun run dev:full

  Or use the shortcut command:

  sim-start

Method 4: Manual Configuration

Ideal for users who need full customization.

- Clone the repository and install the dependencies:

  git clone https://github.com/simstudioai/sim.git

  cd sim

  bun install

- Configure environment variables:

  cd apps/sim

  cp .env.example .env

  Edit the .env file and set DATABASE_URL, BETTER_AUTH_SECRET, and BETTER_AUTH_URL.

- Initialize the database:

  bunx drizzle-kit push

- Start the service:

  - Run the Next.js front end:

    bun run dev

  - Run the real-time server:

    bun run dev:sockets

  - Run both at the same time (recommended):

    bun run dev:full
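Before initializing the database, it can help to confirm that the three environment variables named above are actually set. The following is a minimal sketch under that assumption; the function name `check_env_file` is hypothetical, and the check only looks for a non-empty value after `=`, not for a valid one.

```shell
#!/bin/sh
# Hypothetical pre-flight check: report which required keys are missing
# or empty in an env file. Returns 0 only when all three are present.
#   $1 = path to the env file (defaults to .env)
check_env_file() {
    env_file="${1:-.env}"
    missing=0
    for key in DATABASE_URL BETTER_AUTH_SECRET BETTER_AUTH_URL; do
        # Require KEY= at the start of a line followed by at least one
        # character; commented-out lines (starting with #) do not match.
        if ! grep -Eq "^${key}=.+" "$env_file" 2>/dev/null; then
            echo "missing or empty: $key"
            missing=1
        fi
    done
    return "$missing"
}

# Example (run from apps/sim):
#   check_env_file .env && bunx drizzle-kit push
```

Chaining the check with `&&` means the database push only runs when every key has a value.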

Main Feature Workflows

- Creating AI workflows:

  - Log in to Sim Studio (cloud or local).

  - Select "New Workflow" in the interface.

  - Drag and drop modules, or configure AI agents from templates, to connect to tools such as Slack, Notion, or custom APIs.

  - Set trigger conditions and output targets, then save and test the workflow.

- Using local models:

  - Pull models (e.g. LLaMA or other open source models):

    ./apps/sim/scripts/ollama_docker.sh pull <model_name>

  - Select the local model in the workflow configuration and specify GPU or CPU mode.

  - Test the model's response to ensure that the workflow is working properly.

- Real-time communication:

  - Sim Studio's Real-Time Server supports dynamic workflow adjustments.

  - Enable real-time mode in the interface to observe the data flow and output results.

  - Workflow updates can be triggered manually through the API or interface.

- Database management:

  - Use Drizzle ORM to configure a database to store workflow data.

  - Set DATABASE_URL in .env, then run bunx drizzle-kit push to initialize the database.

  - View and manage data tables through the interface.
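When several local models are needed, the pull step described above can be wrapped in a loop. This is only a sketch: the function name `pull_models` and the `DRY_RUN` switch are hypothetical, and the model names are whatever the caller passes in. Only the script path and its `pull` subcommand come from the steps above.

```shell
#!/bin/sh
# Hypothetical wrapper around the pull script from the steps above.
# With DRY_RUN=1 it only prints the commands; model names are supplied
# by the caller, and none are assumed to exist.
PULL_SCRIPT="./apps/sim/scripts/ollama_docker.sh"

pull_models() {
    for model in "$@"; do
        if [ "${DRY_RUN:-0}" = "1" ]; then
            echo "$PULL_SCRIPT pull $model"
        else
            # Stop at the first failed pull so the error is not buried.
            "$PULL_SCRIPT" pull "$model" || return 1
        fi
    done
}

# Example (from the repository root):
#   DRY_RUN=1 pull_models <model_name> <another_model_name>
```

Running once with `DRY_RUN=1` shows exactly which commands would be executed before any large download starts.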

Notes

- Make sure the Docker version is up to date to avoid compatibility issues.

- Local models require large storage space and computational resources, so high-performance GPUs are recommended.

- Server deployment requires configuration of public IPs and ports to ensure proper external access.
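For server deployments, a quick format check on the OLLAMA_URL value can catch a missing scheme or port before the containers are restarted. The sketch below is an illustration only: the function name `valid_ollama_url` is hypothetical, and requiring an explicit port is an assumption modeled on the http://1.1.1.1:11434 example.

```shell
#!/bin/sh
# Hypothetical sanity check for an OLLAMA_URL value: it must start with
# http:// or https://, name a host, and end in a numeric port.
# Requiring the port is an assumption based on the documented example.
valid_ollama_url() {
    printf '%s\n' "$1" | grep -Eq '^https?://[^/:]+:[0-9]+/?$'
}

# Example:
#   valid_ollama_url "http://1.1.1.1:11434" && echo "format looks fine"
```

A well-formed URL does not prove the port is reachable; requesting the Ollama model list from another machine, for example with `curl "$OLLAMA_URL/api/tags"`, is one way to check external access.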

Application Scenarios

- Automated customer service

  Use Sim Studio to build AI customer service agents that connect to CRM systems and chat tools to automatically respond to customer inquiries with less human intervention.

- Content generation

  Developers can generate articles, code, or design drafts with local models and store the output through Notion or Google Drive integrations.

- Data analysis workflow

  Configure AI agents to analyze CSV data, generate visual reports, and connect to Tableau or custom APIs to automate processing.

- Personal productivity tools

  Connect to calendar, email, and task management tools to automate scheduling or generate meeting summaries.

FAQ

- Is Sim Studio free?

  Sim Studio is an open source project under the Apache-2.0 license and is free to use. The cloud version may involve hosting fees; check the official pricing for details.

- Is programming experience required?

  Not required. The interface is simple and suitable for non-technical users. However, developers can implement more complex functionality through manual configuration or APIs.

- What large language models are supported?

  A variety of open source models (e.g. LLaMA) are supported; they can be pulled via scripts and run locally.

- How do I contribute code?

  Refer to the Contributing Guide in the GitHub repository and submit a Pull Request.