In AI application development, even a well-designed prompt can perform inconsistently: it may deliver excellent results one day and disappointing results the next when it meets a new scenario. This unpredictability is a key obstacle to moving AI applications from prototype to production at scale, and every developer who needs continuity and reliability of service has to confront it.

ByteDance recently open-sourced Coze Studio, its one-stop AI application development platform, together with a companion tool, Coze Loop (扣子罗盘, literally "Coze Compass"). Coze Loop is easy to overlook, but it is the core component that addresses the dilemma above. Its value lies in providing a standardized "experiment" framework for evaluating and iterating on AI models. That framework helps developers move from "tuning by feel" to "data-driven, scientific iteration".

This article uses a real business scenario as its example: generating viral titles for Xiaohongshu (Little Red Book) posts. It demonstrates how to use Coze Loop to run a rigorous quantitative evaluation of an AI application.

What is an "experiment"? A standardized evaluation process for AI models

In Coze Loop, an "experiment" is not an abstract academic concept. It is a complete, structured evaluation process, best understood as organizing a standardized "final exam" for an AI model.

The process consists of four core elements:

- Test Set (assessment set): the "exam paper". It contains a series of input samples (questions) used to examine the model. Each sample can carry an expected output (reference answer) that serves as the basis for scoring.

- Unit Under Test: the "candidate" sitting the exam. It can be a specific Prompt, a packaged Coze Agent, or a complex multi-step Coze Workflow.

- Evaluator: the "marker". It scores each of the candidate's outputs against predefined scoring criteria and gives a reason for each score.

- Experiment: the whole process. It runs from handing out the exam papers, through the candidate's answers and the marker's grading, to producing a detailed "report card".

By reading this detailed report card, developers can see clearly where the AI model is strong and where it is weak. They can then optimize in a targeted way, so the model's capabilities become steadily more reliable.
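The following minimal Python sketch makes the four roles concrete by showing how they relate. The class and type names (`TestCase`, `Score`, `Experiment`, and so on) are hypothetical illustrations, not Coze Loop's API. The only rule the sketch enforces is that a score must fall between 0 and 1.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Tuple

# Hypothetical names for illustration; not Coze Loop's actual API.


@dataclass(frozen=True)
class TestCase:
    """One exam question: an input sample plus an optional reference answer."""
    input: str
    reference_output: Optional[str] = None


@dataclass(frozen=True)
class Score:
    """The marker's verdict on one answer: a value in [0, 1] plus a reason."""
    value: float
    reason: str

    def __post_init__(self) -> None:
        if not 0.0 <= self.value <= 1.0:
            raise ValueError(f"score must be in [0, 1], got {self.value}")


# The unit under test (Prompt, Agent, or Workflow) turns an input into an output.
UnitUnderTest = Callable[[str], str]
# The evaluator grades one output in the context of its test case.
Evaluator = Callable[[TestCase, str], Score]


@dataclass(frozen=True)
class Experiment:
    """Binds one test set to one candidate and one marker."""
    name: str
    test_set: Tuple[TestCase, ...]
    unit_under_test: UnitUnderTest
    evaluator: Evaluator
```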

Step 1: Build a Standardized Test (New Assessment Set)

A high-quality evaluation starts with a high-quality "exam paper". We need to create an assessment set that covers a variety of typical scenarios.

First, name the assessment set, e.g., "Little Red Book Title Writing Test Paper".

Next, enter the test cases row by row. Each data item is a separate question.

If there are many test cases, the platform also supports batch import from a local file, which builds the whole exam paper efficiently.
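A batch import succeeds or fails on the file's format. The sketch below assumes a CSV file with a header row containing `input` and `reference_output` columns, which are the column names that appear later in this article's field mapping. The format Coze Loop actually accepts may differ. Treat this as a pre-import sanity check, not as the platform's importer.

```python
import csv
import io
from typing import Dict, List


def load_test_cases(csv_text: str) -> List[Dict[str, str]]:
    """Parse CSV text into assessment-set rows, rejecting obviously bad input.

    Assumes a header row with `input` and `reference_output` columns.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    missing = {"input", "reference_output"} - set(reader.fieldnames or [])
    if missing:
        raise ValueError(f"missing columns: {sorted(missing)}")
    rows = []
    for row in reader:
        # Short rows yield None for absent fields, so default to "".
        text = (row.get("input") or "").strip()
        if not text:
            raise ValueError(f"empty input ending on line {reader.line_num}")
        rows.append({
            "input": text,
            "reference_output": (row.get("reference_output") or "").strip(),
        })
    return rows
```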

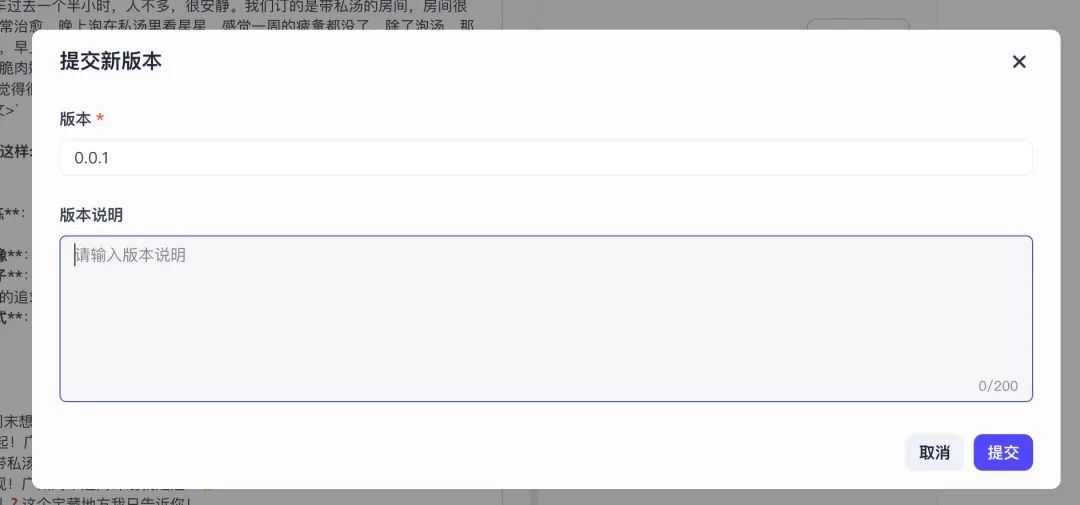

After editing, one crucial step remains: clicking "Submit New Version". This formally "prints" the draft exam and turns it into a fixed, callable version. Only a submitted version lets the system recognize the assessment set and offer it for selection when you create an experiment.
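The draft-versus-version distinction can be modeled as follows. This hypothetical sketch mirrors the semantics described above and is not Coze Loop's implementation. Edits accumulate in a mutable draft, `submit_version` freezes a copy of it, and only submitted versions are selectable.

```python
import copy
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class VersionedAssessmentSet:
    """Edits go to a mutable draft; submitted versions are frozen snapshots."""
    name: str
    draft: List[dict] = field(default_factory=list)
    versions: Dict[str, Tuple[dict, ...]] = field(default_factory=dict)

    def add_case(self, case: dict) -> None:
        self.draft.append(case)

    def submit_version(self, version: str) -> None:
        if version in self.versions:
            raise ValueError(f"version {version!r} already exists")
        # Deep-copy so later edits to the draft cannot change this snapshot.
        self.versions[version] = tuple(copy.deepcopy(self.draft))

    def selectable_versions(self) -> List[str]:
        """Only submitted versions can be picked when creating an experiment."""
        return list(self.versions)

    def get(self, version: str) -> Tuple[dict, ...]:
        if version not in self.versions:
            raise KeyError(f"{version!r} has not been submitted")
        return self.versions[version]
```

Freezing a deep copy is the design point here. An experiment that references "v1" keeps seeing exactly the questions that existed at submission time, however the draft evolves afterwards.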

Step 2: Define the Intelligent Marker (Create Evaluator)

Once the exam papers are ready, a strict "marker" has to be assigned. The clever part of Coze Loop is that the marker is itself a Prompt-driven AI. In other words, we use AI to evaluate AI, which automates the evaluation process.

We need to give this AI marker a detailed "marking guide" (the evaluator Prompt) that clearly defines the scoring criteria and the evaluation logic.

For example, here is an official evaluator template for judging "instruction following":

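Title: "Instruction Following" Evaluator

Your task is to judge whether the response generated by the AI assistant strictly follows the prompt instructions from the system or the user.

<criteria>

- If the AI response fully and accurately meets the requirements of the prompt instructions without deviating from the task, score 1.

- If the AI response partially follows the instructions but omits or deviates from some requirements, score 0.

- If the AI response completely ignores or contradicts the instructions, score 0.

</criteria>

<input>

[Prompt Instruction]: {{instruction}}

[AI Response]: {{ai_response}}

</input>

<guidance>

Read the prompt instructions carefully and understand exactly what the user or system wants the model to do. Then judge whether the AI's response strictly follows these instructions and completes the task requirements fully and accurately.

Think and analyze step by step according to the criteria in this prompt: if the criteria are met, the score is 1; otherwise, the score is 0.

</guidance>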

As the template shows, this evaluator takes two inputs: the "exam question" {{instruction}} and the "candidate's answer" {{ai_response}}. Its output is strictly standardized as a score between 0 and 1 (in this template, either 0 or 1) plus a specific reason for that score. This keeps the results professional and interpretable.
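An evaluator has two halves: filling the template and reading back the verdict. Both can be sketched as below. Filling `{{name}}` placeholders follows the template syntax shown above. The JSON reply shape `{"score": ..., "reason": ...}` is an assumption made for illustration, because the article does not specify how the marker's raw output is encoded.

```python
import json
import re
from typing import Dict, Tuple

PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def fill(template: str, values: Dict[str, str]) -> str:
    """Replace each {{name}} placeholder, failing loudly if a value is missing."""
    def substitute(match: "re.Match[str]") -> str:
        name = match.group(1)
        if name not in values:
            raise KeyError(f"no value supplied for placeholder {name!r}")
        return values[name]
    return PLACEHOLDER.sub(substitute, template)


def parse_verdict(raw: str) -> Tuple[float, str]:
    """Parse a reply assumed to look like {"score": 1, "reason": "..."}."""
    data = json.loads(raw)
    score = float(data["score"])
    if not 0.0 <= score <= 1.0:
        raise ValueError(f"score out of range: {score}")
    reason = str(data.get("reason", "")).strip()
    if not reason:
        raise ValueError("every score must come with a reason")
    return score, reason
```

Rejecting a score without a reason enforces in code the property the article values: every grade must be interpretable.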

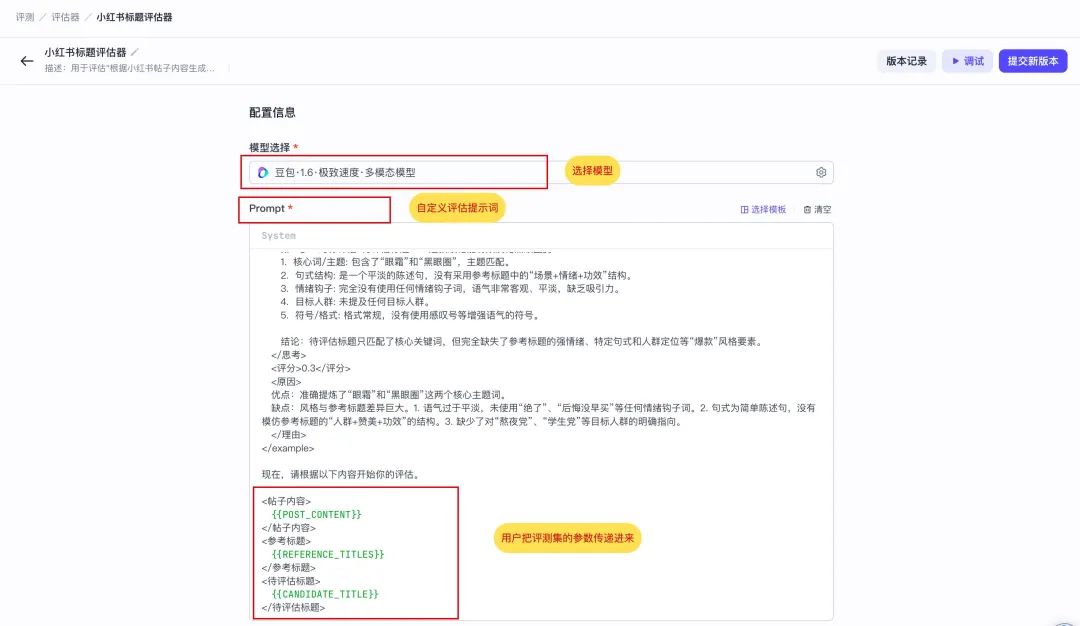

Below is a more specialized evaluator, customized for this "Little Red Book viral title" scenario.

This evaluator, named "Little Red Book Title Reviewer", requires the AI marker to reason carefully before scoring. It takes three input variables:

- {{POST_CONTENT}}: the post content.

- {{REFERENCE_TITLES}}: the reference viral titles we provide (the standard answer).

- {{CANDIDATE_TITLE}}: the title generated by the AI under evaluation (the candidate's answer).

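You are a senior expert evaluator of viral Xiaohongshu titles. Your task is not to write titles. Instead, you assess the quality of a "candidate title", using the "reference titles" provided by the user as the sole gold standard.

Your evaluation must strictly follow this logic:

1. **Analyze the reference titles in depth**: First, study the list of "reference titles" carefully and, inside a `<thinking>` tag, distill their common features. These include but are not limited to:

   * **Core keywords/themes**: Which keywords do they revolve around? (e.g., "saving money", "getting prettier", "pitfalls to avoid")

   * **Sentence structure**: Are they statements, questions, or exclamations? Do they follow a fixed pattern (such as "number + method" or "problem + solution")?

   * **Emotional hooks**: Which words do they use to grab attention, build resonance, or spark curiosity? (e.g., "absolutely amazing", "help!", "wish I'd known sooner")

   * **Target audience**: Do they state or imply a target reader? (e.g., "students", "office workers", "new moms")

   * **Symbols/format**: What is the style of their emoji, spacing, and special-symbol usage?

2. **Benchmark the candidate title**: Next, inside the `<thinking>` tag, compare the "candidate title" against the feature yardstick you just distilled. Judge how closely it imitates the style and essence of the reference titles.

3. **Give a score and a reason**: Based on this comparison, give a final score and assessment.

   * **Scoring scale**: 0-1

   * **0.8-1**: Highly consistent. Style, keywords, structure, and emotional hooks all closely match the reference titles; it could almost pass for one of them.

   * **0.5-0.7**: Mostly consistent. It captures some core elements but deviates somewhat in tone, detail, or "online feel".

   * **0.1-0.4**: Poorly consistent. It is far from the reference titles in style and core elements and has not captured their essence.

   * **Reason**: Clearly explain the reason for your score. You must point out specifically the strengths of the "candidate title" (its similarities to the reference titles) and its weaknesses (its differences from the reference titles).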

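Below is a complete evaluation example. Follow this format strictly when performing the task.

...(the example section is identical to the original and is omitted here for brevity)...

Now, begin your evaluation based on the following content.

<post_content>

{{POST_CONTENT}}

</post_content>

<reference_titles>

{{REFERENCE_TITLES}}

</reference_titles>

<candidate_title>

{{CANDIDATE_TITLE}}

</candidate_title>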
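The rubric names three bands (0.8-1, 0.5-0.7, 0.1-0.4) but leaves some values, such as 0 or 0.75, unassigned. To bucket scores when reading results, you must decide how to handle those gaps. This sketch assumes scores are rounded half-up to one decimal place and reports 0 separately. Both choices are assumptions, not part of the original rubric.

```python
from decimal import ROUND_HALF_UP, Decimal


def rubric_band(score: float) -> str:
    """Map a reviewer score onto the rubric's named bands (assumed rounding)."""
    if not 0.0 <= score <= 1.0:
        raise ValueError(f"score out of range: {score}")
    # Round half-up to one decimal so values like 0.75 land in a named band.
    s = Decimal(str(score)).quantize(Decimal("0.1"), rounding=ROUND_HALF_UP)
    if s >= Decimal("0.8"):
        return "highly consistent"
    if s >= Decimal("0.5"):
        return "mostly consistent"
    if s >= Decimal("0.1"):
        return "poorly consistent"
    # The rubric never mentions 0, so flag it instead of guessing a band.
    return "unbanded (0)"
```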

Step 3: Designate the Evaluation Subject (the Candidate)

With the "exam paper" and the "marker" in place, the next step is to designate the central object of the evaluation: the "candidate". Here, the candidate is a Prompt designed to generate Little Red Book titles.

We reserved a {{POST_CONTENT}} variable in the Prompt. When the experiment runs, the system fills this variable with each post from the exam paper (the assessment set), driving the Prompt to generate results in batch.
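Batch generation comes down to substituting each post into the Prompt and collecting the outputs. In this sketch, `call_model` is a hypothetical stand-in for whatever actually executes the Prompt. In Coze Loop, the platform performs this step for you.

```python
from typing import Callable, List


def generate_batch(
    prompt_template: str,
    posts: List[str],
    call_model: Callable[[str], str],
) -> List[str]:
    """Fill {{POST_CONTENT}} with each post and collect one output per post."""
    outputs = []
    for post in posts:
        # Plain string replacement: post text is inserted verbatim.
        prompt = prompt_template.replace("{{POST_CONTENT}}", post)
        outputs.append(call_model(prompt))
    return outputs
```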

Likewise, the experiment can only call the candidate correctly if you click "Submit New Version" after editing, which freezes it into a traceable version. Version control is the foundation for running multiple comparative experiments that measure whether an optimization actually helps.

A note on evaluation subjects

Coze Loop currently supports three types of evaluation subjects:

- Prompt

- Coze Agent

- Coze Workflow

Developers can choose which subject to evaluate based on the actual form of their application.

Step 4: Launch the evaluation experiment (New experiment)

With all the elements in place, we can formally launch the experiment, bringing together the "exam paper", the "candidate", and the "marker".

Step 1: Set up the exam room

Name the experiment, e.g., "Little Red Book title generation Prompt: round 1 effectiveness evaluation".

Step 2: Write the experiment description

Briefly describe the goal of this experiment so it can be traced later.

Step 3: Select the exam paper

Select the assessment set you created earlier and a specific version of it. If the version list is empty, return to Step 1 (Build a Standardized Test) and check whether a new version of the assessment set was successfully submitted.

Step 4: Designate the candidate

Scenario 1: Evaluating a Prompt (the example in this article)

After selecting Prompt as the evaluation subject, specify the following three items:

- Prompt key: the specific Prompt to test.

- Version: which iteration of the Prompt to test.

- Field mapping: the core of the configuration. In essence, it wires up the data flow.

The system automatically detects that the selected Prompt contains an input variable, {{POST_CONTENT}}.

We need to tell the system to feed the column named POST_CONTENT in the exam paper (the assessment set) into the Prompt's {{POST_CONTENT}} variable. This completes the data path.
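Field mapping can be checked before an experiment runs. The sketch below detects `{{variable}}` placeholders in a Prompt, mirroring the automatic detection described above. It then reports any variable that is unmapped or that points to a column the assessment set lacks. The function names are hypothetical.

```python
import re
from typing import Dict, List, Set

VARIABLE = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def detect_variables(template: str) -> Set[str]:
    """Return the names of all {{variable}} placeholders in a Prompt."""
    return set(VARIABLE.findall(template))


def check_mapping(template: str, columns: Set[str], mapping: Dict[str, str]) -> List[str]:
    """List problems with a variable -> column mapping (empty list means OK)."""
    problems = []
    for var in sorted(detect_variables(template)):
        if var not in mapping:
            problems.append(f"variable {var} is not mapped")
        elif mapping[var] not in columns:
            problems.append(f"variable {var} maps to unknown column {mapping[var]}")
    return problems
```

The same check applies to a Coze Workflow or Agent. Only the source of the variable names changes, from a Prompt template to the subject's declared input parameters.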

Scenario 2: Evaluating a Coze Workflow or Agent

If the evaluation subject is a more complex Coze Workflow or Agent, the logic is the same. For example, after you select a workflow, the system automatically recognizes all of its input parameters. The developer then performs the same field mapping, matching each parameter to the corresponding data column in the assessment set.


For an Agent, the core input is usually the user's question, so the mapping is even more straightforward.

Practical outlook: integration with external frameworks

Many professional developers build AI applications on mainstream open-source frameworks such as LangChain or Dify. For them, evaluating an external API endpoint directly would be a much more efficient way to work. Coze Loop does not yet support evaluating API objects directly, but this points to a direction for secondary development of the platform or for toolchain integration.

In a production environment, we could extend the platform with a new "API" evaluation-subject type. You would then supply only an API address and authentication credentials to plug any external AI service into this evaluation system. The result would be unified evaluation across the whole technology stack. LLMOps platforms such as LangChain's LangSmith already put this pattern into practice.
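The sketch below illustrates what such an "API" evaluation subject might look like, building an authenticated POST request with only Python's standard library. The endpoint, the `{"inputs": ...}` body, the Bearer token scheme, and the `output` field in the reply are all assumptions. A real adapter would follow the external service's own contract, and, as noted above, Coze Loop itself does not offer this subject type.

```python
import json
import urllib.request
from typing import Dict


def build_api_request(url: str, api_key: str, inputs: Dict[str, str]) -> urllib.request.Request:
    """Build (but do not send) a POST request for a hypothetical API subject."""
    body = json.dumps({"inputs": inputs}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            # Assumed auth scheme; replace with whatever the service requires.
            "Authorization": f"Bearer {api_key}",
        },
    )


def call_api_target(request: urllib.request.Request, timeout: float = 30.0) -> str:
    """Send the request and return the reply's `output` field (assumed shape)."""
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        payload = json.loads(resp.read().decode("utf-8"))
    return str(payload["output"])
```

Separating request construction from sending keeps the adapter testable without network access.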

Step 5: Assign Markers and Tasks

Finally, select the "Little Red Book Title Reviewer" evaluator we created.

The system automatically parses out the three inputs this evaluator requires. Again, we supply the material to be graded through field mapping:

- {{POST_CONTENT}} → the assessment set's input column (the question).

- {{REFERENCE_TITLES}} → the assessment set's reference_output column (the standard answer).

- {{CANDIDATE_TITLE}} → the output of the evaluation subject (the Prompt) from the previous step (the candidate's answer).
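The evaluator's three inputs come from two places: the assessment-set row and the subject's output. The `dataset.<column>` / `target.output` source notation below is a hypothetical convention, used only to make that split explicit.

```python
from typing import Dict


def resolve_evaluator_inputs(
    mapping: Dict[str, str],
    row: Dict[str, str],
    target_output: str,
) -> Dict[str, str]:
    """Resolve each evaluator variable from its mapped source."""
    resolved = {}
    for var, source in mapping.items():
        kind, _, name = source.partition(".")
        if kind == "dataset":
            # Read a column of the current assessment-set row.
            resolved[var] = row[name]
        elif kind == "target" and name == "output":
            # Read what the evaluation subject produced for this row.
            resolved[var] = target_output
        else:
            raise ValueError(f"unknown source {source!r} for {var}")
    return resolved
```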

A clear, automated evaluation data flow is now in place: the assessment set poses the questions → the evaluation subject answers them → the evaluator grades the answers against the questions and the standard answers.
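The same data flow can be written as a loop in which the subject answers each row and the evaluator then grades it. Here, `subject` and `evaluator` are hypothetical stand-ins for the Prompt and the LLM marker. The `input`/`reference_output` column names follow the mapping above.

```python
from typing import Callable, Dict, List, Tuple

Row = Dict[str, str]


def run_experiment(
    rows: List[Row],
    subject: Callable[[str], str],
    evaluator: Callable[[str, str, str], Tuple[float, str]],
) -> List[dict]:
    """Questions -> answers -> grades, producing one record per row."""
    records = []
    for row in rows:
        # The evaluation subject answers the question.
        answer = subject(row["input"])
        # The marker grades the answer against the question and reference answer.
        score, reason = evaluator(row["input"], row["reference_output"], answer)
        records.append({"input": row["input"], "output": answer, "score": score, "reason": reason})
    return records
```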

Interpreting the "report card": the start of precise optimization

Click Run Experiment. After a short wait, the system automatically generates a detailed evaluation report.

This report gives developers a scientific basis for leaving "guesswork tuning" behind. It contains quantitative scores and a detailed reason for each evaluation. Together, they are the starting point for understanding the model's capabilities and driving precise iteration.
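Reading a report card usually starts with the average score and the weakest items. The sketch below assumes per-item records with `input`, `score`, and `reason` fields, a hypothetical shape rather than Coze Loop's export format. It also compares two runs on the same assessment set, which is one way to check versioned Prompts against each other.

```python
from statistics import mean
from typing import Dict, List


def summarize(records: List[dict], worst_n: int = 3) -> dict:
    """Aggregate per-item scores: count, mean, and the lowest-scoring items."""
    if not records:
        raise ValueError("no records to summarize")
    ranked = sorted(records, key=lambda r: r["score"])
    return {
        "count": len(records),
        "mean_score": round(mean(r["score"] for r in records), 3),
        # The weakest items and their reasons are where optimization starts.
        "worst": [(r["input"], r["score"], r["reason"]) for r in ranked[:worst_n]],
    }


def compare(baseline: List[dict], candidate: List[dict]) -> Dict[str, float]:
    """Per-input score change between two runs on the same assessment set."""
    before = {r["input"]: r["score"] for r in baseline}
    return {
        r["input"]: round(r["score"] - before[r["input"]], 3)
        for r in candidate
        if r["input"] in before
    }
```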