Annot8 is an image annotation tool for macOS that helps users quickly prepare high-quality datasets for machine learning models. It supports batch image uploads and streamlines annotation with an intuitive interface and keyboard shortcuts, making it suitable for both machine learning beginners and professional developers. Users can add custom labels to images and export them to C...

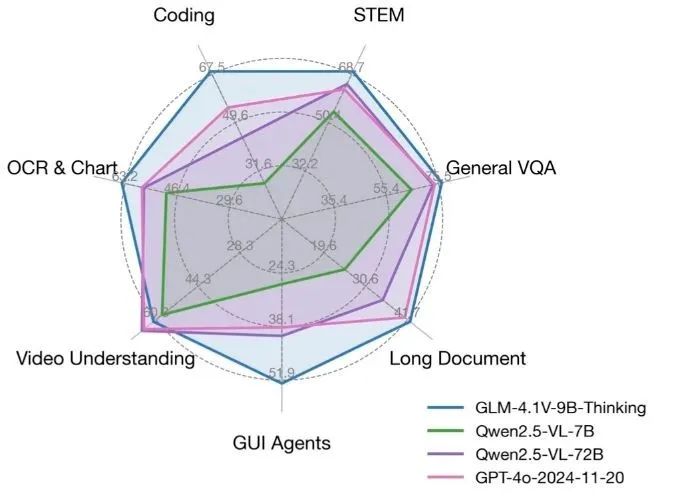

GLM-4.1V-Thinking is an open source vision-language model developed by the KEG Lab at Tsinghua University (THUDM), with a focus on multimodal reasoning. Built on the GLM-4-9B-0414 base model, GLM-4.1V-Thinking uses reinforcement learning and a "chain-of-thought" reasoning mechanism to...

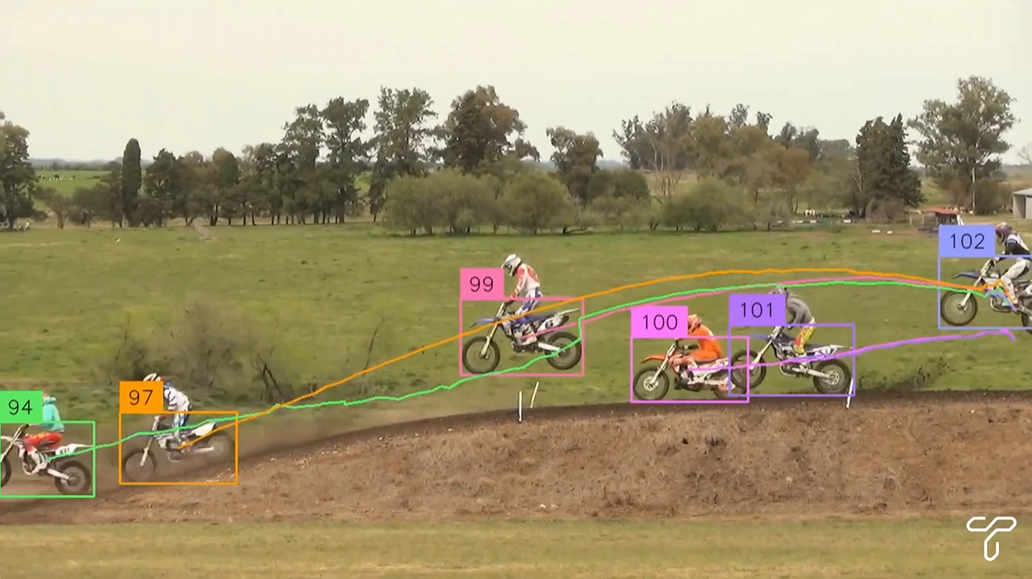

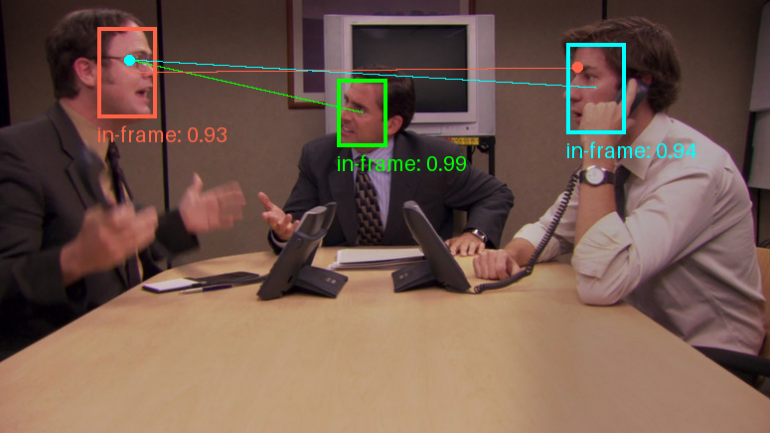

Trackers is an open source Python library for multi-object tracking in video. It integrates several leading tracking algorithms, such as SORT and DeepSORT, and lets users pair them with different object detection models (e.g., YOLO, RT-DETR) for flexible video analysis. Users can easily...
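SORT, one of the algorithms named above, links detections across frames largely by bounding-box overlap (intersection over union, IoU), combining a Kalman filter for motion prediction with the Hungarian algorithm for assignment. The following is a minimal sketch of only the IoU-matching step, assuming boxes in `(x1, y1, x2, y2)` pixel format. It uses greedy matching as a simplified stand-in for the Hungarian algorithm and omits the Kalman filter; the `iou` and `associate` helpers are hypothetical illustrations, not part of the Trackers library's API.

```python
from typing import List, Tuple

# Assumed box format: (x1, y1, x2, y2) in pixels, with x2 > x1 and y2 > y1.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def associate(
    tracks: List[Box], detections: List[Box], threshold: float = 0.3
) -> List[Tuple[int, int]]:
    """Hypothetical helper: pair track indices with detection indices.

    Greedy matching by descending IoU, a simplified stand-in for SORT's
    Hungarian assignment. Pairs scoring below `threshold` stay unmatched.
    """
    candidates = [
        (iou(t, d), ti, di)
        for ti, t in enumerate(tracks)
        for di, d in enumerate(detections)
    ]
    candidates.sort(reverse=True)  # highest-overlap pairs first
    used_tracks, used_dets, matches = set(), set(), []
    for score, ti, di in candidates:
        if score < threshold:
            break  # all remaining pairs overlap too little
        if ti in used_tracks or di in used_dets:
            continue  # each track and detection is matched at most once
        used_tracks.add(ti)
        used_dets.add(di)
        matches.append((ti, di))
    return matches


# Usage: two existing tracks, two new detections listed in a different order.
tracks = [(0, 0, 10, 10), (50, 50, 60, 60)]
detections = [(52, 51, 62, 61), (1, 1, 11, 11)]
print(associate(tracks, detections))  # [(0, 1), (1, 0)]
```

Greedy matching keeps the sketch short and usually agrees with the optimal assignment when objects are well separated; in crowded scenes, an optimal solver such as the Hungarian algorithm avoids locally good but globally poor pairings.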

Describe Anything is an open source project developed by NVIDIA and several universities, built around the Describe Anything Model (DAM). The tool generates detailed descriptions of regions that the user marks in an image or video with points, boxes, scribbles, or masks. It does not ...

Find My Kids is an open source GitHub project created by developer Tomer Klein. It combines DeepFace face recognition with the WhatsApp Green API and is designed to help parents monitor their children's WhatsApp groups through...

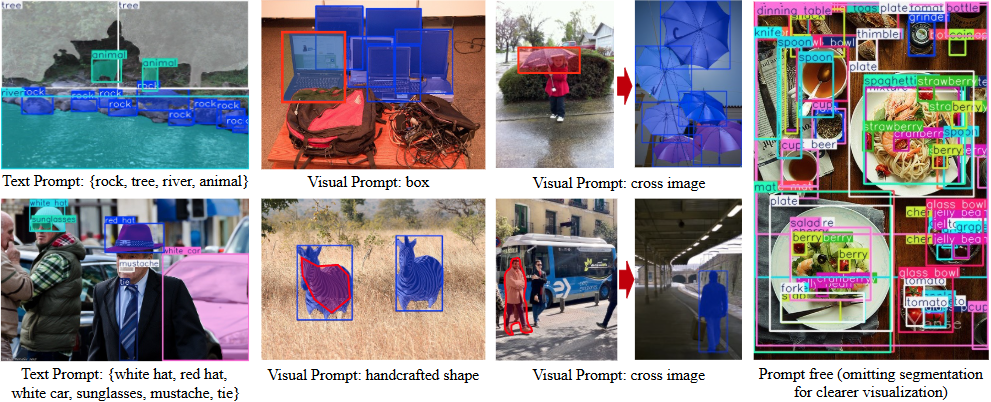

YOLOE is an open source project developed by the Multimedia Intelligence Group (THU-MIG) at Tsinghua University's School of Software; its full name is "You Only Look Once Eye". Built on the PyTorch framework as an extension of the YOLO series, it can detect and segment any object in real time. The project is hosted on GitHu...

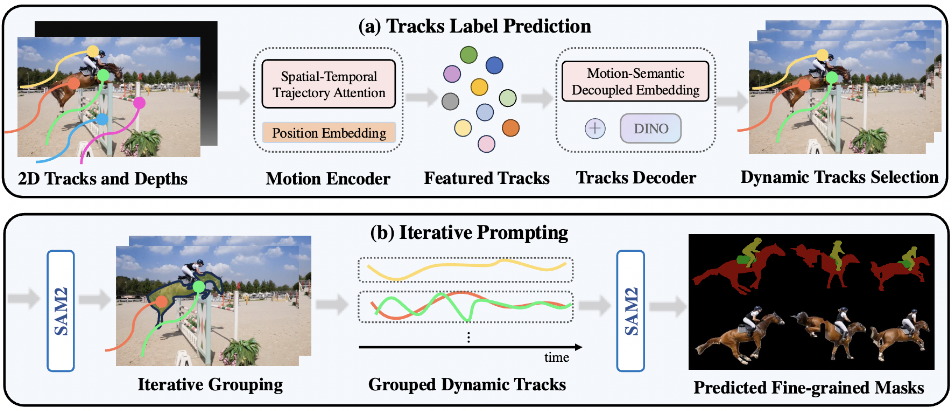

SegAnyMo is an open source project developed by a team of researchers from UC Berkeley and Peking University, including Nan Huang. The tool focuses on video processing and can automatically recognize and segment arbitrary moving objects in a video, such as people, animals, or vehicles. It combines TAPNet, DINOv2 and S...

RF-DETR is an open source object detection model developed by the Roboflow team. It is based on the Transformer architecture, and its core feature is real-time efficiency. It is the first real-time model to exceed 60 AP on the Microsoft COCO dataset, and it also performs well on the RF100-VL benchmark...

HumanOmni is an open source multimodal large model developed by the HumanMLLM team and hosted on GitHub. It focuses on analyzing human-centric video and can process both visual and audio input to help understand emotions, actions, and conversational content. The project used 2.4 million human-centered video clips and 14 million finger...

Vision Agent is an open source project developed by LandingAI (Andrew Ng's team) and hosted on GitHub that helps users quickly generate code to solve computer vision tasks. It uses an agent framework and multimodal models to generate efficient vision AI code from simple prompts for...

Make Sense is a free online image annotation tool that helps users quickly prepare datasets for computer vision projects. It requires no installation: it runs in a web browser, works across operating systems, and is well suited to small deep learning projects. Users can add labels to images and export the results to a variety of formats, such as...

YOLOv12 is an open source project developed by GitHub user sunsmarterjie that focuses on real-time object detection. Built on the YOLO (You Only Look Once) series of frameworks, it introduces an attention mechanism to improve on the performance of traditional convolutional neural networks (CNNs), not only ...

VLM-R1 is an open source vision-language model project developed by Om AI Lab and hosted on GitHub. Building on DeepSeek's R1 approach and the Qwen2.5-VL model, the project significantly improves the model through reinforcement learning (following R1) and supervised fine-tuning (SFT) in...

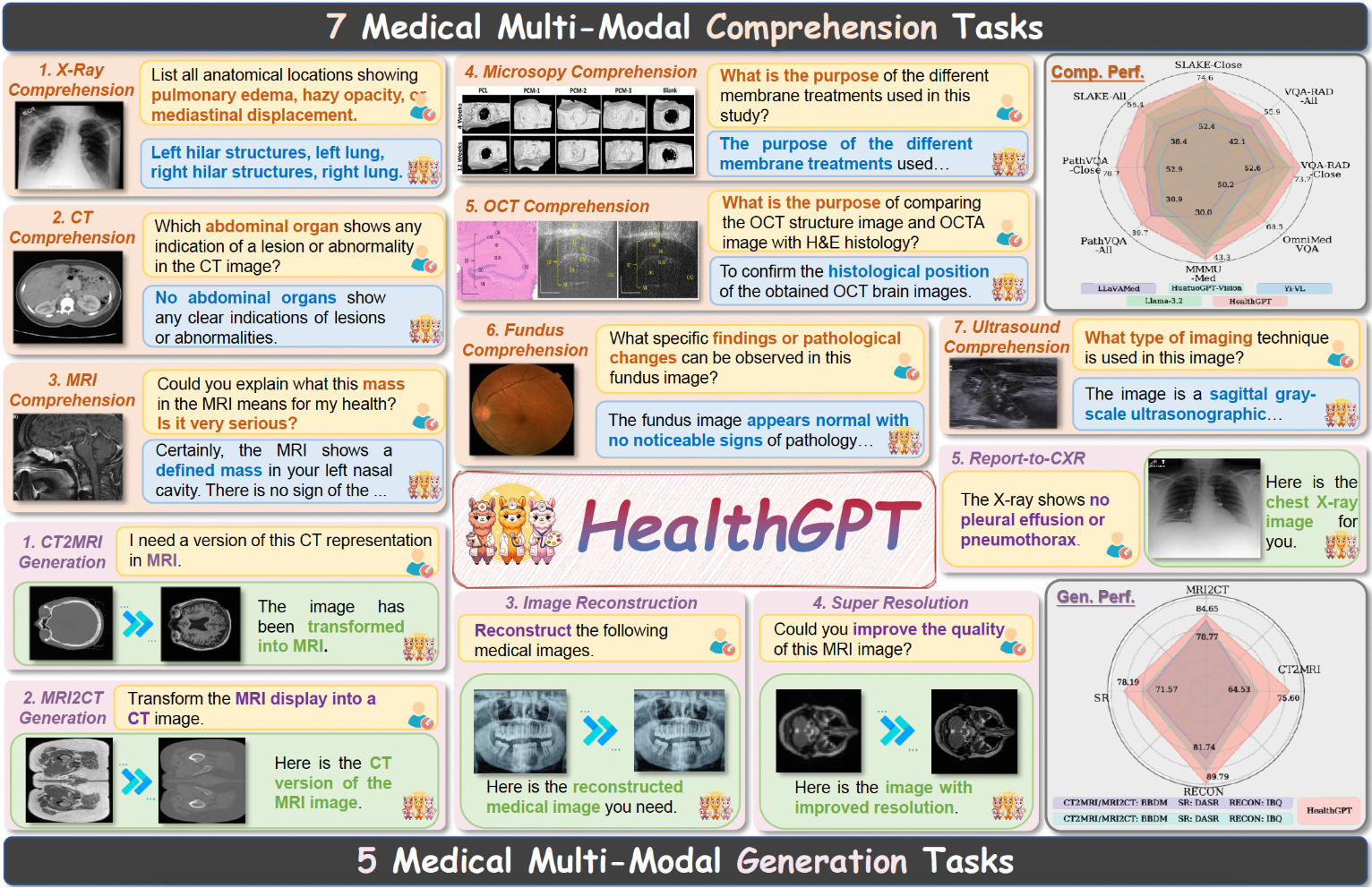

HealthGPT is a state-of-the-art medical large vision-language model designed to unify medical visual understanding and generation through heterogeneous knowledge adaptation. The project integrates both capabilities into a single autoregressive framework, aiming to significantly improve the efficiency and accuracy of medical image processing. HealthGPT supports a variety of...

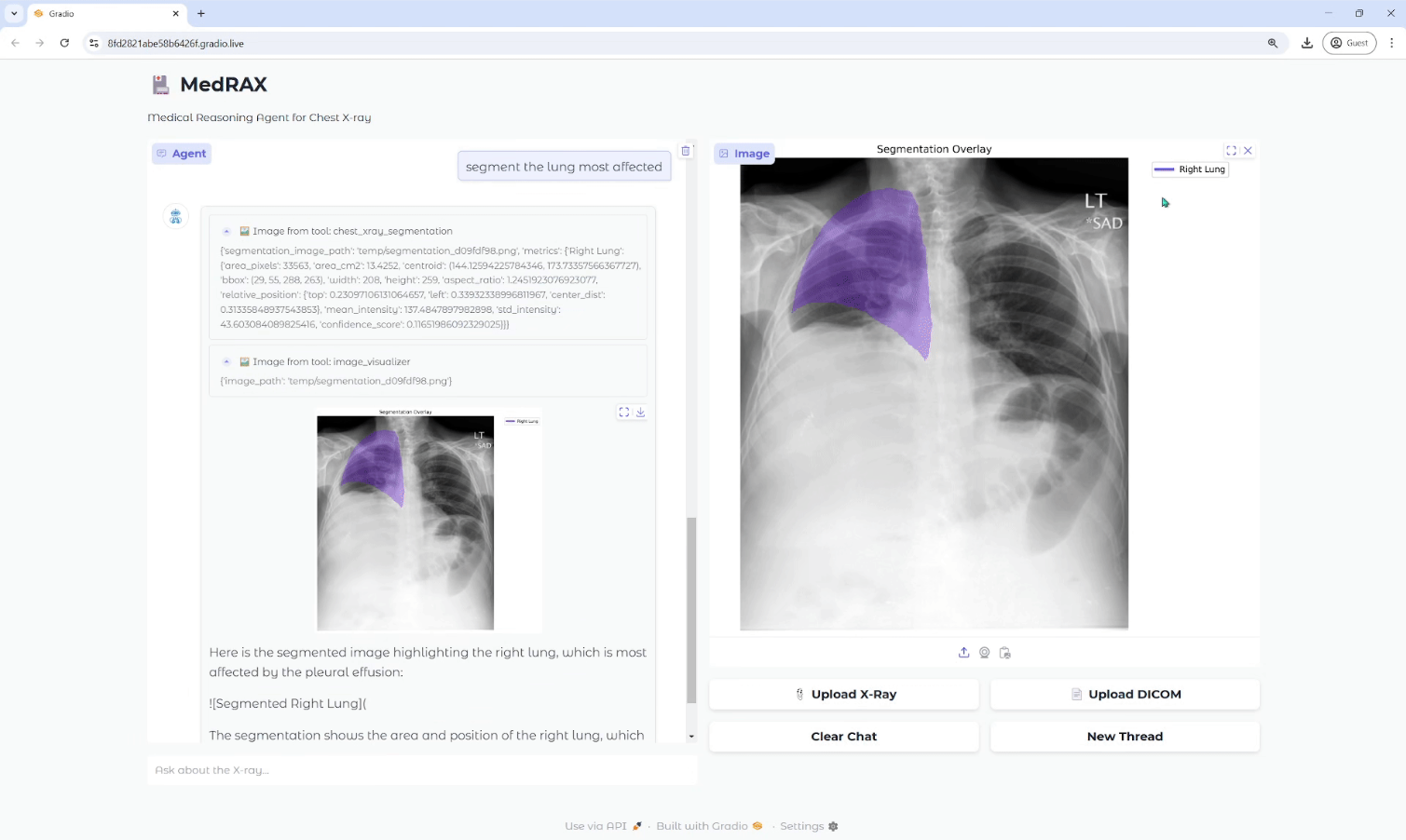

MedRAX is a state-of-the-art AI agent designed specifically for chest X-ray (CXR) analysis. It integrates leading CXR analysis tools with a multimodal large language model to dynamically handle complex medical queries without additional training. MedRAX provides a unified framework through its modular design and strong technical foundation, significantly enhancing...

Agentic Object Detection is an object detection tool from Landing AI. It greatly simplifies the traditional object detection workflow by detecting objects from text prompts, with no need for data labeling or model training. Users simply upload an image and enter a detection prompt, and the AI agent will...

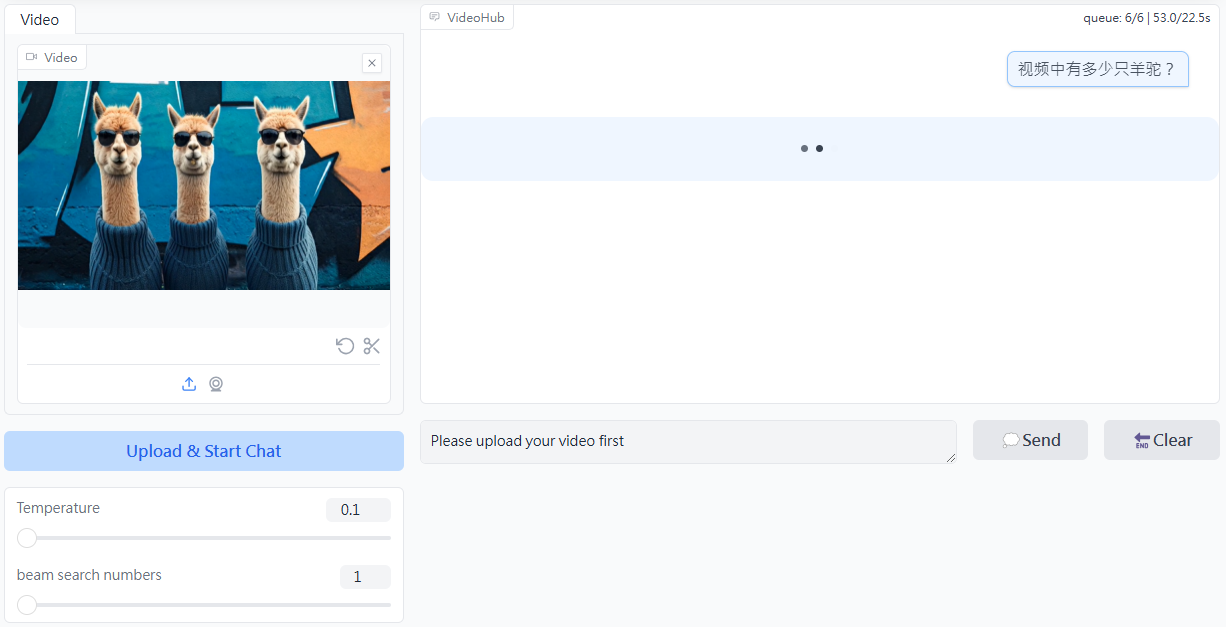

CogVLM2 is an open source multimodal model developed by the Tsinghua University Data Mining Research Group (THUDM). Based on the Llama3-8B architecture, it is designed to match or even exceed the performance of GPT-4V. The model supports image understanding, multi-turn conversations, and video understanding, can handle content lengths of up to 8K, and supports up to 1...

Deeptrain is an AI video-processing platform that integrates video content into a wide range of AI applications and supports more than 200 language models. Users can train models directly by supplying a video URL, without downloading the video. Deeptrain provides a range of capabilities, from video transcription to compression and other...

Gaze-LLE is a gaze target estimation tool based on large-scale learned encoders. Developed by Fiona Ryan, Ajay Bati, Sangmin Lee, Daniel Bolya, Judy Hoffman, and James M. Rehg, it is designed to use pre-trained visual...
