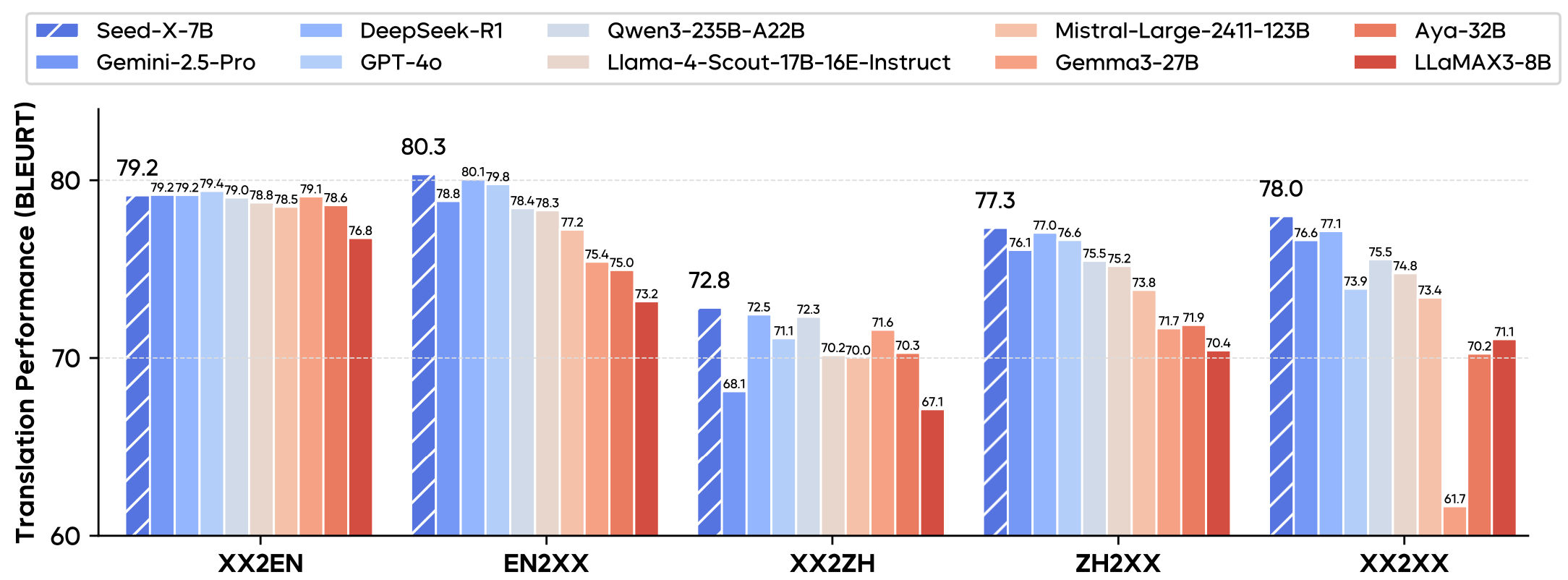

Seed-X-7B is an open-source multilingual translation large language model developed by the Seed team at ByteDance, designed to provide efficient and accurate translation. It is built on the Mistral architecture with 7B parameters and supports translation among 28 languages, covering fields such as the Internet, technology, e-commerce, and biomedicine. The model is optimized through pre-training, instruction fine-tuning, and reinforcement learning, and its translation quality is reported to be comparable to much larger models such as Gemini-2.5 and GPT-4o. Seed-X-7B is easy to deploy and suits both research and production use. The model weights and code are freely available on Hugging Face and GitHub.

Features

- Supports text translation in 28 languages, covering both high- and low-resource languages.

- Provides an instruction-tuned model (Seed-X-Instruct) and a reinforcement-learning-optimized model (Seed-X-PPO); the latter translates better.

- Supports chain-of-thought (CoT) reasoning to improve accuracy on complex translation tasks.

- Built on the Mistral architecture with 7B parameters, so inference is efficient and the model can be deployed on a range of hardware.

- Open-source model weights and code support further development and research by the community.

- Supports diverse translation scenarios, including the Internet, technology, law, literature, and other fields.

- Supports beam search and sampling decoding to optimize translation quality.

User Guide

Installation

To use Seed-X-7B, first install the required environment and dependencies. The steps below follow the instructions in the official GitHub repository:

- Clone the repository

Clone the Seed-X-7B project code with Git:

```bash
git clone https://github.com/ByteDance-Seed/Seed-X-7B.git
cd Seed-X-7B
```

- Create a virtual environment

Create a Python 3.10 environment with Conda and activate it:

```bash
conda create -n seedx python=3.10 -y
conda activate seedx
```

- Install dependencies

Install the Python libraries the project needs:

```bash
pip install -r requirements.txt
```

To speed up inference, optionally install Flash Attention:

```bash
pip install flash_attn==2.5.9.post1 --no-build-isolation
```

- Download the model weights

Download the weights from Hugging Face. Seed-X-PPO-7B is recommended because it translates better:

```python
import os
from huggingface_hub import snapshot_download

save_dir = "ckpts/"
repo_id = "ByteDance-Seed/Seed-X-PPO-7B"
cache_dir = os.path.join(save_dir, "cache")  # -> "ckpts/cache"

snapshot_download(
    cache_dir=cache_dir,
    local_dir=save_dir,
    repo_id=repo_id,
    local_dir_use_symlinks=False,  # deprecated in newer huggingface_hub releases
    resume_download=True,          # deprecated in newer huggingface_hub releases
    allow_patterns=["*.json", "*.safetensors", "*.pth", "*.bin", "*.py", "*.md", "*.txt"],
)
```

- Hardware requirements

- Single-GPU inference: one H100-80G has ample memory to run the 7B model in half precision.

- Multi-GPU inference: for higher throughput, vLLM can shard the model across several GPUs with the `tensor_parallel_size` parameter (for example, `tensor_parallel_size=4` on four GPUs).

- High-performance GPUs are recommended for faster inference.
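As a rough check on the hardware notes above, the arithmetic below estimates how much GPU memory the model weights alone occupy. It assumes a nominal 7×10⁹ parameters stored in 16-bit precision (2 bytes each) and an even split across GPUs under tensor parallelism; the KV cache, activations, and framework overhead are not counted, and the function name is hypothetical.

```python
# Rough, weights-only memory estimate for a 7B-parameter model.
# Assumptions (not from the Seed-X docs): nominal 7e9 parameters,
# 2 bytes per parameter (bf16/fp16), weights split evenly across GPUs.
# KV cache, activations and framework overhead are NOT included.


def weight_memory_gib(num_params: float, bytes_per_param: int = 2, num_gpus: int = 1) -> float:
    """Return the approximate weight memory per GPU in GiB."""
    total_bytes = num_params * bytes_per_param
    return total_bytes / num_gpus / (1024 ** 3)


if __name__ == "__main__":
    for gpus in (1, 4, 8):
        per_gpu = weight_memory_gib(7e9, num_gpus=gpus)
        print(f"{gpus} GPU(s): ~{per_gpu:.1f} GiB of weights per GPU")
```

At roughly 13 GiB of weights, a single 80 GB GPU leaves most of its memory for vLLM's KV cache (bounded by `gpu_memory_utilization`), so additional GPUs mainly add throughput rather than being strictly required.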

Usage

Seed-X-7B provides two main models: Seed-X-Instruct-7B and Seed-X-PPO-7B. The following is an example of how to perform a translation task using Seed-X-PPO-7B.

Single-sentence translation (without chain-of-thought)

Use the vLLM library for fast inference to translate an English sentence into Chinese:

```python
from vllm import LLM, SamplingParams

# Local directory from the download step above; the Hugging Face repo id
# "ByteDance-Seed/Seed-X-PPO-7B" can also be passed directly.
model_path = "./ckpts"

# tensor_parallel_size=8 shards the model across 8 GPUs; use 1 on a single GPU.
model = LLM(model=model_path, max_num_seqs=512, tensor_parallel_size=8, enable_prefix_caching=True, gpu_memory_utilization=0.95)

messages = ["Translate the following English sentence into Chinese:\nMay the force be with you <zh>"]

# temperature=0 gives greedy decoding
decoding_params = SamplingParams(temperature=0, max_tokens=512, skip_special_tokens=True)

results = model.generate(messages, decoding_params)
responses = [res.outputs[0].text.strip() for res in results]

print(responses)  # Output: ['愿原力与你同在']
```

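Translation with chain-of-thought reasoning

For complex sentences, chain-of-thought (CoT) reasoning can be enabled to improve translation accuracy by asking the model to explain the translation in detail. The example below also uses beam search:

```python
from vllm.sampling_params import BeamSearchParams

# Reuses `model` from the previous example. In recent vLLM versions, beam search
# runs through LLM.beam_search (not LLM.generate) and takes prompt dicts.
messages = ["Translate the following English sentence into Chinese and explain it in detail:\nMay the force be with you <zh>"]

decoding_params = BeamSearchParams(beam_width=4, max_tokens=512)

results = model.beam_search([{"prompt": m} for m in messages], decoding_params)

# sequences[0] is the best beam; its decoded text may include the prompt.
responses = [res.sequences[0].text.strip() for res in results]

print(responses)  # Output: the translation together with its reasoning
```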
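The two prompts above share one template: an instruction naming the source and target languages, a newline, the source text, and a target-language tag; the CoT variant only adds "and explain it in detail" to the instruction. The hypothetical helper below rebuilds that template so prompts can be generated programmatically. Only the `<zh>` tag appears in this guide, so tags for other languages are an assumption to check against the official documentation.

```python
# Hypothetical helper that reproduces the prompt format used in the examples:
#   "Translate the following <Source> sentence into <Target>:\n<text> <tag>"
# The function name and parameters are illustrative, not part of Seed-X.


def build_prompt(text: str, src_lang: str, tgt_lang: str, tgt_tag: str, cot: bool = False) -> str:
    """Build a Seed-X-style translation prompt ending with a target-language tag."""
    instruction = f"Translate the following {src_lang} sentence into {tgt_lang}"
    if cot:
        # CoT variant: ask the model to explain its translation.
        instruction += " and explain it in detail"
    return f"{instruction}:\n{text} <{tgt_tag}>"


if __name__ == "__main__":
    print(build_prompt("Knowledge is power", "English", "Chinese", "zh"))
    print(build_prompt("Knowledge is power", "English", "Chinese", "zh", cot=True))
```

For example, `messages = [build_prompt(s, "English", "Chinese", "zh") for s in sentences]` produces a list that can be passed straight to `model.generate`.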

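Batch inference

To translate multiple sentences, pass them all in a single call; vLLM batches them automatically:

```python
from vllm import SamplingParams

# Reuses `model` from the first example, with greedy decoding as before.
decoding_params = SamplingParams(temperature=0, max_tokens=512, skip_special_tokens=True)

messages = [
    "Translate the following English sentence into Chinese:\nThe sun sets slowly behind the mountain <zh>",
    "Translate the following English sentence into Chinese:\nKnowledge is power <zh>",
]

results = model.generate(messages, decoding_params)  # outputs follow input order
responses = [res.outputs[0].text.strip() for res in results]

print(responses)  # Output: ['太阳慢慢地落在山后', '知识就是力量']
```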
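When a batch contains repeated strings (common with product titles or UI labels), translating each distinct string once saves GPU time. The sketch below deduplicates the inputs while preserving order, calls a translation function once on the unique strings, and maps the results back. `translate_with_dedup` and the `translate_batch` callable are hypothetical names; `translate_batch` stands in for a wrapper around `model.generate` like the one above.

```python
from typing import Callable, Dict, List


def translate_with_dedup(
    sentences: List[str],
    translate_batch: Callable[[List[str]], List[str]],
) -> List[str]:
    """Translate each distinct input once, then restore the original order.

    `translate_batch` is a hypothetical stand-in for something like
    lambda ps: [r.outputs[0].text.strip() for r in model.generate(ps, decoding_params)]
    """
    unique = list(dict.fromkeys(sentences))  # dedupe, keeping first-seen order
    translations = translate_batch(unique)
    if len(translations) != len(unique):
        raise ValueError("translate_batch must return one output per input")
    lookup: Dict[str, str] = dict(zip(unique, translations))
    return [lookup[s] for s in sentences]


if __name__ == "__main__":
    # Fake translator for illustration: upper-cases its inputs.
    fake = lambda batch: [s.upper() for s in batch]
    print(translate_with_dedup(["hello", "world", "hello"], fake))
```

This relies on the batch call returning outputs in input order, which `LLM.generate` does.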

Key Features in Practice

- Multi-language support

Seed-X-7B supports 28 languages, including high-resource languages such as English, Chinese, Spanish, and French as well as a number of low-resource languages. Specify the target language with a tag at the end of the input (e.g. `<zh>` for Chinese), and the model generates the translation in that language.

- Chain-of-thought (CoT) reasoning

For complex sentences, CoT lets the model analyze the semantics and context of the sentence step by step. For example, when translating literary or legal texts, the model breaks down the sentence structure before producing the final translation.

- Beam search decoding

Beam search (e.g. `beam_width=4`) tends to produce higher-quality translations and suits scenarios that require precise output. Compared with sampling-based decoding, beam search is more stable but somewhat more computationally expensive.

- Cross-domain translation

Seed-X-7B is designed for translation in fields such as the Internet, technology, e-commerce, biomedicine, and law. Users can input specialized terminology or long passages directly, and the model aims to keep terminology accurate and context coherent.
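Beam search keeps the `beam_width` highest-scoring partial outputs at each step, instead of committing to the single most likely next token as greedy decoding (`temperature=0`) does. The toy sketch below illustrates the difference on a hand-made next-token table; the table, token names, and functions are hypothetical and have nothing to do with Seed-X's actual vocabulary or scores.

```python
import math
from typing import Dict, List, Tuple

EOS = "</s>"

# Hand-made next-token probabilities keyed by the prefix generated so far.
# Hypothetical toy table, not Seed-X's vocabulary or scores.
TOY_MODEL: Dict[Tuple[str, ...], Dict[str, float]] = {
    (): {"A": 0.6, "B": 0.4},
    ("A",): {"x": 0.55, "y": 0.45},
    ("B",): {"z": 0.9, "w": 0.1},
}


def next_token_logprobs(prefix: Tuple[str, ...]) -> Dict[str, float]:
    """Log-probabilities of the next token; unknown prefixes can only end."""
    probs = TOY_MODEL.get(prefix, {EOS: 1.0})
    return {tok: math.log(p) for tok, p in probs.items()}


def beam_search(beam_width: int, max_len: int = 5) -> Tuple[Tuple[str, ...], float]:
    """Return the best (sequence, total log-prob) found with `beam_width` beams."""
    beams: List[Tuple[Tuple[str, ...], float]] = [((), 0.0)]
    for _ in range(max_len):
        candidates: List[Tuple[Tuple[str, ...], float]] = []
        for seq, score in beams:
            if seq and seq[-1] == EOS:
                candidates.append((seq, score))  # finished hypotheses carry over
                continue
            for tok, logp in next_token_logprobs(seq).items():
                candidates.append((seq + (tok,), score + logp))
        # Prune to the `beam_width` highest-scoring hypotheses.
        beams = sorted(candidates, key=lambda c: c[1], reverse=True)[:beam_width]
        if all(seq[-1] == EOS for seq, _ in beams):
            break
    return beams[0]


if __name__ == "__main__":
    print(beam_search(beam_width=1))  # greedy: commits to "A" first
    print(beam_search(beam_width=2))  # keeps "B" alive and finds a better sequence
```

With `beam_width=1` the search reduces to greedy decoding and returns A x (probability 0.6 × 0.55 = 0.33); with `beam_width=2` it keeps the initially less likely B and finds B z (0.4 × 0.9 = 0.36). Production implementations typically also normalize scores by length, which this toy omits.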

Notes

- GPU performance: Single-GPU inference may be slow; running on multiple GPUs in parallel is recommended for better throughput.

- Model selection: Prefer Seed-X-PPO-7B, which is further optimized with reinforcement learning and gives better translations than Seed-X-Instruct-7B.

- Error feedback: If you encounter translation errors, open an issue in the GitHub repository or contact the official email address, including sample inputs and outputs to help improve the model.

Application Scenarios

- Multinational e-commerce

Seed-X-7B can translate product descriptions, user reviews, and customer-service conversations to support expansion into multilingual markets, for example translating English product descriptions into Chinese or Spanish while keeping terminology accurate.

- Academic research

Researchers can use the model to translate academic papers, conference materials, or technical documents, for example translating English scientific papers into Chinese while preserving specialized terminology.

- Content creation

Content creators can translate articles, blogs, or social media posts for multilingual publishing, for example translating a Chinese blog into English to reach international readers.

- Legal and financial

The model can translate legal contracts or financial reports with consistent terminology, for example translating English contracts into Chinese while preserving the rigor of legal language.

FAQ

- What languages does Seed-X-7B support?

It supports 28 languages, including high-resource languages such as English, Chinese, Spanish, and French as well as some low-resource languages. See the official documentation for the full list.

- How do I choose between Seed-X-Instruct and Seed-X-PPO?

Seed-X-PPO is further optimized with reinforcement learning, translates better, and is recommended for production. Seed-X-Instruct is suitable for quick tests or lightweight tasks.

- Does the model support real-time translation?

The model is currently better suited to offline batch translation. Real-time use requires optimizing inference speed, and high-performance GPUs are recommended.

- How do I handle translation errors?

Submit an issue on GitHub with the source text and the translation, or contact the official email address with your feedback.